This guide will walk you through setting up a cluster in Hyper-V with Failover Cluster Manager and System Center Virtual Machine Manager (SCVMM)

You’ll ideally want 2+ nodes for this

This guide will also run you through using onboard local storage, e.g. Dell BOSS, for boot, and then iSCSI from a SAN for everything else

This has also been tested as part of a live deployment on real world hardware, but this guide will focus on specs for a virtual environment, the networking is the same either way

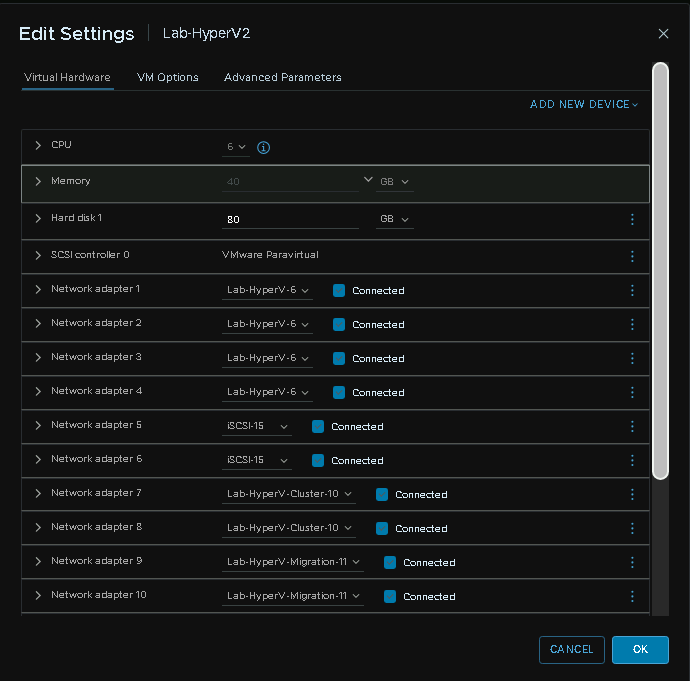

Virtual Host Specs – Minimum

4 vCPU

30GB RAM – This gives us enough to run some VMs and test

~80GB Drive for Windows, direct attached

10GB iSCSI drive for Quorum

A drive for VM data, Eg 500GB, iSCSI

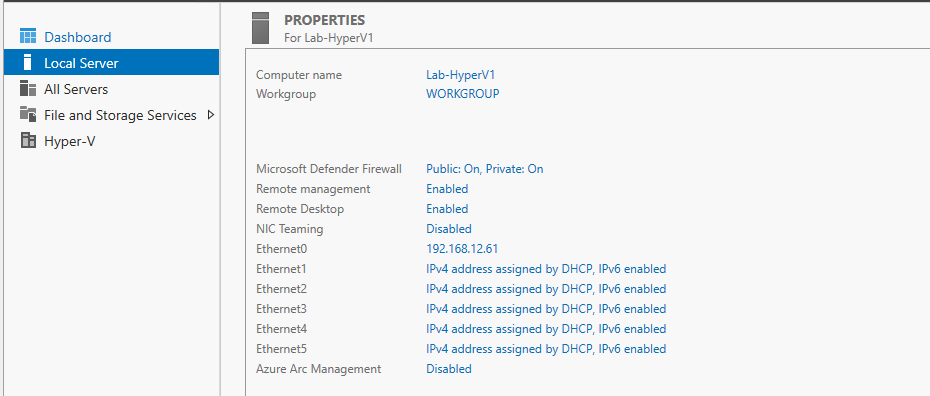

10 NICs across a total of 5 networks, each network should be redundant. In my lab deployment iSCSI is teamed, though you may prefer 2 separate NICs in two fault domains for iSCSI, the rest should be teamed NICs

- Management

- iSCSI

- Live Migration

- Cluster

- VM Traffic

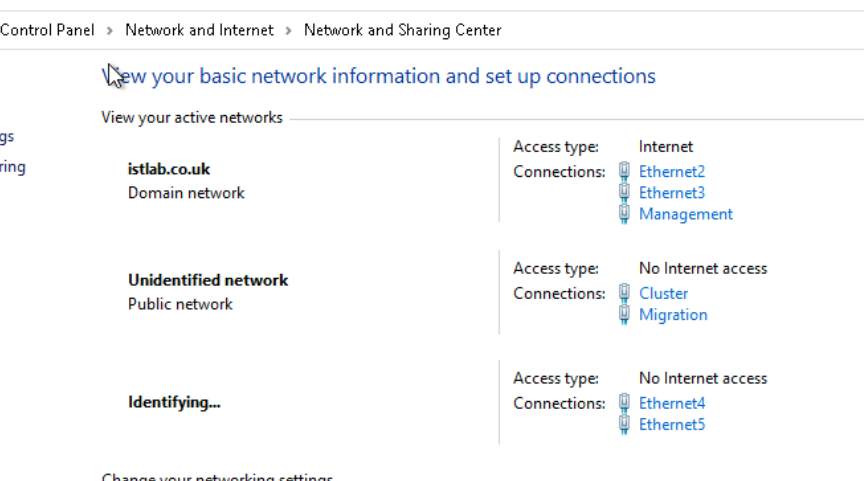

Here is an example host from my deployment, this has slightly beefier specs. Here management and VM traffic run off the same /24 network for the Hyper-V lab, in production the VM traffic may be on the same network but is also likely trunked at the switch level for VM VLAN tagging

But generally your Hyper-V host should really look like a switch for the networking

Important – By continuing you are agreeing to the disclaimer here

1 – Preparation

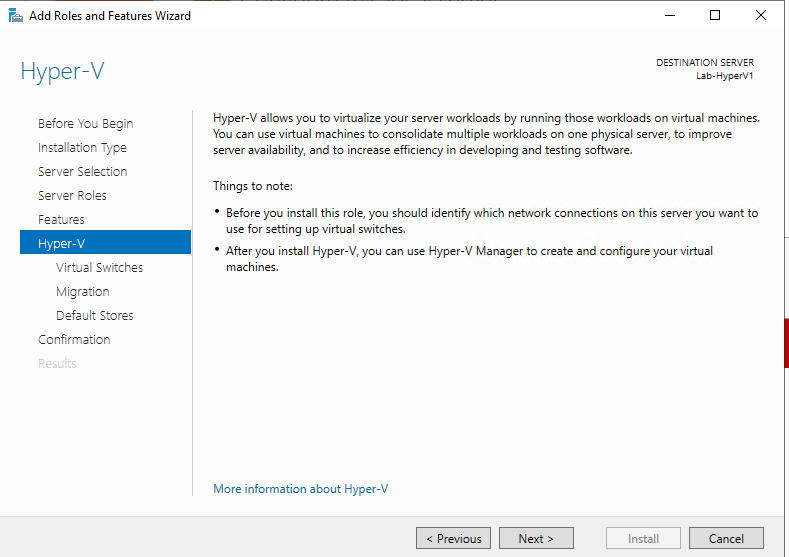

1.1 – Hyper-V Installation

First you’ll need a standard install of Windows Server

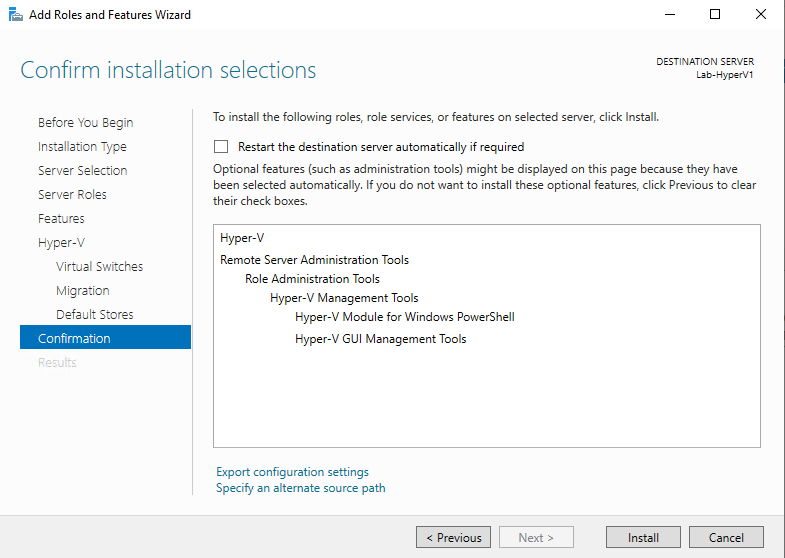

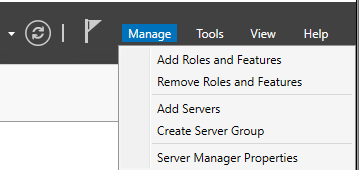

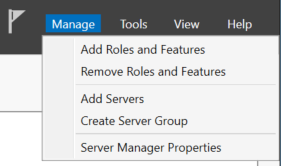

To install Hyper-V you need to add the role to your server, open Server Manager and in the top right click Manage, then Add Roles and Features

Click next here

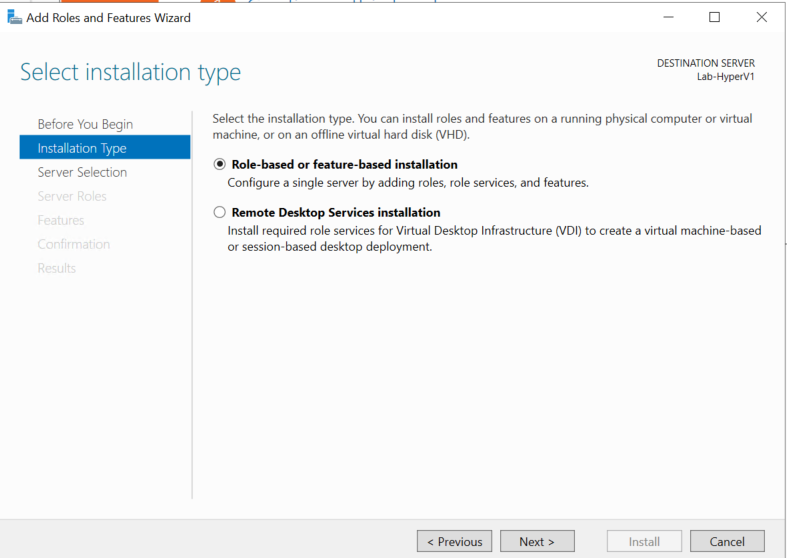

Keep the default role or feature based installation

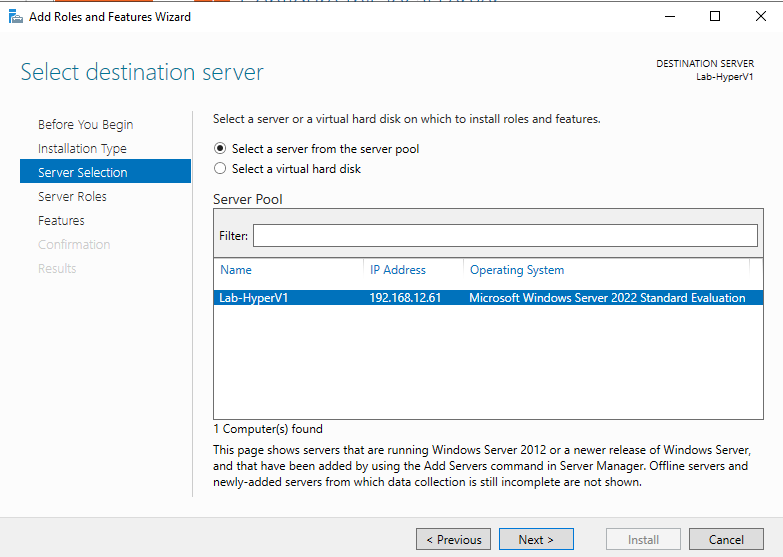

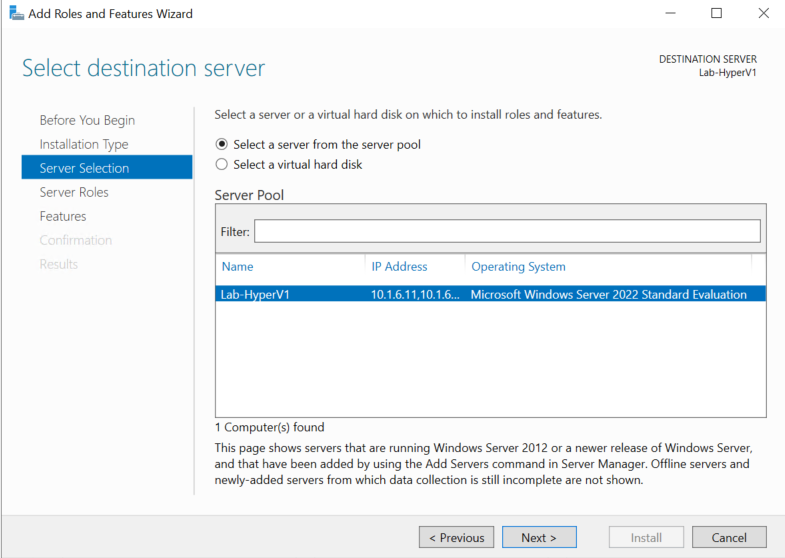

Select a server from the pool, if you have a pool, if not like I do, your server will already be selected

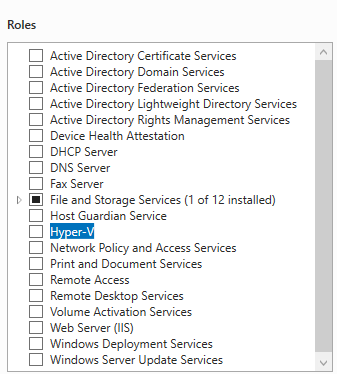

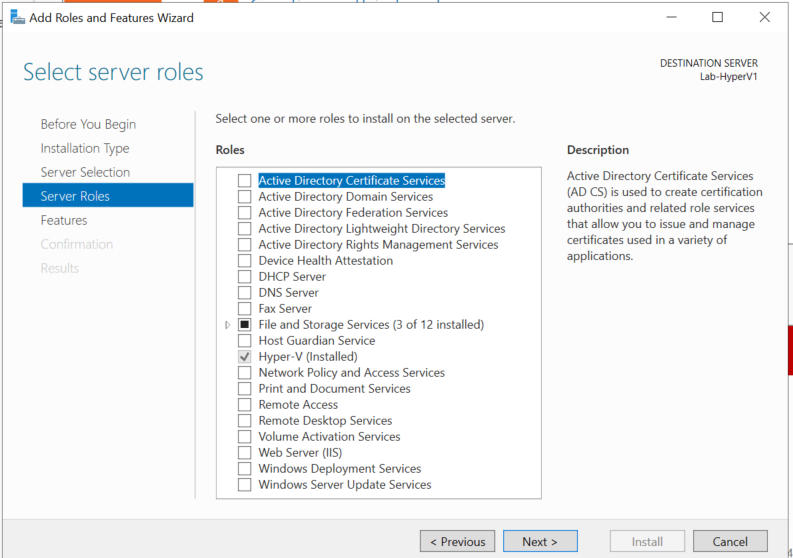

Check Hyper-V here

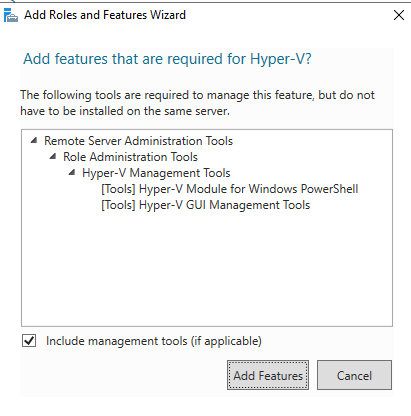

And then add the features

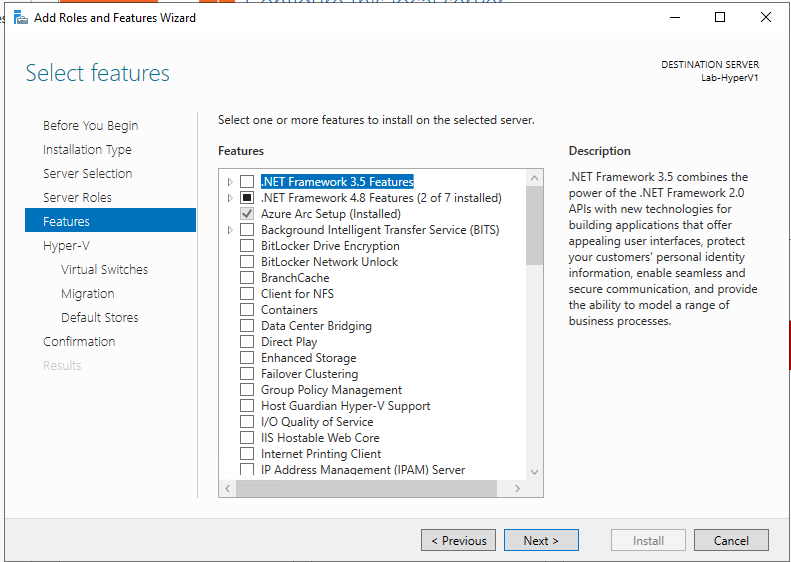

Click next here, we don’t need any extra features

Click next again

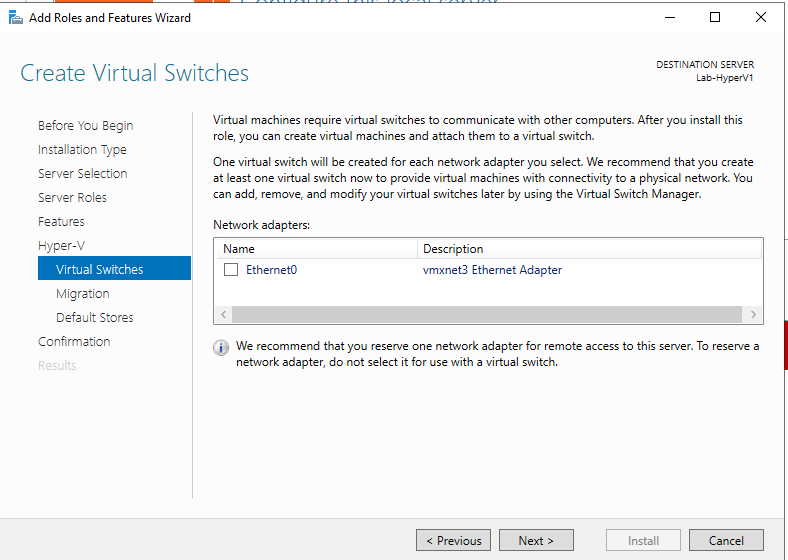

I am skipping over the networking, as I will be setting that up later, so just click next here

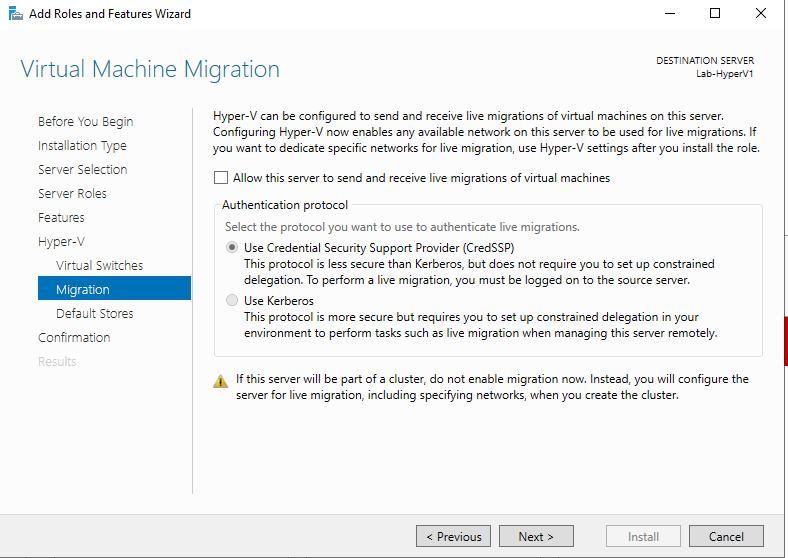

Leave this blank, even if you are clustering Hyper-V you want to set this up later

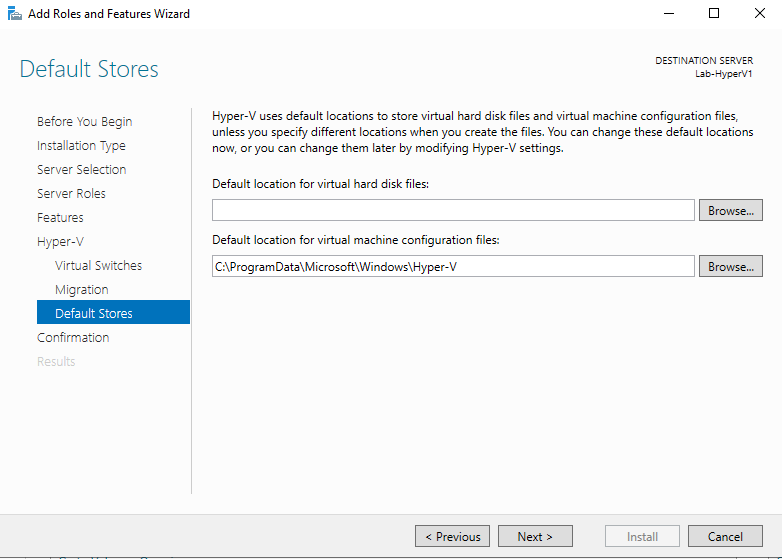

You can specify the default storage location here, I will be using an iSCSI disk from a SAN, so I am leaving this blank as that disk isn’t mapped yet

Then hit install

Then reboot your host and Hyper-V will be installed
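If you would rather skip the wizard, the same role can be added from an elevated PowerShell prompt. This is a minimal sketch using the built-in Install-WindowsFeature cmdlet, and it assumes you are happy for the host to reboot straight away

```powershell
# Add the Hyper-V role along with the management tools
# (Hyper-V Manager and the Hyper-V PowerShell module)
# -Restart reboots the host automatically once the role is installed
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart
```

As with the wizard, the virtual switch and default storage location are left alone here and get set up later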

1.2 – Enabling MPIO

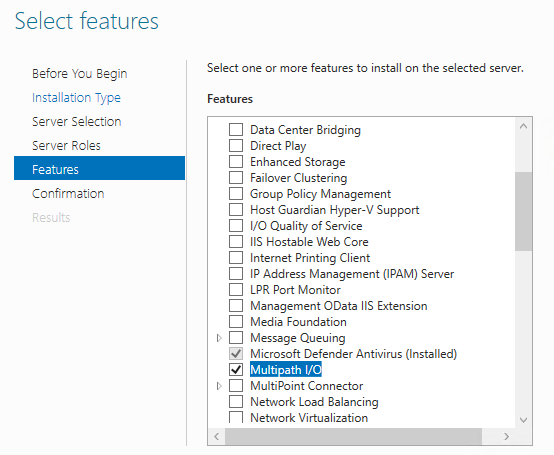

To use redundant paths to an iSCSI disk, a Windows Server needs the Multipath I/O (MPIO) feature added from Server Manager

Open server manager and add a role or feature

From there click next till you get to the features, add Multipath IO

Then next, next again and install
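The PowerShell equivalent is a one-liner, shown here as a rough sketch, the second command is only there to confirm the feature state afterwards

```powershell
# Add the Multipath I/O feature
Install-WindowsFeature -Name Multipath-IO

# Confirm it now shows as Installed, a reboot may be requested before MPIO is usable
Get-WindowsFeature -Name Multipath-IO
```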

1.3 – Mapping Disks

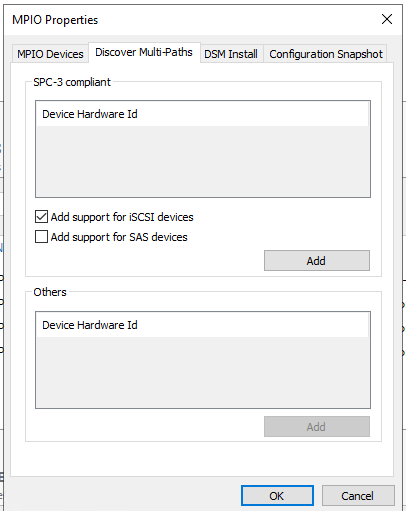

Now that MPIO has been added we need to map the disks to the server, open MPIO

Add support for iSCSI devices and click ok

Then open the iSCSI initiator settings

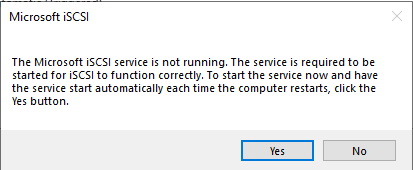

If the iSCSI service isn’t running, enable it

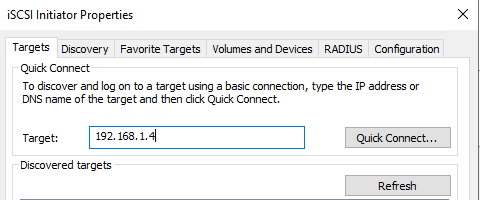

From here we can use dynamic discovery by adding the IP of our target, and using Quick Connect

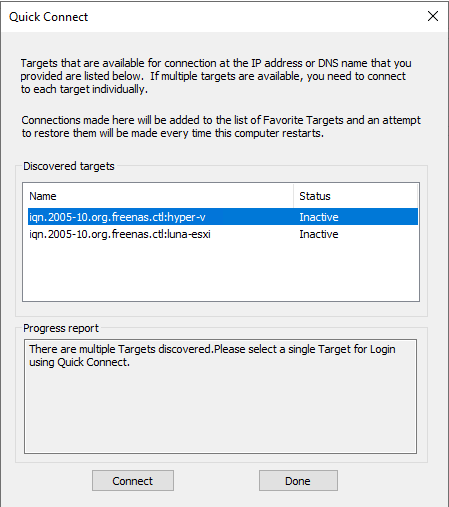

Once you click Quick Connect, we can add the targets, I am mapping the Hyper-V target

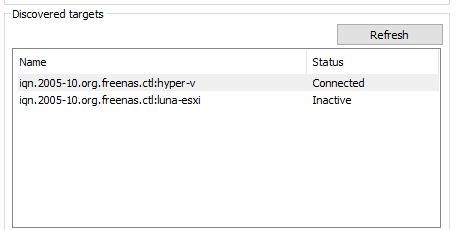

Now it will show as connected

Then from PowerShell, claim the disks for MPIO, note that -r reboots the host without prompting

mpclaim -r -i -a ""
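The iSCSI steps above can also be scripted. The sketch below uses the in-box iSCSI and MPIO cmdlets, the portal IP is a placeholder I have made up, and unlike the GUI steps it connects every target the portal offers rather than just one, so filter the targets if your SAN presents more than you want

```powershell
# Assumption: placeholder address, replace with your SAN's iSCSI portal IP
$portalIp = "10.1.7.10"

# Make sure the iSCSI initiator service is running and starts on boot
Set-Service -Name MSiSCSI -StartupType Automatic
Start-Service -Name MSiSCSI

# Equivalent of ticking "Add support for iSCSI devices" in the MPIO applet
Enable-MSDSMAutomaticClaim -BusType iSCSI

# Register the portal for discovery
New-IscsiTargetPortal -TargetPortalAddress $portalIp

# Connect any target that is not connected yet, persistently and with multipath enabled
Get-IscsiTarget |
    Where-Object { -not $_.IsConnected } |
    Connect-IscsiTarget -IsPersistent $true -IsMultipathEnabled $true

# Check the result
Get-IscsiTarget | Format-Table NodeAddress, IsConnected
```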

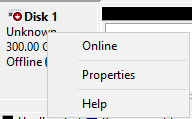

From disk manager you can then bring the disk online

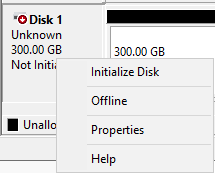

Initialise the disk

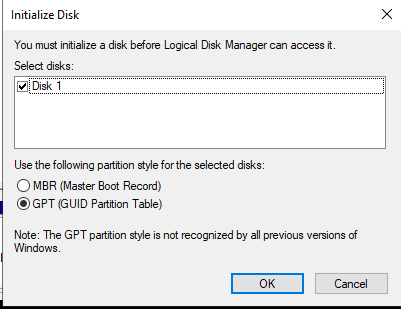

Setup a GPT partition

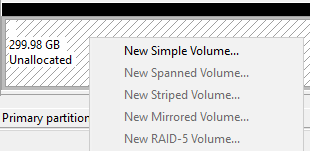

And create a new volume

You’ll need to create a new NTFS or ReFS partition to be able to use the disk as a CSV later on
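The disk manager steps can be done from PowerShell as well. This is a minimal sketch assuming the new iSCSI disk is disk number 1 and that you want NTFS, the disk number and the volume label are my own placeholders, so check Get-Disk before running anything that initialises a disk

```powershell
# Assumption: the iSCSI disk is disk 1, confirm with the listing below first
$diskNumber = 1
Get-Disk | Format-Table Number, FriendlyName, Size, OperationalStatus, PartitionStyle

# Bring the disk online and make it writable
Set-Disk -Number $diskNumber -IsOffline $false
Set-Disk -Number $diskNumber -IsReadOnly $false

# Initialise as GPT, then create and format one partition using the whole disk
Initialize-Disk -Number $diskNumber -PartitionStyle GPT
New-Partition -DiskNumber $diskNumber -UseMaximumSize -AssignDriveLetter |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel "VM Data"
```

Swap NTFS for ReFS in the last line if you prefer, this only needs doing from one node as the disk is shared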

1.4 – Enabling Jumbo Frames

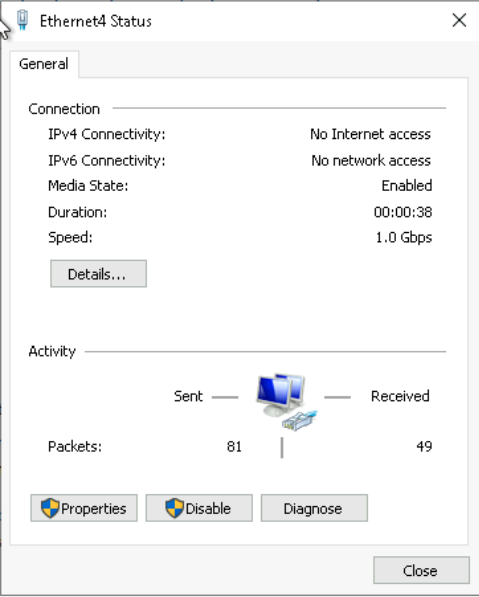

To do this, edit the interface from the Network and Sharing Centre. I will be editing my iSCSI NICs, Ethernet4 and Ethernet5, before creating the team

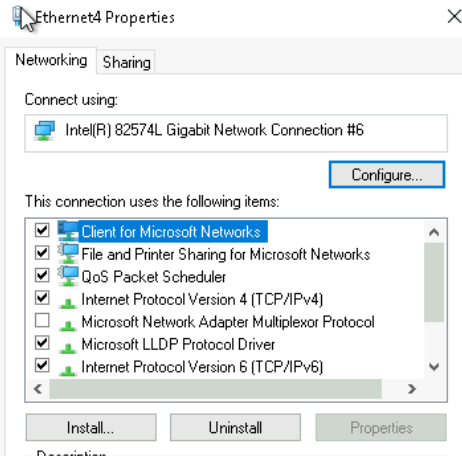

Then properties

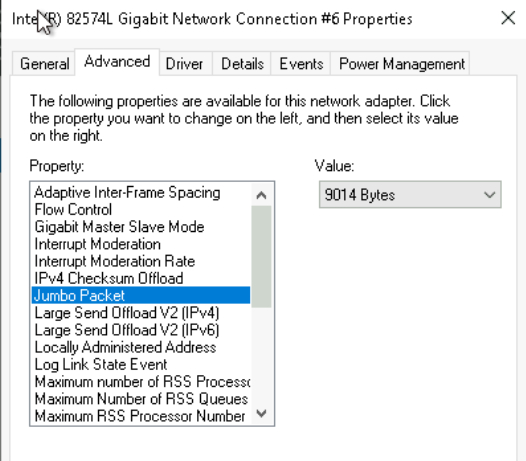

Click configure

Then under advanced you can enable jumbo frames

Then create your team, as this inherits the MTU of the adapters

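To test this, ping the iSCSI IP with the don’t fragment flag set and a large payload using this command

ping <iSCSI-IP> -f -l <payload-size>

The -l value is the ICMP payload size rather than the MTU itself, so for an iSCSI network, which should have an MTU of 9000, a good test value is 8800, and the largest that will fit is 8972 once the 28 bytes of IP and ICMP headers are counted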
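Jumbo frames can also be set from PowerShell. The sketch below assumes Ethernet4 and Ethernet5 are the iSCSI NICs, that the driver exposes the standard *JumboPacket keyword, and that 9014 is the value it uses for a 9000 byte MTU. Those values are driver specific, so treat them as assumptions and check what the first command reports before setting anything. The target IP is a made up placeholder

```powershell
# Assumption: these are the iSCSI NICs, and the IP is a placeholder for your SAN
$iscsiNics     = "Ethernet4", "Ethernet5"
$iscsiTargetIp = "10.1.7.10"

# Show the current setting and the values the driver accepts
Get-NetAdapterAdvancedProperty -Name $iscsiNics -RegistryKeyword "*JumboPacket" |
    Format-Table Name, DisplayName, DisplayValue, ValidRegistryValues

# Assumption: 9014 is what many drivers use for a 9000 byte MTU
Set-NetAdapterAdvancedProperty -Name $iscsiNics -RegistryKeyword "*JumboPacket" -RegistryValue 9014

# -f sets don't fragment, -l is the ICMP payload size in bytes
# 8972 = 9000 byte MTU - 20 byte IP header - 8 byte ICMP header
ping.exe $iscsiTargetIp -f -l 8972
```

If the ping reports that the packet needs to be fragmented, something in the path, the NIC, the switch or the SAN, is still on a smaller MTU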

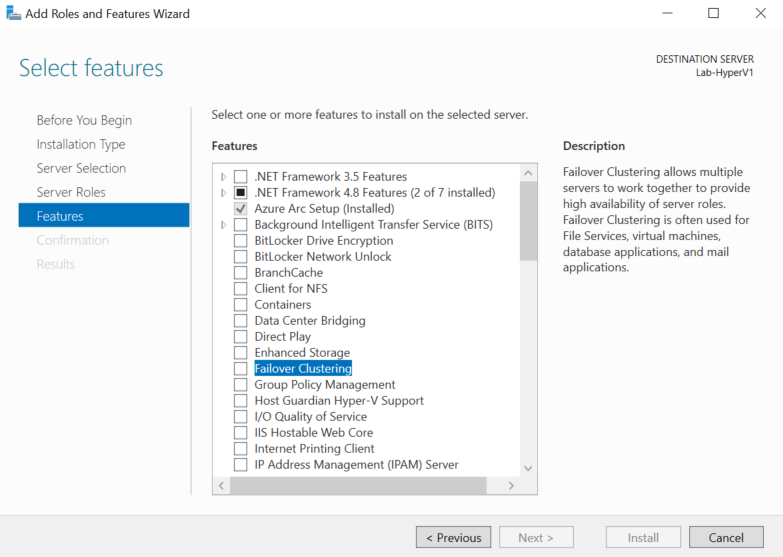

1.5 – Failover Clustering Installation

To install Failover Cluster Manager you need to add the feature to your server

Open server manager and add a role or feature in the top right

Click next

Next again, making sure your server is selected

Next over the roles

Check failover clustering

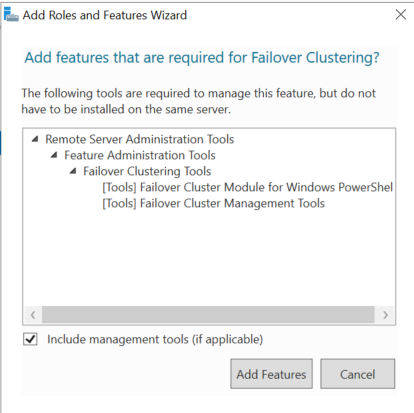

Click add features

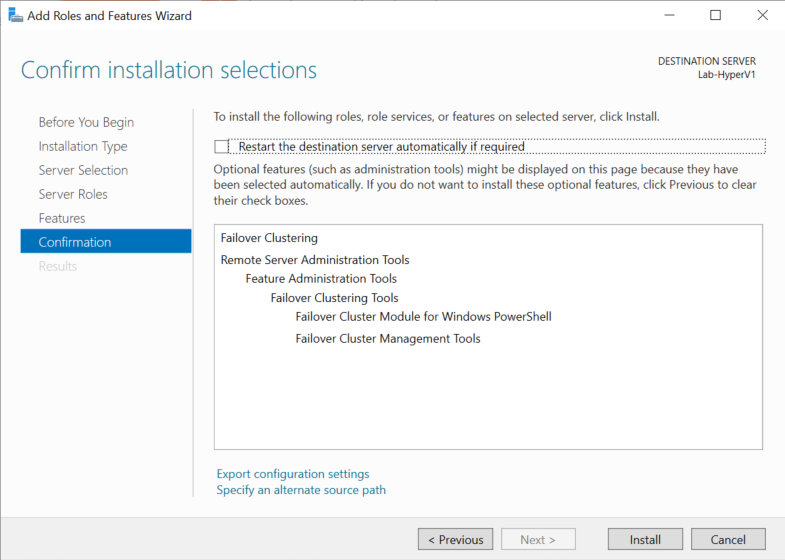

Then install
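For reference, the same feature can be added from PowerShell, a minimal sketch to run on every node that will join the cluster

```powershell
# Add Failover Clustering plus Failover Cluster Manager and the cluster PowerShell module
Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools
```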

1.6 – Setting Up NIC Teaming

For redundancy you will want to configure NIC teaming to use 2 physical ports for one connection

I am going to setup NIC teaming for my Windows Server management

From the local server in Server manager, enable NIC teaming

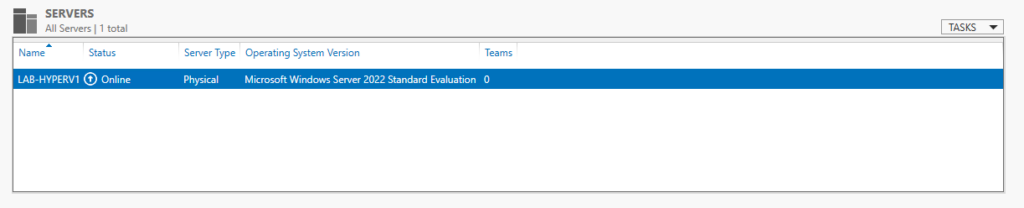

Ensure your server is selected at the top

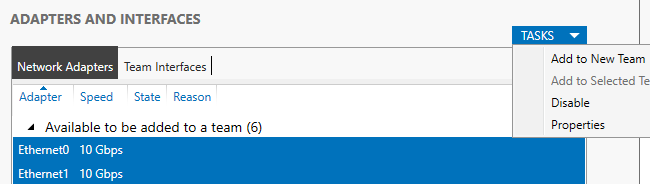

Then, from Tasks, let’s create a new team for management and select our NICs

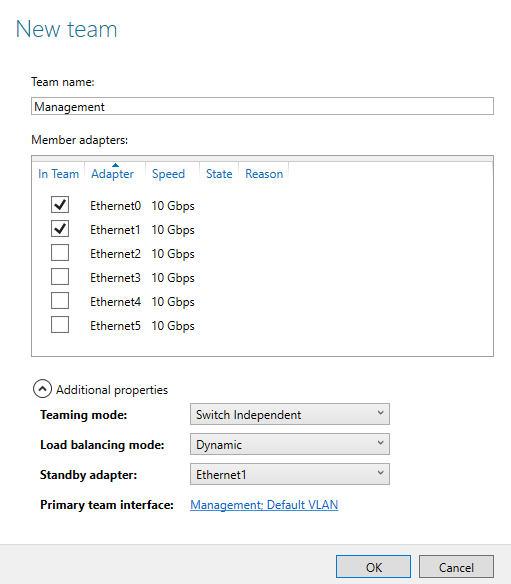

Let’s name the team and ensure the right NICs are being used. I want active passive, so Ethernet1 is set up as standby, and I am leaving the VLAN as the default, as the switch these hosts connect to has the VLAN sorted

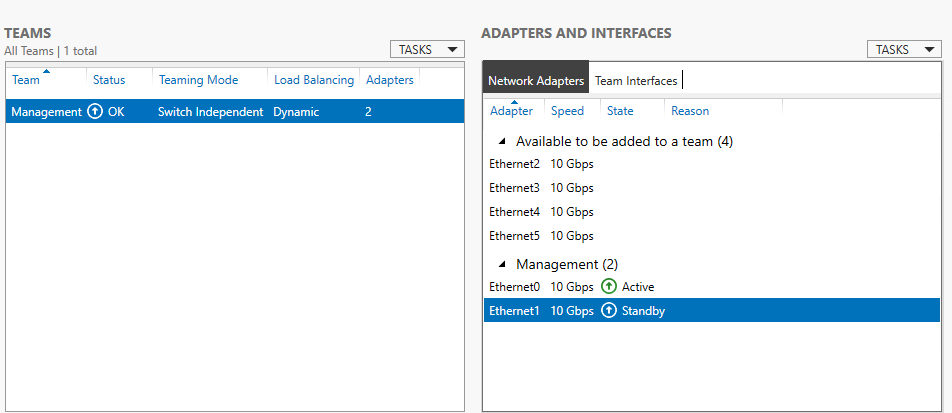

Once it’s done it should look like this

If you had management configured on Ethernet0, like I do, this will wipe it and you will need to configure the networking for the new team interface, Management, via control panel
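The same team can be built with the LBFO cmdlets. This is a rough sketch assuming Ethernet0 and Ethernet1 are the management NICs and the team is called Management, the IP, gateway and DNS addresses are placeholders I have picked from my 10.1.6.0/24 range, so swap in your own

```powershell
# Create a switch independent team from the two management NICs
New-NetLbfoTeam -Name "Management" -TeamMembers "Ethernet0", "Ethernet1" `
    -TeamingMode SwitchIndependent -Confirm:$false

# Put the second NIC into standby for an active passive team
Set-NetLbfoTeamMember -Name "Ethernet1" -AdministrativeMode Standby

# Re-apply the management IP to the new team interface
# Assumption: placeholder addresses, the team interface takes the team's name by default
New-NetIPAddress -InterfaceAlias "Management" -IPAddress 10.1.6.21 -PrefixLength 24 -DefaultGateway 10.1.6.1
Set-DnsClientServerAddress -InterfaceAlias "Management" -ServerAddresses 10.1.6.10

# Check the team and member state
Get-NetLbfoTeam -Name "Management"
Get-NetLbfoTeamMember -Team "Management"
```

Run this from the console rather than over RDP on the management NIC, as the connection will drop when the team is created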

1.7 – Setting Up A Teamed vSwitch

You’ll need two adapters for this that are not in any team. As Hyper-V has deprecated LBFO, this needs to be done via PowerShell using Switch Embedded Teaming (SET), which gives a redundant setup where both NICs can carry traffic with the throughput of 1 NIC

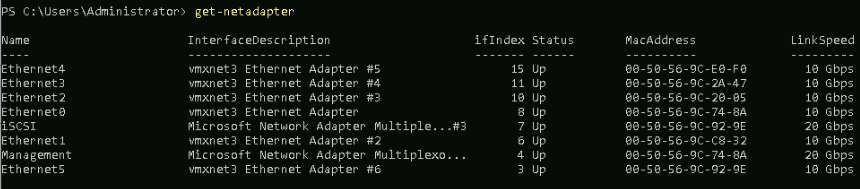

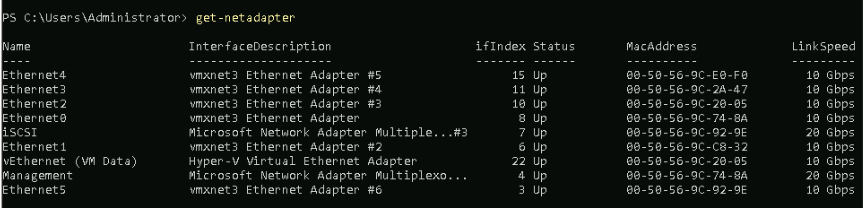

To see your NICs with their names for the command to create a vSwitch use

Get-NetAdapter

First, disable VM queues on the NICs you want to use in the team with

Disable-NetAdapterVmq -Name "NICx"

Disable-NetAdapterVmq -Name "NICy"

For me, I am using Ethernet2 and Ethernet3 so my commands are

Disable-NetAdapterVmq -Name "Ethernet2"

Disable-NetAdapterVmq -Name "Ethernet3"

To create a new vSwitch use the following, substituting NICx and NICy for the NICs you want to use, you can add NICz for example if you want more

New-VMSwitch -Name "<vSwitch Name>" -NetAdapterName "NICx","NICy" -EnableEmbeddedTeaming $true -AllowManagementOS $false

I am using Ethernet2 and Ethernet3 so my command is

New-VMSwitch -Name "VM Data" -NetAdapterName "Ethernet2","Ethernet3" -EnableEmbeddedTeaming $true -AllowManagementOS $false

If you check the adapters now, you will see the new adapter

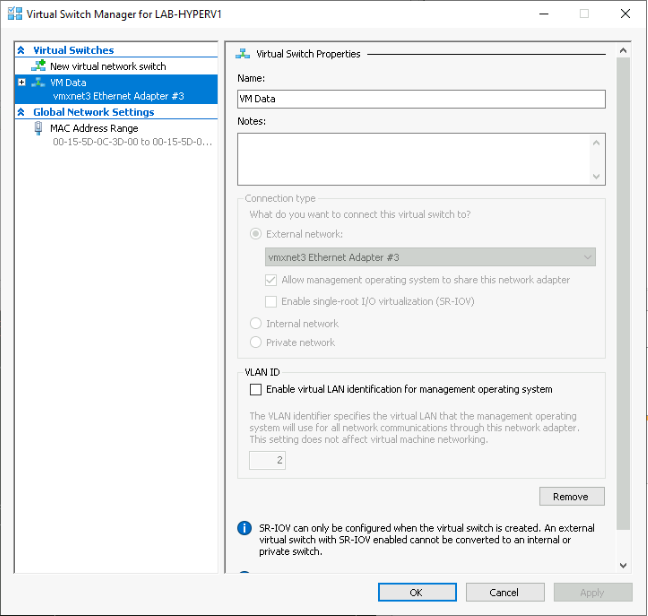

You can see the vSwitch in Hyper-V switch manager, but you can’t manage it there, it is also using both NICs despite not showing it

To test the redundancy, I created the switch with -AllowManagementOS $true so the new adapter picked up a DHCP IP, which you can view with ipconfig. Pings to that IP carry on with no drops if you disable the 2 adapters one at a time. With -AllowManagementOS $false the adapter won’t have an IP, so this test won’t work

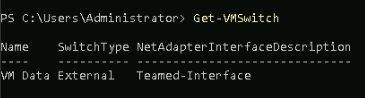

You can also view the switch with

Get-VMSwitch
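As the GUI does not show the team members, a quick way to confirm both NICs really are in the SET team is Get-VMSwitchTeam. This sketch assumes the switch is called VM Data as in my command above

```powershell
# List the SET team behind the vSwitch, including its member adapters,
# teaming mode and load balancing algorithm
Get-VMSwitchTeam -Name "VM Data" | Format-List

# Confirm VMQ is disabled on the two member NICs
Get-NetAdapterVmq -Name "Ethernet2", "Ethernet3"
```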

1.8 – Setup WAC (Optional)

Firstly, download the latest Windows Admin Center (WAC) from Microsoft

And join your WAC server to the domain

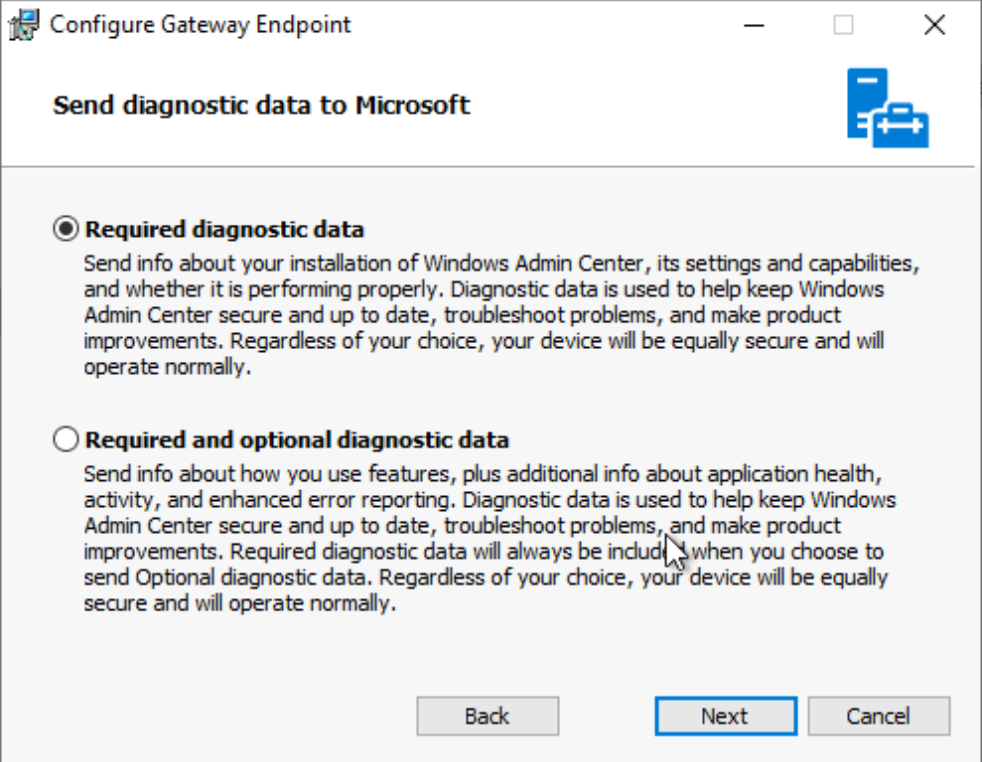

Run the executable, and select the diagnostic data

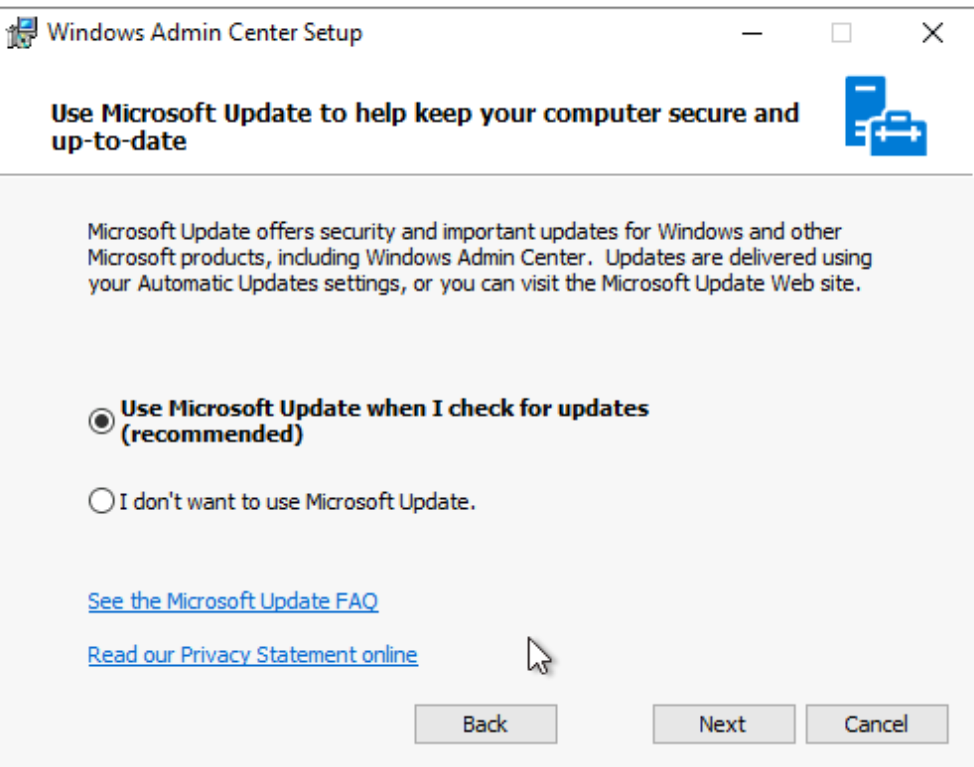

Enable Microsoft updates

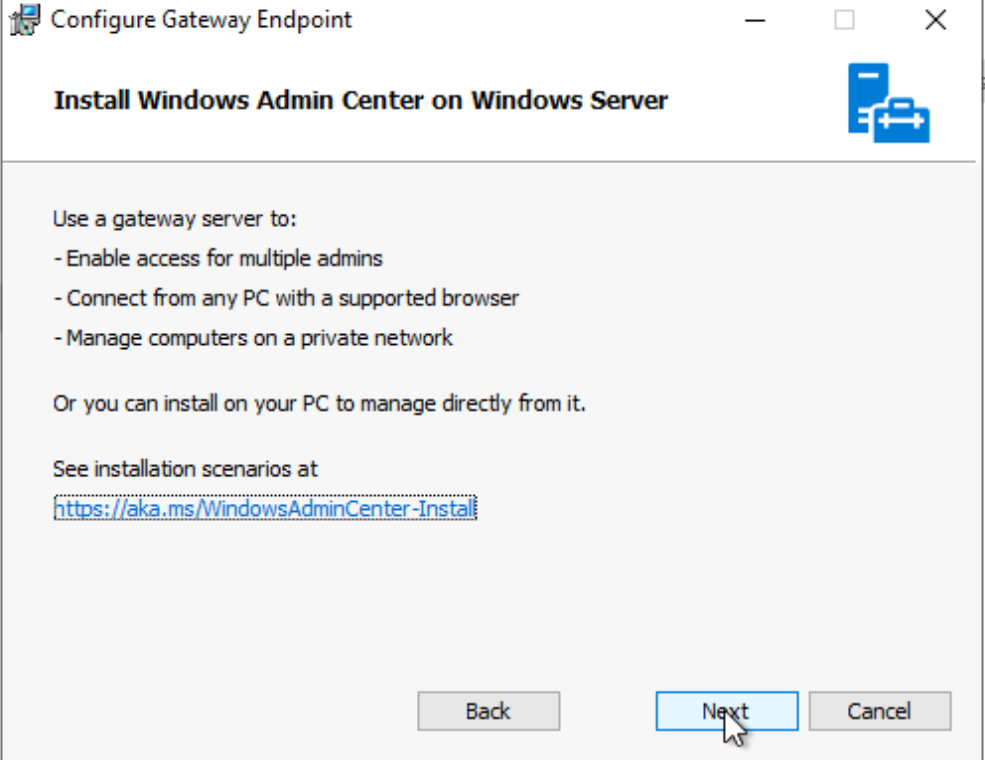

Click next here

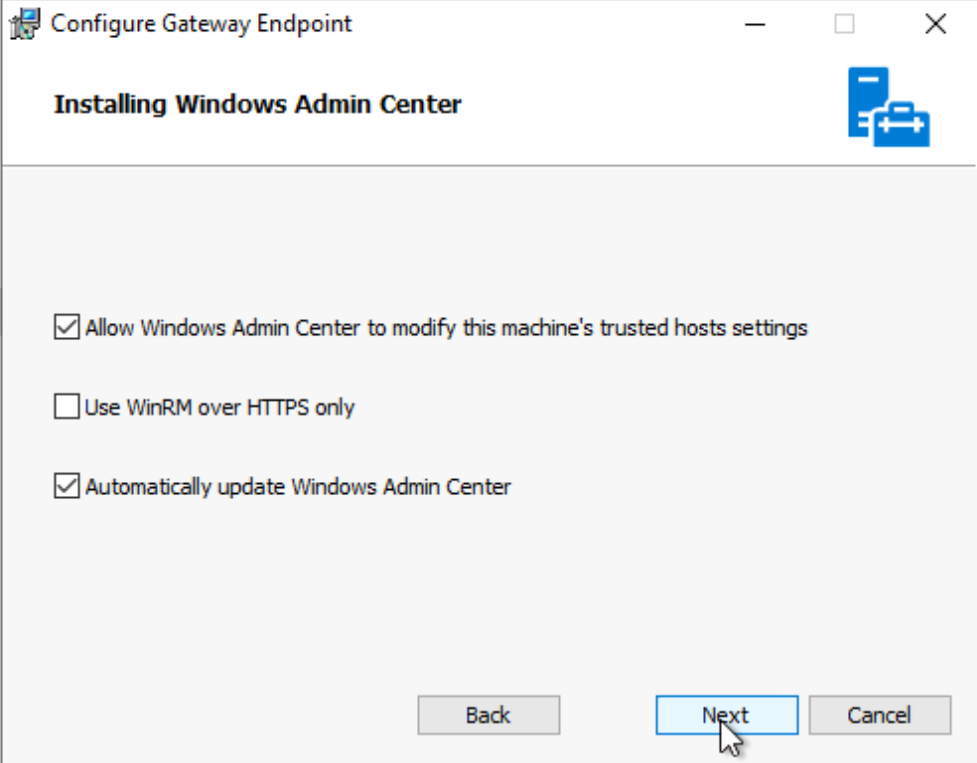

Leave the defaults here, and click next

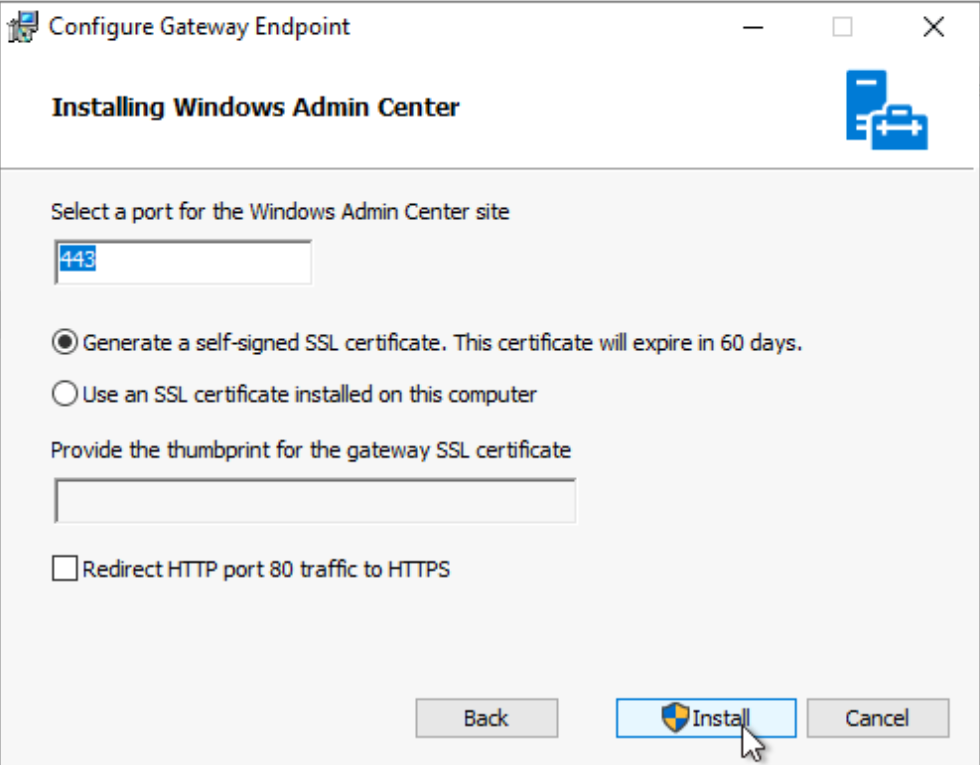

Leave the default port as 443 and generate a self signed certificate unless you want a specific certificate

When you log in, it will update all extensions for you

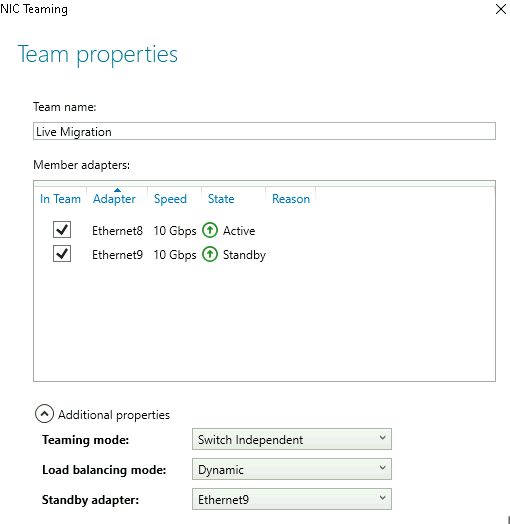

You will also want to add teams and configure the IPs for the iSCSI, Live Migration and Cluster networks, the same way as for management. Live Migration and Cluster should be separate non routable networks, and for Live Migration I would recommend using Active/Standby

2 – Failover Cluster Manager

2.1 – Creating The Cluster

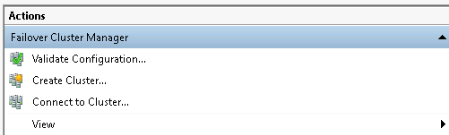

Open the failover cluster manager app

Click create cluster on the right

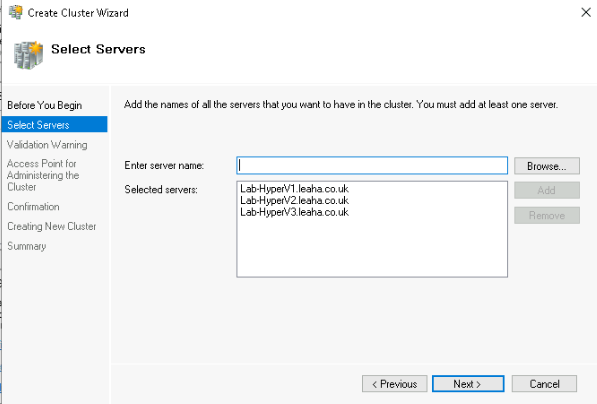

Add the servers you want to use to a cluster

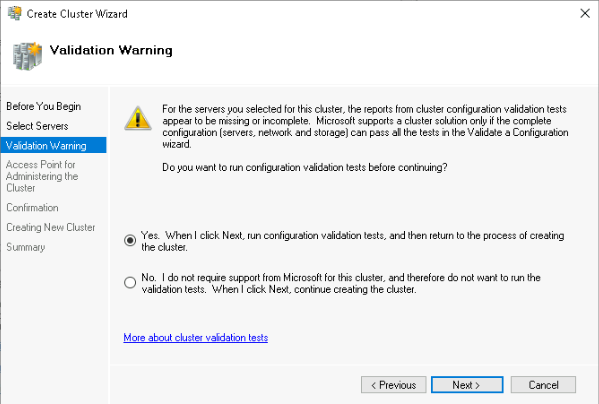

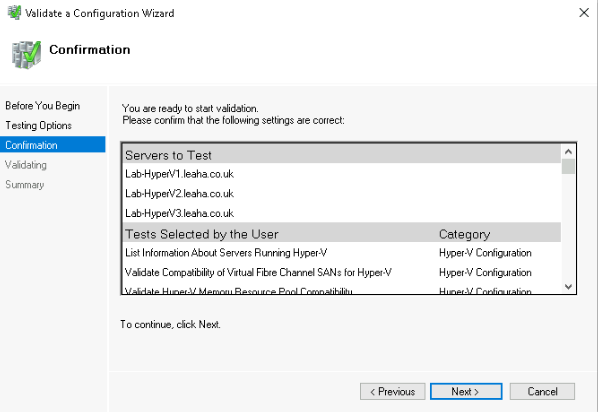

Keep it on yes to validate the cluster, this will tell you if there are any glaring issues

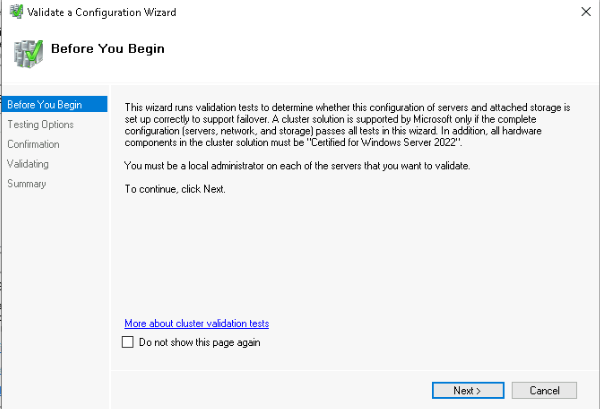

Click next

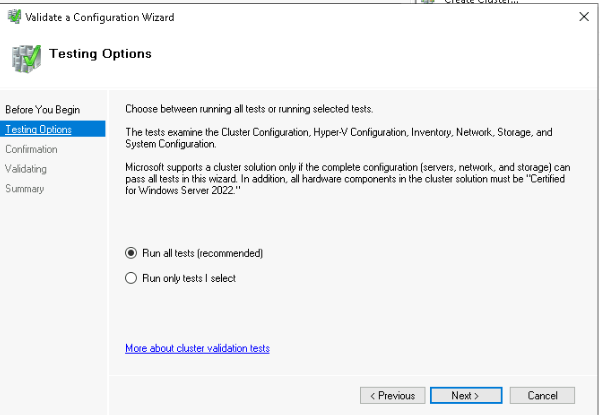

Next again to run all tests

And next one last time to start it

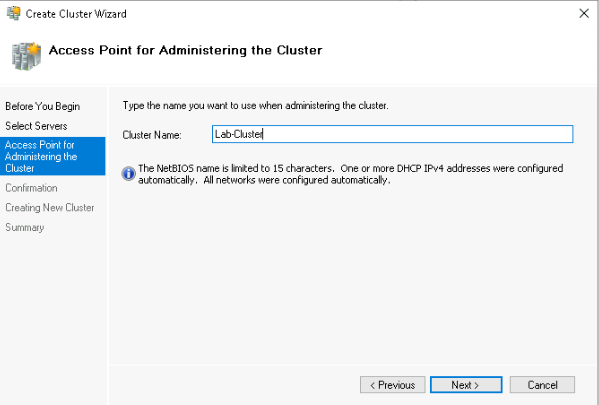

Double check any issues it reports, and if they are unlikely to impact the cluster, name your cluster

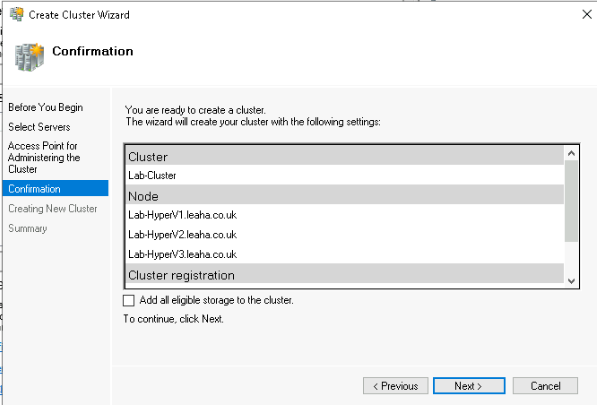

I want to configure the storage manually later, so I have unchecked the box, once you are happy click next

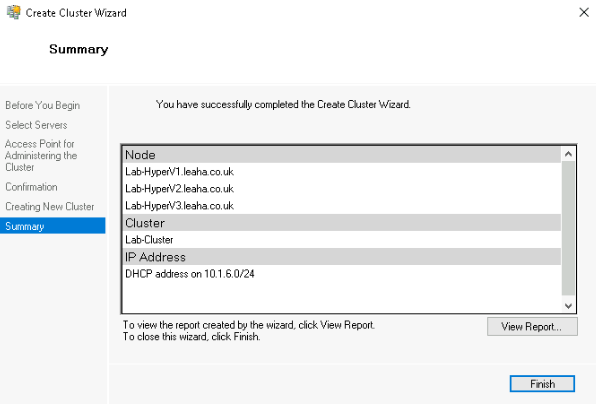

Once its done it will give you an overview
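Validation and cluster creation can be scripted too. This is a minimal sketch using the FailoverClusters module, the node names are assumptions based on my lab naming, and -NoStorage mirrors unchecking the storage box in the wizard

```powershell
# Assumption: hypothetical node names, replace with your Hyper-V hosts
$nodes = "HyperV1", "HyperV2", "HyperV3"

# Run the full validation and write the report, review it before carrying on
Test-Cluster -Node $nodes

# Create the cluster without claiming any disks, storage is added manually later
# With no -StaticAddress the cluster IP comes from DHCP where available
New-Cluster -Name "Lab-Cluster" -Node $nodes -NoStorage

# Confirm all nodes are up
Get-ClusterNode -Cluster "Lab-Cluster"
```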

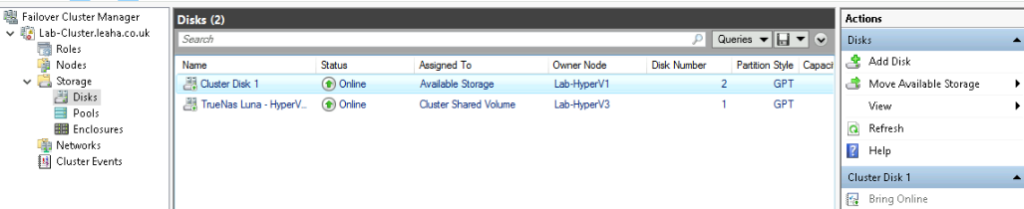

2.2 – Deploying The CSV

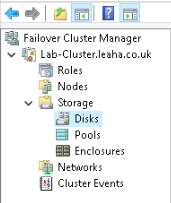

Once you have nodes added to Failover Cluster Manager and a cluster set up, you can deploy Cluster Shared Volumes (CSVs) from iSCSI storage

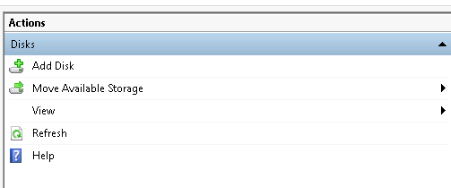

And add a disk on the right

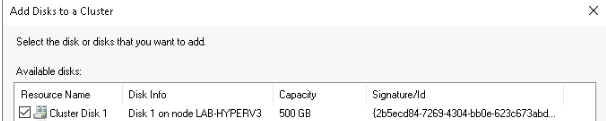

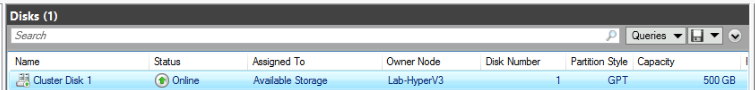

I have 1 500GB drive I plan to use for VMs

What you’ll notice is that only the one disk shows, despite it being shared via iSCSI to all 3 nodes. Only 1 node controls the disk at a time, so the 500GB drive only appears once

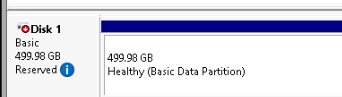

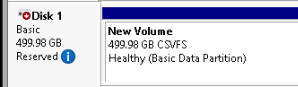

If you were to open disk manager, you’ll notice disk 1 is reserved and offline and cannot be brought online, this is normal

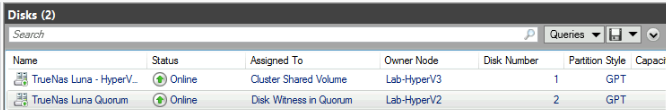

Now the cluster disk is available with HyperV3 as the owner node

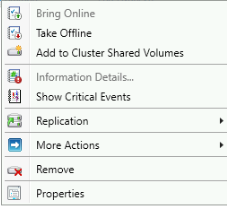

If we right click it, we can add it to a Cluster Shared Volume

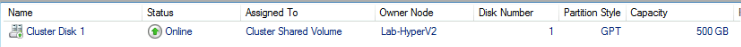

Now you can see it’s assigned to Cluster Shared Volume, and the owner node has changed, this will happen from time to time

The disk now registers in disk manager as a CSVFS disk, rather than something more traditional like NTFS. This is because a file system that is shared volume aware is needed, much like VMware’s VMFS

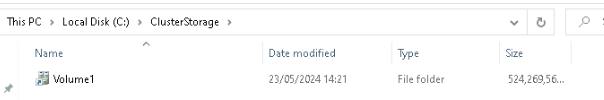

The disks don’t get mounted onto This PC like normal drives, they are mounted under C:\ClusterStorage

If we right click the volume and go to properties we can rename the volume

This is off my TrueNAS server on a pool called Luna, so I have named it accordingly, this can be whatever you want though
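For reference, the same storage steps look roughly like this in PowerShell. This is a sketch assuming the disk lands with the default resource name Cluster Disk 1. Note that piping Get-ClusterAvailableDisk straight into Add-ClusterDisk adds every eligible disk, including a quorum disk if it is already visible, which is fine as long as you only convert the VM data disk to a CSV

```powershell
# See which shared disks the cluster could take
Get-ClusterAvailableDisk

# Add them to the cluster as available storage
Get-ClusterAvailableDisk | Add-ClusterDisk

# List the resulting disk resources and their owner nodes
Get-ClusterResource | Format-Table Name, ResourceType, OwnerNode, State

# Assumption: the VM data disk got the default name "Cluster Disk 1"
Add-ClusterSharedVolume -Name "Cluster Disk 1"

# The volume now appears under C:\ClusterStorage on every node
Get-ClusterSharedVolume
```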

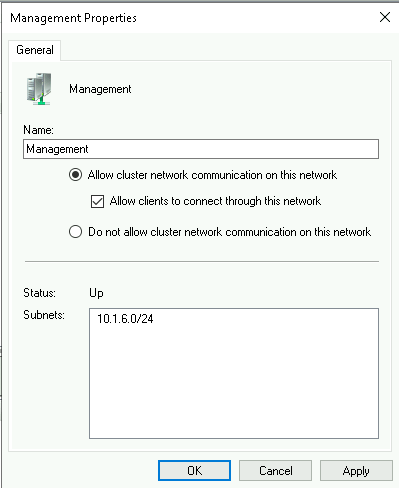

2.3 – Cluster Networking

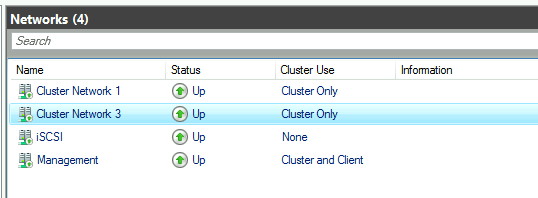

Under networks in Failover Cluster Manager you’ll see any networks with IP addresses the cluster can use

Its worth noting the SET Team vSwitch we created earlier will NOT show up here as it doesnt have an IP and is for clients only, so thats normal

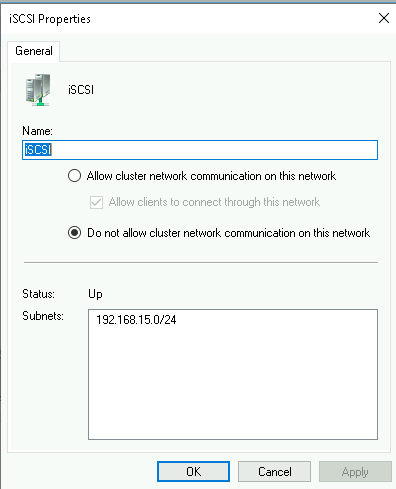

Cluster networks will often be for you iSCSI, Management, Cluster Heartbeat and Live Migration Networks, I have renamed a couple, but you should have 4, one network for everything except the vSwitch

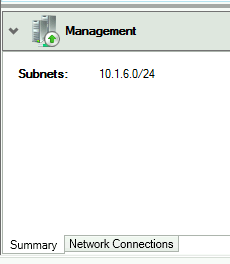

You can see at the bottom if you click a network which it is

Eg

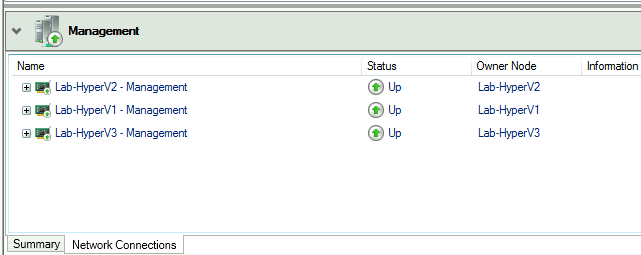

You can also verify by the adapters present in the network

So this is my management network, to name it right click it and go to properties

Here you can also allow cluster network communication on this network, I’d leave this enabled as a backup for the Cluster Heartbeat network

iSCSI, this just needs naming, and everything else disabling

Live Migration, this just needs cluster communication

Lastly, cluster, this should also only be cluster communication
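The naming and roles above can be set in one go from PowerShell. In this sketch the default names on the left are assumptions, the cluster numbers networks in whatever order it finds them, so match each one to its subnet with the first command before renaming anything. Role 0 means no cluster use, 1 is cluster communication only, and 3 is cluster and client

```powershell
# Work out which default network name maps to which subnet
Get-ClusterNetwork | Format-Table Name, Address, AddressMask, Role

# Assumption: example mapping only, adjust to what the table above shows
(Get-ClusterNetwork -Name "Cluster Network 1").Name = "Management"
(Get-ClusterNetwork -Name "Cluster Network 2").Name = "iSCSI"
(Get-ClusterNetwork -Name "Cluster Network 3").Name = "Live Migration"
(Get-ClusterNetwork -Name "Cluster Network 4").Name = "Cluster"

# Set the role of each network
(Get-ClusterNetwork -Name "Management").Role     = 3   # cluster and client
(Get-ClusterNetwork -Name "iSCSI").Role          = 0   # not used by the cluster
(Get-ClusterNetwork -Name "Live Migration").Role = 1   # cluster only
(Get-ClusterNetwork -Name "Cluster").Role        = 1   # cluster only
```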

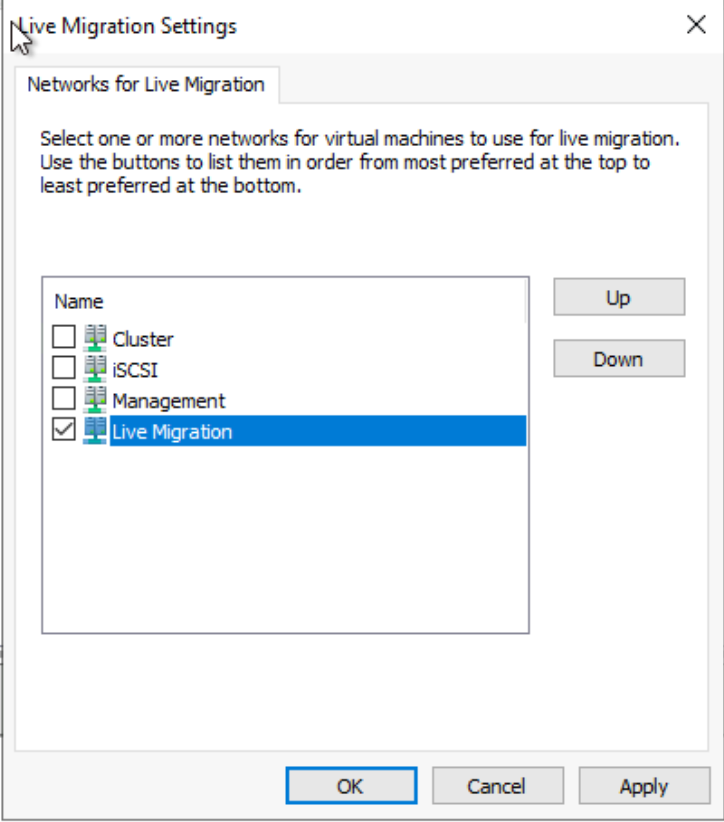

2.4 – Enabling Live Migration

For this, you’ll want a redundant NIC team on a separate isolated network that’s available in the network list in Failover Cluster Manager

Under the networks section on the left

Click Live Migration Settings on the right

And check the network you have for live migration, I have renamed the networks from the default names to match their use

Then click ok
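There is no dedicated cmdlet for the Live Migration Settings dialog that I know of, but the same result can be had by excluding every other network on the Virtual Machine resource type. Treat this as a sketch, it assumes the network has been renamed to Live Migration and relies on the MigrationExcludeNetworks cluster parameter, so check the dialog afterwards to confirm it did what you expect

```powershell
# Assumption: the live migration network has been renamed to "Live Migration"
$lmNetworkName = "Live Migration"

# Collect the IDs of every cluster network except the live migration one
$excludeIds = (Get-ClusterNetwork | Where-Object { $_.Name -ne $lmNetworkName }).Id

# Exclude those networks from live migration, IDs are joined with semicolons
Get-ClusterResourceType -Name "Virtual Machine" |
    Set-ClusterParameter -Name MigrationExcludeNetworks -Value ($excludeIds -join ";")

# Review the resulting parameter
Get-ClusterResourceType -Name "Virtual Machine" |
    Get-ClusterParameter -Name MigrationExcludeNetworks
```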

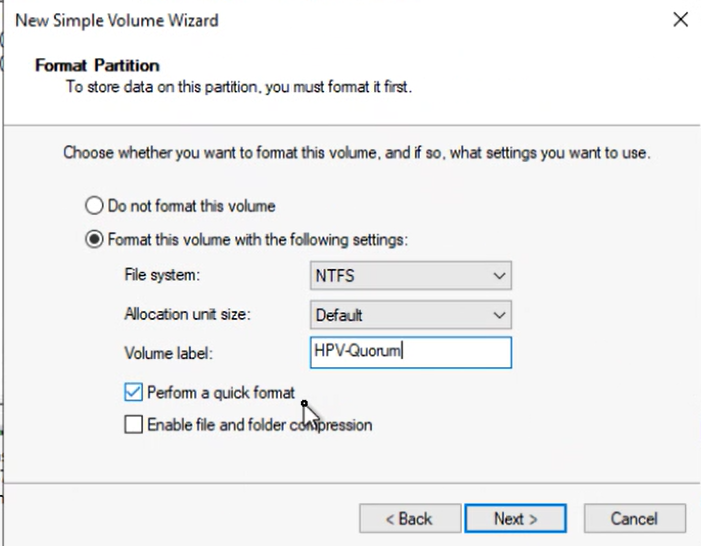

2.5 – Setting Up The Quorum Drive

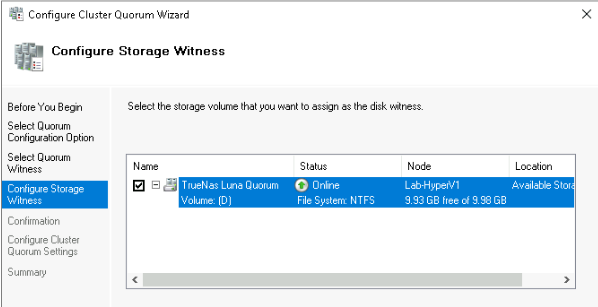

First online and initialize the Quorum iSCSI disk with a GPT partition from disk manager

Then add the disk to storage, but do NOT add to cluster shared Volumes, so just click add disk and select the Quorum disk

You can then also rename the drive, I have named mine Quorum

Format it onto a letter, such as D, like so

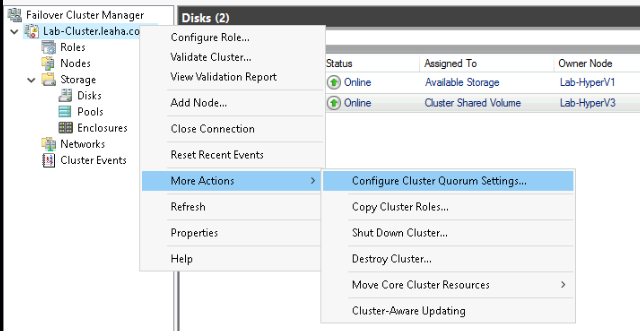

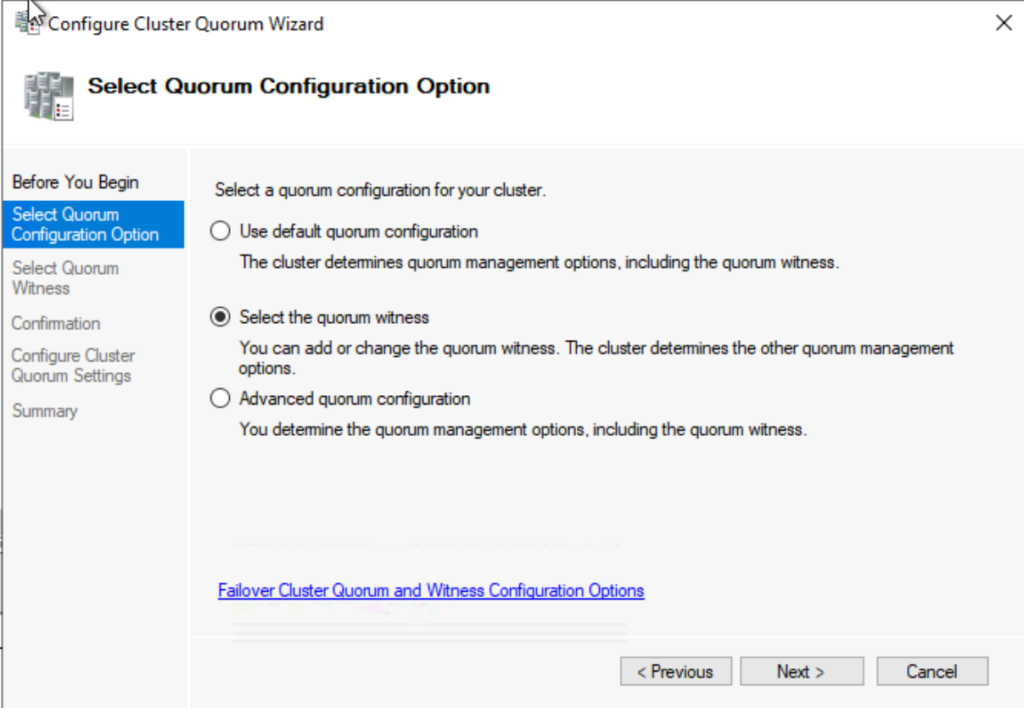

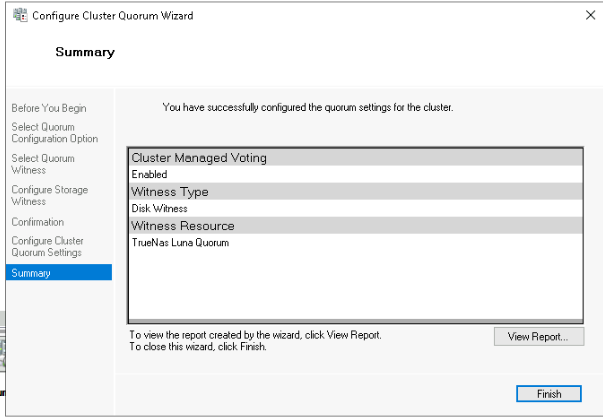

Then, configure the cluster Quorum settings by right clicking the cluster

When going through the wizard, choose Select the Quorum Witness to manually select the drive

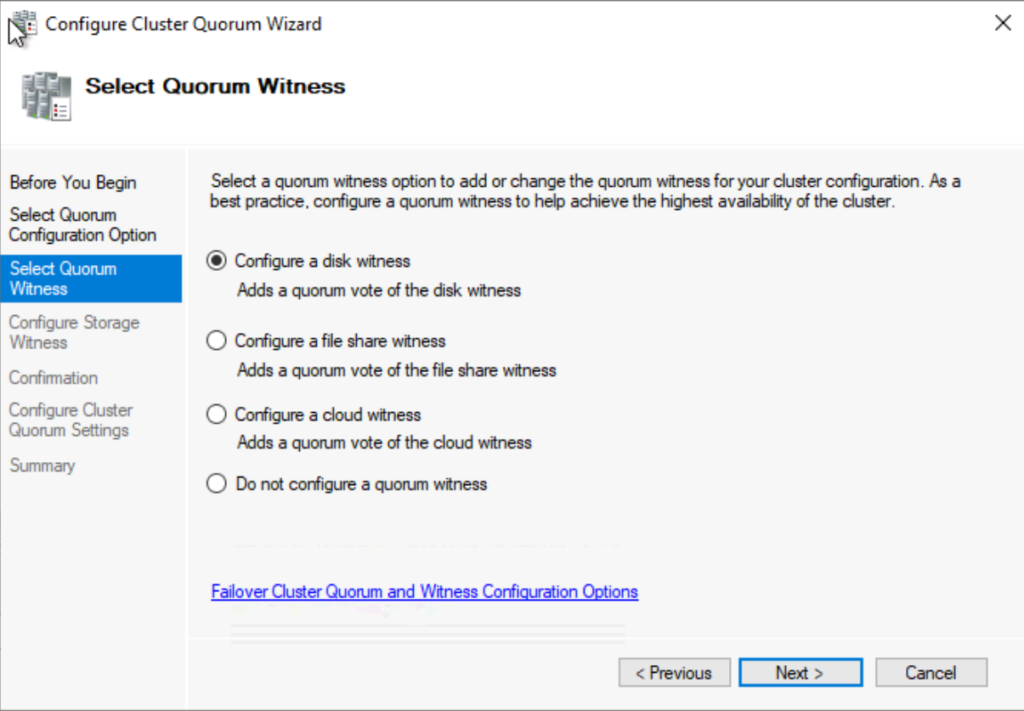

Configure a disk witness

Select the quorum disk you have added earlier

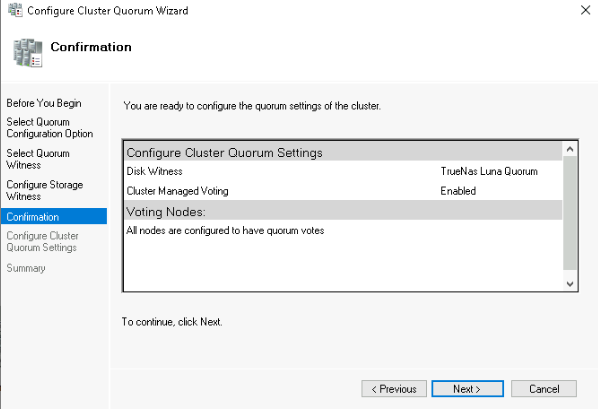

Then click next here to confirm

Then finish

The disk is now showing as assigned to disk witness in quorum, so everything is working as expected here
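The witness can also be set from PowerShell once the disk is in the cluster. This sketch assumes the disk resource has been renamed to Quorum as above

```powershell
# Use the disk resource named "Quorum" as the disk witness
Set-ClusterQuorum -DiskWitness "Quorum"

# Confirm the quorum resource now in use
Get-ClusterQuorum
```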

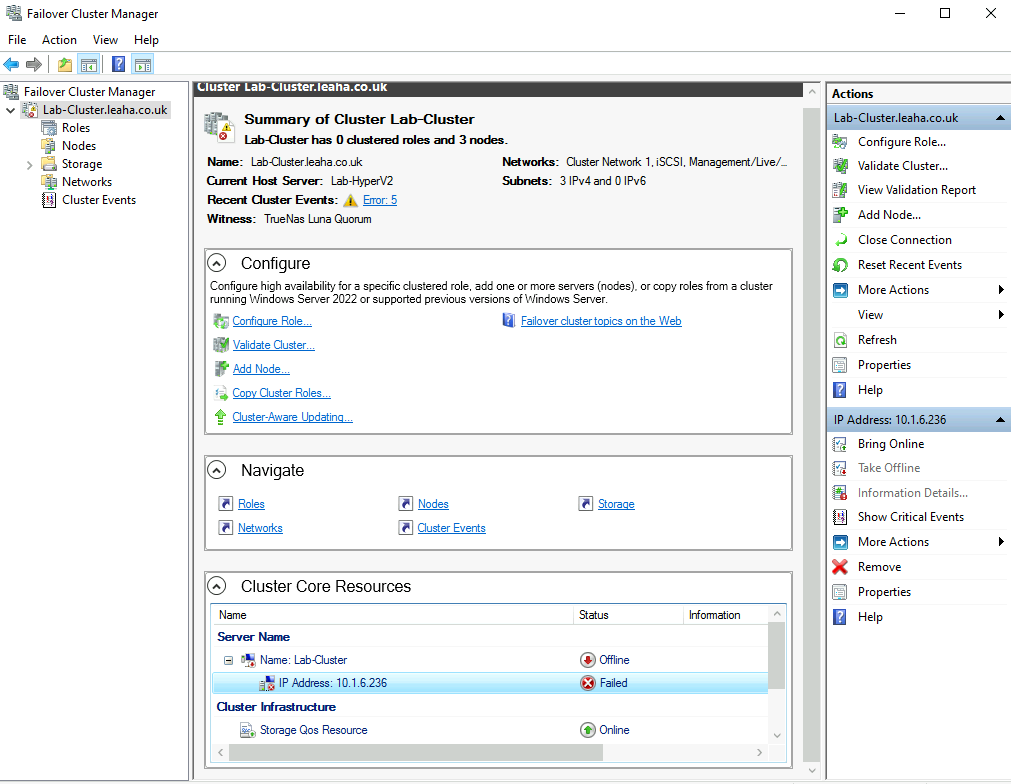

2.6 – Adding/Changing The Cluster IP

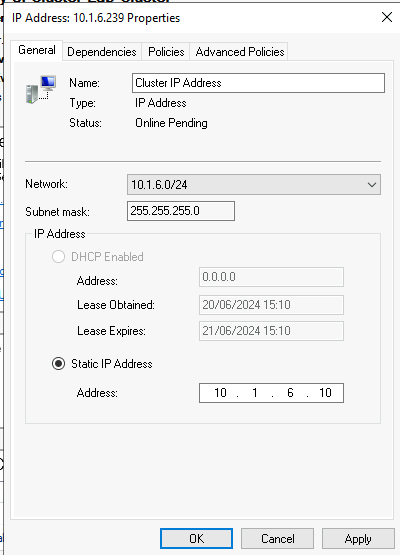

By default when you create a Hyper-V cluster, the cluster IP will be assigned via DHCP. If you want to change this, or set it static, open Failover Cluster Manager, select the cluster and scroll to Cluster Core Resources

Click the IP address and then properties on the right

Set the IP static and make sure you have the right network selected, my management is on 10.1.6.0/24

If your network is not selected make sure your management network is on cluster and client

2.7 – Cluster Validation Errors

Here are some solutions to common validation errors when creating the cluster

2.7.1 – The Cluster Network Name Doesn’t Have Create Computer Objects Permissions

The error you’ll see for this is

The cluster network name <cluster-name> does not have Create Computer Objects permissions on the Organizational Unit <Ad Path And Details> This can result in issues during the creation of additional network names in this OU.

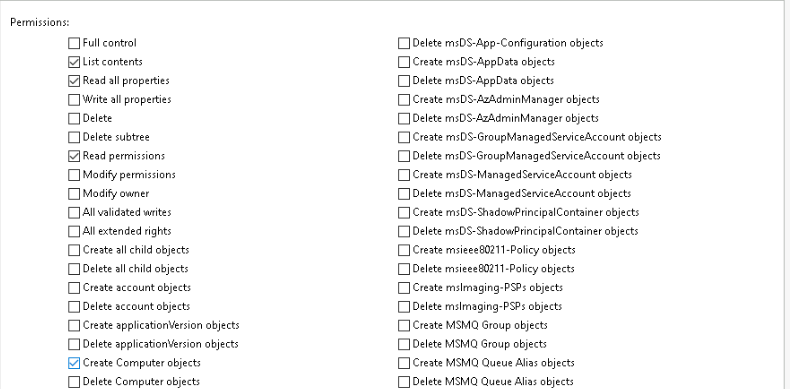

To fix this you need to give the cluster entry the correct permissions in AD

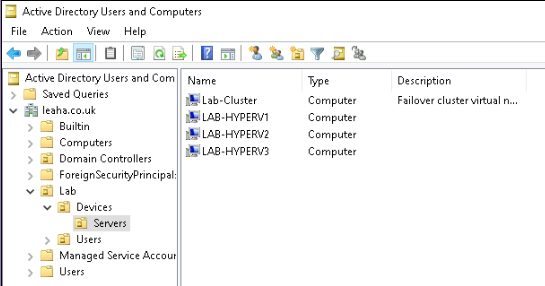

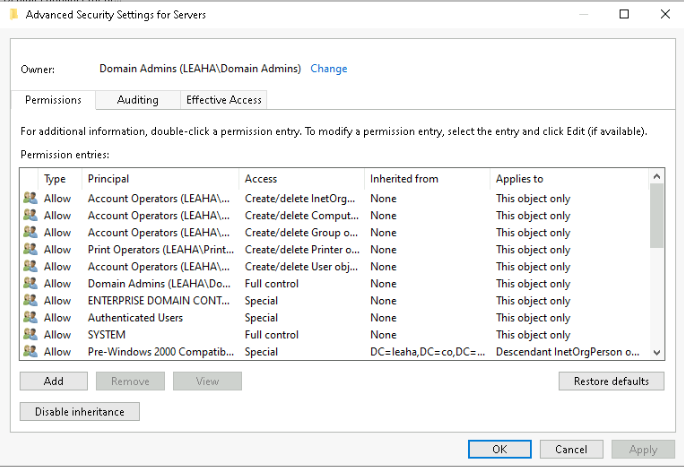

Go to the location of the Hyper-V servers in AD, for me this is under Lab/Devices/Servers

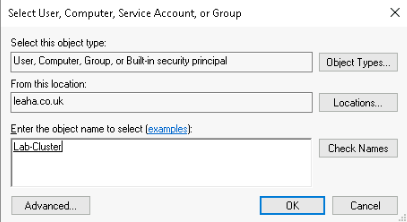

It will have created an entry for the cluster, mine is called Lab-Cluster

This entry needs special permissions to the OU it’s in

Open View and Enable Advanced Features

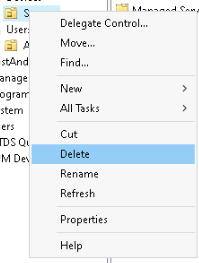

So to fix this, I am going to edit the permissions of the Servers OU

Right click the OU and go to properties

Then security and click advanced

Click Add here, on the bottom left

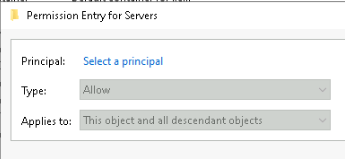

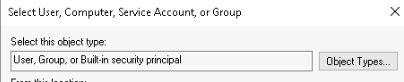

Select a principal

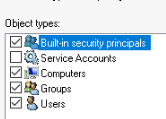

Click object types

Check computers

Add the entry for your cluster, mine is called Lab-Cluster

Ensure Create Computer Objects, on the bottom left, is selected, ensure it also has Read all properties, and that it applies to this object and all descendant objects, ie inheritance

Click ok and apply

2.7.2 – Network Adapter Has RDMA Enabled On The Adapter

Networks that are isolated may give the following error

Network Adapter <Network Name> on Node <host> has RDMA enabled on the adapter, but SMB Client does not view this adapter as RDMA Enabled

If you do not need RDMA you can disable it, on my setup this flagged on the iSCSI, Live Migration and Cluster networks

You can disable it via PowerShell with

Disable-NetAdapterRdma -Name <Adapter-Name>
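To find which adapters are affected before disabling anything, something like the sketch below should help. The adapter names in the second command are assumptions based on my team names, use whatever the first command lists on your hosts

```powershell
# List adapters that currently have RDMA enabled
Get-NetAdapterRdma | Where-Object Enabled | Format-Table Name, InterfaceDescription, Enabled

# Assumption: hypothetical adapter names, replace with the ones listed above
Disable-NetAdapterRdma -Name "iSCSI", "Live Migration", "Cluster"
```

Then re-run the cluster validation to confirm the warning has cleared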

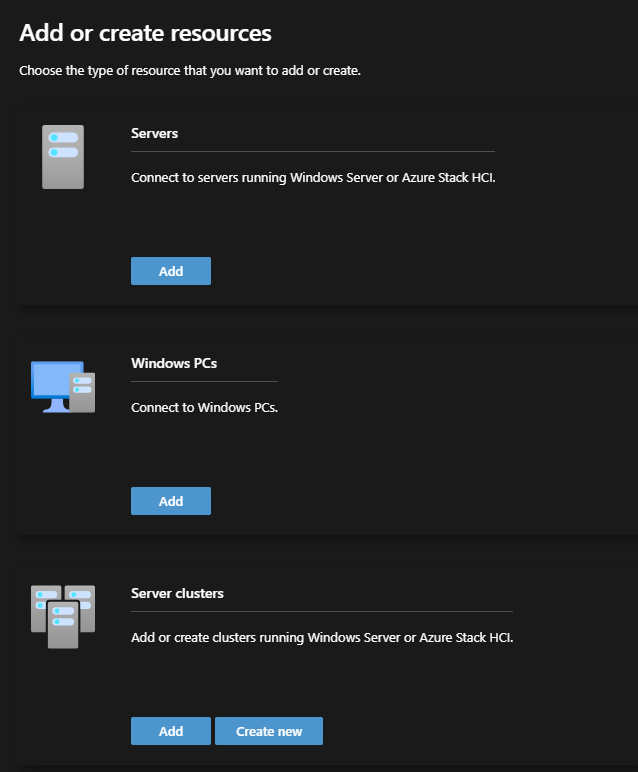

2.8 – Add Cluster To WAC (Optional)

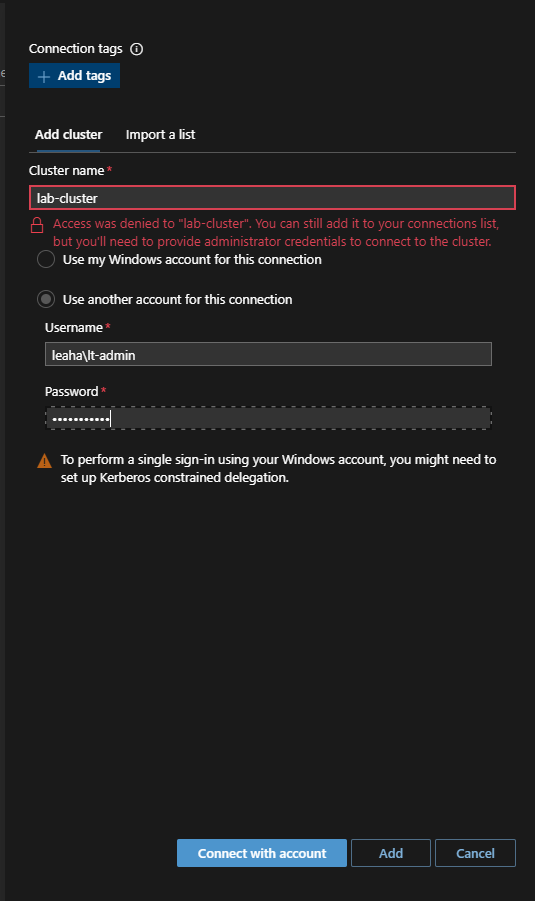

Once you have WAC installed you can add a Hyper-V Cluster from the add section

Then select cluster and add

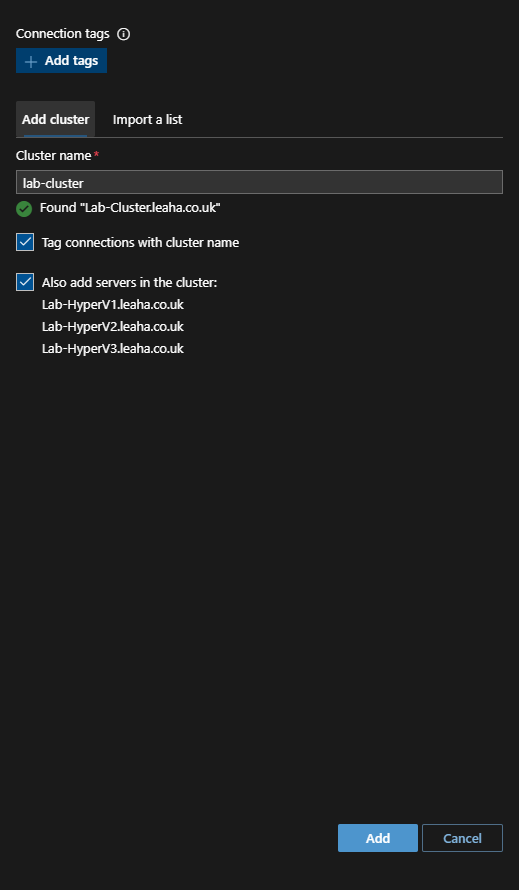

Add the cluster name, this should match to Windows DNS for the cluster IP, and give an admin account if asked

This will also add your Hyper-V hosts, click add

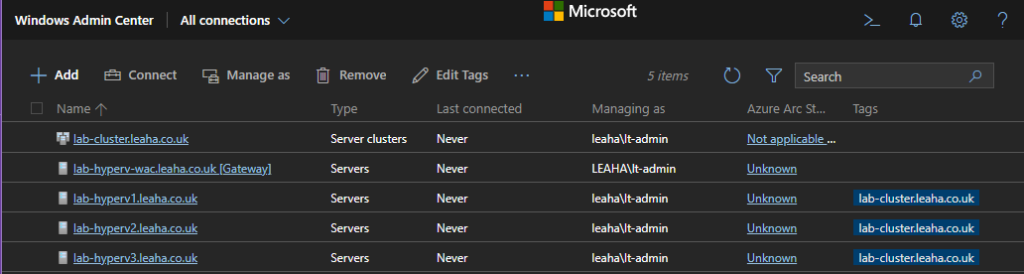

Now the cluster and Hyper-V nodes are showing in WAC

3 – Setting Up SCVMM (Optional)

This part covers setting up SCVMM. You don’t need SCVMM at this point, as you can manage your cluster via Failover Cluster Manager and WAC, but if you have multiple clusters and have the license for SCVMM this section will walk you through setting it up

3.1 – SCVMM VM Setup

To Deploy VMM you’ll need a dedicated VM with at least 16GB of RAM

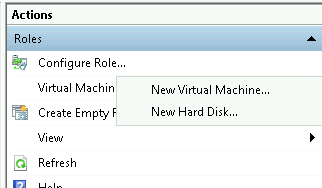

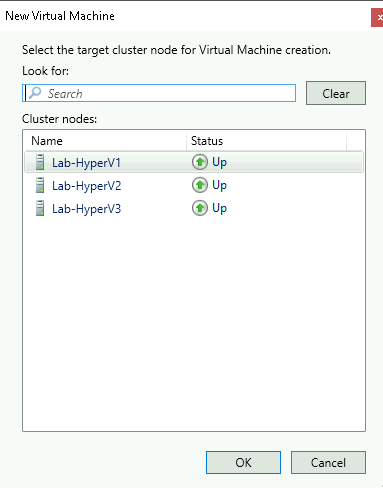

Lets create this on one of our Hyper-V hosts, go to the right on the host and select New/Virtual Machine

Select a node

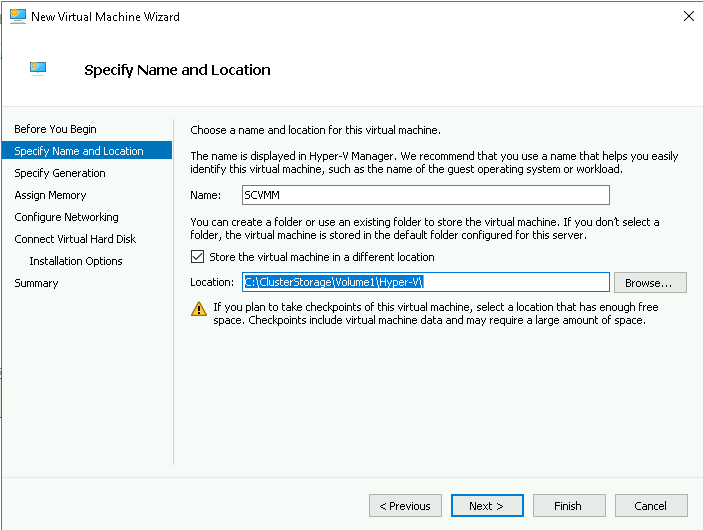

Name the Machine and select the location, you’ll want to use the CSV for this

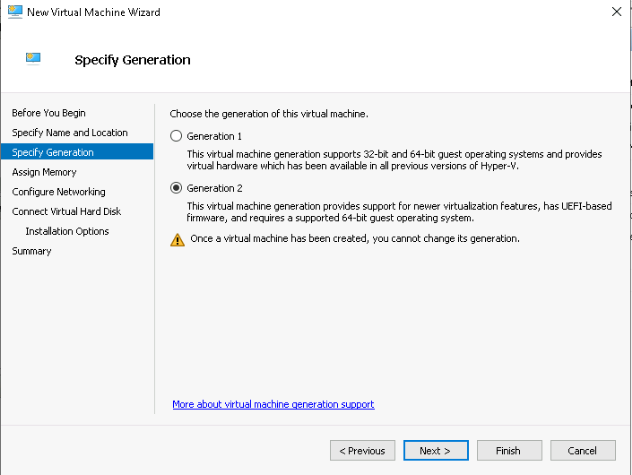

Select the generation

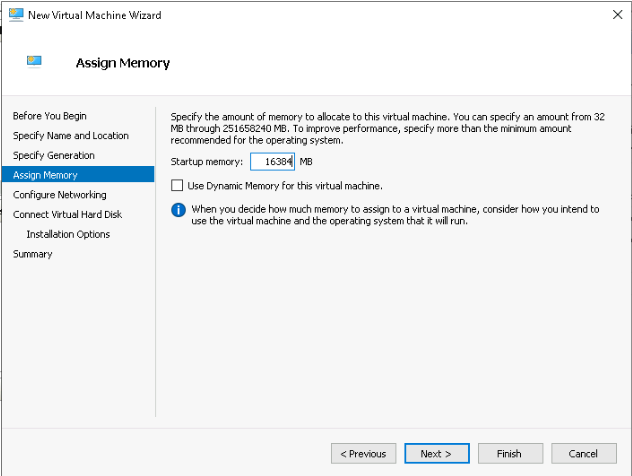

Add the RAM, you need 16384 as a minimum

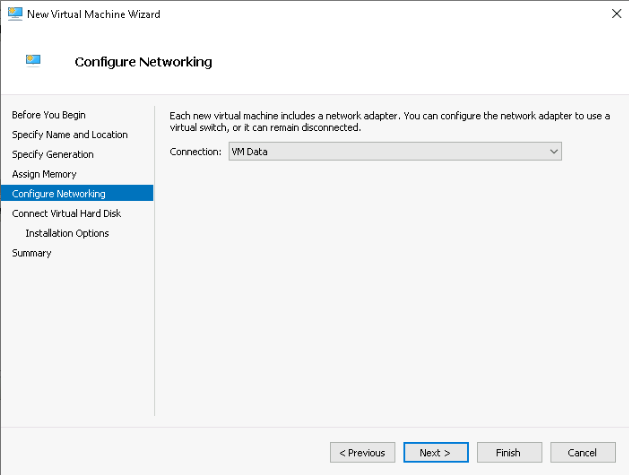

Specify the Network

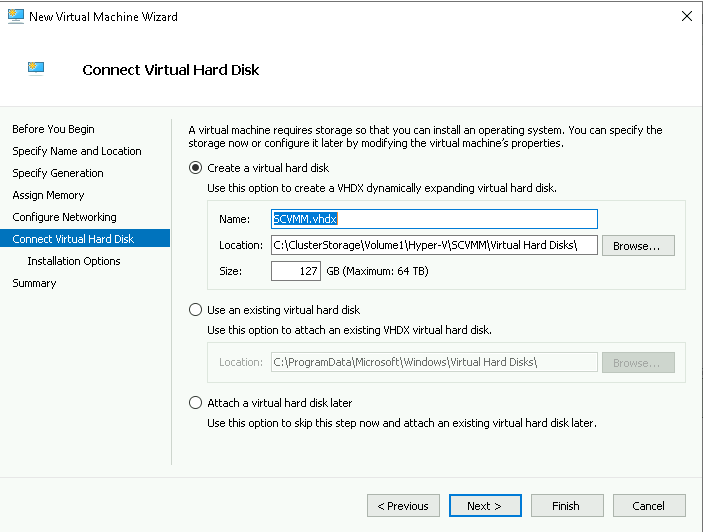

Add a virtual Hard Disk, 128GB should be enough

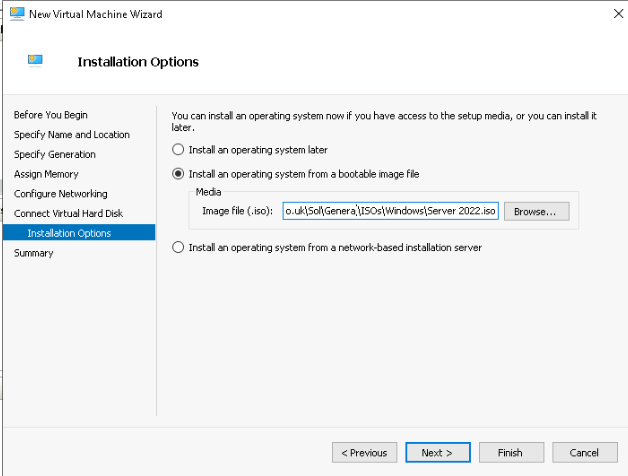

Add a Windows server ISO

Next, right click the VM and go into Settings

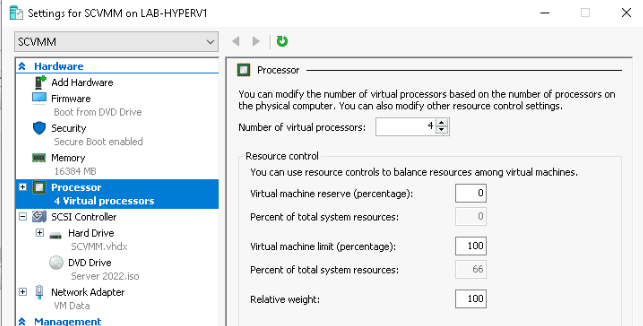

Change the number of CPU cores to 4, the default is 1

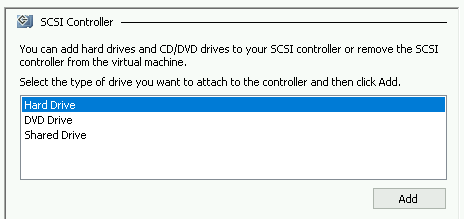

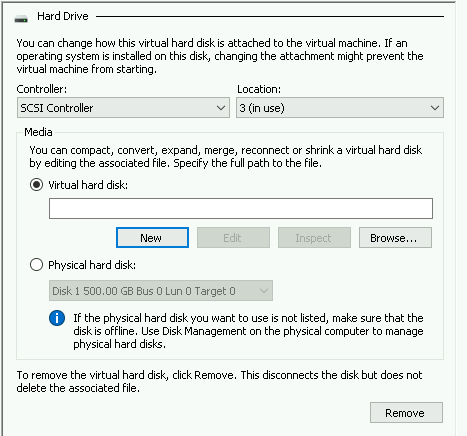

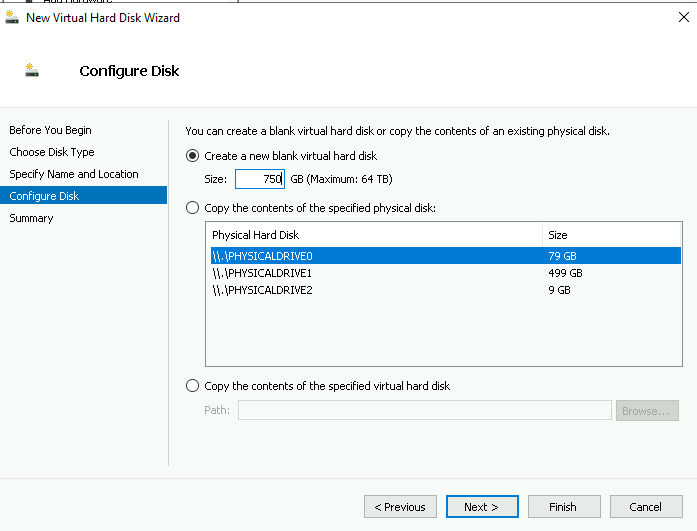

Also add an extra disk to the machine for SQL which should be 750GB, go to the VM settings and select the SCSI controller and add a hard disk

Click new to create a new virtual disk

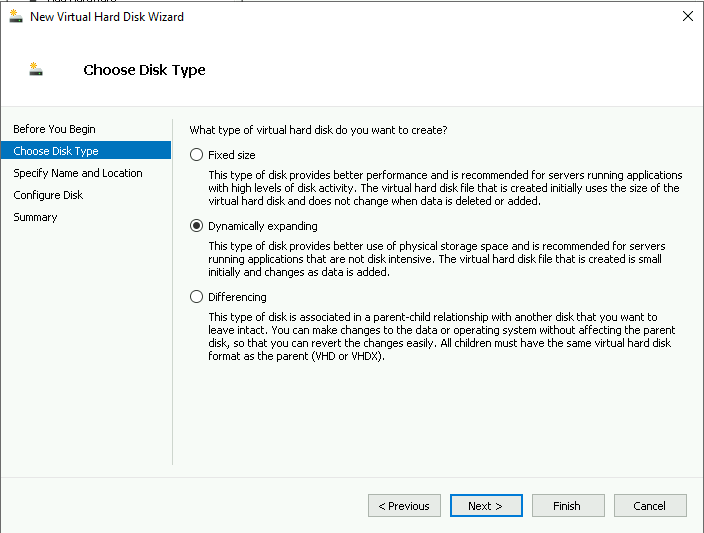

Select dynamically expanding for thin provisioned, or fixed size for thick

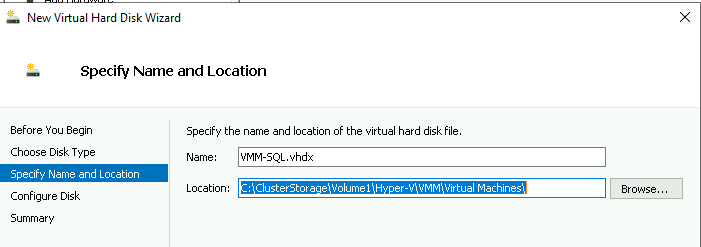

Select the disk name and location

And lastly make it 750GB

Right click and start the VM, and then connect to it and install Windows Server
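For anyone who prefers to script it, the VM above could be built roughly like this on the node that will own it. Everything named here is an assumption for illustration: the VM name, the CSV path (mine is renamed, the default is C:\ClusterStorage\Volume1), generation 2, and the ISO location. The vSwitch is the VM Data switch from earlier

```powershell
# Assumptions: hypothetical VM name, CSV path and ISO path, adjust to your environment
$vmName  = "SCVMM"
$vmRoot  = "C:\ClusterStorage\Volume1"
$isoPath = "C:\ISO\WindowsServer.iso"
$sqlVhd  = "$vmRoot\$vmName\$vmName-SQL.vhdx"

# 16GB RAM, 128GB OS disk, attached to the SET vSwitch
$vmParams = @{
    Name               = $vmName
    Generation         = 2
    MemoryStartupBytes = 16GB
    Path               = $vmRoot
    NewVHDPath         = "$vmRoot\$vmName\$vmName-OS.vhdx"
    NewVHDSizeBytes    = 128GB
    SwitchName         = "VM Data"
}
New-VM @vmParams

# 4 vCPUs instead of the default 1
Set-VMProcessor -VMName $vmName -Count 4

# Extra 750GB dynamically expanding (thin) disk for SQL on the SCSI controller
New-VHD -Path $sqlVhd -SizeBytes 750GB -Dynamic
Add-VMHardDiskDrive -VMName $vmName -ControllerType SCSI -Path $sqlVhd

# Attach the Windows Server ISO and boot from it first
Add-VMDvdDrive -VMName $vmName -Path $isoPath
Set-VMFirmware -VMName $vmName -FirstBootDevice (Get-VMDvdDrive -VMName $vmName)

# Make the VM a clustered role so it can fail over, then start it
Add-ClusterVirtualMachineRole -VMName $vmName
Start-VM -Name $vmName
```

Use -Fixed instead of -Dynamic on New-VHD if you want the SQL disk thick provisioned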

3.2 – Installing Software Prerequisites

Run the following to download all the exe and msi files to C:\Temp

$tempFolderName = "Temp"

$tempFolder = "C:\" + $tempFolderName +"\"

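$itemType = "Directory"

# Create C:\Temp if it doesn't already exist, otherwise the downloads below have nowhere to land

New-Item -ItemType $itemType -Path $tempFolder -Force | Out-Null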

$visualC2017x64Url = "https://aka.ms/vs/17/release/vc_redist.x64.exe"

$visualC2017x64Exe = $tempFolder + "VC_redist.x64.exe"

$msodbcsqlUrl = "https://go.microsoft.com/fwlink/?linkid=2239168"

$msodbcsqlMsi = $tempFolder + "msodbcsql.msi"

$msSqlCmdLnUtilsUrl = "https://go.microsoft.com/fwlink/?linkid=2230791"

$msSqlCmdLnUtilsMsi = $tempFolder + "MsSqlCmdLnUtils.msi"

$adkUrl = "https://go.microsoft.com/fwlink/?linkid=2196127"

$adkExe = $tempFolder + "adksetup.exe"

$adkWinPeUrl = "https://go.microsoft.com/fwlink/?linkid=2196224"

$adkWinPeExe = $tempFolder + "adkwinpesetup.exe"

Invoke-WebRequest -Uri $visualC2017x64Url -OutFile $visualC2017x64Exe

Invoke-WebRequest -Uri $msodbcsqlUrl -OutFile $msodbcsqlMsi

Invoke-WebRequest -Uri $msSqlCmdLnUtilsUrl -OutFile $msSqlCmdLnUtilsMsi

Invoke-WebRequest -Uri $adkUrl -OutFile $adkExe

Invoke-WebRequest -Uri $adkWinPeUrl -OutFile $adkWinPeExe

Then install everything that’s downloaded

Run the VC redist exe

VC_redist.x64

Then install adkwinpesetup

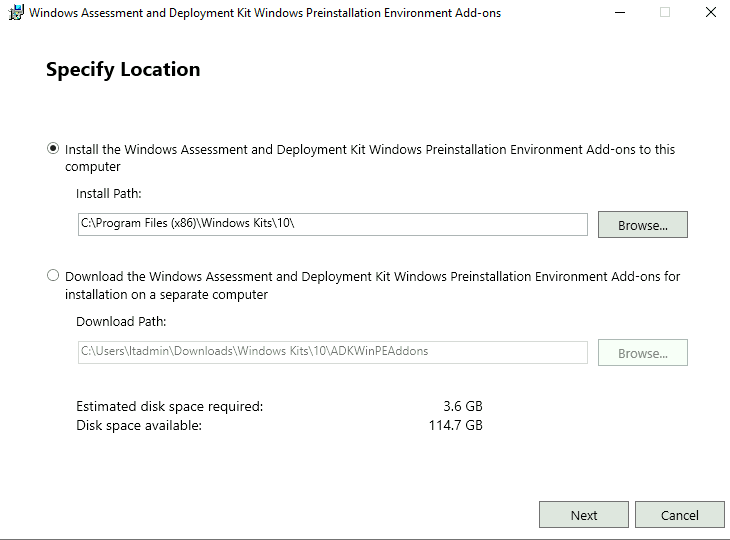

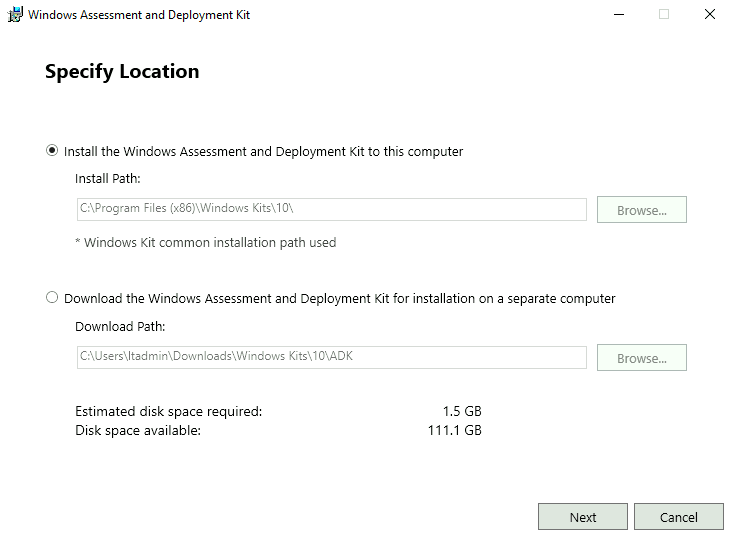

Specify the location and hit next

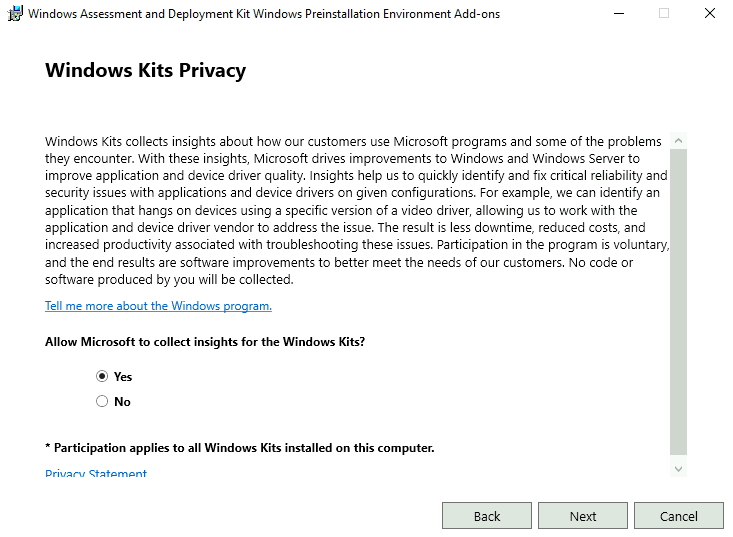

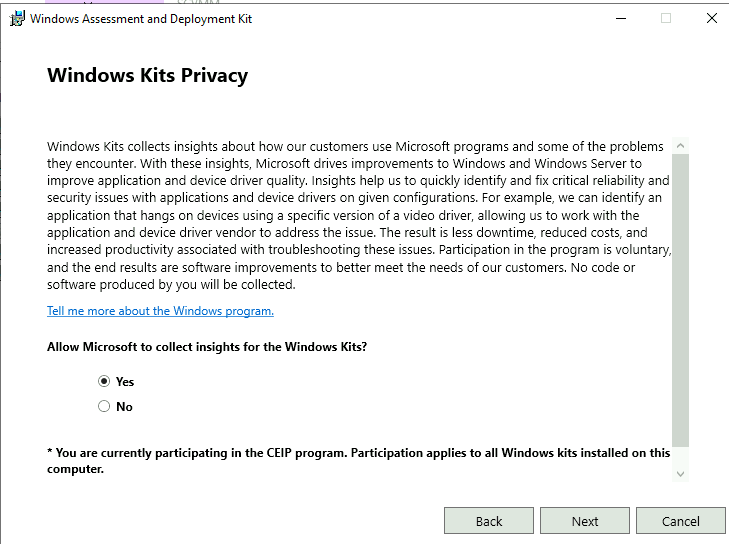

Click next here

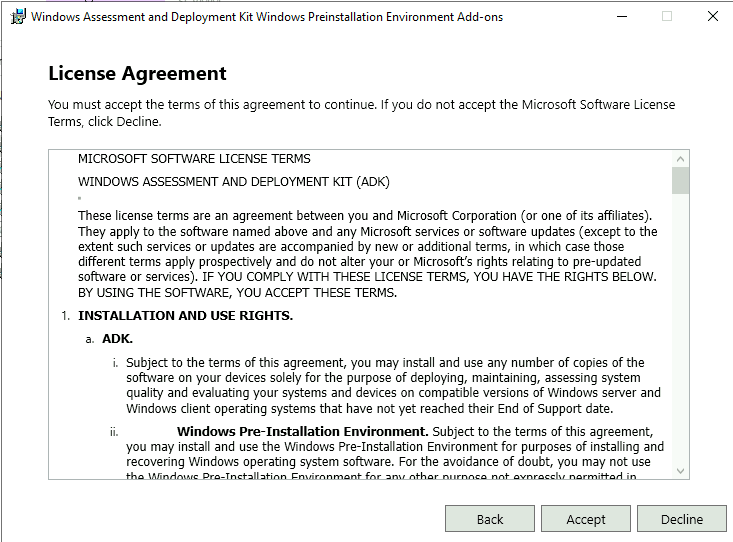

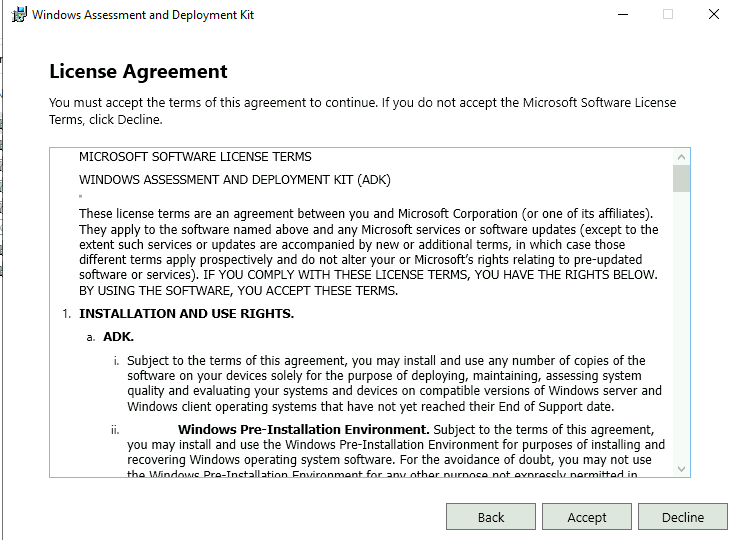

Click accept here

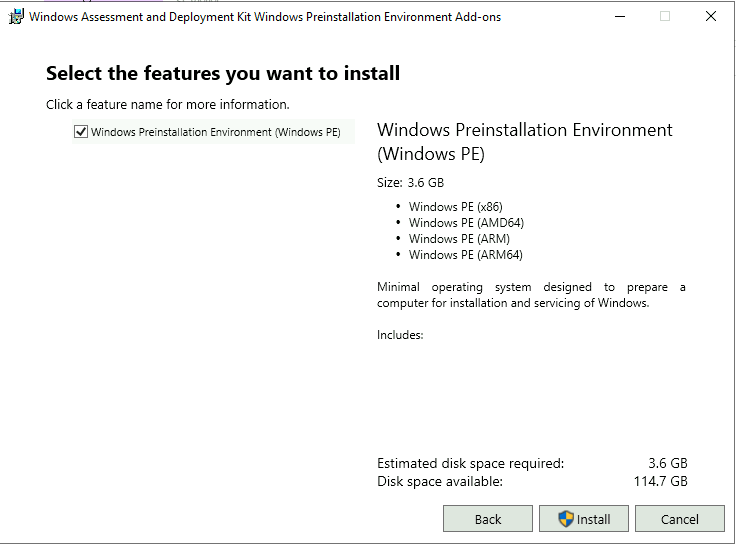

Then click install

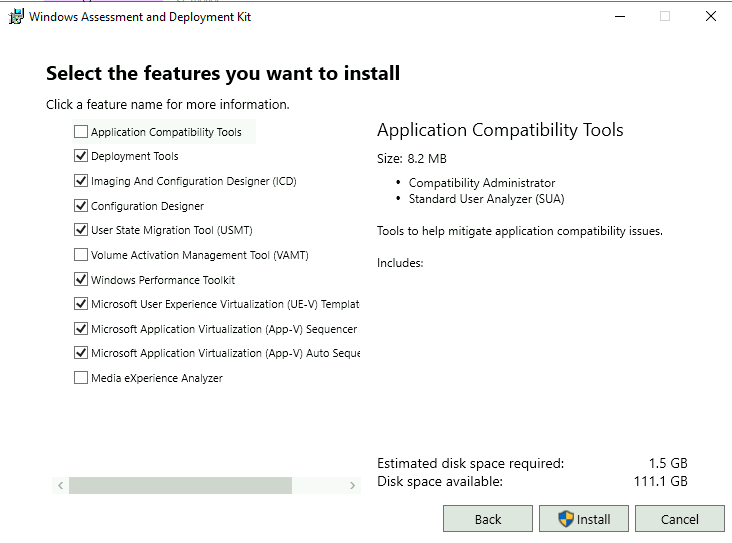

Install the adksetup

Specify the install location

Accept or decline the privacy page

Accept the EULA

Click install

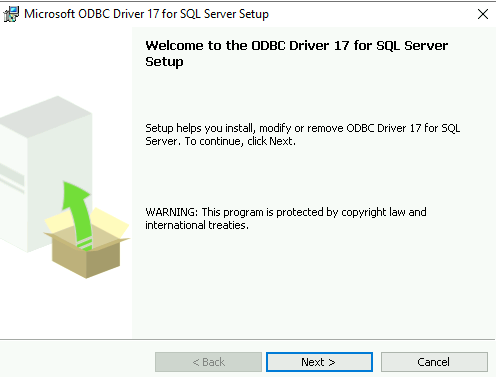

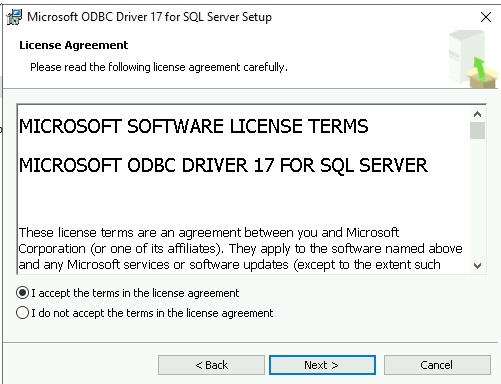

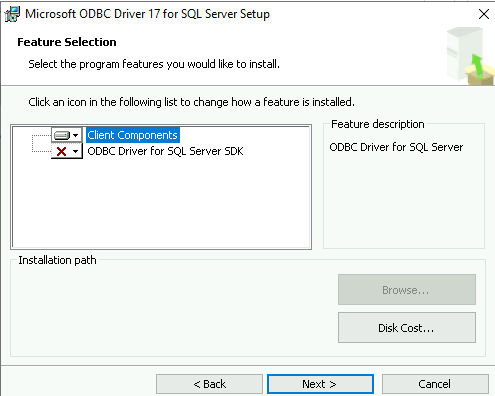

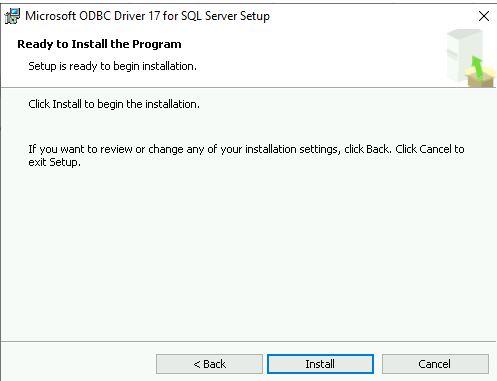

Then install the ODBC setup

Click next here to begin installation

Accept the EULA

Click next here on the defaults

Then install to finish

3.3 – Setting Up The DKM Container

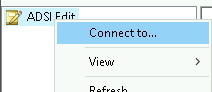

Connect to your DC and open ADSI edit

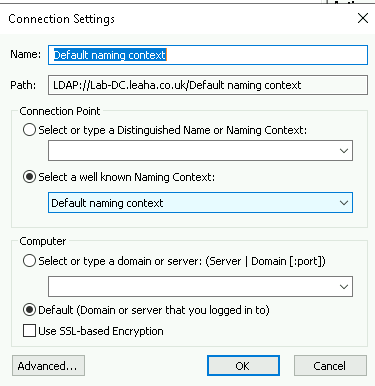

Right click ADSI edit and go to connect to

Connect to the default naming context

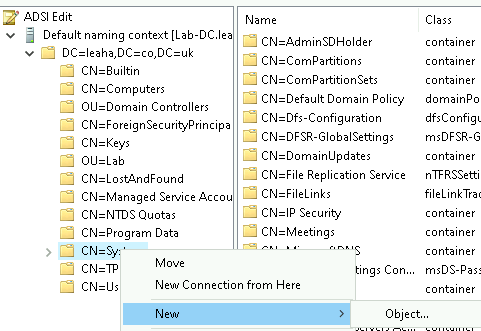

Expand your domain, go to CN=System, right click and create a new object

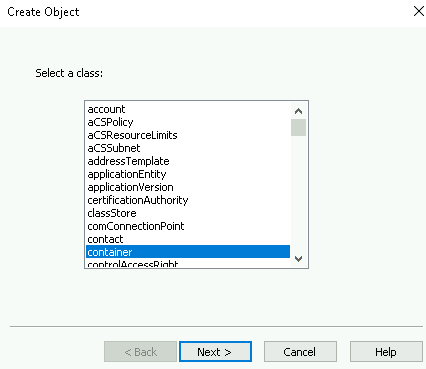

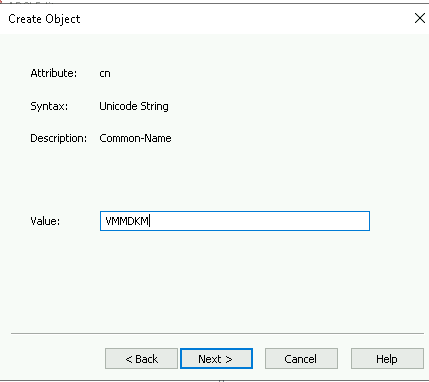

Create a container and click next

Name the object, eg VMMDKM and click next

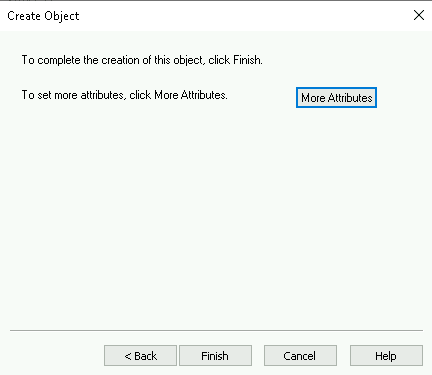

Then click finish

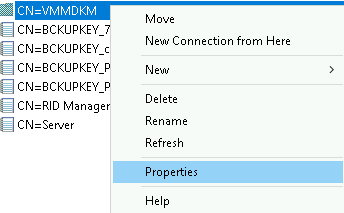

Then right click the container, and go to properties

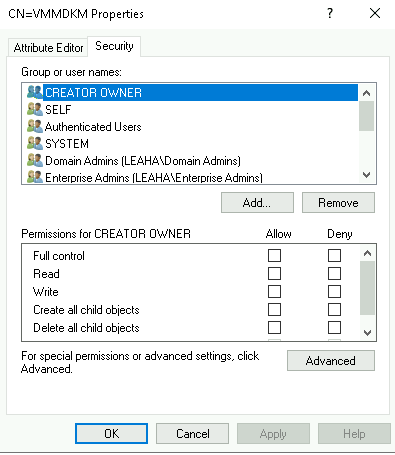

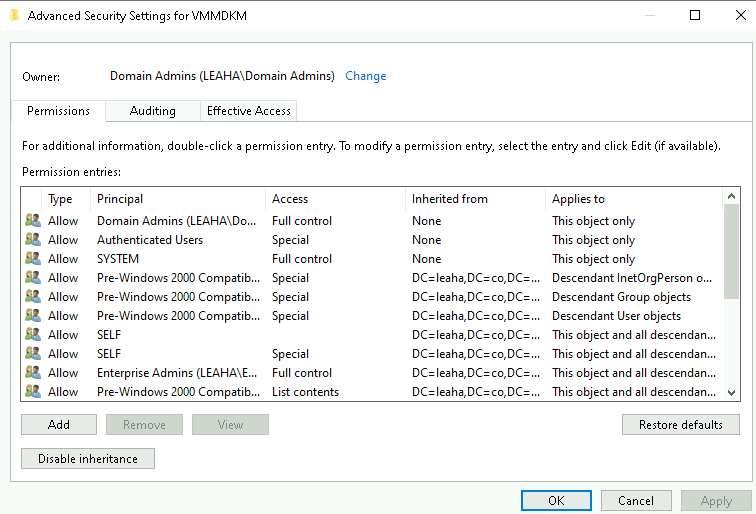

Go to security, then advanced

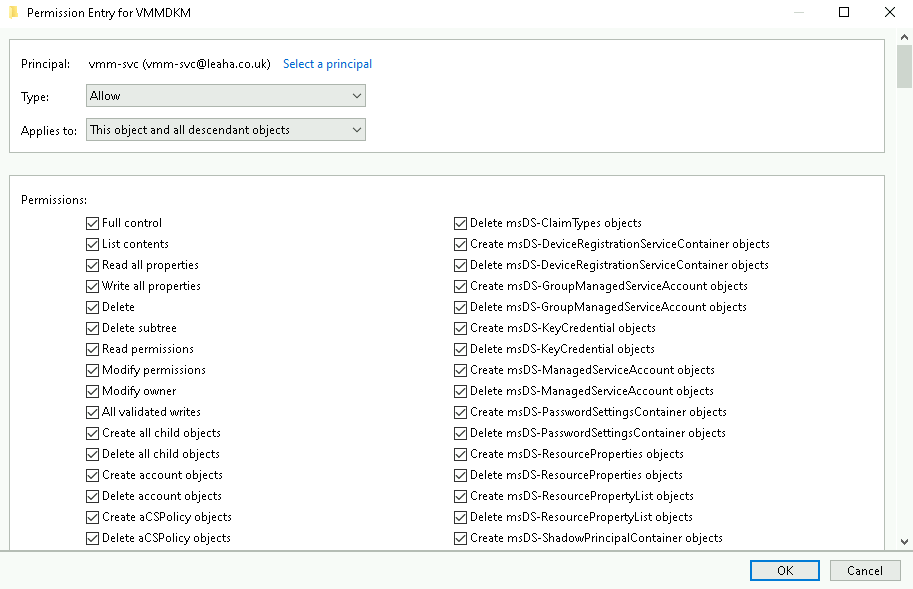

Click add

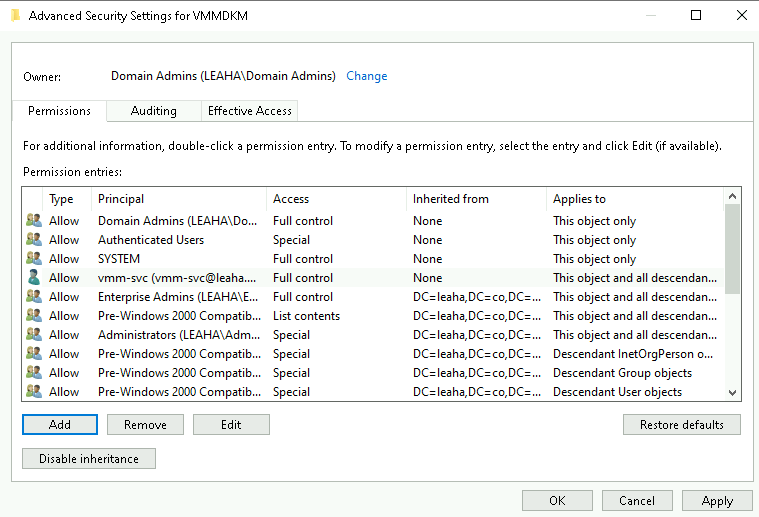

Add the VMM service account with full permissions for the container

Then, ok and ok again to apply and close

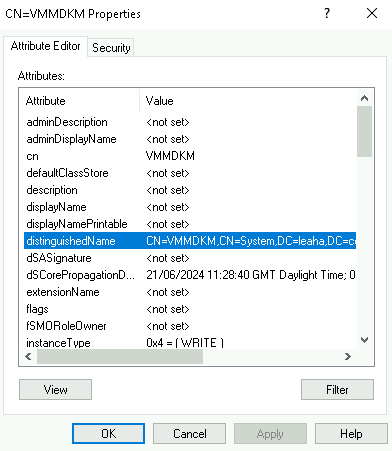

Go into the properties of the container again, and double click distinguished name

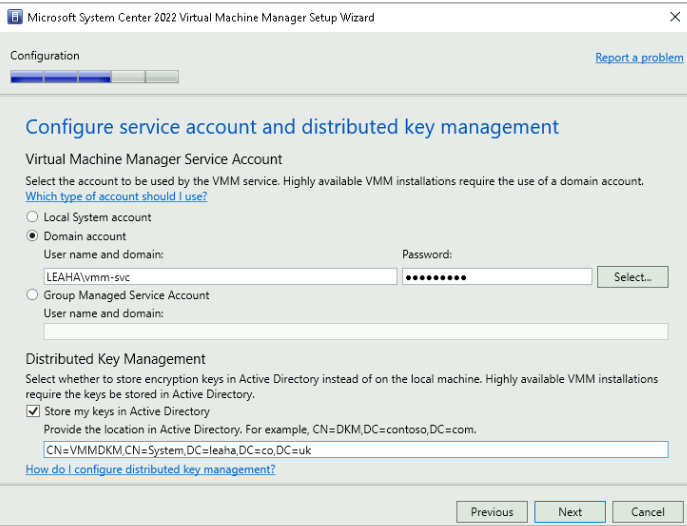

Make a note of this as you will need it during the installation

E.g. mine is

CN=VMMDKM,CN=System,DC=leaha,DC=co,DC=uk
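If you have the ActiveDirectory PowerShell module to hand, the container itself can be created without ADSI Edit. This is only a sketch of the creation step and reading back the distinguished name, it assumes the VMMDKM name from above and that you run it as an account allowed to write to CN=System, the permissions for the VMM service account still need granting as shown earlier

```powershell
Import-Module ActiveDirectory

# Build the path to the System container for the current domain
$systemDn = "CN=System," + (Get-ADDomain).DistinguishedName

# Create the DKM container
New-ADObject -Name "VMMDKM" -Type container -Path $systemDn

# Read back the distinguished name to use during the VMM install
(Get-ADObject -Identity "CN=VMMDKM,$systemDn").DistinguishedName
```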

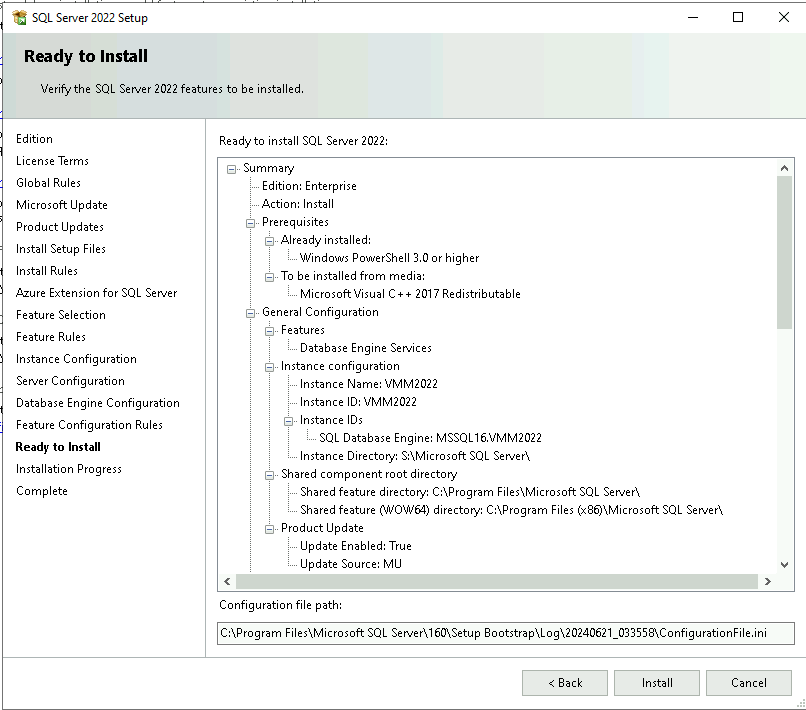

3.4 – Installing SQL

For VMM 2022, we will be using SQL 2022

Sign in with the service account before installing SQL and make sure it’s in the local administrators group

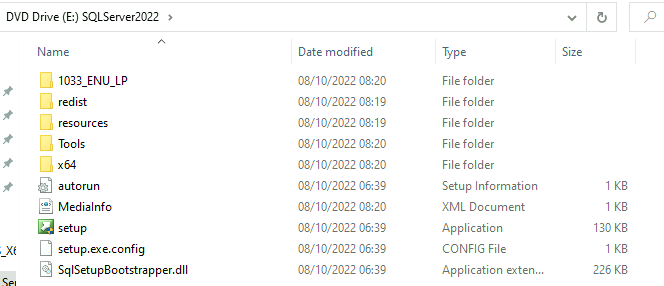

Mount and open the ISO and run the setup.exe

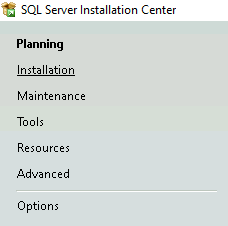

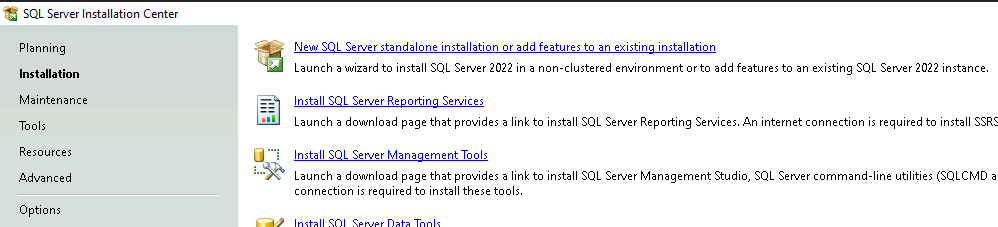

Click installation

Then click new SQL standalone installation

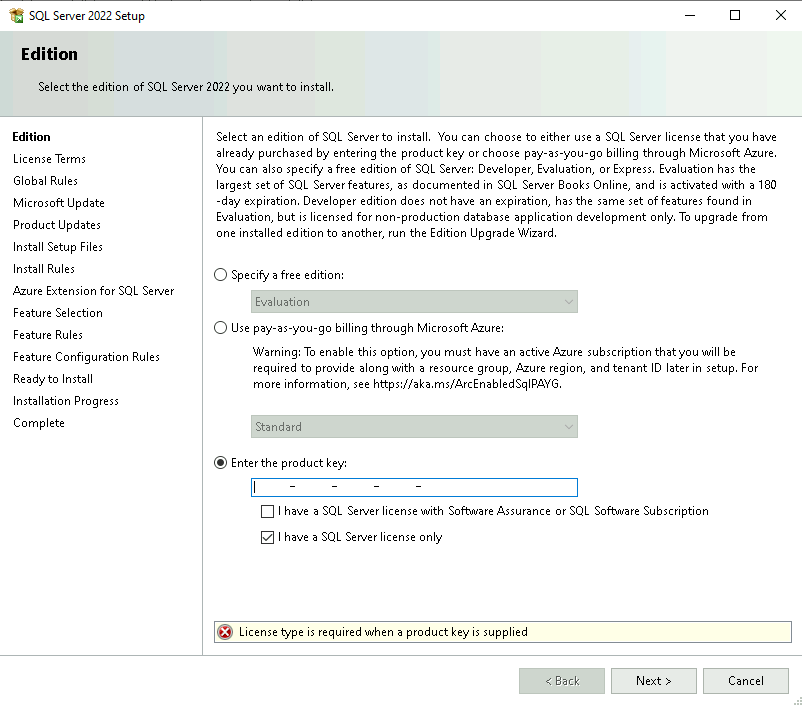

Enter a product key, you can use the evaluation free license for testing

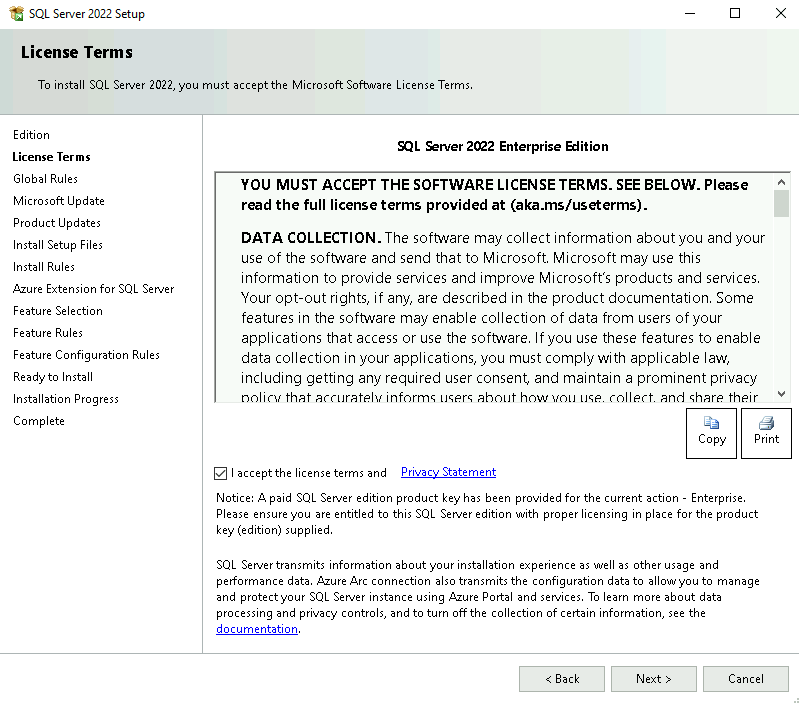

Accept the EULA

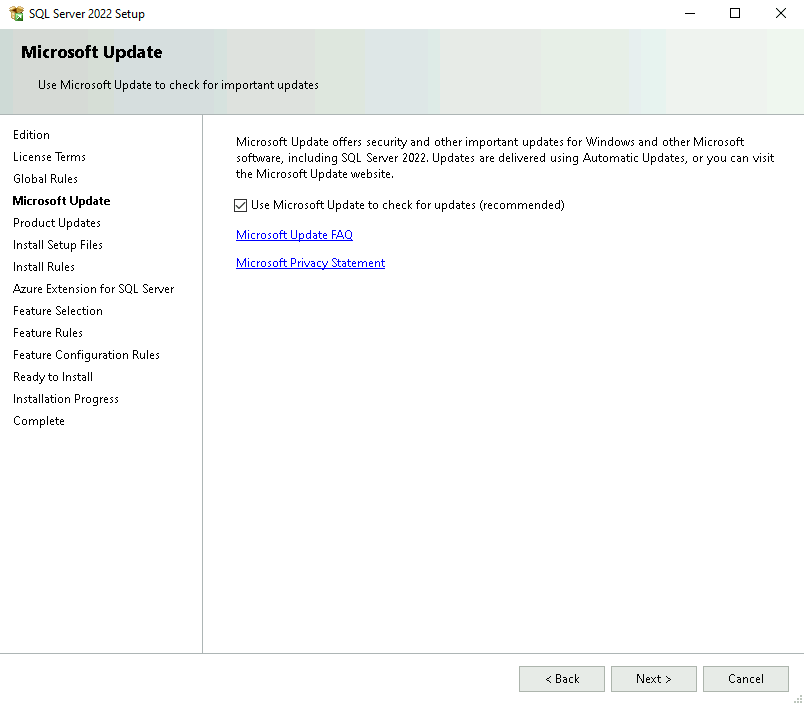

Check using Microsoft update to update SQL

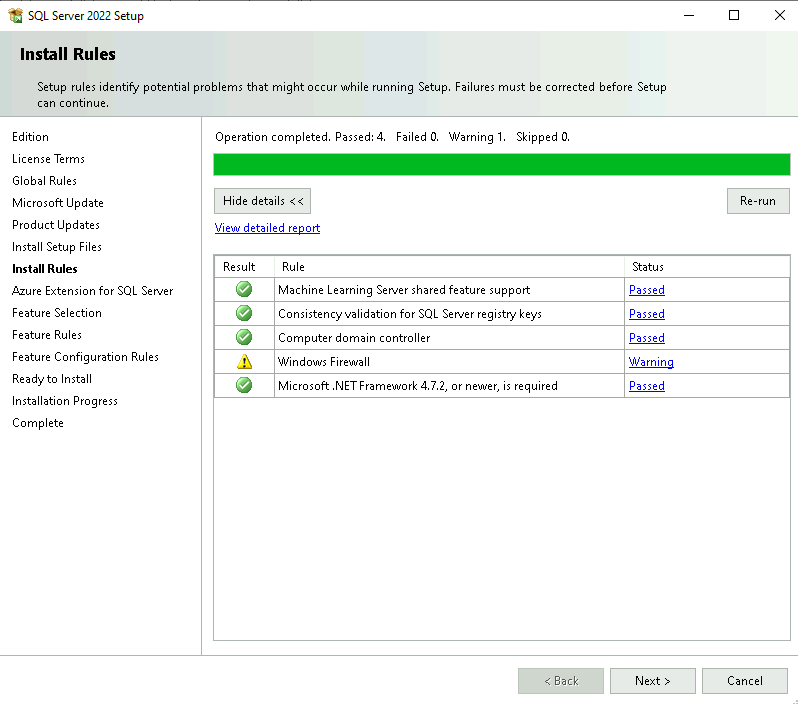

Check the install tests for anything flagged. The warning here is because the Windows firewall is enabled, so it will need rules to allow the right communication

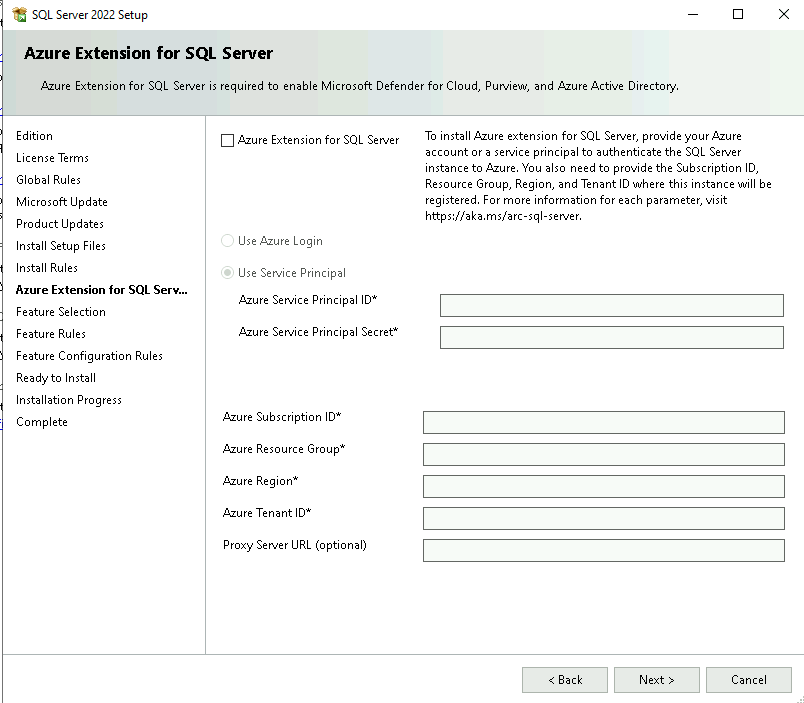

We can uncheck the Azure extension, unless you explicitly need it

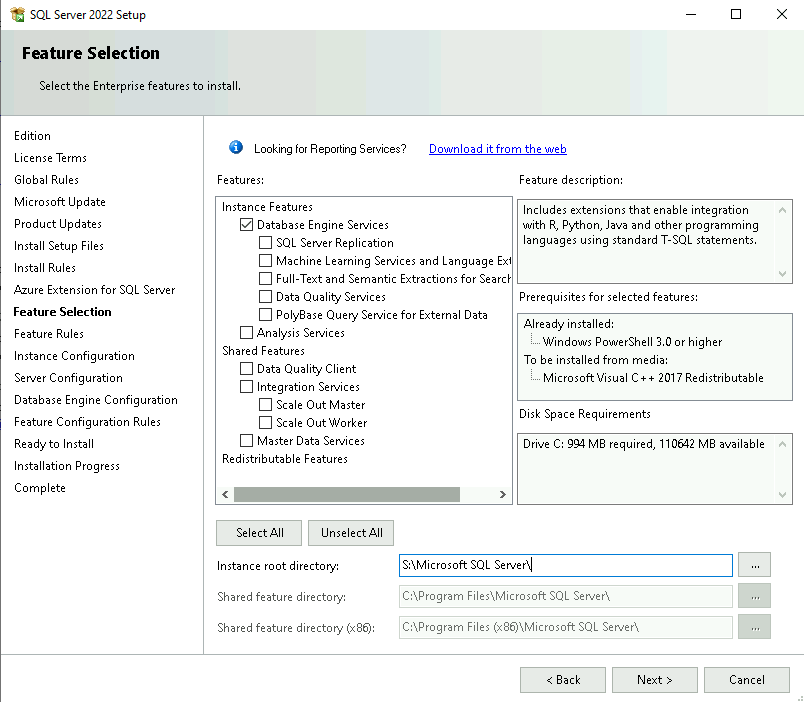

Select the Database Engine Services

Change the instance root directory to the SQL Drive, I have mine mounted on S

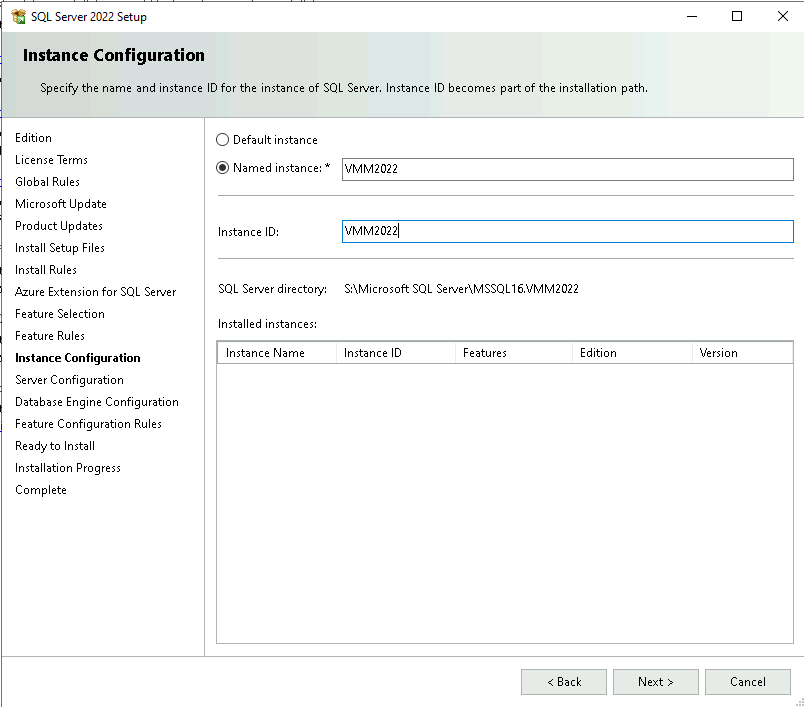

Create a named instance and instance ID, these should be the same

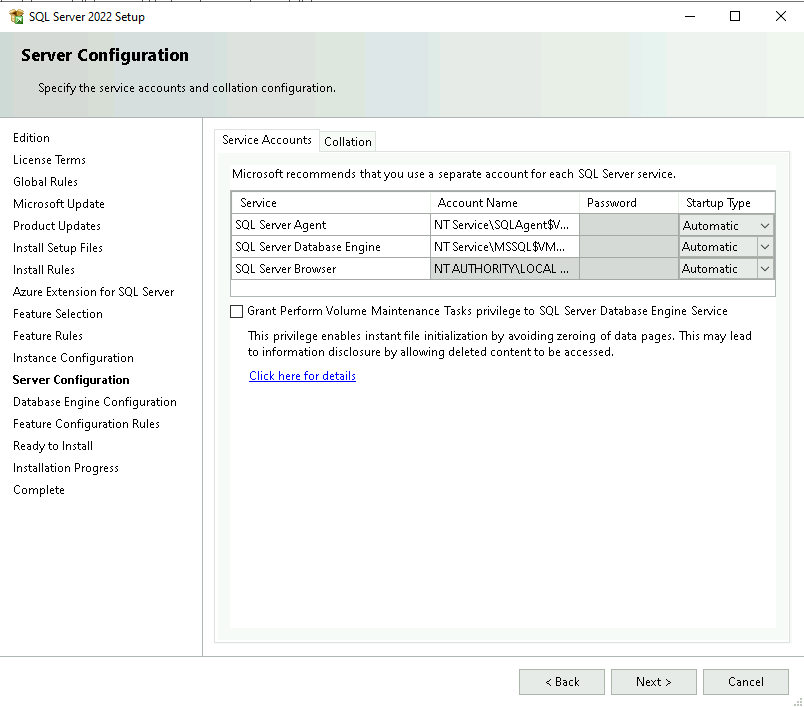

Leave the service account as stock, as this is an isolated instance, so it doesn’t need a domain service account

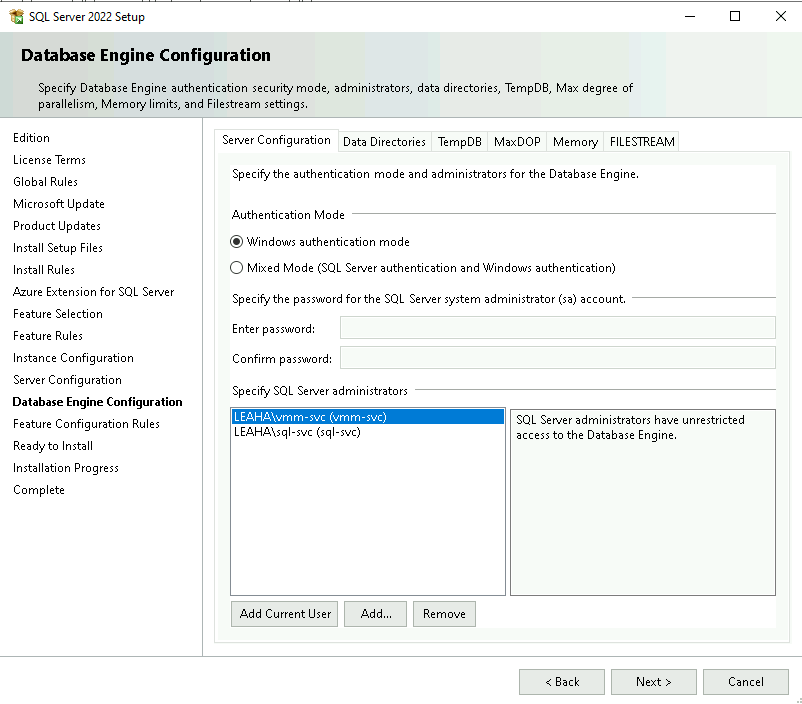

Stick with Windows authentication mode and add the VMM/SQL service accounts to have full access

Once you’re happy, click install

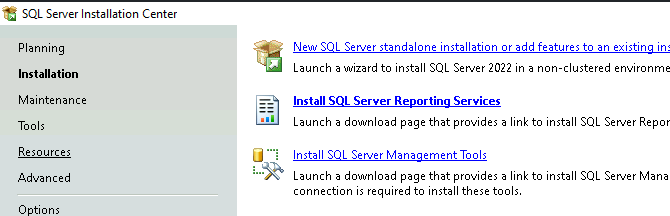

Now install the management tools from the ISO by clicking the link

Then scroll down and click the link here to download it

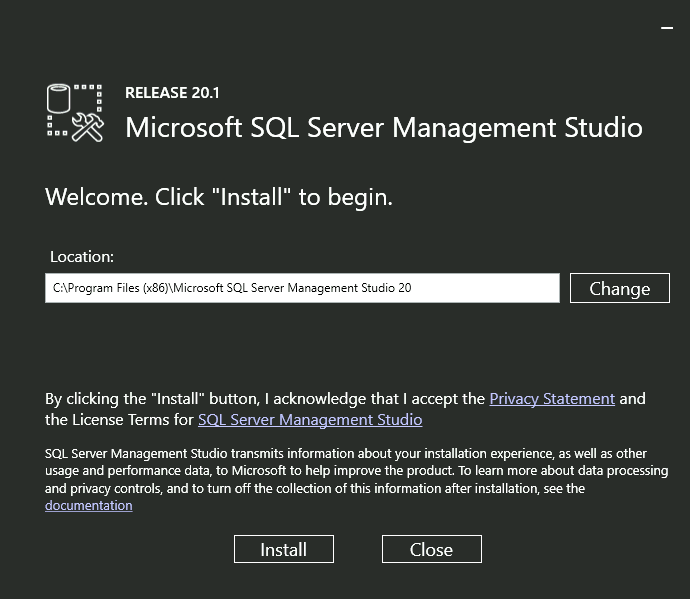

Run the executable

Then click install

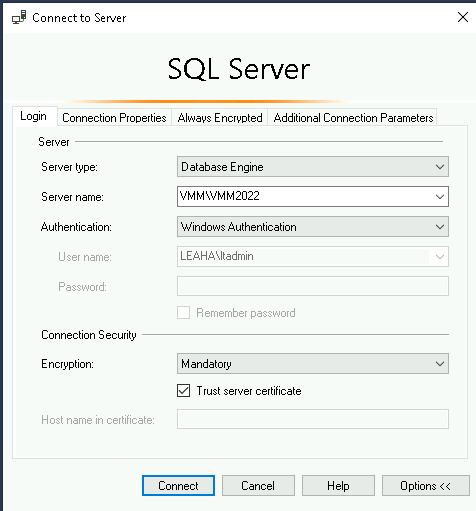

When connecting to the DB, make sure you click trust certificate as it’s self signed

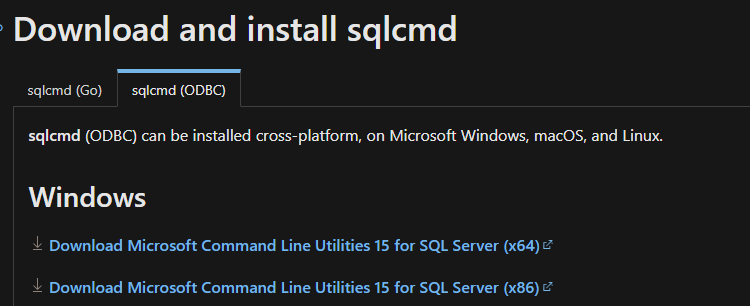

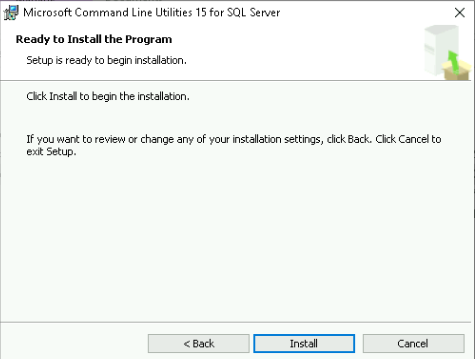

Lastly install the SQL command line utilities from here

Download the x64 version here

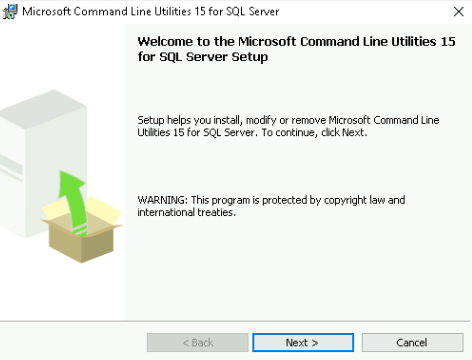

Run the executable, then hit next

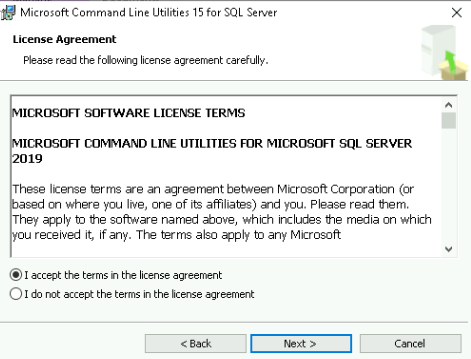

Accept the EULA

Then install

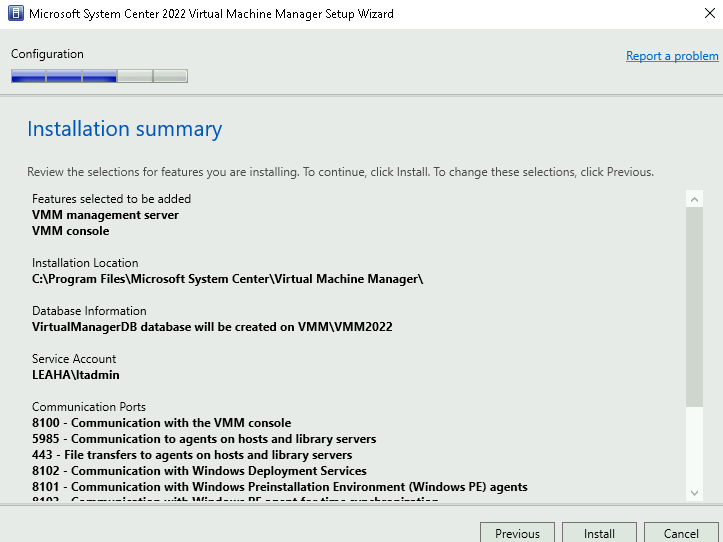

3.5 – Installing SCVMM

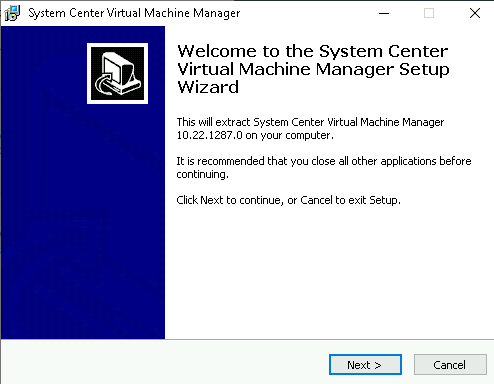

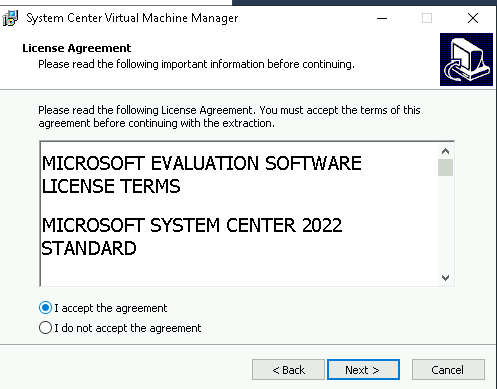

You can download SCVMM from here

Run the executable and click next

Accept the EULA

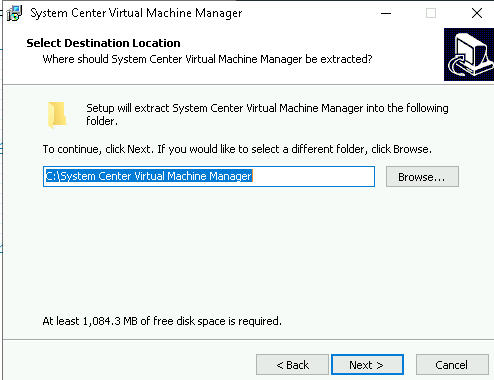

Set an installation path, I am using C for VMM and S for SQL, then click extract

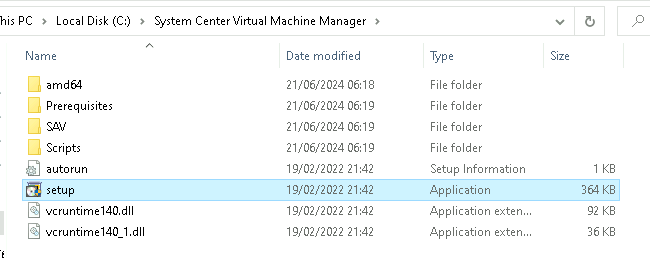

Then run the setup from C:\System Center Virtual Machine Manager

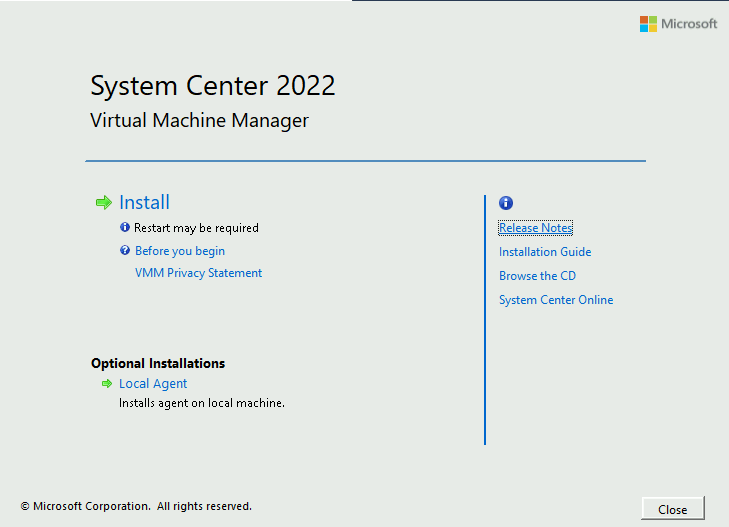

From the install screen, click install

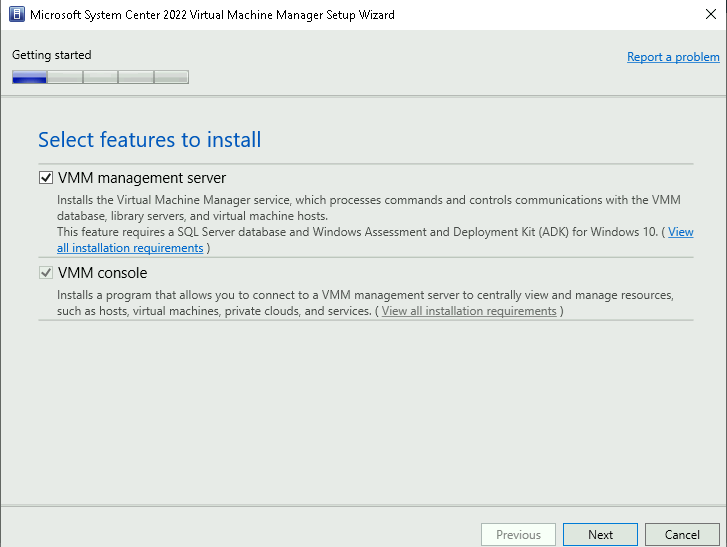

Select both the Management server and the console to install

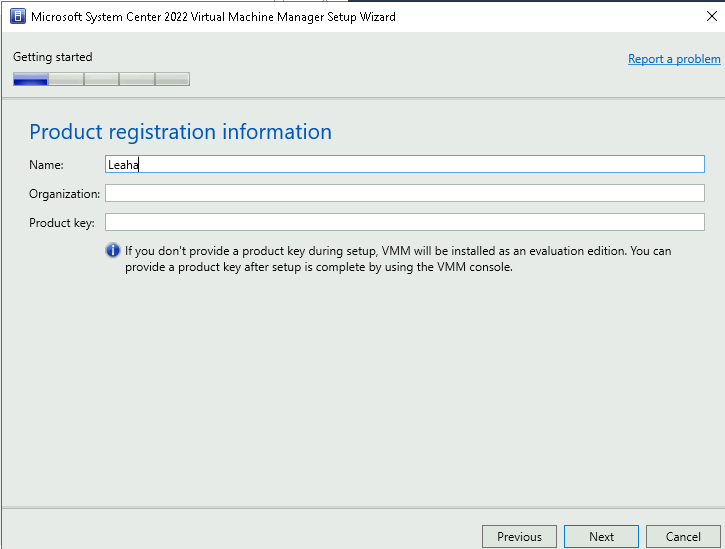

Add a name and your license key details, entering no key gives you a 180 day trial

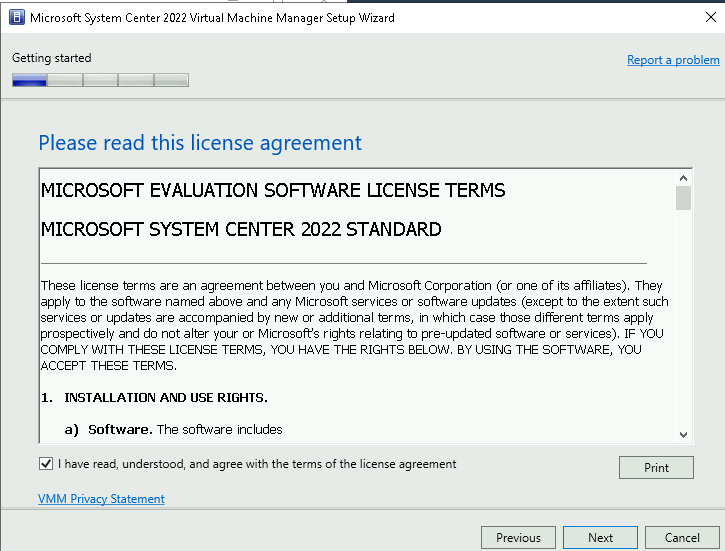

Accept the EULA

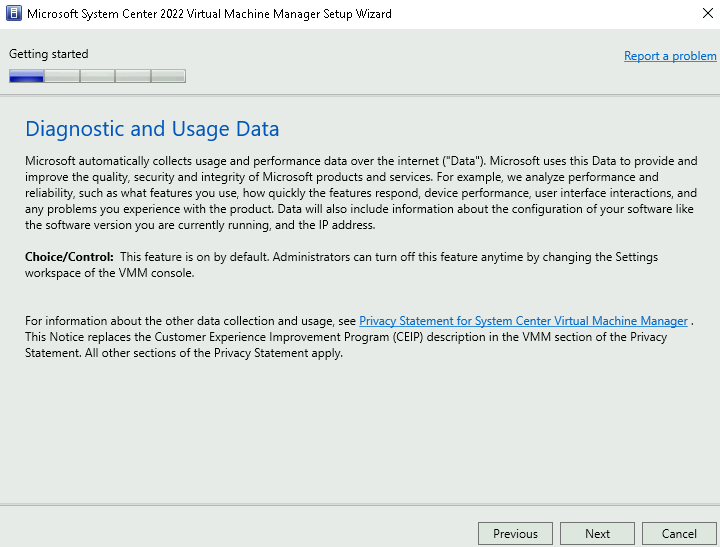

Click next here

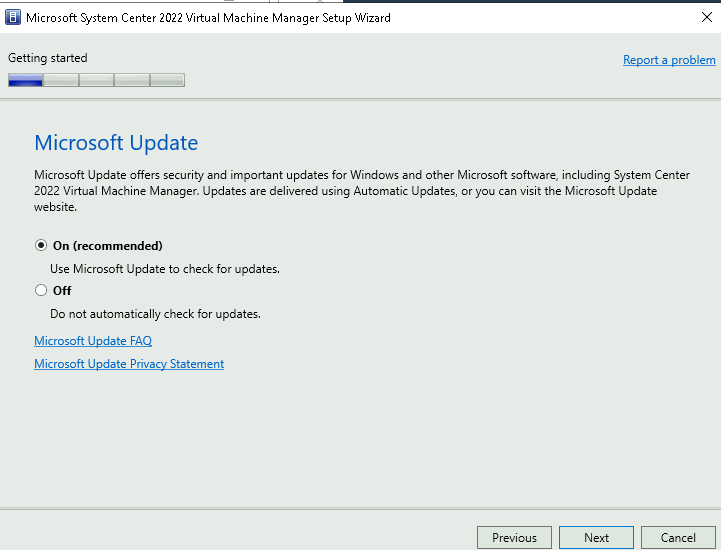

Enable Microsoft Updates

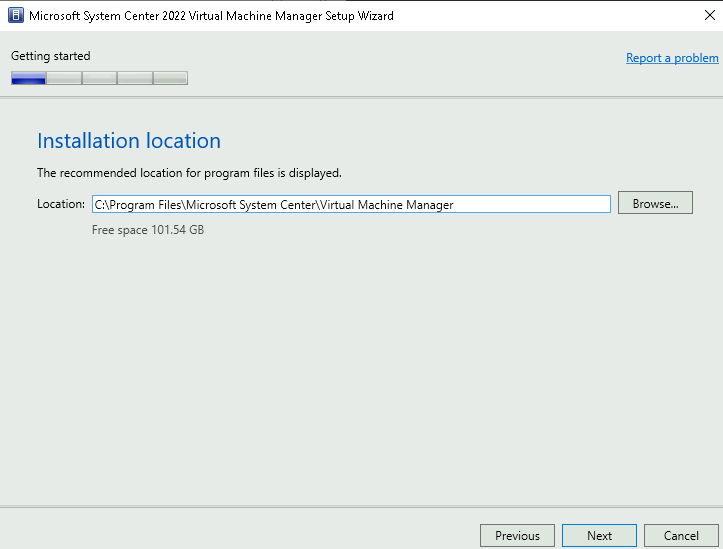

Select an installation location and click next, you may wish to put this on another drive for a production system

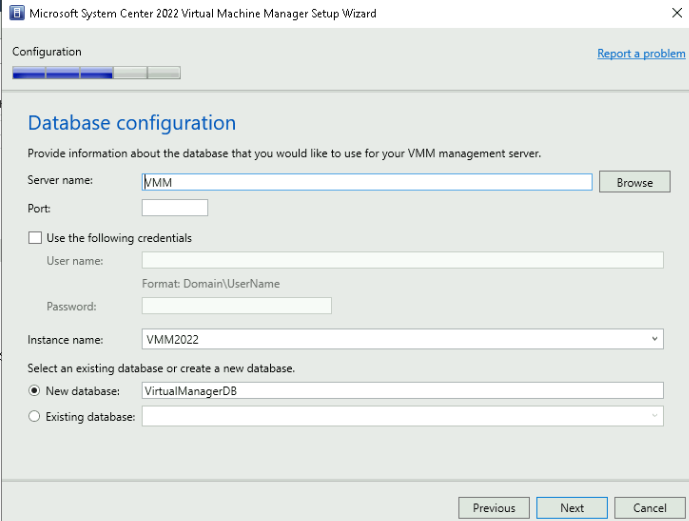

As the SQL server is local, the defaults here are fine, assuming you are logged in with the SCVMM service account. If not, tick the use the following credentials box and enter it, or log in again as that account

Click next

Give the SCVMM service account and password as well as the path for the DKM container from earlier

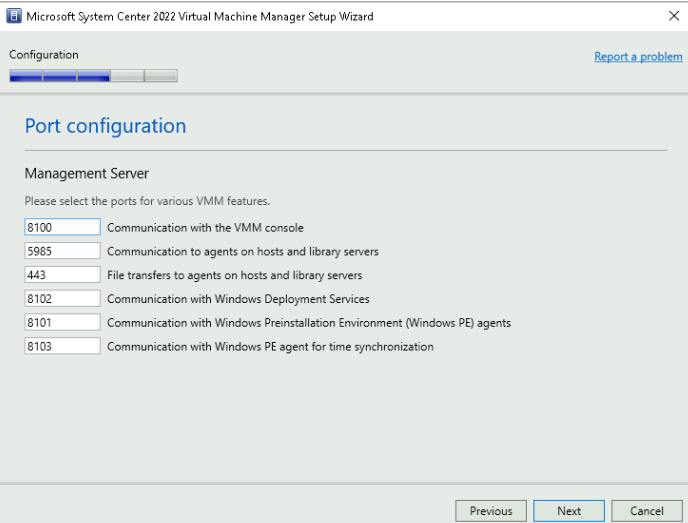

Leave the ports as default

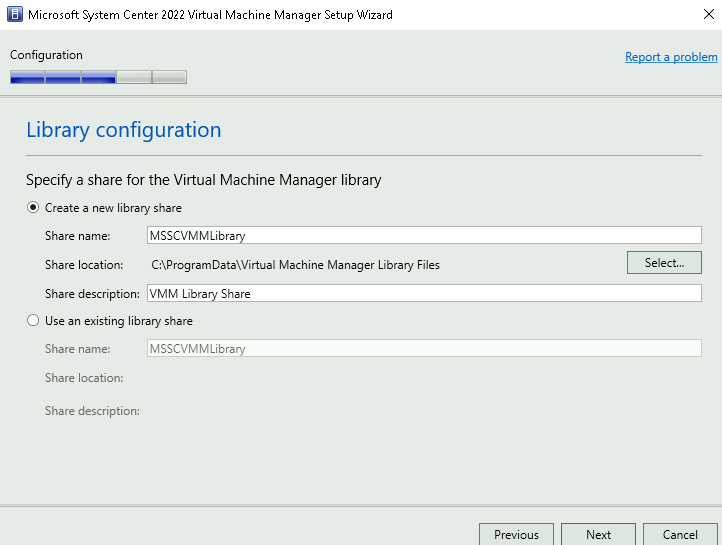

Specify a library location – for production you ideally want the base install from above on another drive, so this location will change as you don’t want it on C

When you are happy click install

3.5.1 – SPN Issues Post Installation

If you get an error about SPN installation after VMM is installed, such as

The Service Principal Name (SPN) could not be registered in Active Directory Domain Services (AD DS) for the VMM management server.

Then you need to run the following as a domain admin

setspn -u -s SCVMM/<MachineBIOSName> <VMMServiceAccount>

setspn -u -s SCVMM/<MachineFQDN> <VMMServiceAccount>

For a cluster, <MachineBIOSName> should be <ClusterBIOSName> and <MachineFQDN> should be <ClusterFQDN>

On the VMM server (or on each node in a cluster), in the registry, navigate to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft System Center Virtual Machine Manager Server\Setup.

Set VmmServicePrincipalNames to SCVMM/<MachineBIOSName>,SCVMM/<MachineFQDN>. For a cluster: SCVMM/<ClusterBIOSName>,SCVMM/<ClusterFQDN>

Lastly run

<Install-Path>\setup\ConfigureSCPTool.exe -install

Eg – Where SCVMM is installed to D:\SCVMM

D:\SCVMM\setup\ConfigureSCPTool.exe -install

To configure SCP

3.6 – Updating SCVMM

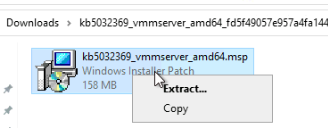

To patch SCVMM to 2022 Update Rollup 2 you will need to grab the server update here and the console update here

For this guide we will be taking a base SCVMM 2022 install to 2022 Update Rollup 2

First, do the VMM Server update

Double click the CAB file, then right click the MSP and extract it, I’ve extracted it to my downloads

Open PowerShell as an admin and run

msiexec.exe /update <packagename>

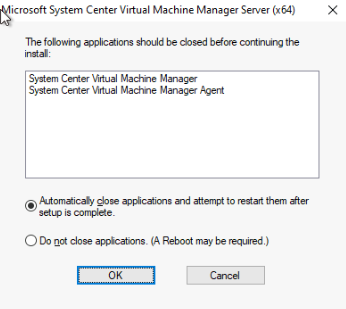

Ensuring you have the MSP for the VMM Server as the package, not the CAB file

Click ok here, this will automatically close anything open that the update needs closed

Repeat for the console CAB you downloaded, running the msiexec for the MSP
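The extract and patch steps can be scripted for both CABs. This is a rough sketch, the CAB file name and output folder are placeholders I have made up, so point them at whatever you actually downloaded, and run it from an elevated prompt

```powershell
# Assumptions: hypothetical file and folder names, replace with your downloaded CAB
$cab = "$env:USERPROFILE\Downloads\vmm-server-update.cab"
$out = "$env:USERPROFILE\Downloads\VMM-Update"

# Extract everything in the CAB to the output folder
New-Item -ItemType Directory -Path $out -Force | Out-Null
expand.exe $cab -F:* $out

# Find the extracted MSP and apply it, waiting for the installer to finish
$msp = Get-ChildItem -Path $out -Filter *.msp | Select-Object -First 1
Start-Process -FilePath msiexec.exe -ArgumentList "/update `"$($msp.FullName)`"" -Wait
```

Run it once for the server CAB and once for the console CAB, using a separate output folder for each so the wrong MSP is not picked up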

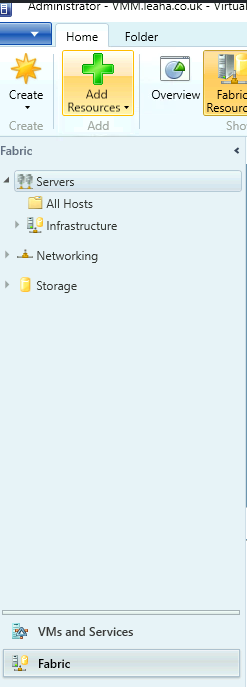

3.7 – Adding A Cluster

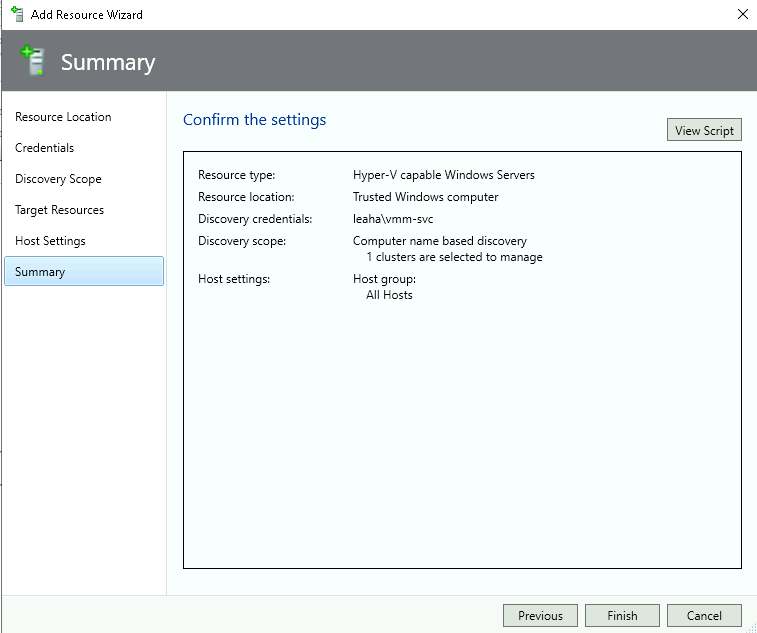

Go to Fabric on the bottom left, and click Add Resources

Then Hyper-V hosts and clusters

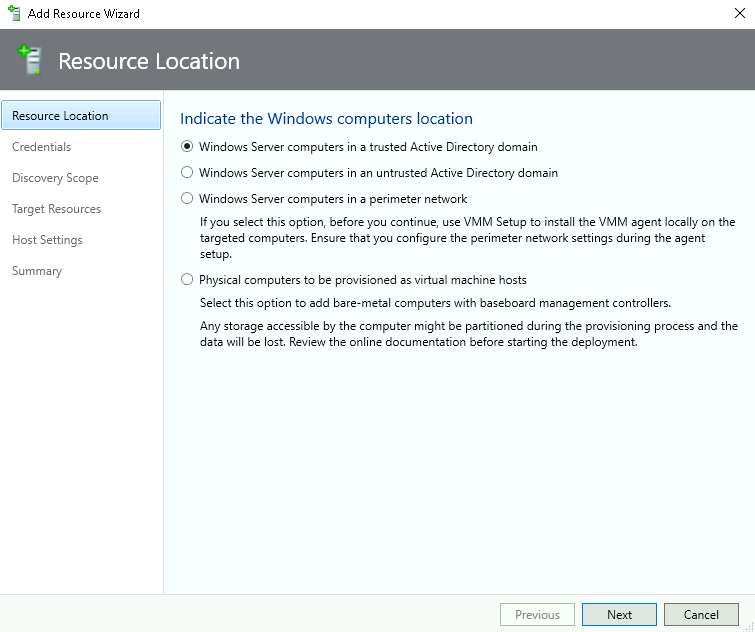

Then select the top option for Windows servers on the domain

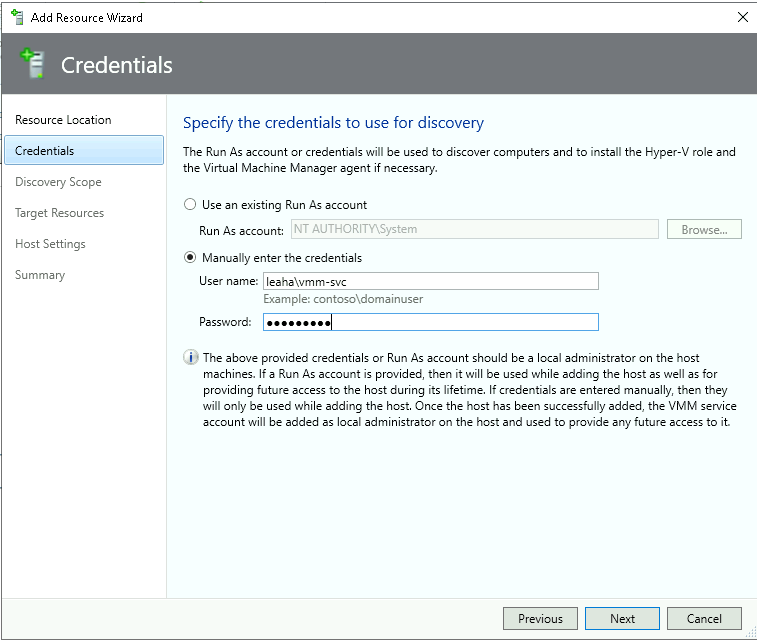

Select specify credentials and give the VMM Service Account, this should be added to the local administrators group on the Hyper-V hosts

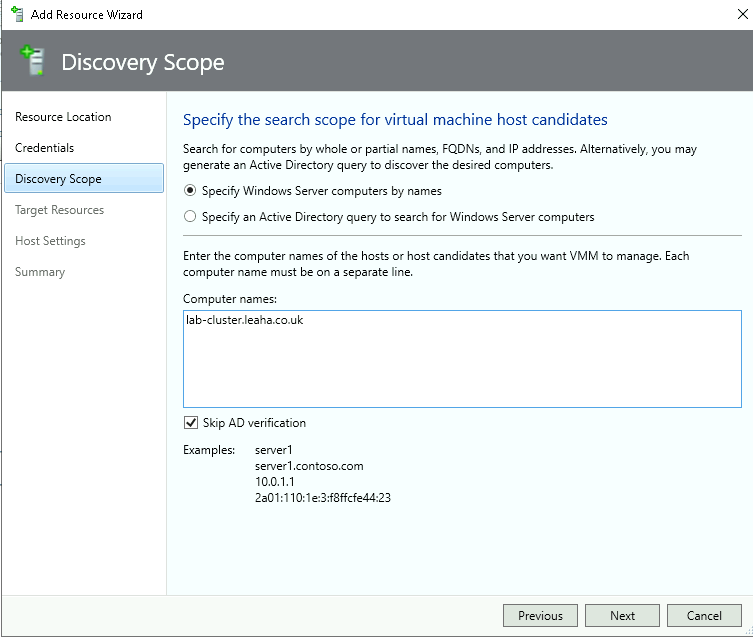

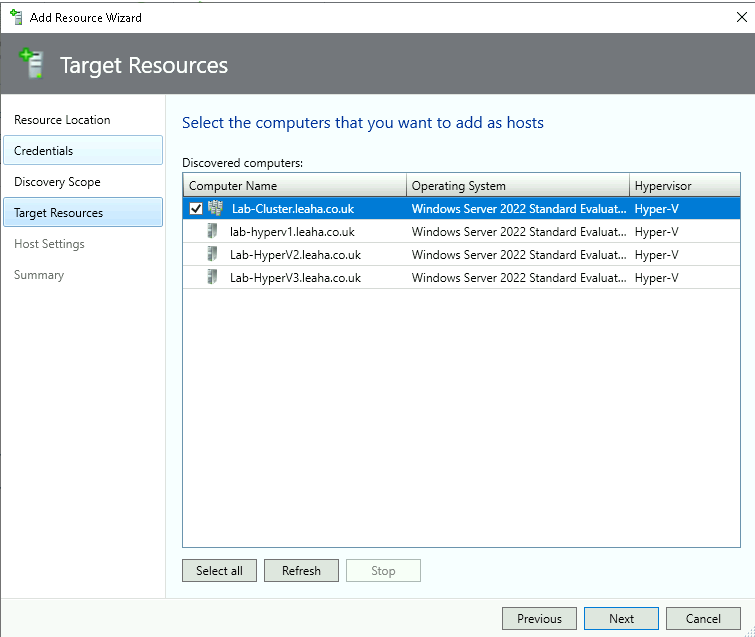

Specify the cluster

Check the cluster here, and then click next

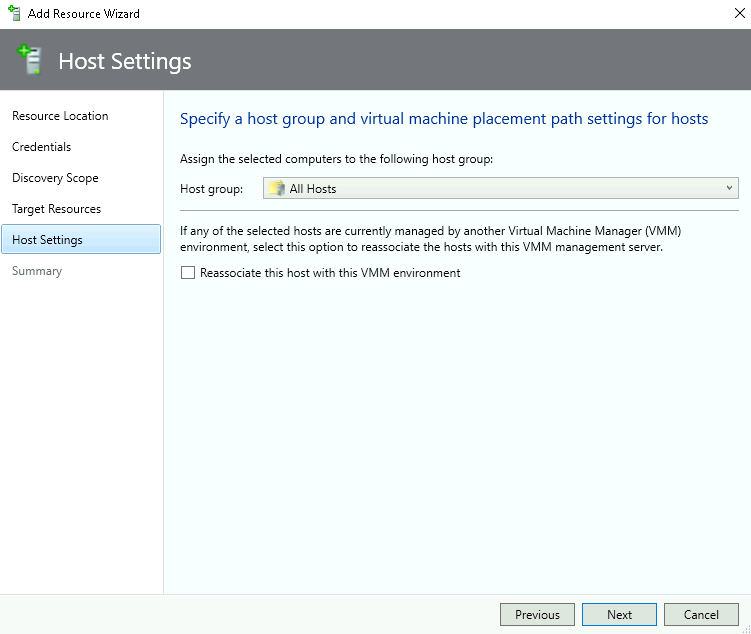

Add them to the all hosts group, we can move them later if needed

Click finish

Fantastic guide, thank you for putting this out.

For the NIC teaming set up, why did you disable VMQ on your NICs? Do you have a specific hardware/firmware issue with your physical NIC’s?

It was done to simulate a physical environment with physical NICs

Though it needs redoing properly with a 4 NIC setup and vNICs rather than 10 NICs, the SCVMM section wants dropping and the WAC section needs a bit more detail