In this article we are going to look at everything included with the default VVF package from Broadcom, with no extras added, focusing on vSphere and vSAN, and how to make the most of it. Quotes have gone up a lot, but there is also a lot more on offer that most people didn’t have before, so there is a small silver lining to the price hikes

To start, what do you get for your money in VVF? According to Broadcom, this is

- vCenter Server

- ESXi

- vSphere IaaS control plane

- vSAN Enterprise (250 GiB per core per host) – Broadcom’s article for the change is here

- VMware Aria Operations

- VMware Aria Operations for Logs

- VMware Aria Suite Lifecycle

Everyone is very familiar with vCenter and ESXi, and not much has changed, it’s still vSphere. The main advantage of VVF over lower tiers is that you get DRS, a must have, and Distributed Switches, which are very nice to have, though you are likely already using these in your environment

vSAN includes 250GiB/core for each host. Since this was uplifted from the old 100GiB/core, it is now a solid option compared to buying a standard enterprise SAN, like Dell’s PowerStore, as you can just use vSAN instead

You likely fit the bracket of a typical 4 node cluster with single socket, 32 core CPUs, for example the Dell R6515. This gives you ~8TiB/server for a total of ~32TiB in the cluster, so you could use 4×1.92TB NVMe SSDs per node. This is comparable to a SAN. Taking Dell’s PowerStore as an example, Dell sell this with a guarantee, for virtualised workloads with compressible data, of 4x data saving, so an effective capacity of ~100TiB. vSAN can also use compression and deduplication, so it should be comparable under ideal circumstances
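As a quick sanity check on those figures, here is a minimal Python sketch of the arithmetic. The function names are mine, and it only assumes the 250GiB/core entitlement quoted above and that drive vendors quote decimal terabytes.

```python
GIB_PER_CORE = 250  # VVF vSAN entitlement per core, as quoted above


def vsan_entitlement_tib(hosts: int, cores_per_host: int,
                         gib_per_core: int = GIB_PER_CORE) -> float:
    """Return the licensed vSAN capacity for the cluster in TiB."""
    total_gib = hosts * cores_per_host * gib_per_core
    return total_gib / 1024


def raw_capacity_tib(hosts: int, disks_per_host: int, disk_tb: float) -> float:
    """Convert vendor terabytes (10^12 bytes) into TiB (2^40 bytes)."""
    total_bytes = hosts * disks_per_host * disk_tb * 10**12
    return total_bytes / 2**40


if __name__ == "__main__":
    # 4 hosts, 32 cores each, 4x 1.92TB SSDs per host
    print(f"Entitlement: {vsan_entitlement_tib(4, 32):.2f} TiB")
    print(f"Raw disk:    {raw_capacity_tib(4, 4, 1.92):.2f} TiB")
```

For the example cluster the entitlement works out at 31.25TiB, with just under 28TiB of raw disk, so the suggested disks fit inside the included capacity.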

We can also leverage erasure coding, RAID 5, within vSAN on a 5+ node cluster to significantly increase our usable storage capacity, at the expense of network traffic and a little performance, using vSAN Storage Policies
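To put a rough number on what erasure coding buys you, this small Python sketch compares usable capacity under mirroring and RAID 5. The overhead multipliers are my assumptions based on the stripe layouts named in the dictionary keys, and it ignores slack space, compression and deduplication, so treat it as a ballpark only.

```python
# Raw capacity consumed per unit of VM data, by storage policy layout.
# Assumed multipliers: a mirror stores two copies, RAID 5 stores data plus one parity.
OVERHEAD = {
    "RAID-1 (FTT=1, mirror)": 2.0,
    "RAID-5 (3+1)": 4 / 3,
    "RAID-5 (4+1)": 5 / 4,
}


def usable_tib(raw_tib: float, overhead: float) -> float:
    """Return the capacity left for VM data after protection overhead."""
    return raw_tib / overhead


if __name__ == "__main__":
    raw = 32.0  # TiB, the example cluster total from above
    for policy, factor in OVERHEAD.items():
        print(f"{policy}: {usable_tib(raw, factor):.1f} TiB usable")
```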

So given vSAN can do everything your SAN can, you should probably look at it for the greatest flexibility and as a cheaper option. With a SAN the bulk of the cost is in the appliance, not the disks, so if we spec our servers with disks and utilise vSAN, this should work out significantly cheaper, and possibly even pay for the VMware licensing over three years, though this varies heavily with the price your supplier can get

So with that in mind, it’s 100% worth asking for a quote for some vSAN ready nodes versus, for example, a PowerStore 1200T, then comparing the cost and business requirements before committing to a particular setup. This is especially true since, as we’ll dive deeper into below, vSAN can do everything a PowerStore, or an equivalent Alletra from HPE, can, and you’ll be buying VVF for your VMware estate whether you go with vSAN or a traditional SAN

We will start with installing ESXi on your nodes and getting vCenter deployed with vSAN, then move into setting up our cluster networking, for which there are a few options, and then the additional features of vSAN, some of which you will have seen, some of which will likely be new

The deployment below will focus on two distinct environments, as they serve to show different features and the hardware I have for the physical lab doesn’t support them all

First, we have the vSAN ESA virtual lab. This will have the new ESA features, mainly the snap service appliance, but the deployment is also a little different compared to the older OSA. It requires NVMe disks, and if you are building a new cluster this will be what you should be going with

Servers in this environment belong to the leaha.co.uk domain

Hosts in this environment use a more typical 6 NIC deployment model, with two NIC cards, a quad port and a dual port. Management was chosen to not have ASIC redundancy, as it is the only thing that, should a network card fail, won’t immediately cause a P1 in your environment. The ports were set up as

- 2x Management/vMotion vSwitch, 2x ports from the quad port card

- 2x vSAN VDS, 1x port from each NIC card

- 2x VM VDS, 1x from each NIC card
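If you want to sanity check a layout like this before cabling, a small Python sketch can flag which switches have both uplinks on one card. The port numbers here are hypothetical, only the card assignments come from the list above.

```python
# Each switch maps to the (card, port) pairs used as its uplinks.
# Port numbers are illustrative placeholders.
LAYOUT = {
    "Management/vMotion vSwitch": [("quad", 1), ("quad", 2)],
    "vSAN VDS": [("quad", 3), ("dual", 1)],
    "VM VDS": [("quad", 4), ("dual", 2)],
}


def has_card_redundancy(uplinks):
    """True when the uplinks are spread over more than one physical card."""
    return len({card for card, _port in uplinks}) > 1


def single_card_switches(layout):
    """Return the switches that would go down if a single card failed."""
    return [name for name, uplinks in layout.items()
            if not has_card_redundancy(uplinks)]


if __name__ == "__main__":
    print("No card redundancy:", single_card_switches(LAYOUT))
```

With this layout only the management switch is reported, which is the intended trade off.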

We then have the physical vSAN OSA lab. As this uses physical servers, it better shows performance based tests and is a little more true to real world deployments in some areas. As it’s repurposed hardware, I don’t have NVMe disks, so I couldn’t use just this lab

Servers in this environment belong to the istlab.co.uk domain and were used in VCF, so names might reference this. All features in vSAN are available in both VVF and VCF, so this won’t impact what you can do with your VVF subscription

Spec wise we have

- 3x R640 with 2x Xeon 6130, ~512GB RAM, 4x 1.92TB SSD for capacity, 4x 10GbE NICs and 1x BOSS Card

- 1x R740 with the same spec as the R640s, but it hasn’t got a BOSS card and is using a SATA SSD I had available for boot

Networking in this cluster has fewer NICs. Aside from this being all I have, it was used to demonstrate how you might deploy this in an environment with more networking constraints, be that the number of ports on servers, or on your core switches

You will want to keep your two high bandwidth consumers, vSAN and vMotion, separate. The ports were set up as

- 2x Management/vMotion vSwitch, 1x port from each NIC card

- 2x VM/vSAN VDS, 1x port from each NIC card


1 – Deployment

Before we do anything we need our hypervisor, ESXi, installing. This assumes you have already set up your server’s IPMI, iDRAC for Dell and iLO for HPE, so you can remotely access the machine, and that you know how to mount virtual media. Alternatively, you can do this directly at the machine

1.1 – ESXi

1.1.1 – Installation

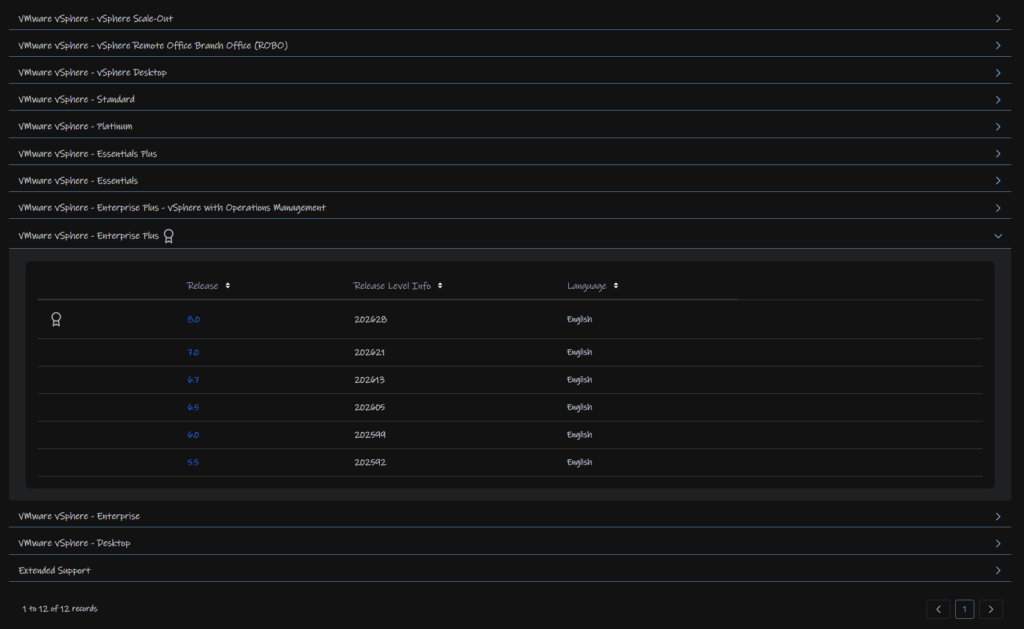

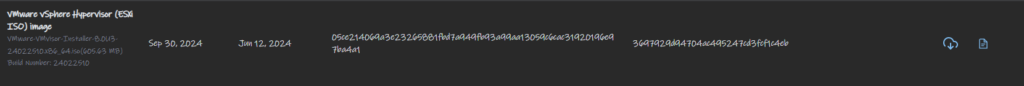

In order to install ESXi you’ll need the ESXi ISO from Broadcom, you can find this under vSphere

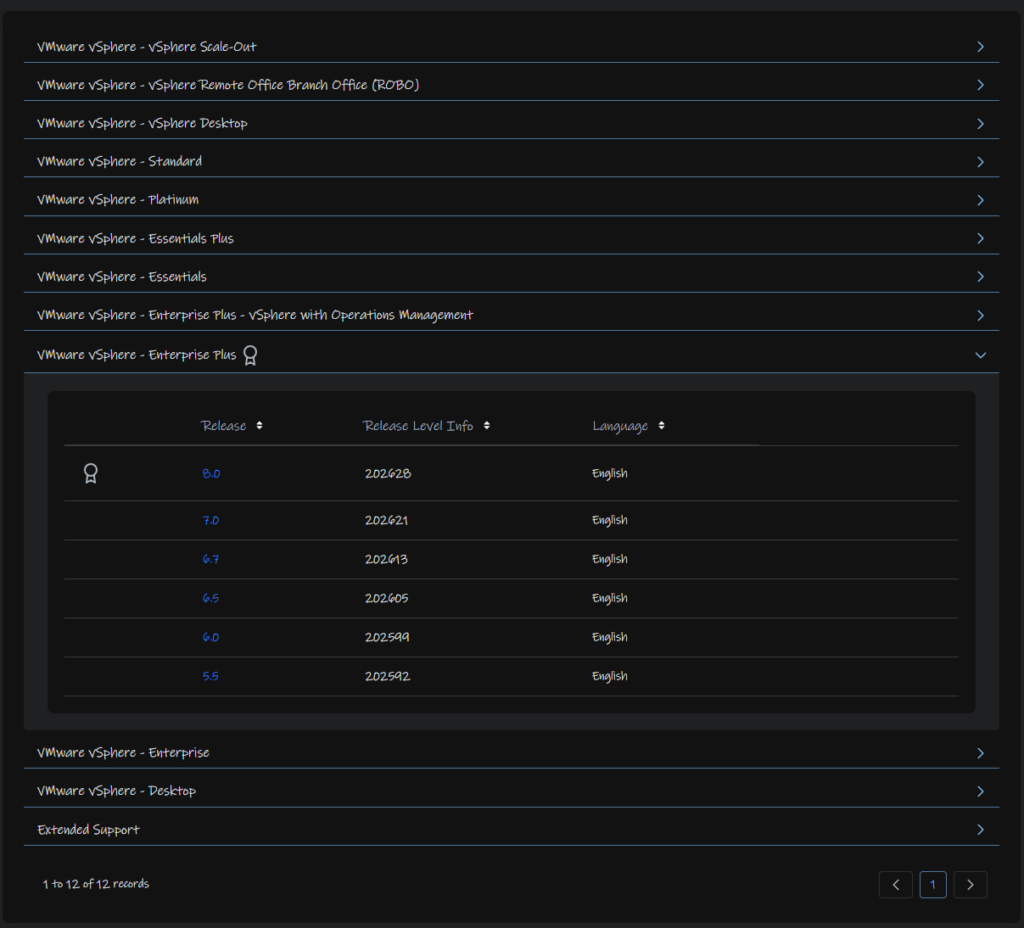

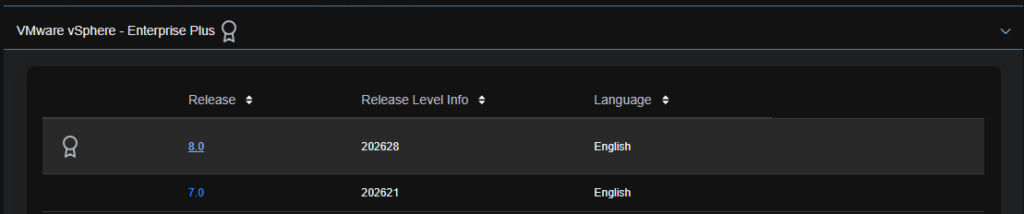

Expand whichever section you are licensed for, I have Enterprise Plus, and click the version, which should be 8.0

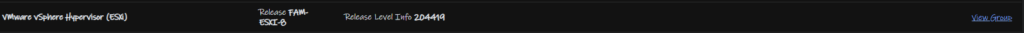

Click View Group on the vSphere Hypervisor

And Download the ISO Image

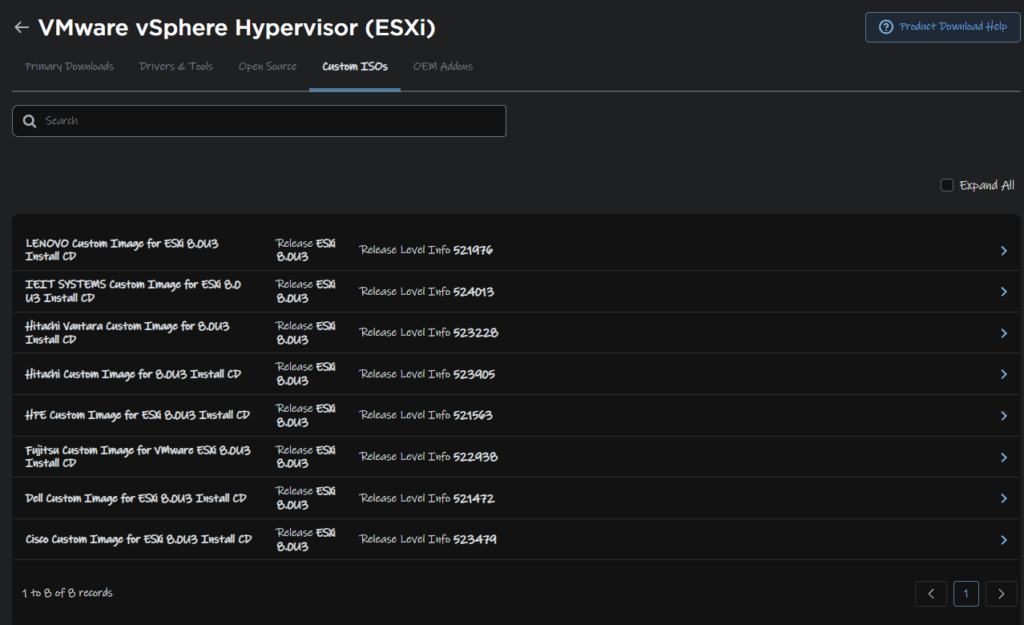

I am using the standard one, however there are vendor customised ISOs that you will want to use for your vendor, these are located at the top under Custom ISOs

Mount this to the server, either by using Rufus to create a bootable USB, or by mounting it to your server’s virtual CD-ROM in the IPMI, iDRAC for Dell and iLO for HPE

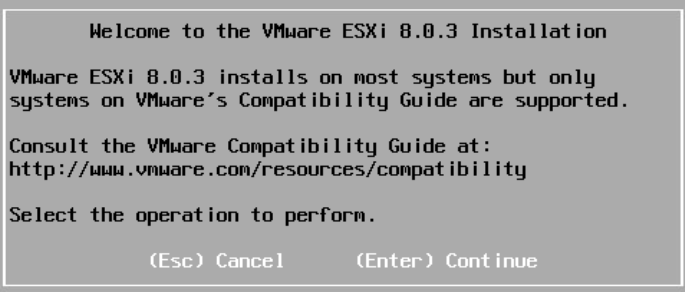

Once the server has booted the ESXi installer, you’ll have this screen, press Enter to continue

Accept the EULA with F11

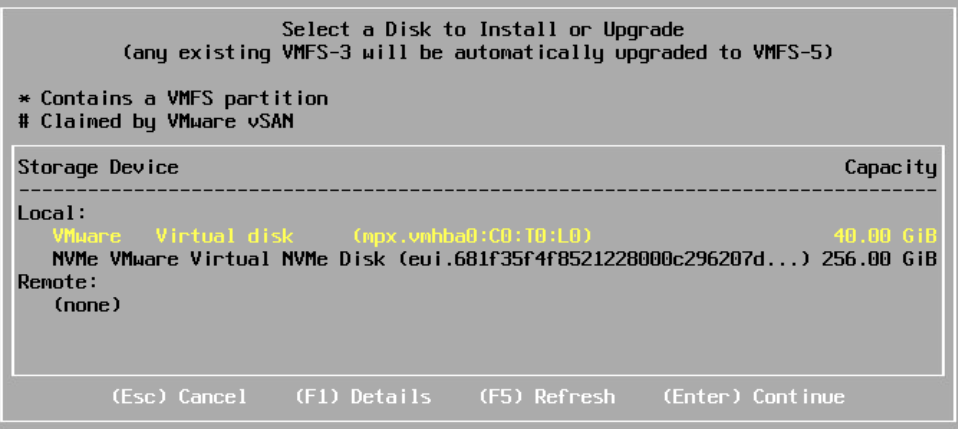

It will then scan for bootable devices, for a production system this should be something in RAID 1

Examples are Dell’s BOSS card

For HPE G11 you should have the NS204i-U, or for G10 systems the NS204i-P, which is a PCIe card

As this is a lab, I have a virtual disk, and will be using the 40GB one by making sure it’s highlighted in yellow and pressing Enter to continue

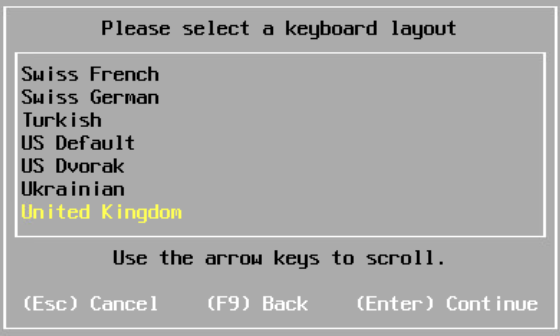

Select your keyboard layout and hit Enter

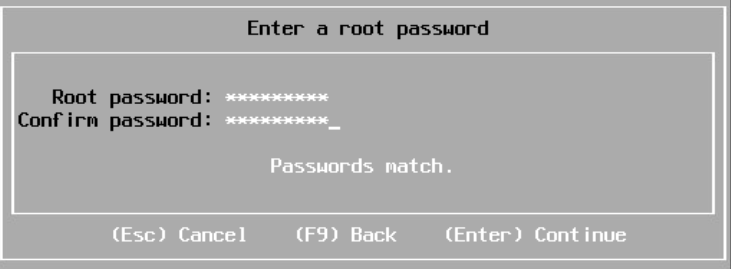

Set a root password, use something easy to type for now, we can set a secure random one later

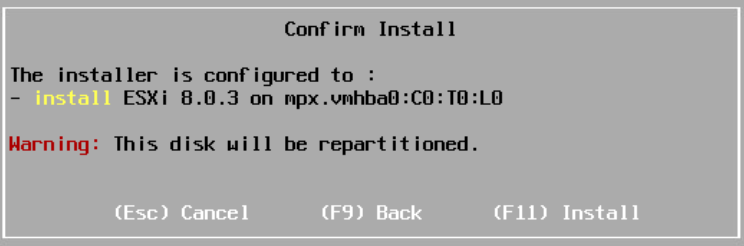

Then press F11 to install

Once that’s done, reboot the server when prompted

1.1.2 – Network Config

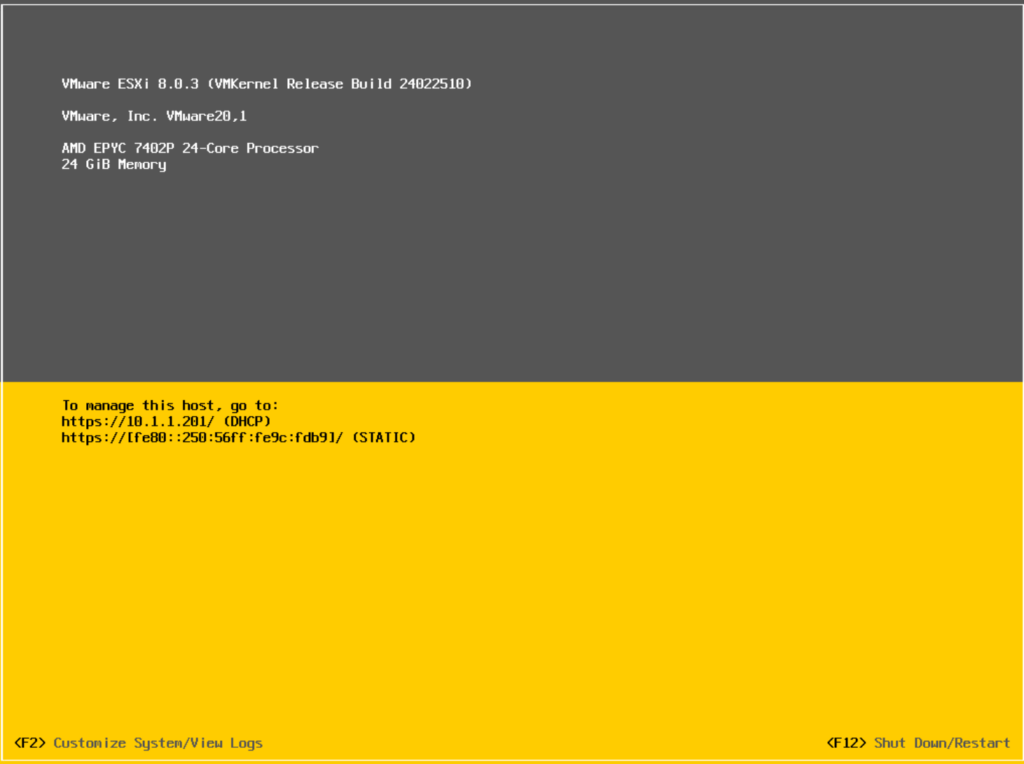

Once ESXi has installed, we’ll need to set the networking up. When it’s booted back up, your screen should look something like this

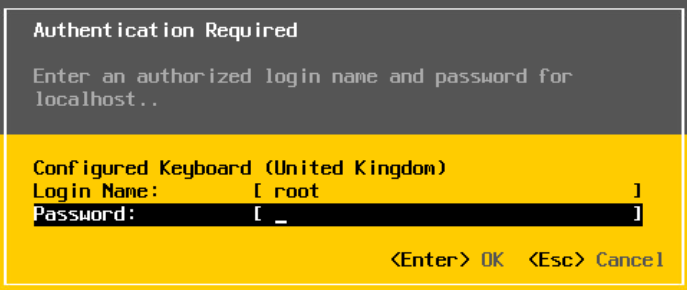

Press F2 to login and enter the root password

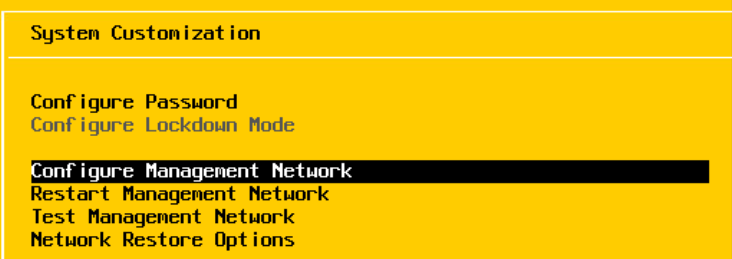

Then use the down arrow key to scroll to Configure Management Network and hit Enter

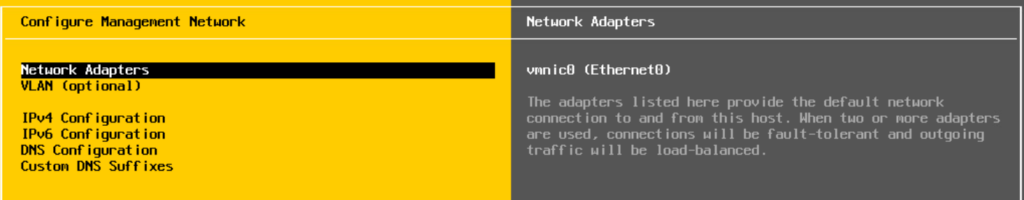

Under Network Adapters, make sure the adapter you want to use for management is selected. Ideally, the switch port for this should be set as either access or tagged for your management VLAN

You can select VLAN to add a tagged VLAN, or leave blank if the management network is set as the access VLAN on the port

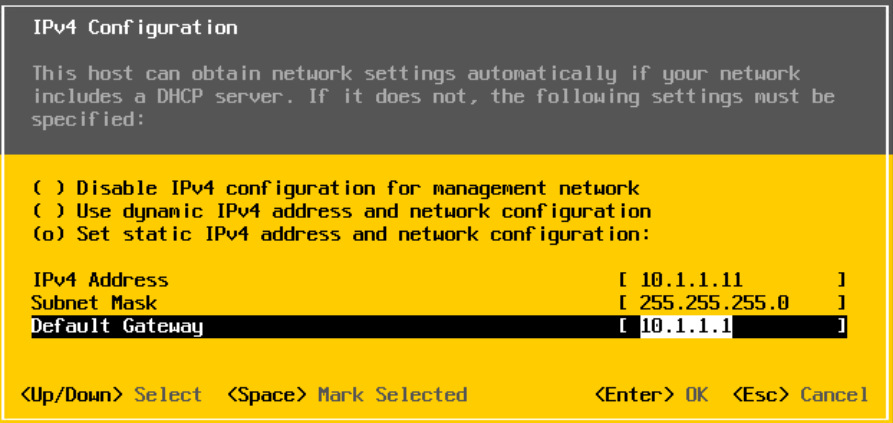

Press Enter on IPv4 to edit this setting

Set the host as static by scrolling down to the third option at the top and hitting Space, then add your IP, subnet mask, and gateway. Once you are done, press Enter

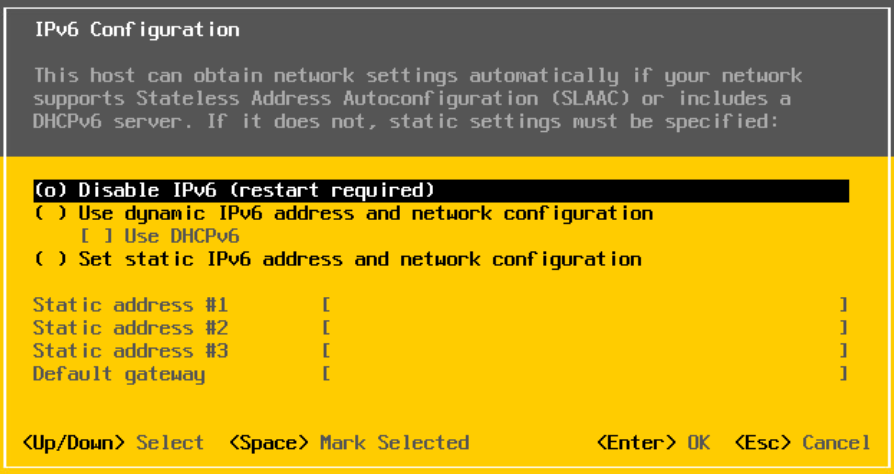

For IPv6, use Space to select disable, unless you are using it, and press Enter

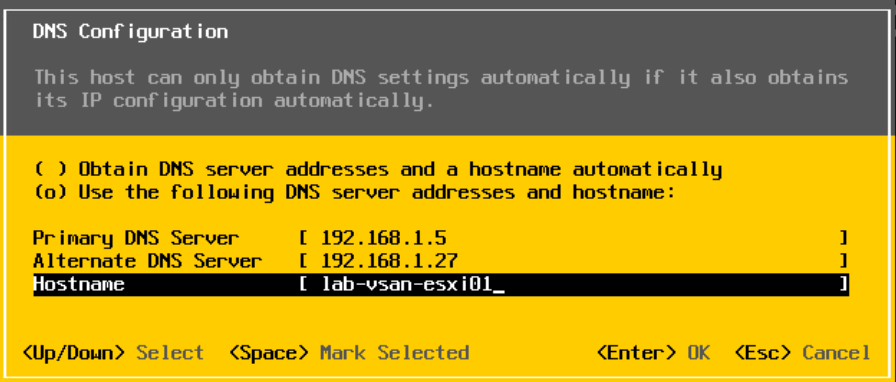

For DNS, use space to select the second option and add your DNS servers and a hostname, then press Enter

Under DNS Suffixes, add your domain, then press Enter

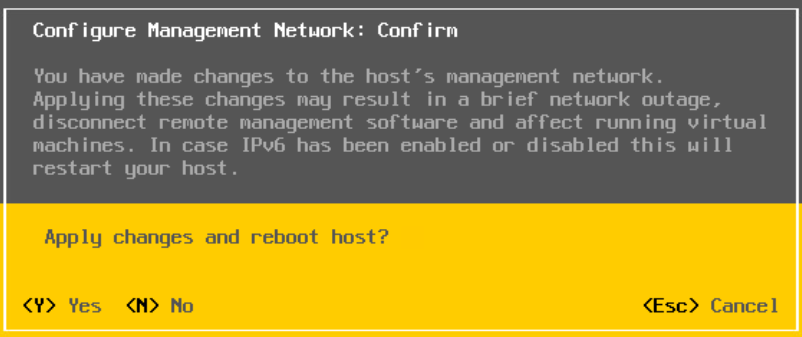

Now press Escape to go back and press Y when prompted to apply changes, disabling IPv6 requires a reboot

1.1.3 – Post Deployment Tasks

Now ESXi is deployed and the networking is setup, we can get to the Web UI on

https://fqdn/ui

Or

https://ip/ui

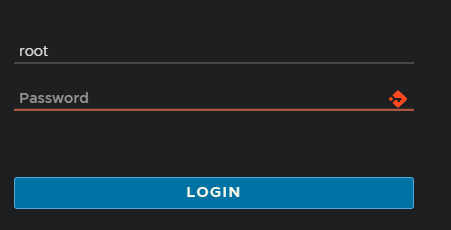

Login with the root password

Uncheck the CEIP box and click ok

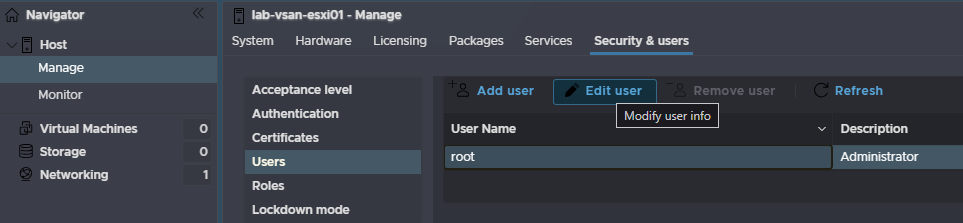

Then head to Manage on the left, Security & Users at the top, Users to the left, click the root account and click Edit User

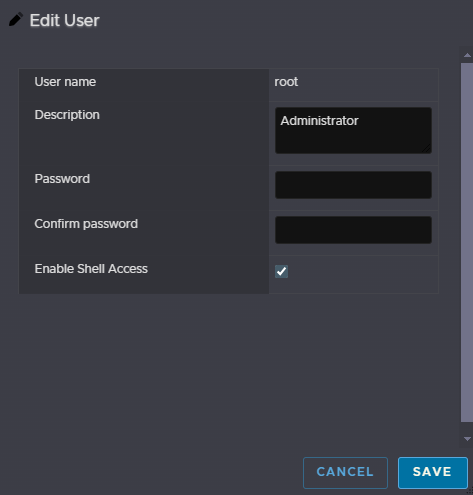

Here, you can set the root password to something more secure

Now, we need somewhere to put the vCenter networking wise. This may vary depending on your networking, but most deployments have a management network, and ESXi and vCenter will usually be on the same network

If the physical switch port that management was set on, VMNIC0 for me, has this management VLAN as an access type, meaning all untagged traffic is placed in the access VLAN, then you don’t need to do anything. If this VLAN is tagged, it needs setting in ESXi like the management port was in the network setup

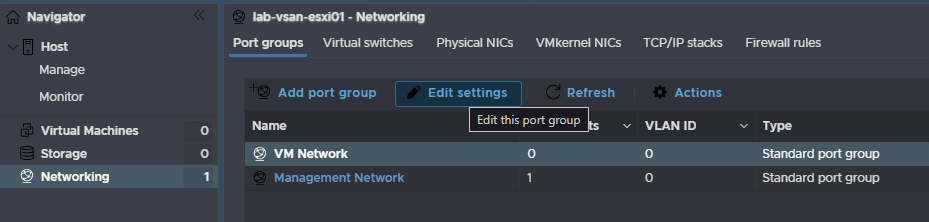

For the tagged part, we need to go to Networking on the left, Port Groups at the top, select the VM Network, and click Edit Settings

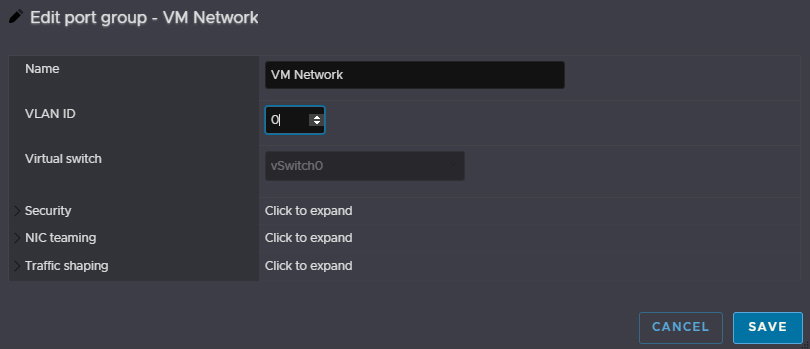

Then set the VLAN ID and click Save. The vCenter will be deployed here, so this is usually the same VLAN as ESXi, set it to 0 to use the access VLAN on the switch

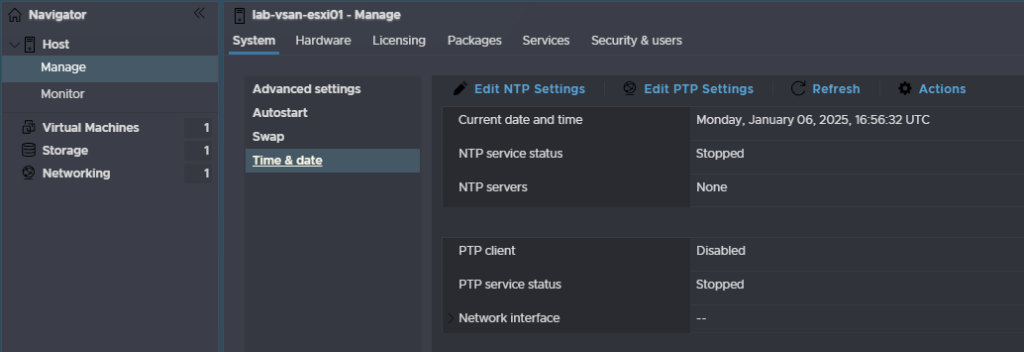

Lastly, we will want to configure NTP, this is more important if you are using vSAN

Head to Manage/System/Time & Date and click Edit NTP Settings

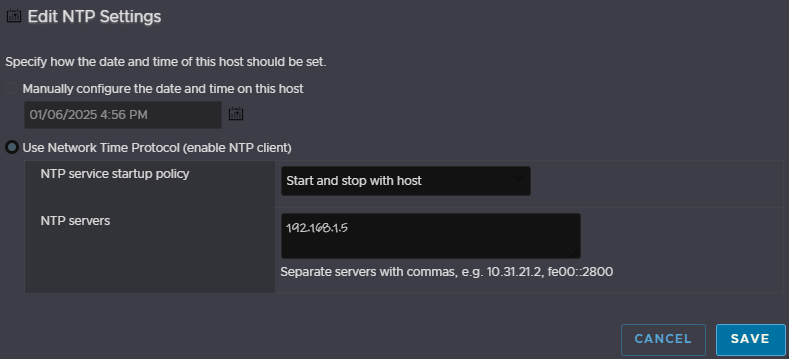

Check the radio button to use NTP and set the service to start and stop with the host, then add your NTP server and click Save

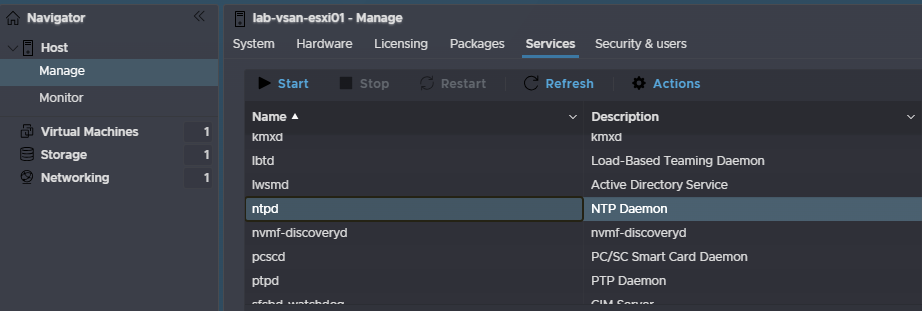

Then head to Manage/Services and find ntpd, click it and then click Start

1.2 – vCenter/vSAN

Now we have ESXi set up with a secure root password and some temporary networking configured for the vCenter, which we can move later, we need to get the vCenter appliance deployed. This will deploy vSAN at the same time, on our first node to begin with

1.2.1 – Stage 1

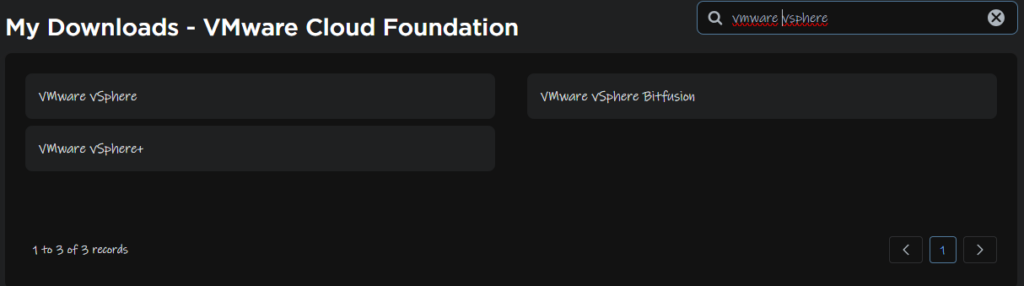

We will need to download the vCenter ISO from the Broadcom portal, you can get it from the Broadcom downloads page by Searching and selecting “VMware vSphere”

Expand what you have an entitlement to. As I have VCF, enterprise is available for me, but it’s the same for VVF. Then select release 8

Click View Group on vCenter Server

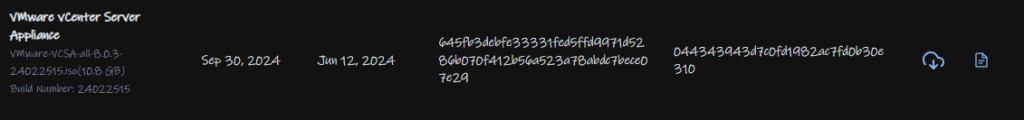

Then download the ISO file

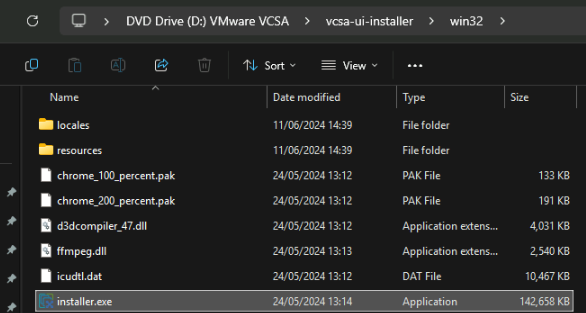

Double click the ISO to mount it, then head to vcsa-ui-installer/win32 and double click the Installer.exe file

If you see this click Run

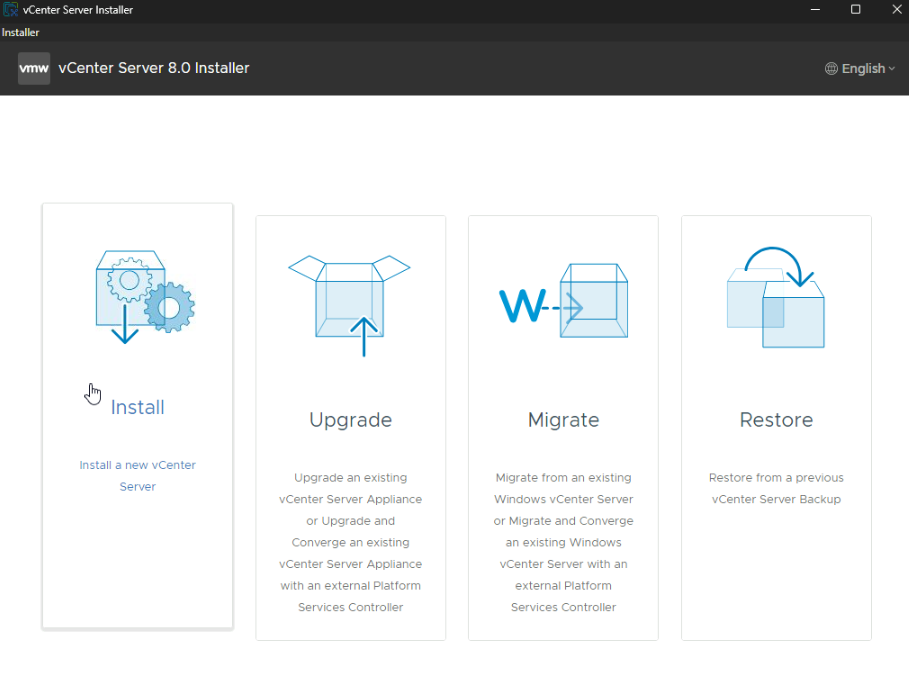

Click Install

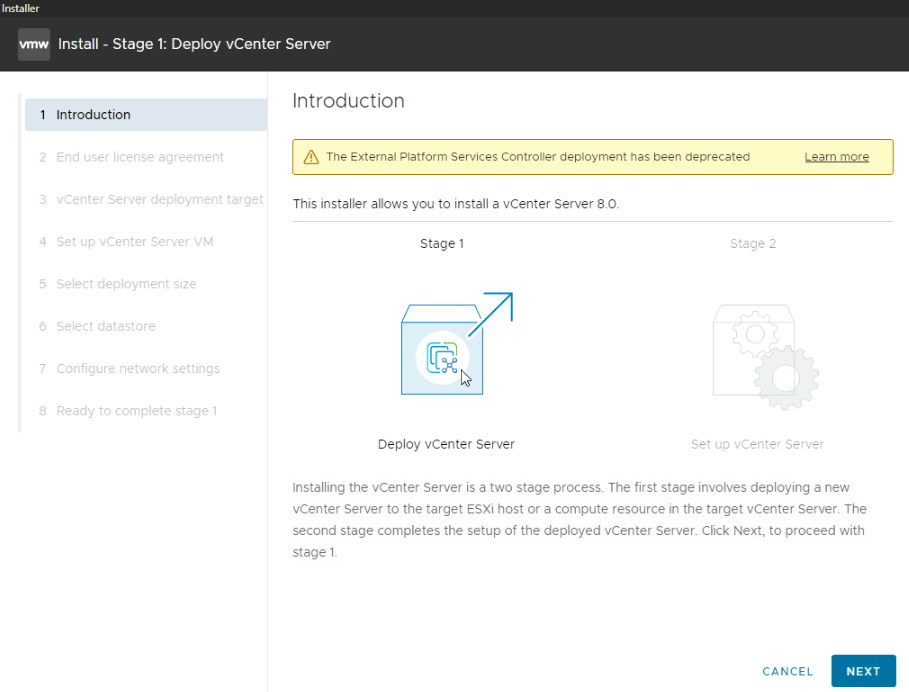

Click Next

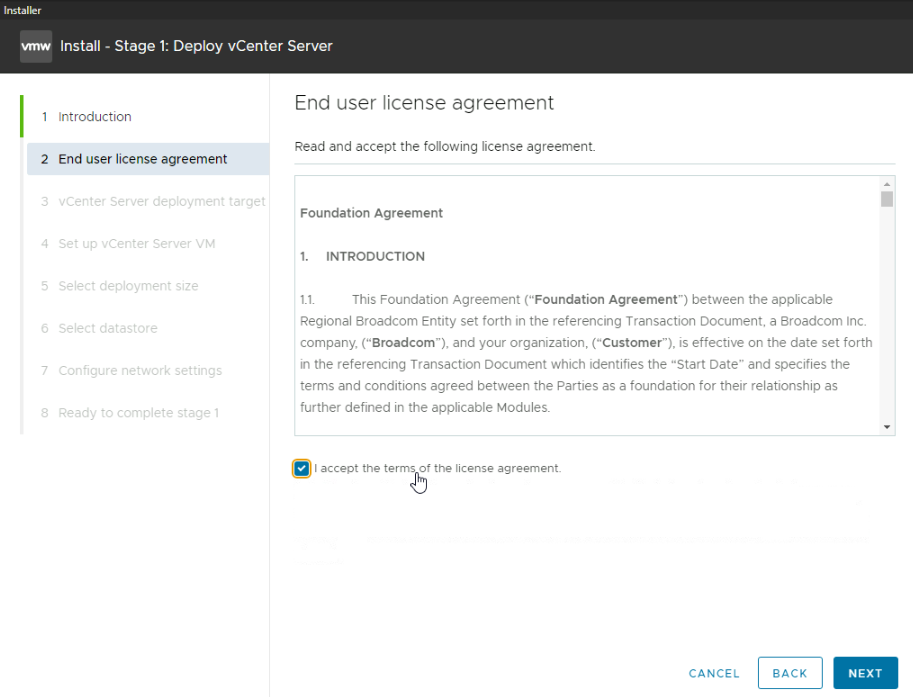

Accept the EULA and click Next

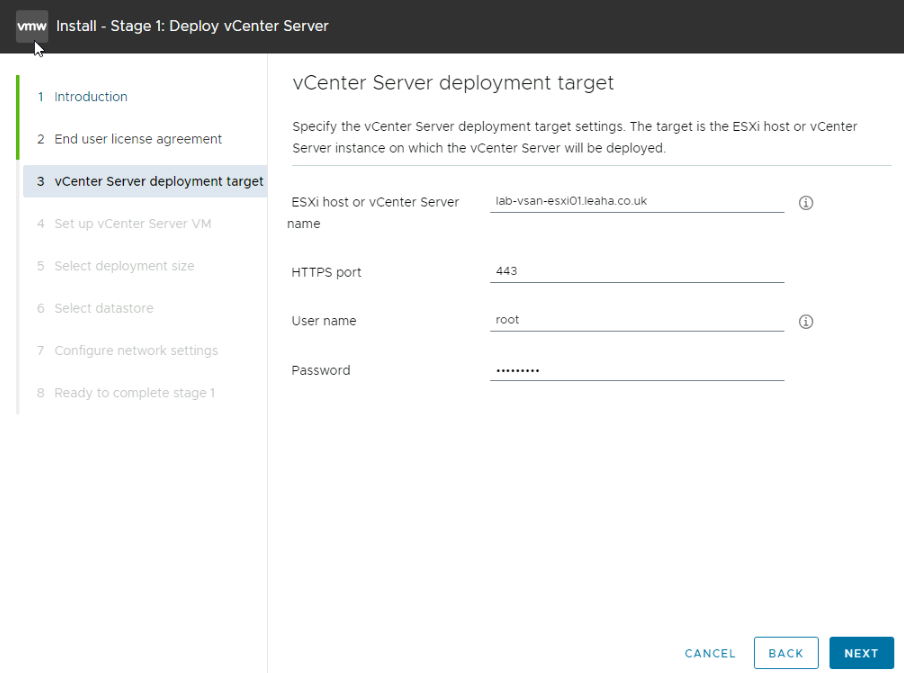

Add the ESXi node’s FQDN, leave the HTTPS port on 443, and add the root user credentials, then click Next

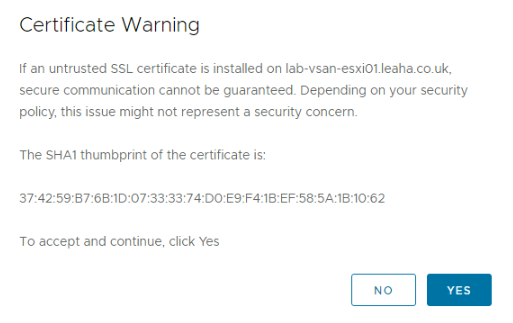

Accept the SSL certificate

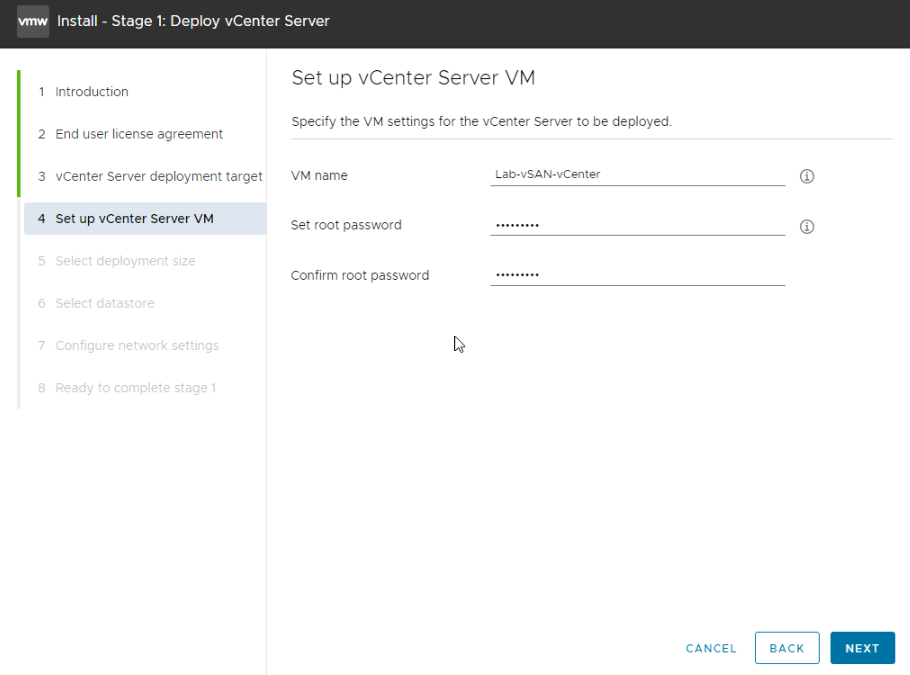

Then add a name for the VM, this isn’t the machine name or FQDN, set a root password and click Next

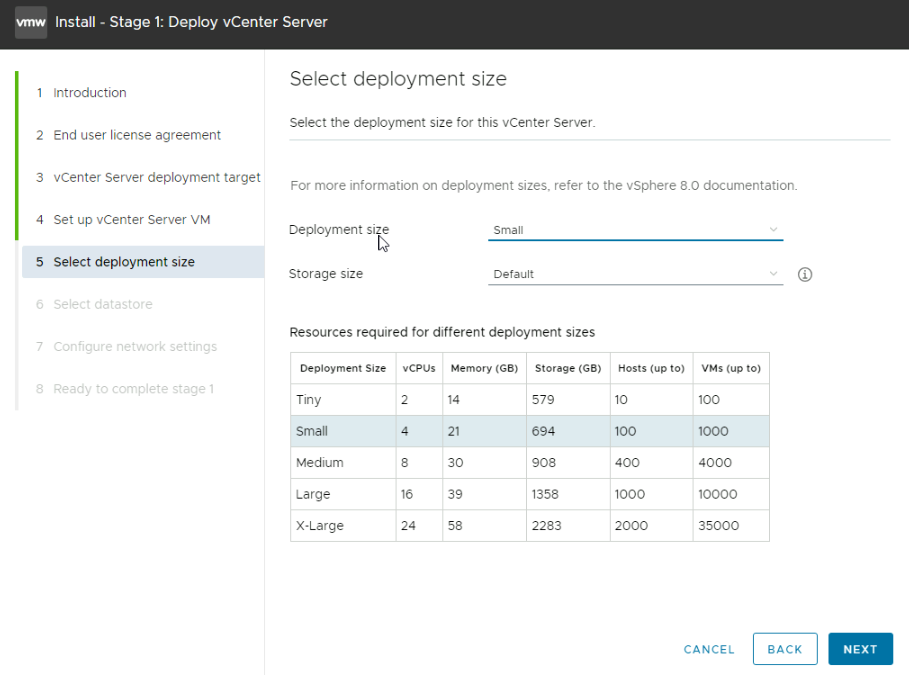

Then select the deployment size. For production, Small should be the smallest you deploy, as Tiny is only suitable for proof of concept labs. Otherwise, base the size on the chart of VMs and ESXi hosts

E.g. if you have 110 hosts and 500 VMs, you would want Medium
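The sizing decision can be sketched in a few lines of Python. The host and VM limits below are the ones I recall from the vCenter 8 installer chart, so treat them as assumptions and confirm against the table shown in your installer.

```python
# (size, max hosts, max VMs), assumed from the installer's sizing chart.
SIZES = [
    ("Tiny", 10, 100),
    ("Small", 100, 1000),
    ("Medium", 400, 4000),
    ("Large", 1000, 10000),
    ("X-Large", 2000, 35000),
]


def pick_size(hosts: int, vms: int, production: bool = True) -> str:
    """Return the smallest deployment size that covers both limits."""
    for name, max_hosts, max_vms in SIZES:
        if production and name == "Tiny":
            continue  # Tiny is only suitable for proof of concept labs
        if hosts <= max_hosts and vms <= max_vms:
            return name
    raise ValueError("Inventory exceeds the largest deployment size")


if __name__ == "__main__":
    print(pick_size(110, 500))  # the example from the text
```

Note that either limit can push you up a size, here it is the host count rather than the VM count.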

Then click Next

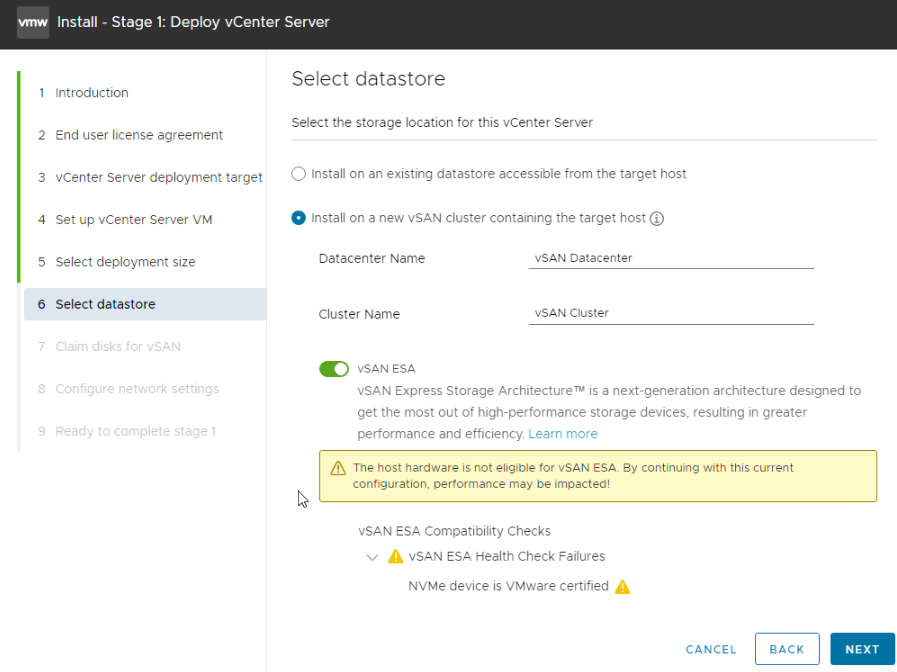

We then need to select the option to install on a new vSAN cluster and give the datacenter and cluster names, I am using the defaults for this lab. In 2025 we should be using NVMe disks, so enable vSAN ESA

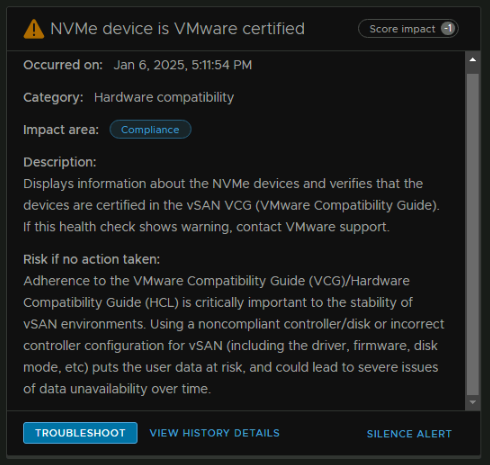

Now these must be HCL certified disks. When you bought the servers this should have been stated, or the node will be marked as vSAN Ready. VMware will not support you if vSAN doesn’t work or has issues and the disks and firmware are not on the HCL

As I am using a virtual lab, it fails here and tells me they aren’t certified, you shouldn’t see this error on certified hardware

Once you are done, click Next

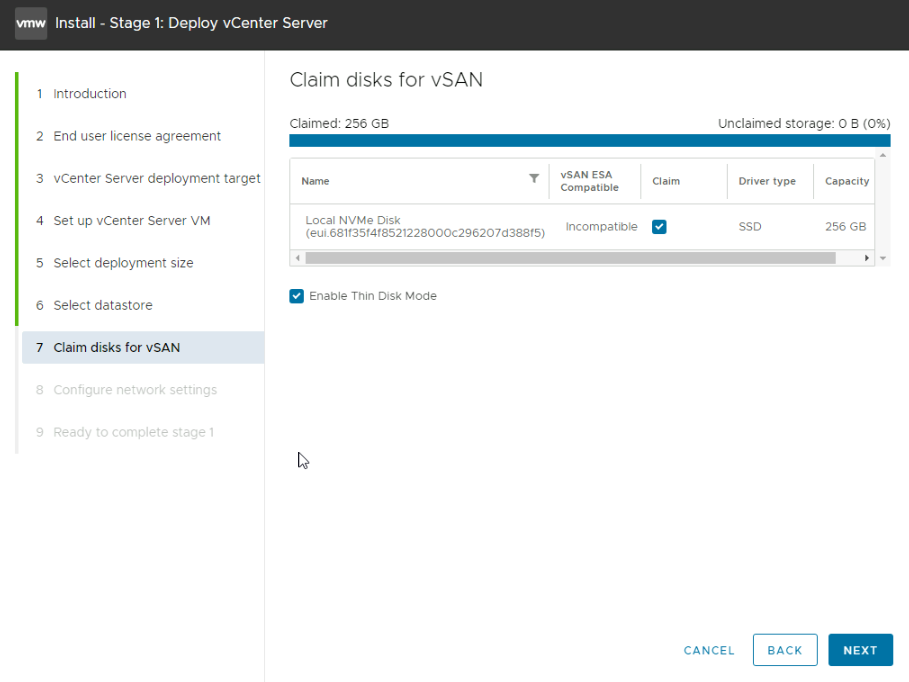

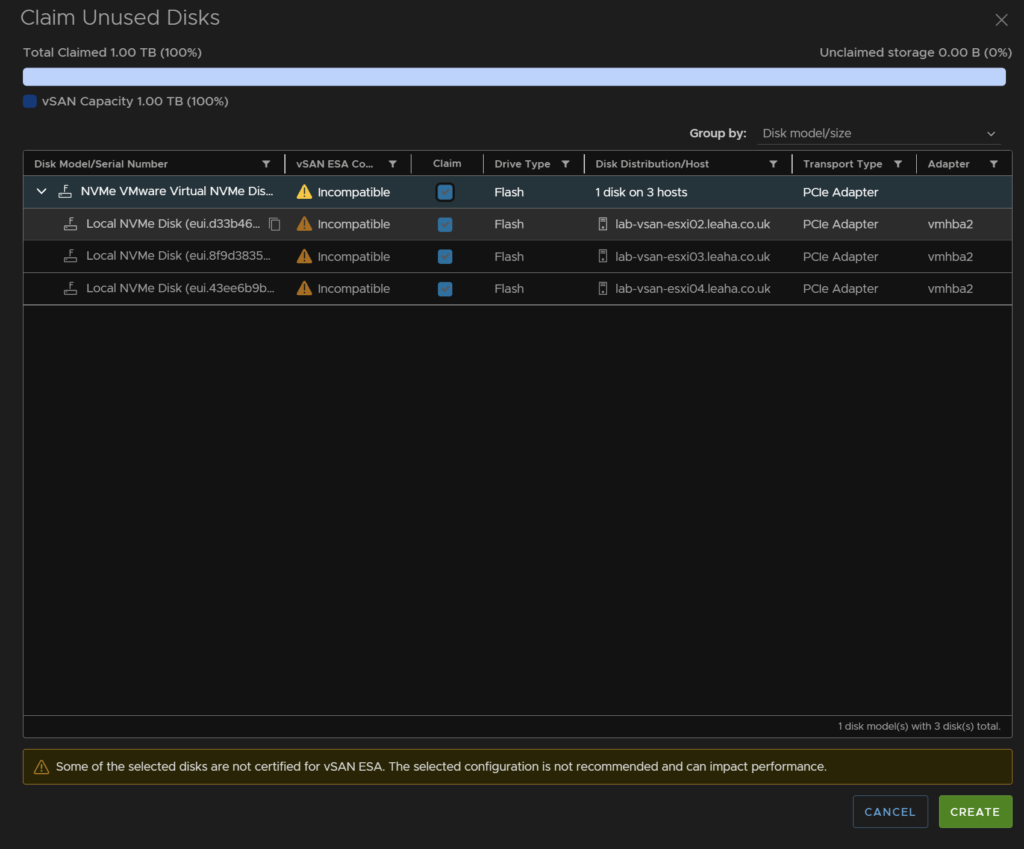

Select the disks to add to vSAN using the check box under the Claim column, your host likely has more than one disk

And then enable thin disk mode for the vCenter

Again, your disks should be compatible, as mine are virtual, they are listed as incompatible

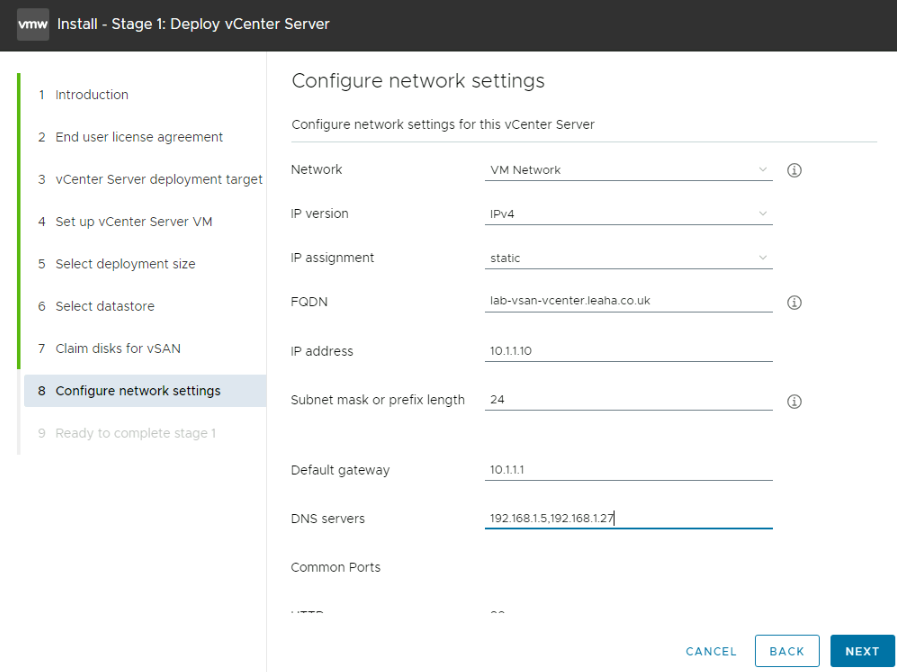

For the network, we prepped the VM Network earlier, so we will be using that for the vCenter for the moment, it will be moved later

IP version wants to be IPv4 and IP assignment needs to be static

Add the server FQDN, this needs to match the IP we will use and be added to all DNS servers before we move on, else the deployment will fail
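Since a DNS mismatch fails the deployment, a quick pre-flight check can save a retry. This is a minimal Python sketch that only queries whichever resolver your workstation uses, so it does not prove every DNS server has the record. The reverse lookup is my addition, and the FQDN and IP are placeholders.

```python
import socket


def dns_matches(fqdn, ip, forward=socket.gethostbyname,
                reverse=socket.gethostbyaddr):
    """Return True when fqdn resolves to ip and ip resolves back to fqdn."""
    try:
        forward_ok = forward(fqdn) == ip
        reverse_name = reverse(ip)[0]
    except OSError:  # covers socket.gaierror and socket.herror
        return False
    same_name = reverse_name.rstrip(".").lower() == fqdn.rstrip(".").lower()
    return forward_ok and same_name


if __name__ == "__main__":
    # Placeholder values, replace with your vCenter FQDN and IP.
    print(dns_matches("vcenter.lab.example", "192.168.10.20"))
```

The resolver functions are parameters purely so the check can be exercised without a live DNS server.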

Then we need the IP address and subnet mask or prefix

And lastly the default gateway and DNS servers comma separated, everything else below we can leave, then hit Next

And review and finish

You will see it go through the install and claim the disks for vSAN

Then initialise

Then Deploy

Once that’s deployed, the RPM will initialise, this is when it begins powering on

Then several RPM files will be installed and initialised as well as some containers

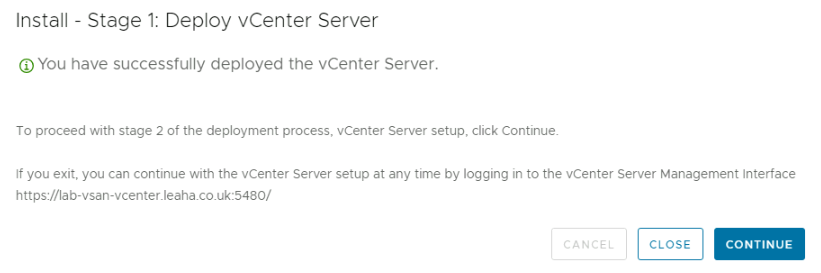

Once that’s done we can head to Stage 2

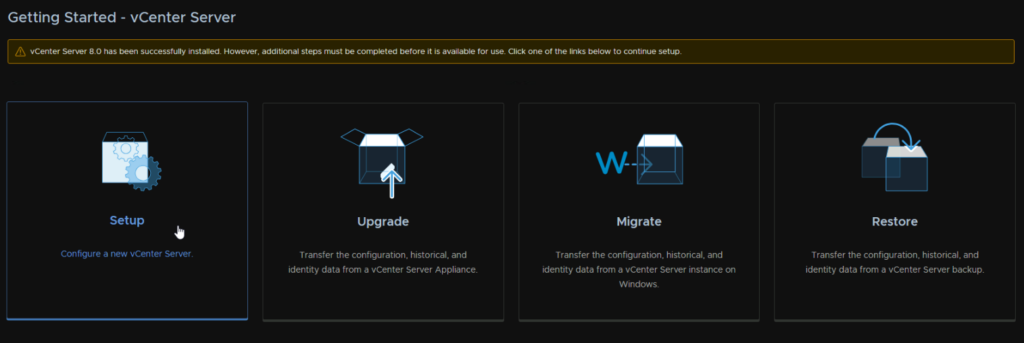

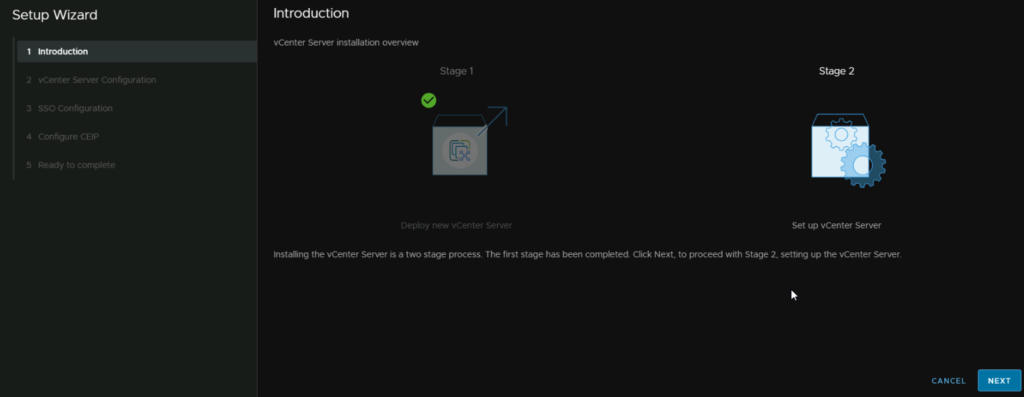

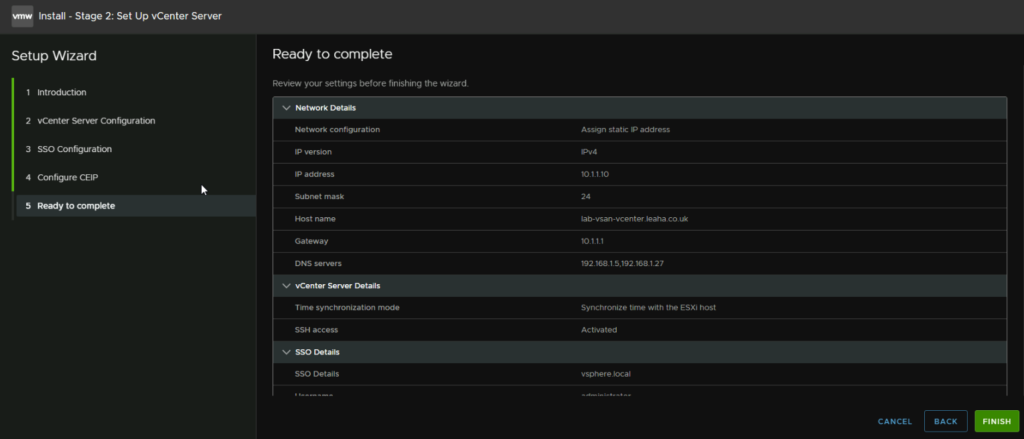

1.2.2 – Stage 2

When you click Continue, the UI from the ISO will take you through the next stage. However, mine closed as I left it a while after clicking Continue, if this happens navigate to

https://fqdn:5480 and login with the root password, we can continue from here

Click Setup

Click Next

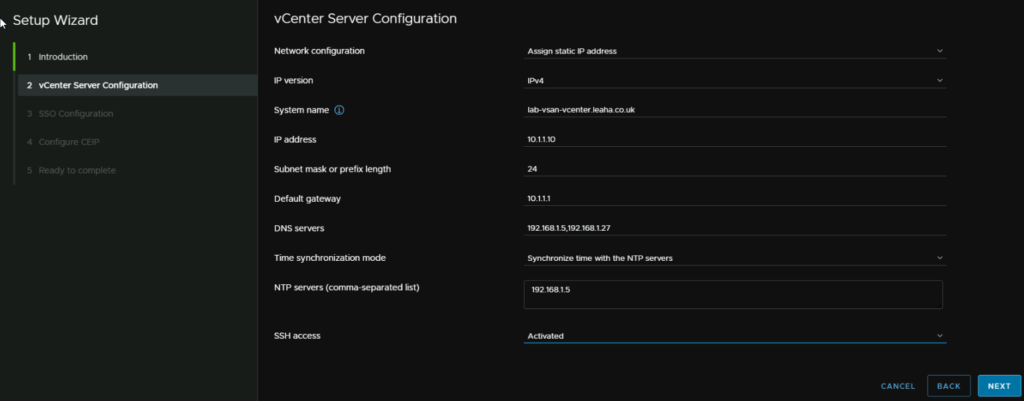

For this next bit, if the app didn’t close, you would only have the NTP server and SSH options, so we will ignore everything else

NTP should be the same as ESXi for vSAN, and SSH can be enabled/disabled as you like, most production environments opt to disable SSH, however if the vCenter were to break, this will be needed

We will then click Next

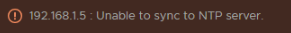

I did get an NTP error oddly, even though it is working

My suggestion here is to change time sync to be in line with the host, as that has NTP, it will have the correct time

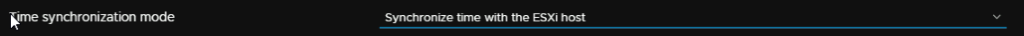

For the SSO domain, I would recommend vsphere.local, but it can be anything, this cannot be changed later

And add a password for the administrator@vsphere.local account, then click Next

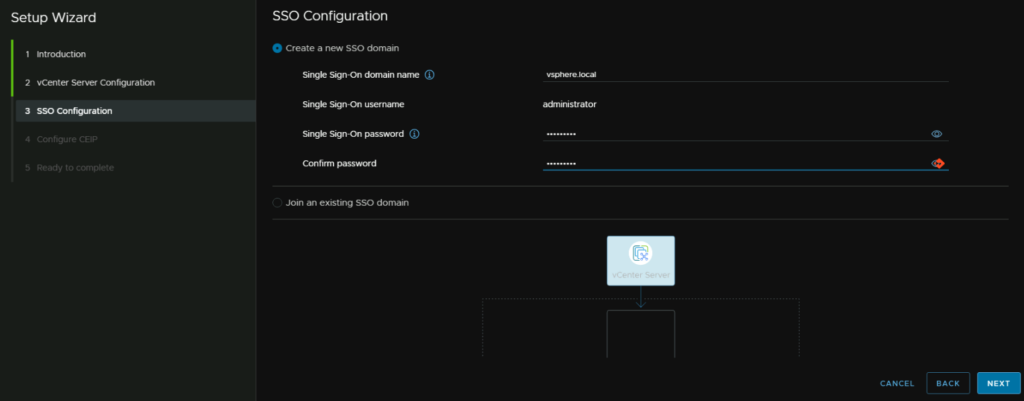

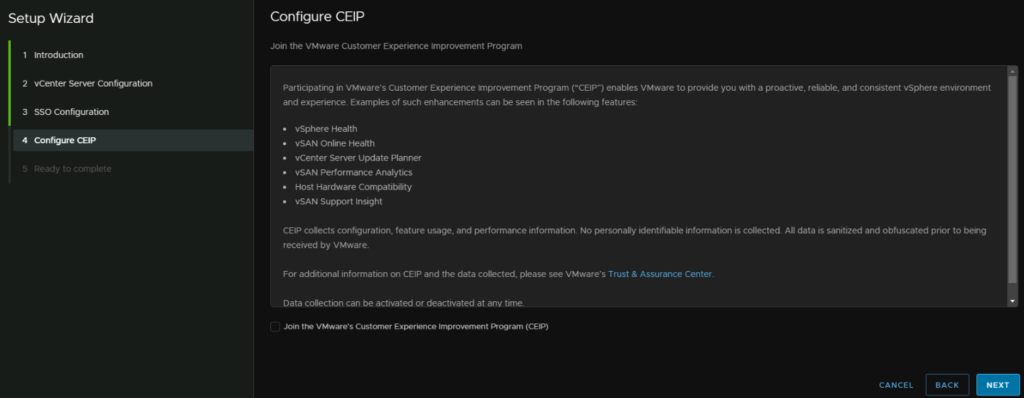

Opt in/out of the CEIP as you like

Then review and finish

This cannot be stopped once started, so click ok here when you are 100% happy

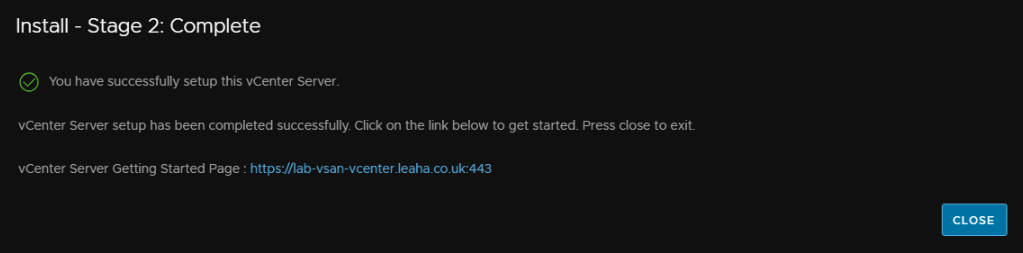

Once it’s done you can click Close and login at

https://fqdn/ui

1.2.3 – Adding Hosts

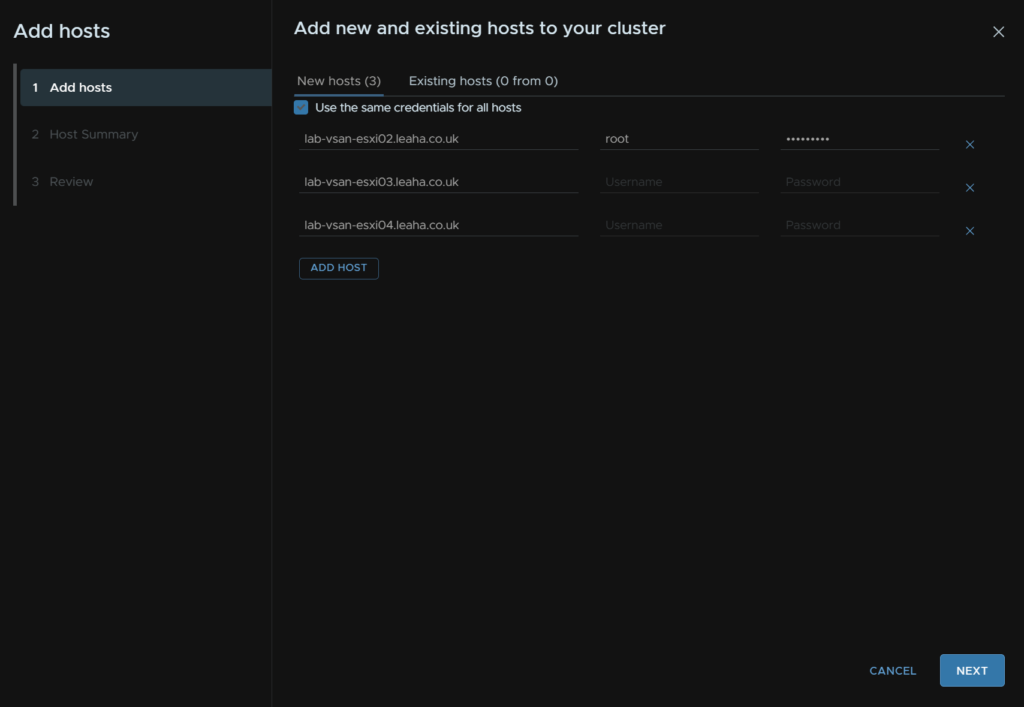

Now we need to add our remaining 3 hosts, right click the cluster and click Add Hosts

Add the FQDN of the remaining hosts, if they all have the same root password, you can use the check box to use the top credential entry for all hosts

Accept any SSL warnings that pop up

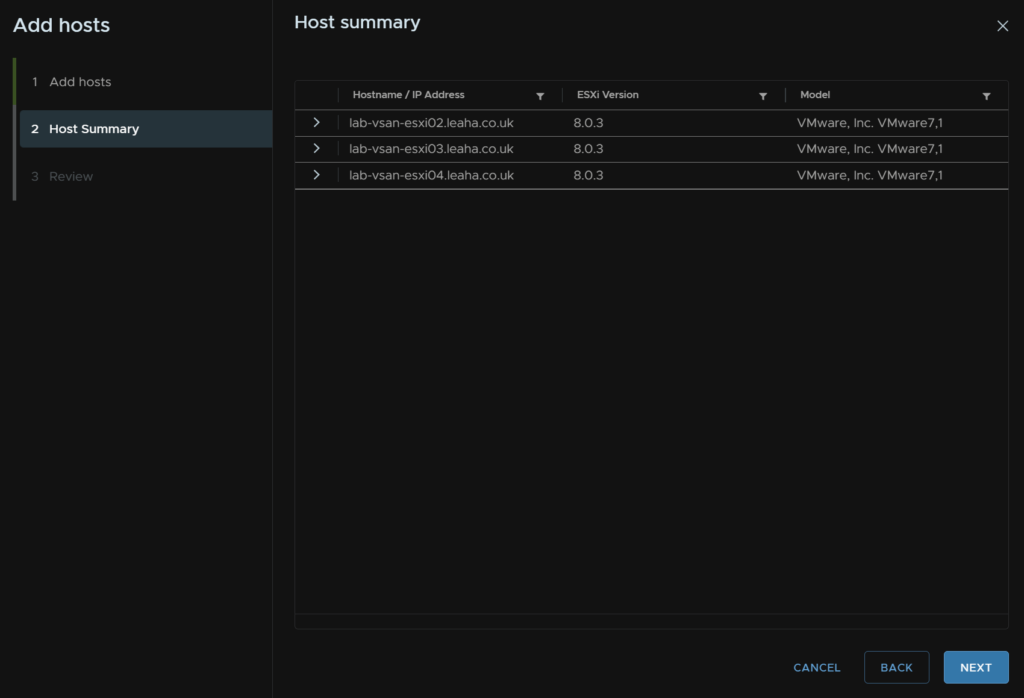

Check the host summary and click Next

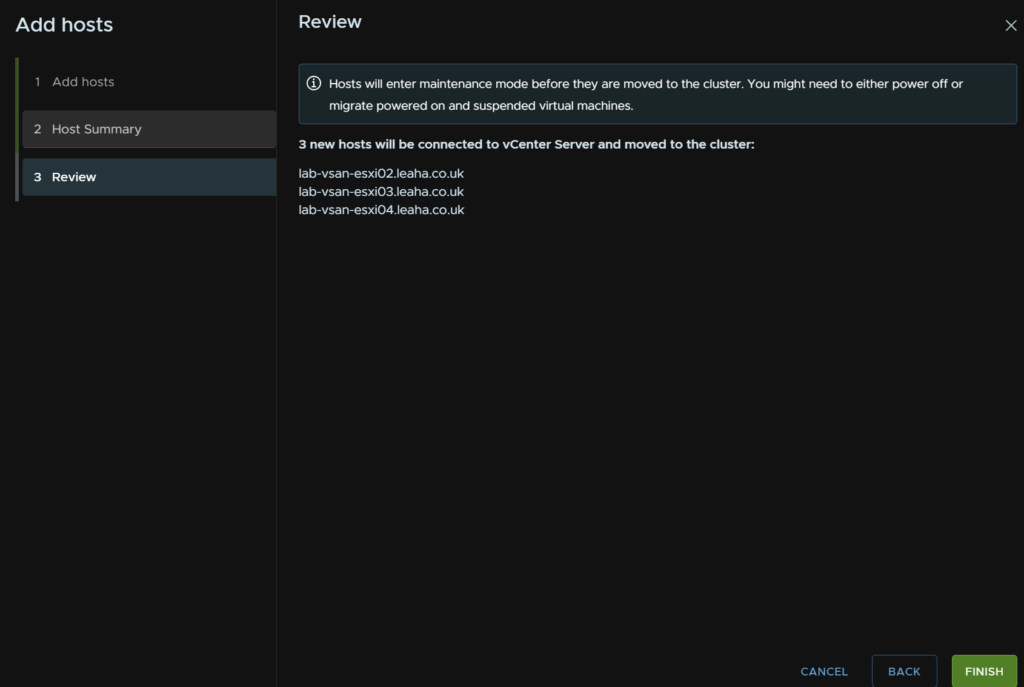

And click Finish

1.2.4 – Management Switch

When we setup the initial install, we only added one NIC for the management switch

Changing these bits from vCenter has one key advantage over changing them in the ESXi UI. If we were to set something incorrectly and management connectivity is lost, vCenter will revert it, rather than us losing connection to the host

We will keep the same standard switch and not move management to a VDS, as a VDS can’t be managed from ESXi. Keeping management on a standard switch gives us the best flexibility and the ability to connect to the host and fix issues in some circumstances

And we will be setting it and leaving it, so we won’t need to be making changes frequently

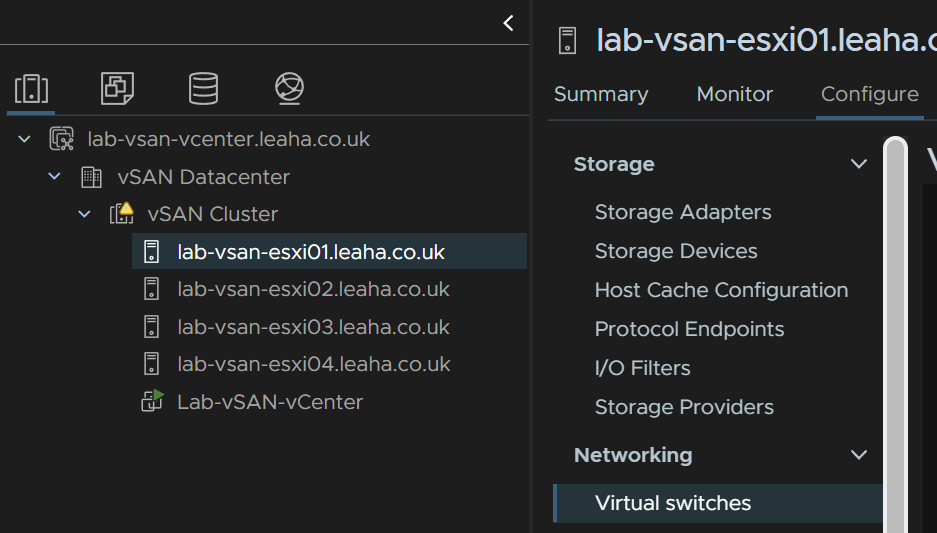

Click the host and head to Configure/Networking/Virtual Switches

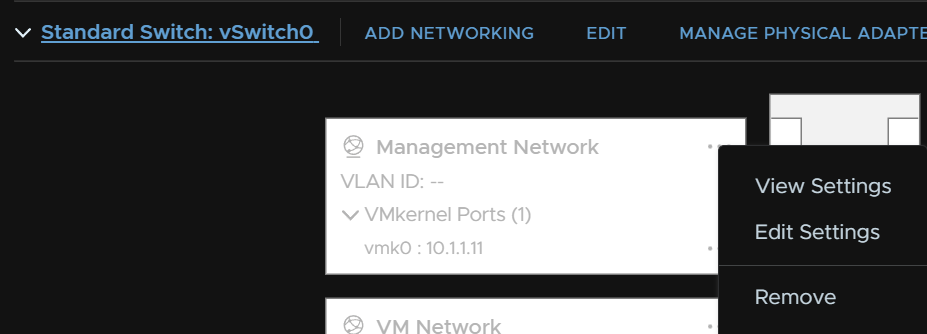

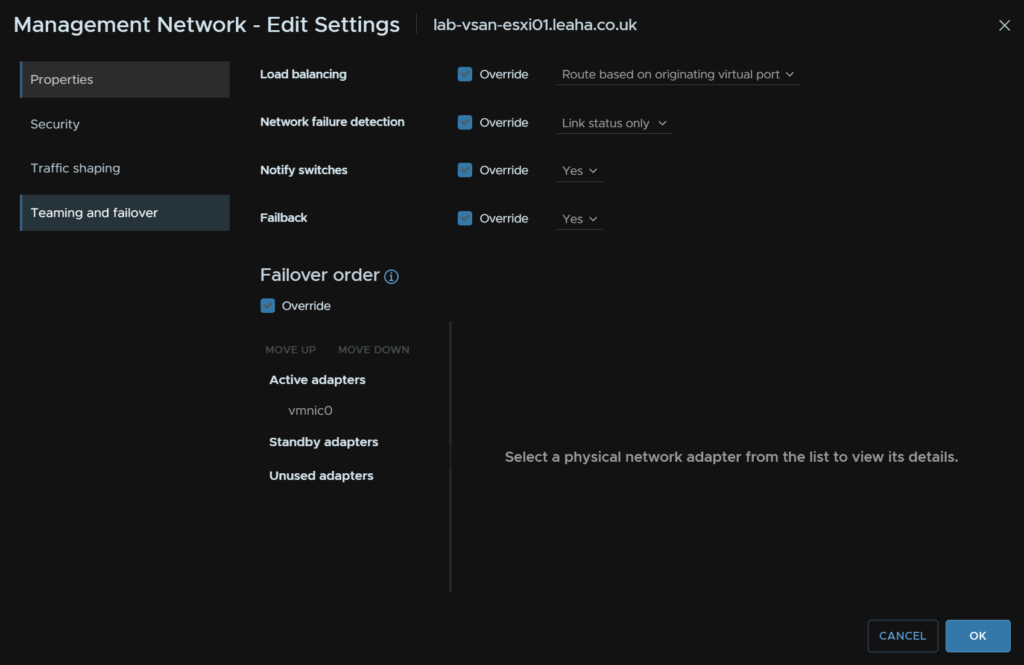

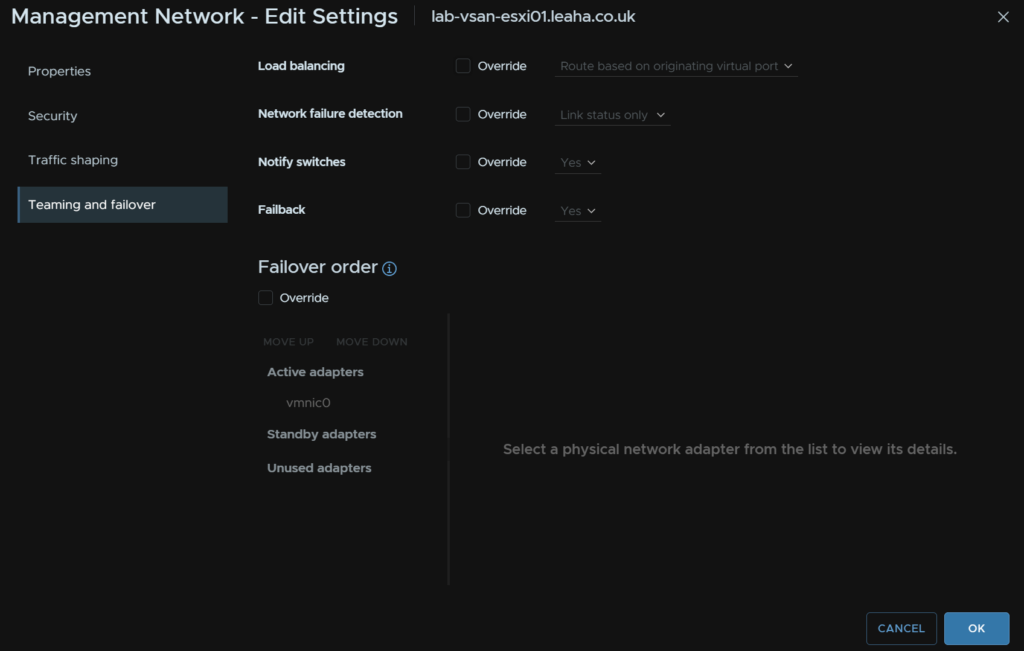

Then click the three dots on the management port group and click Edit Settings

And uncheck all the override boxes here, as this will cause issues when we add the second NIC to the switch

It should look like this, then click ok

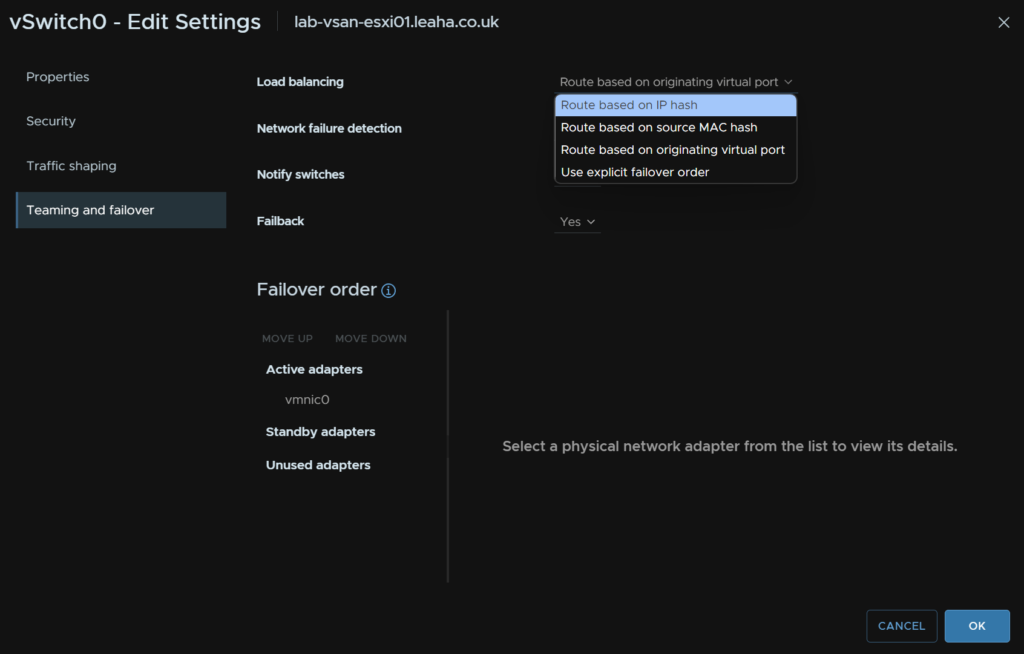

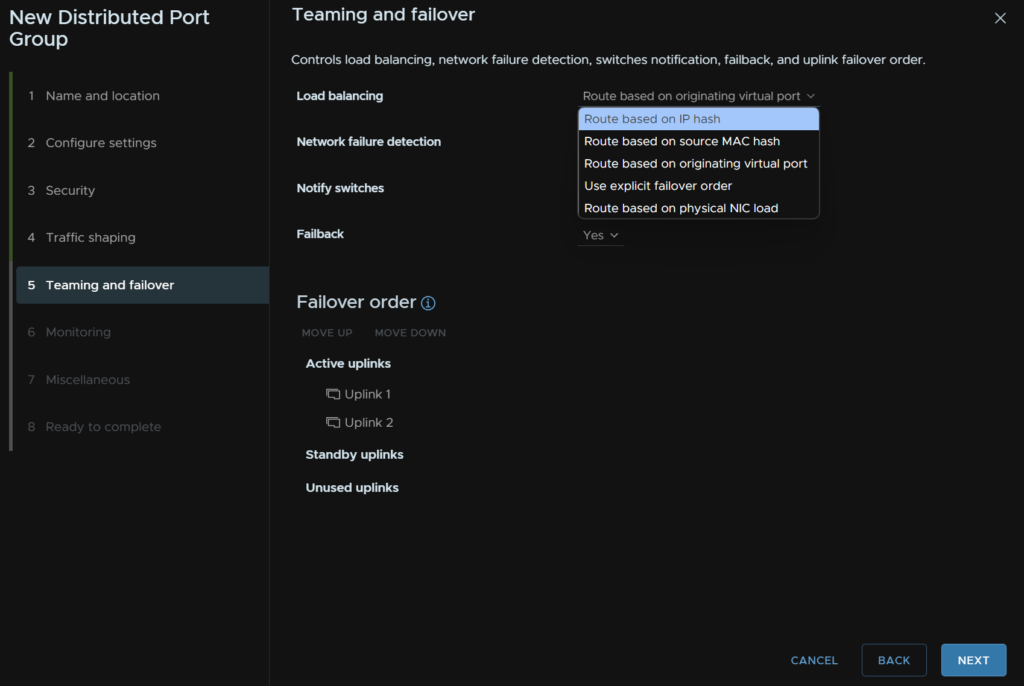

It’s worth noting, your core switches likely have MC-LAG, Dell’s VLT or HPE’s VSX. If so, you will also want to go to Teaming And Failover and ensure you change load balancing to “Route Based On IP Hash”, else the connectivity won’t work

For this sort of set up, click Edit

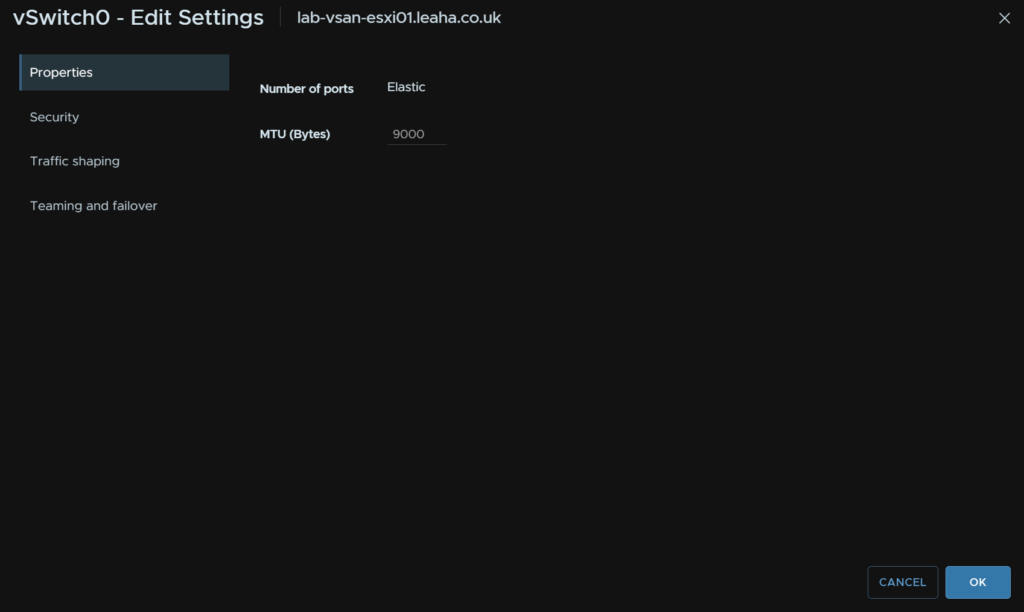

While we are here, ideally all physical switch ports and port channels the management NICs interact with should have an MTU of 9216, usually the max of the switch

If you are unsure, leave it at the stock 1500, MTU inconsistencies can cause all sorts of issues that you don’t want

Set the MTU to 9000 and click Next

Under Teaming And Failover click the drop down under Load Balancing and select “Route Based On IP Hash”

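If you do set this and don’t have the above sort of switch setup, it shouldn’t cause any issues, though it is worth testing connectivity after the change, as IP hash is designed for switch ports in a static port channel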

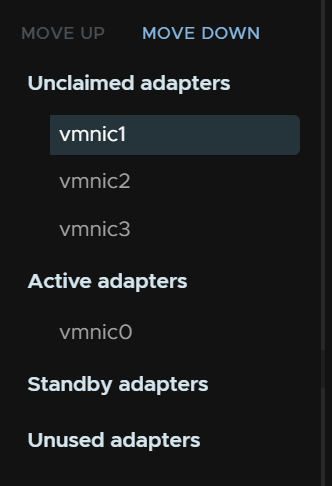

Now we need to add the second NIC, select Manage Physical Adapters

For me, NIC1 is the other management NIC, this may be different for you. A lot of servers with six NICs have a quad port Mezz card and a dual port card, in this case, use two ports from the quad port card for management

While yes, you want ASIC redundancy by having NICs from different cards, if you only have two cards, management is the one you want to have with both NICs on one card

Keep storage and VM switches with one NIC on each card, as if these go down it will trigger a P1 incident, whereas management dropping out won’t impact production workloads

To add the second NIC, click it and click Move Down until it’s under Active Adapters

It should look like this

Then click ok and repeat on the remaining hosts

1.2.5 – Adding The Remaining Hosts To vSAN

Now we have the hosts, we need a switch for the vSAN networking before we can expand the vSAN cluster to use the storage from all servers

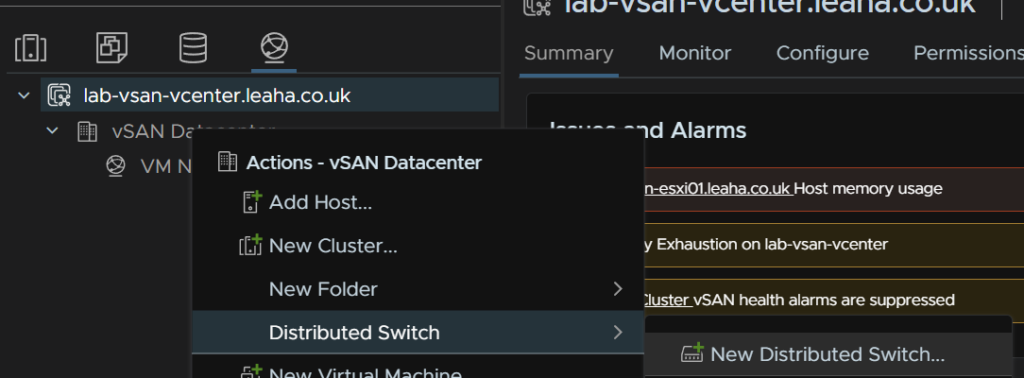

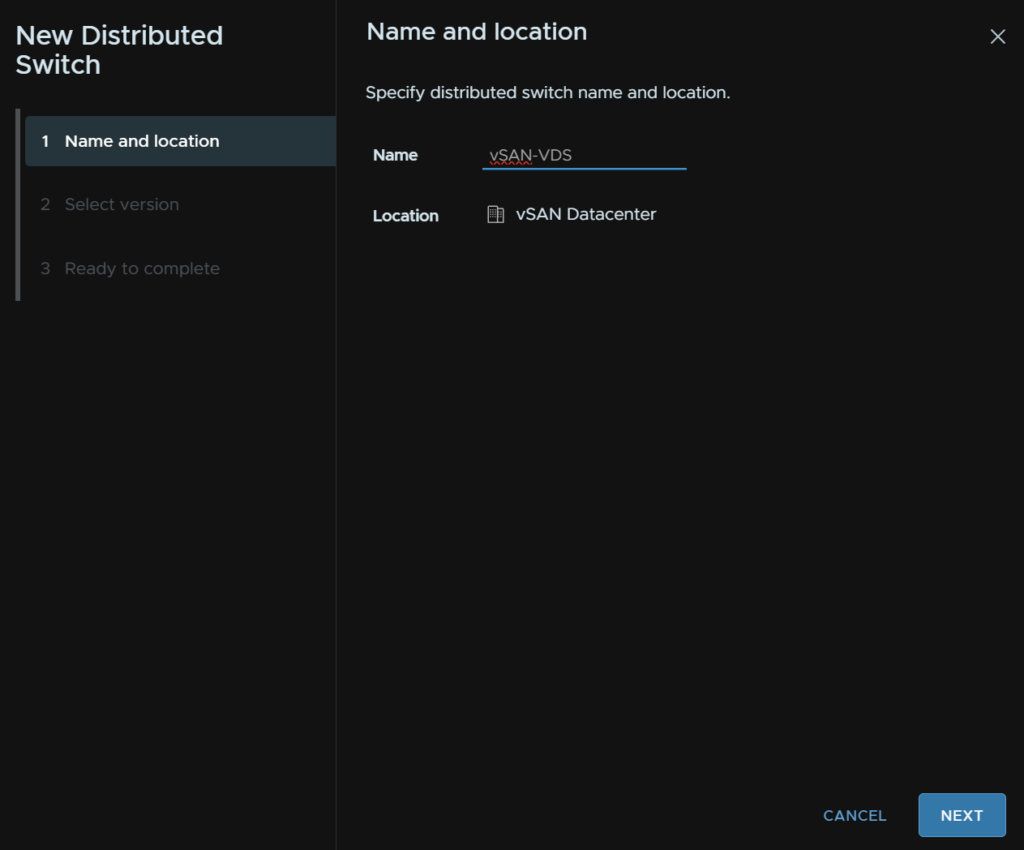

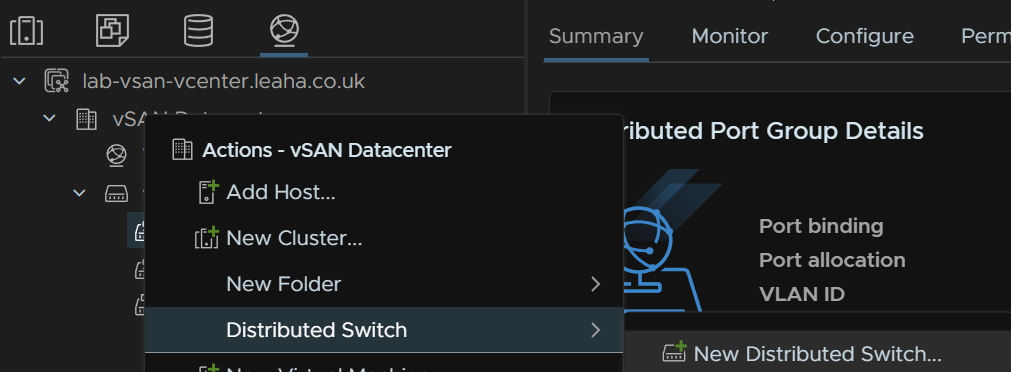

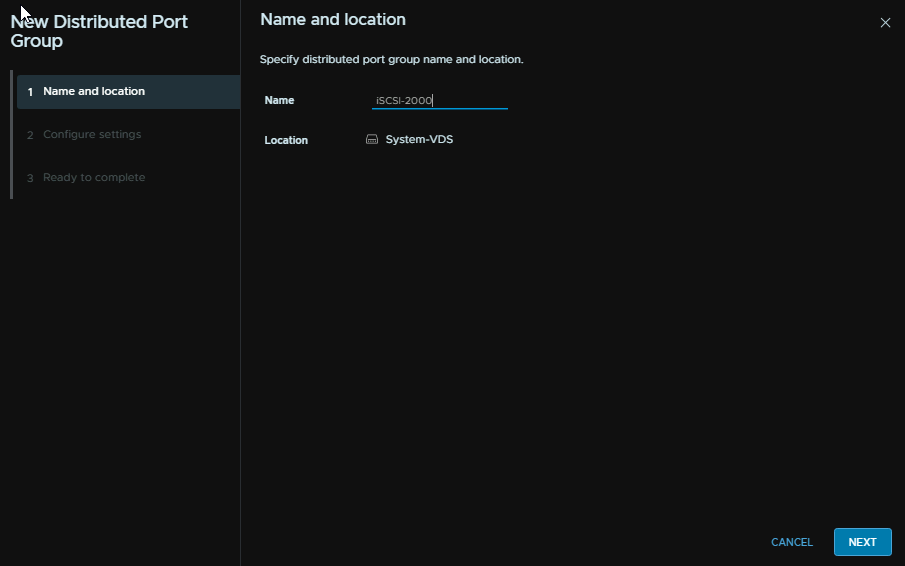

Head to the networking tab, then right click the Datacenter, and click Distributed Switch/New Distributed Switch

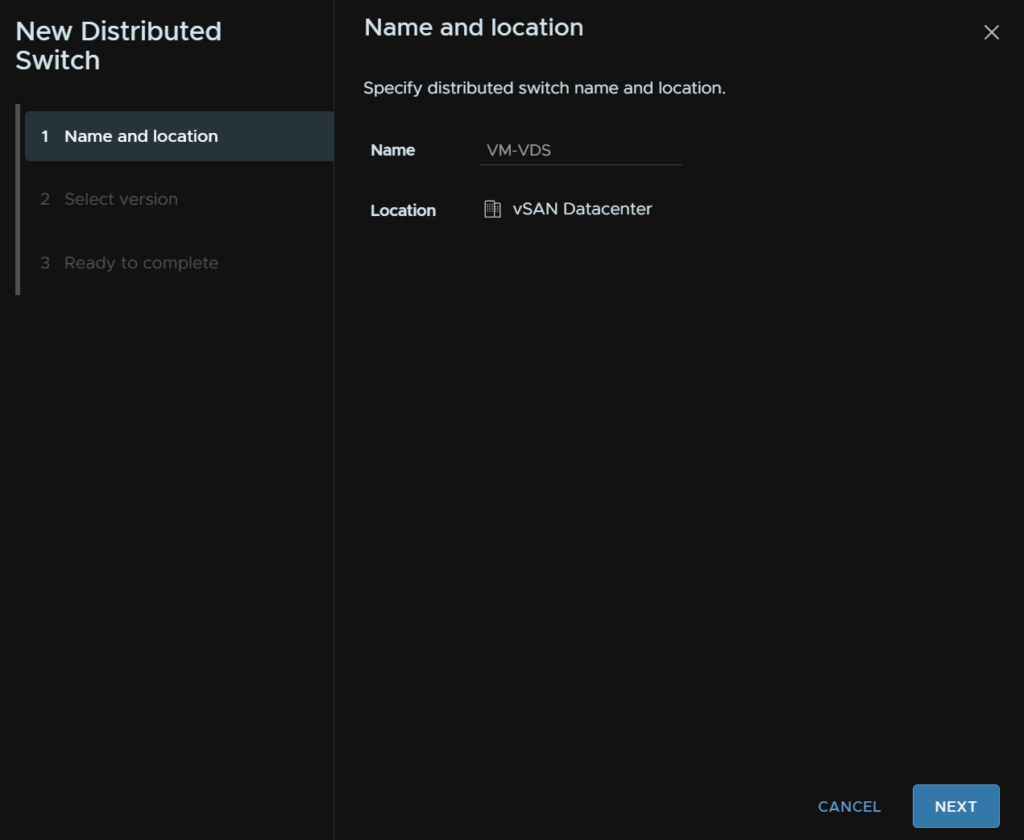

Give the VDS a name

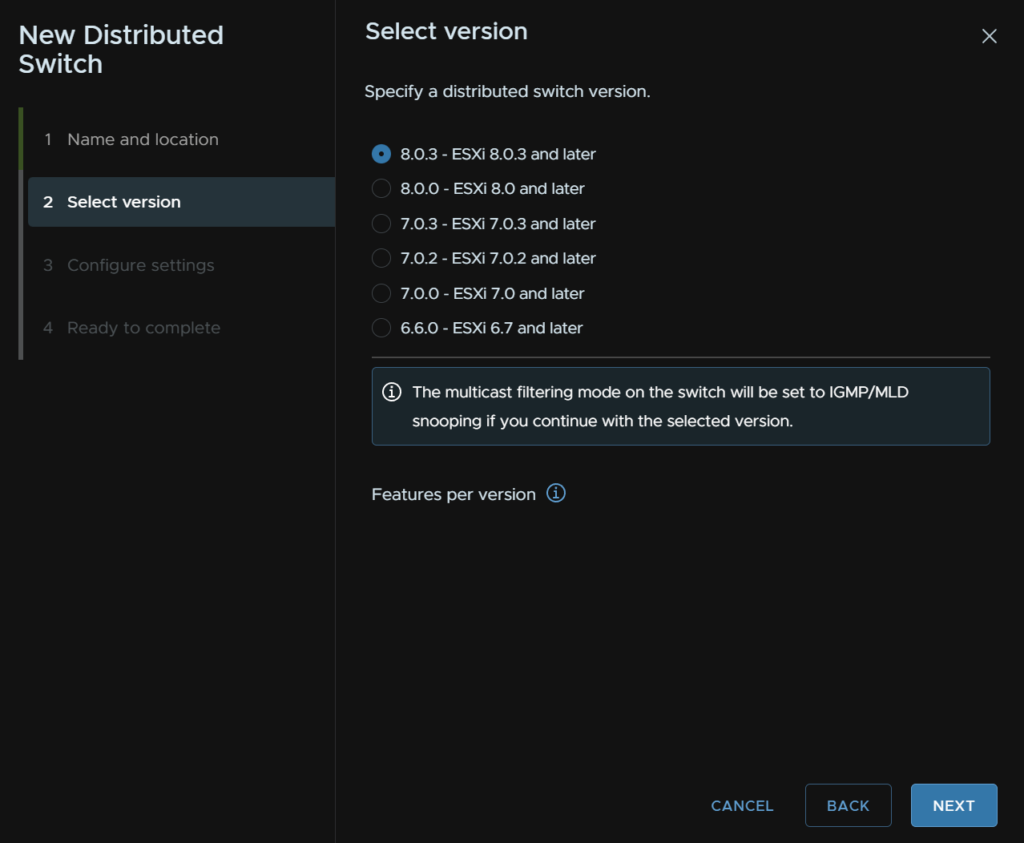

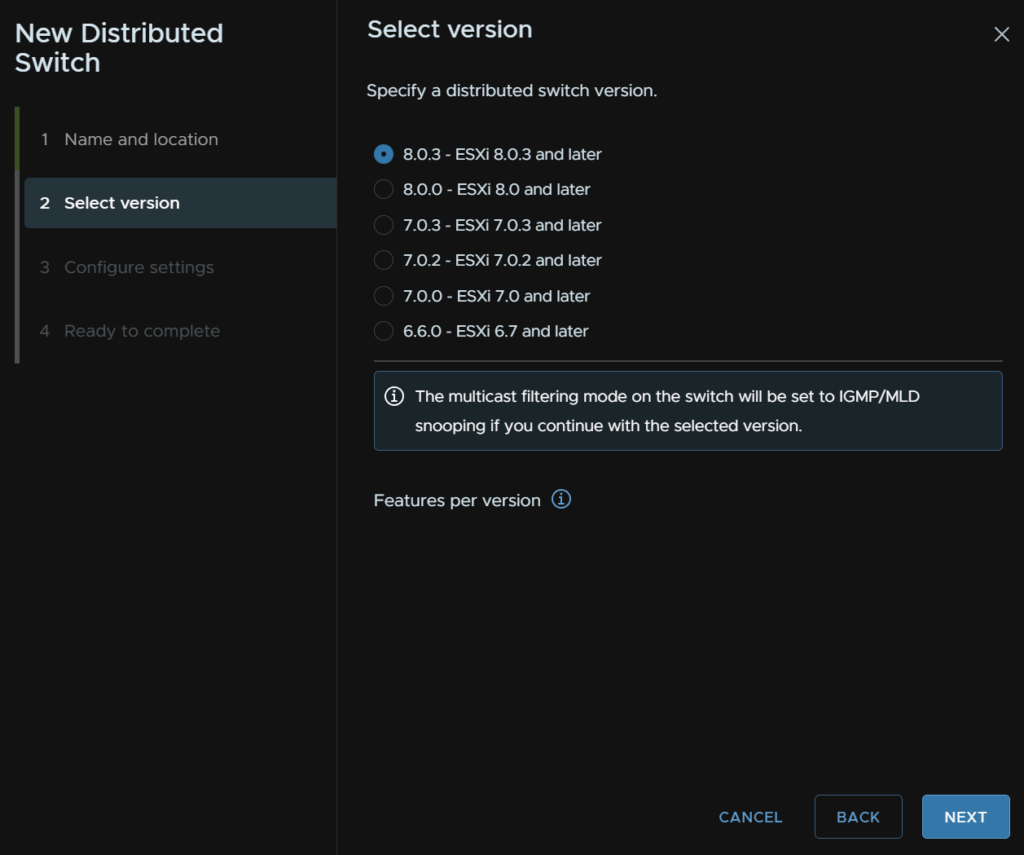

Select the version. I would suggest the latest, assuming the datacenter will have ESXi hosts of the same version. Otherwise, opt for the version matching the lowest version ESXi host, unless you need features from a newer version, in which case multiple VDS will be needed

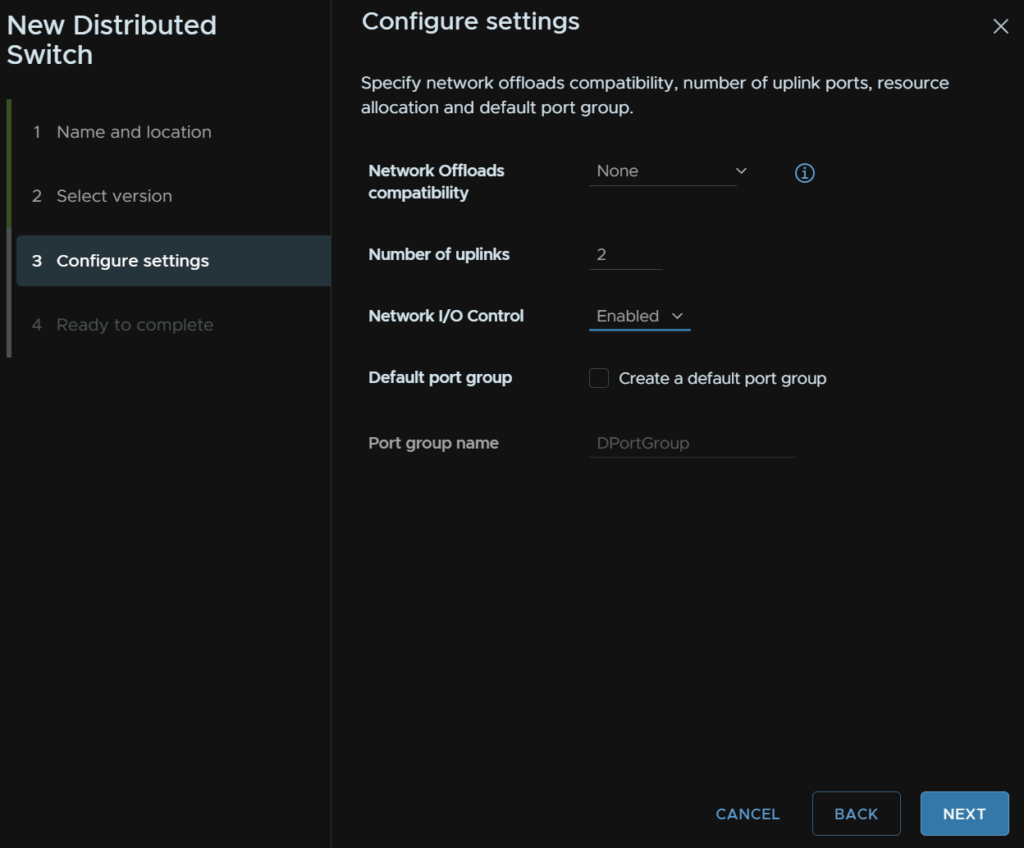

You can select a network offload, but unless you have a DPU, which for VVF you likely won’t, you can leave this as none

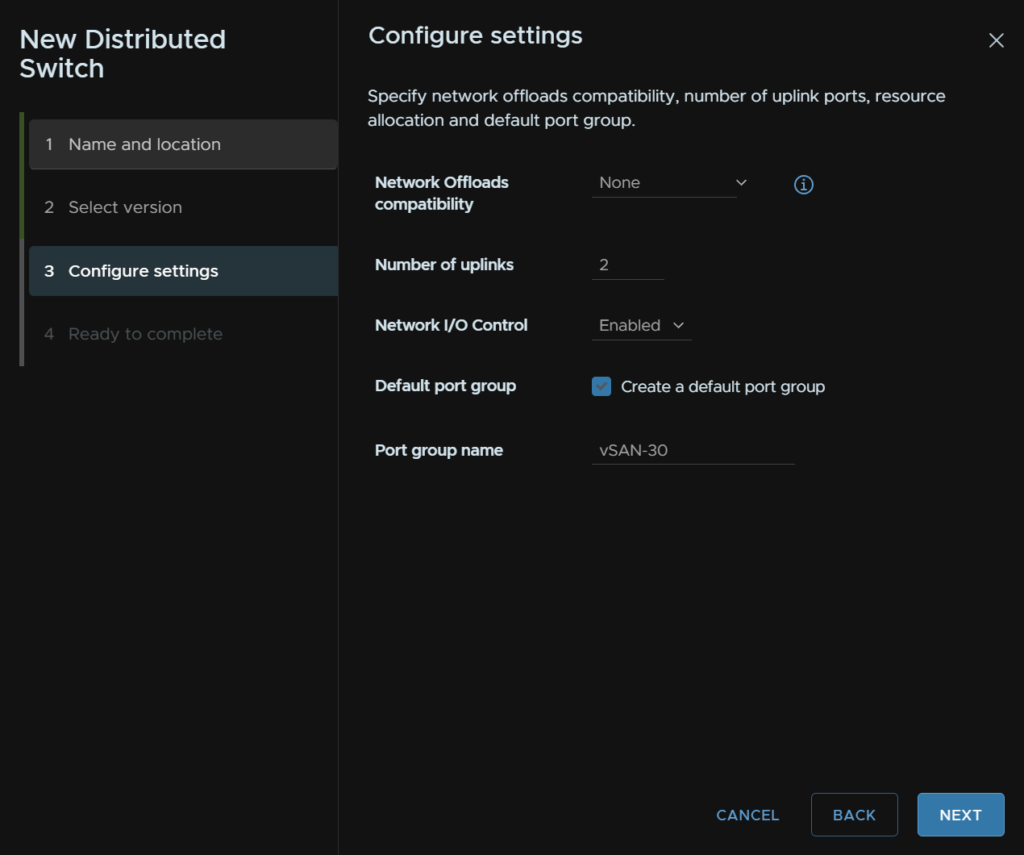

We need 2 uplinks, I/O control enabled, and a default port group, which we then give a name. The vSAN should be on a dedicated network that is also non routable

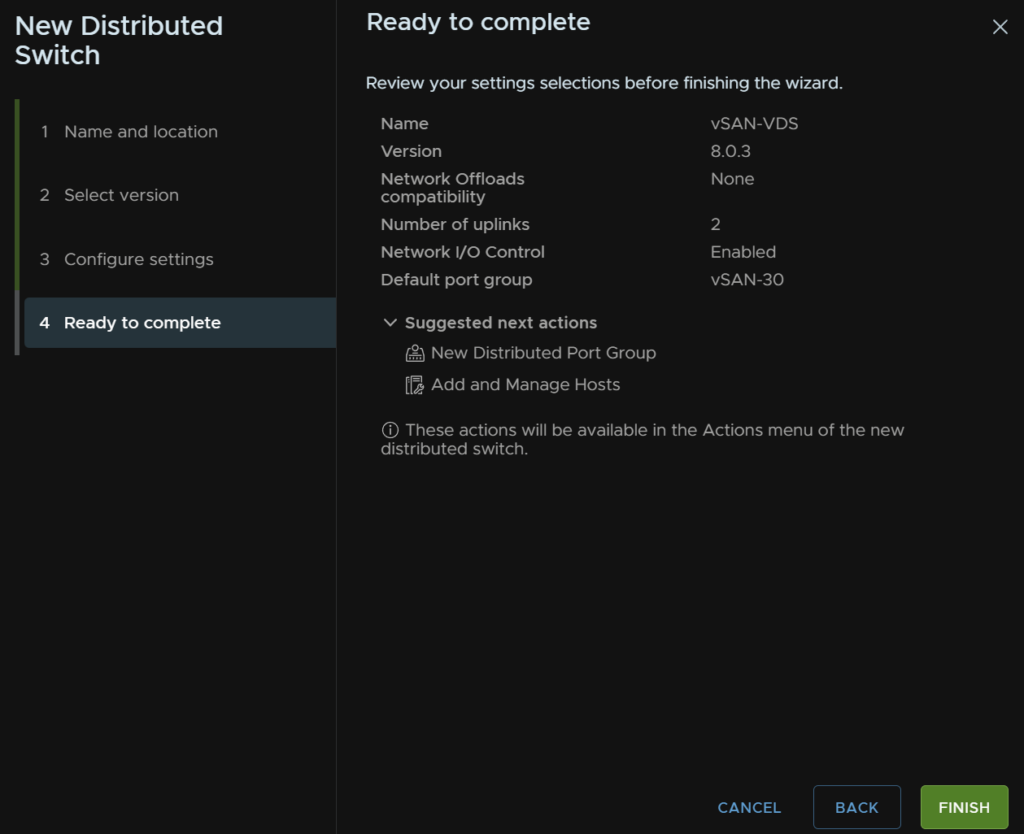

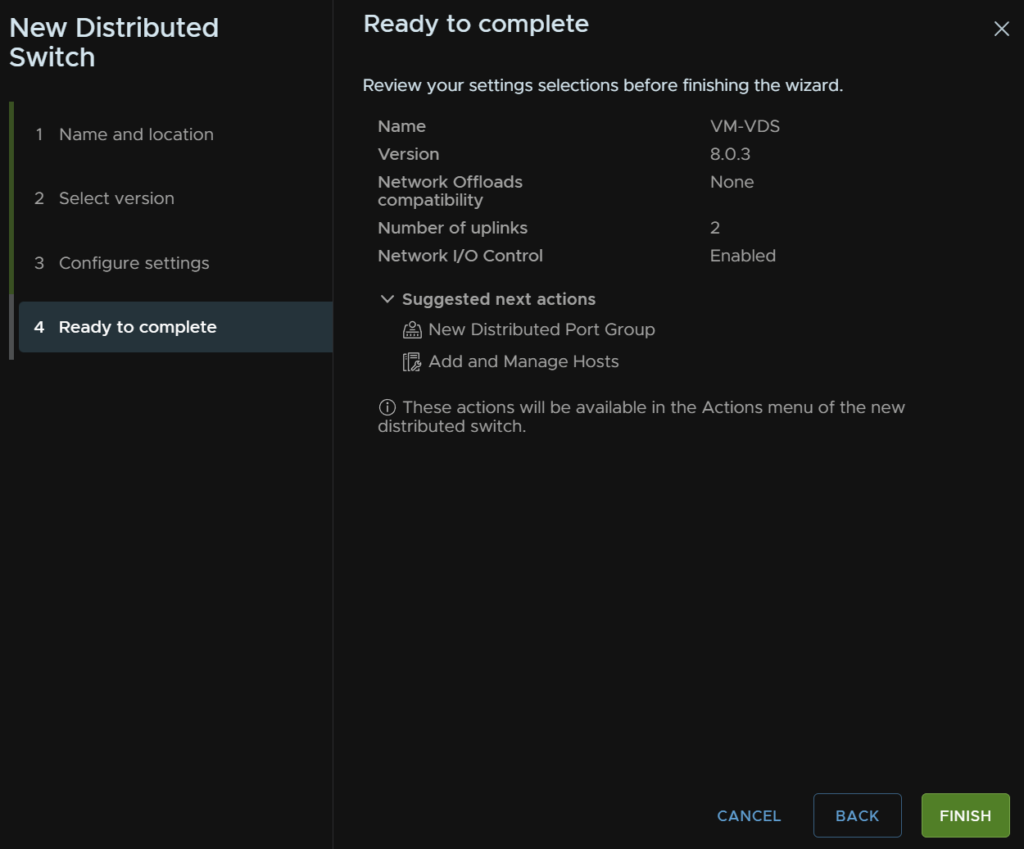

Then click Finish

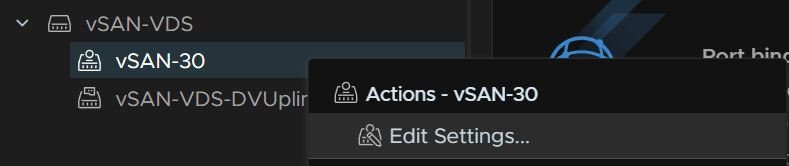

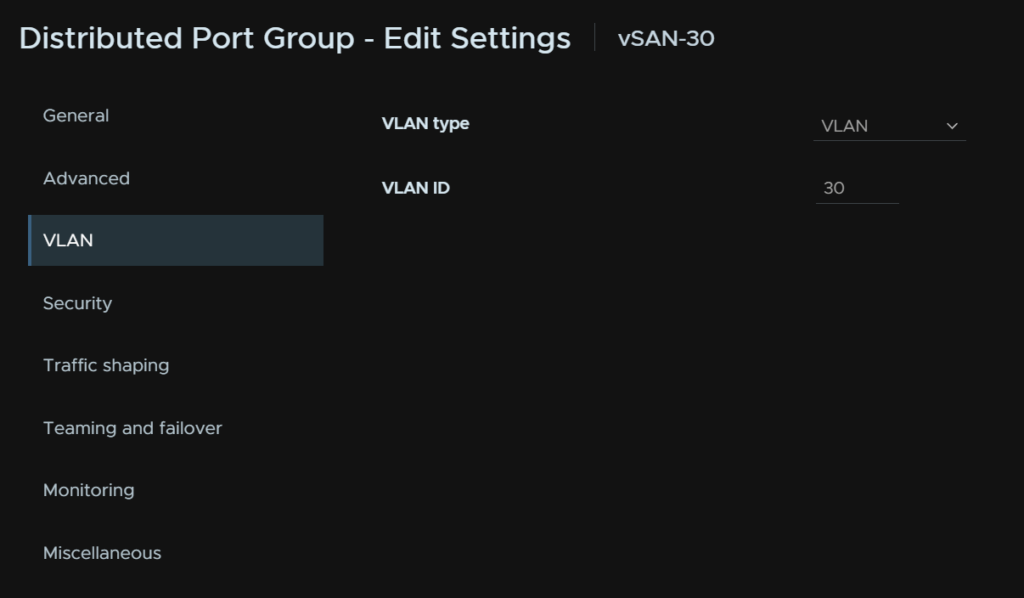

Right click the port group and click Edit Settings

Click Next until you get to VLAN and set the VLAN ID to match the vSAN VLAN. This assumes you have the VLAN tagged at the switch level. If, for example, vSAN is on VLAN 30 and you have the switch port native VLAN, or access VLAN, as VLAN 30, this isn’t needed

As I have my switch trunked, I am setting the VLAN

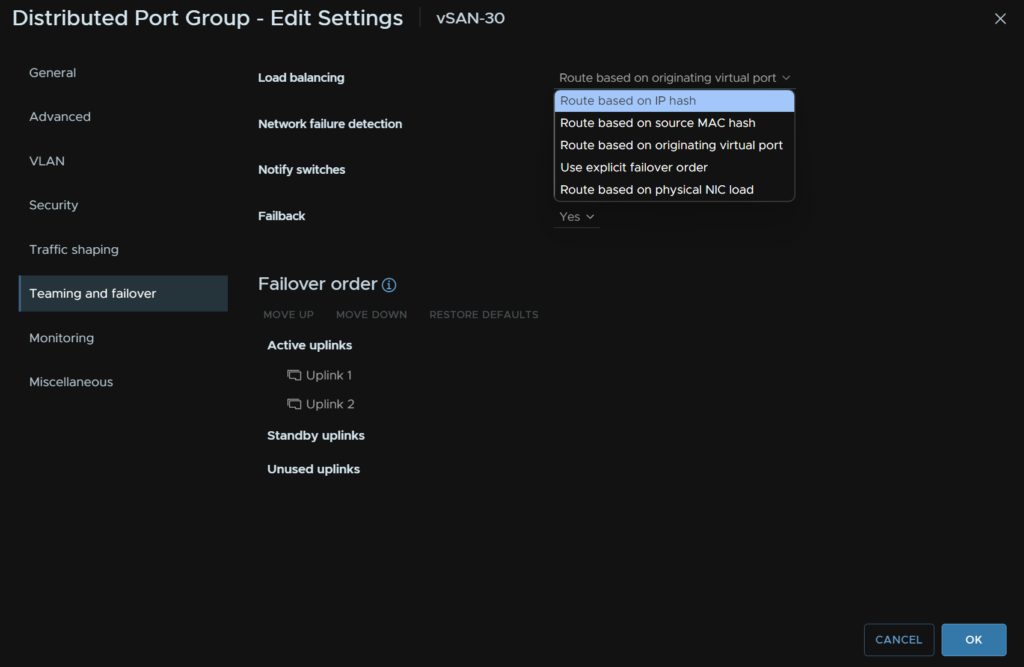

Again, your core switches likely have MC-LAG, Dell’s VLT or HPE’s VSX. If so, you will also want to go to Teaming And Failover and ensure you change load balancing to “Route Based On IP Hash”, else the connectivity won’t work

Then Click Next and eventually Finish

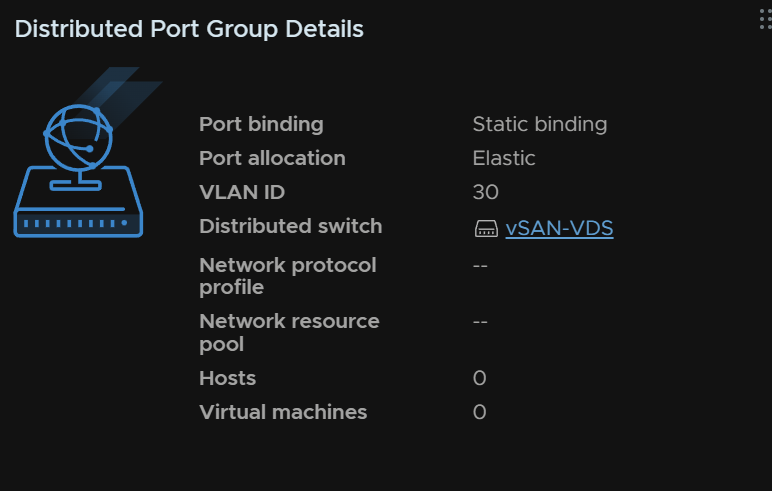

We can see this in the summary tab

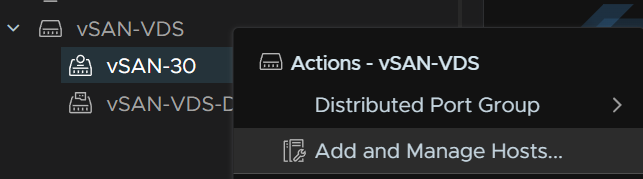

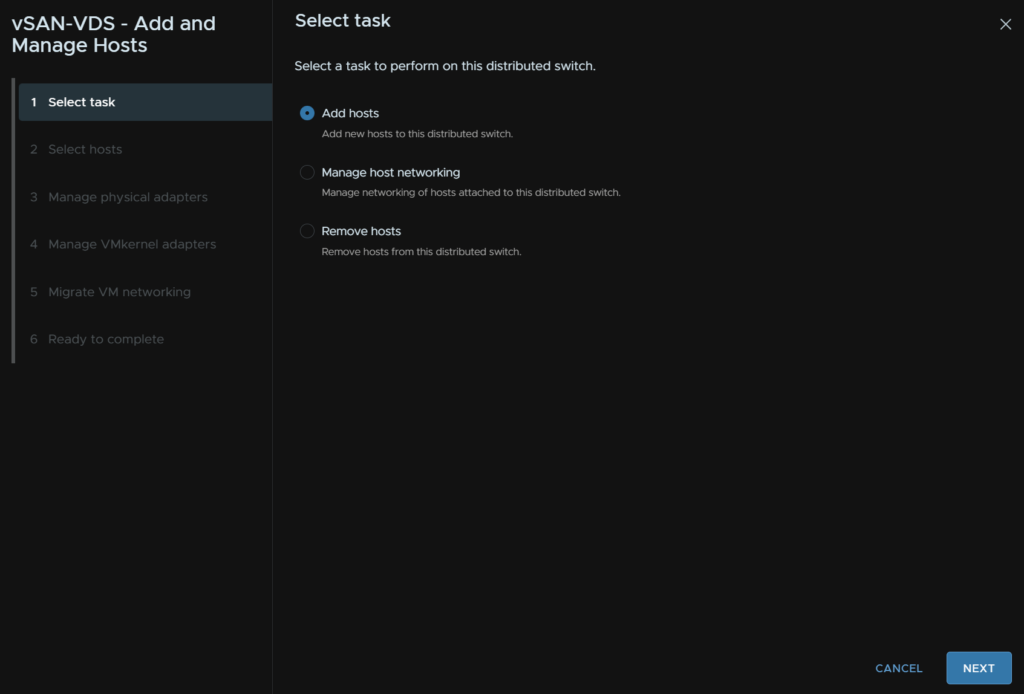

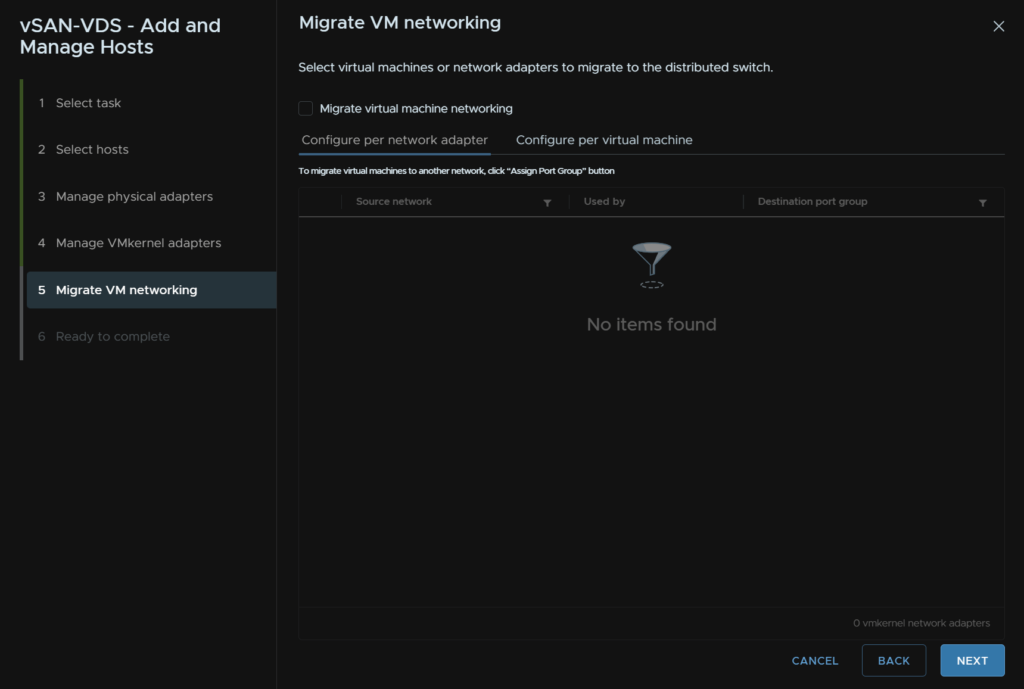

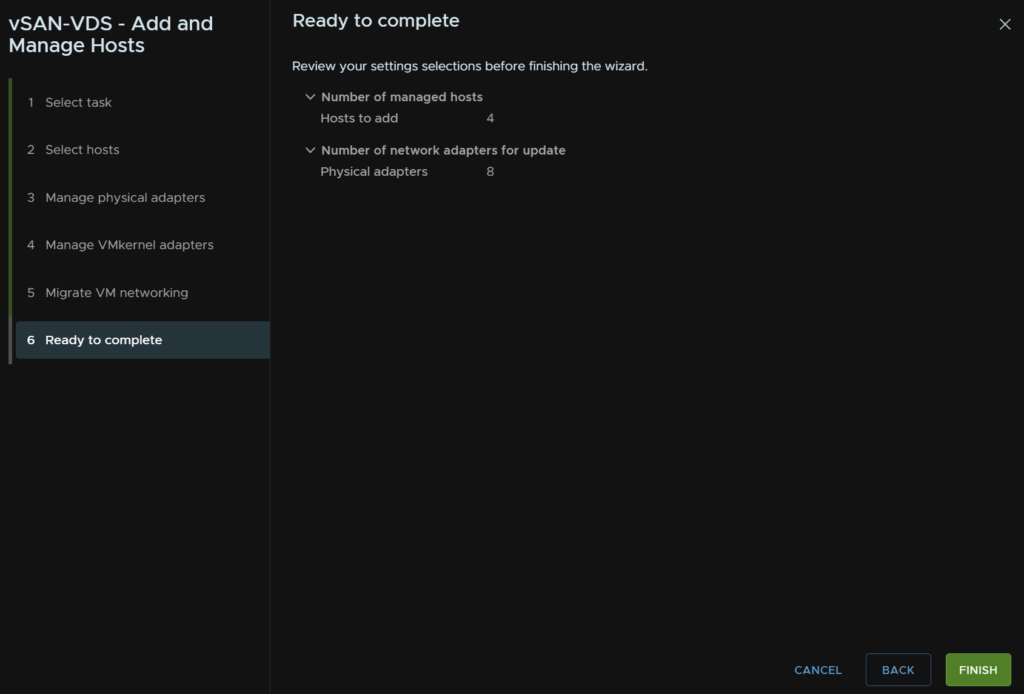

Now we need to add the hosts to the VDS, right click the VDS and click Add And Manage Hosts

Select Add hosts, and click Next

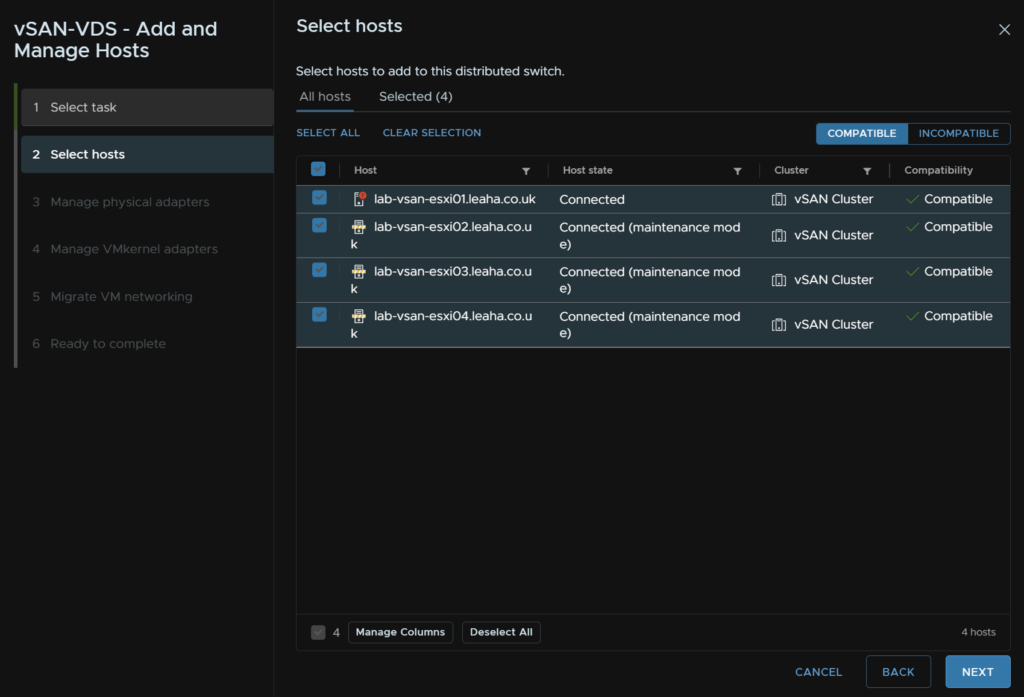

Select all the hosts and click Next

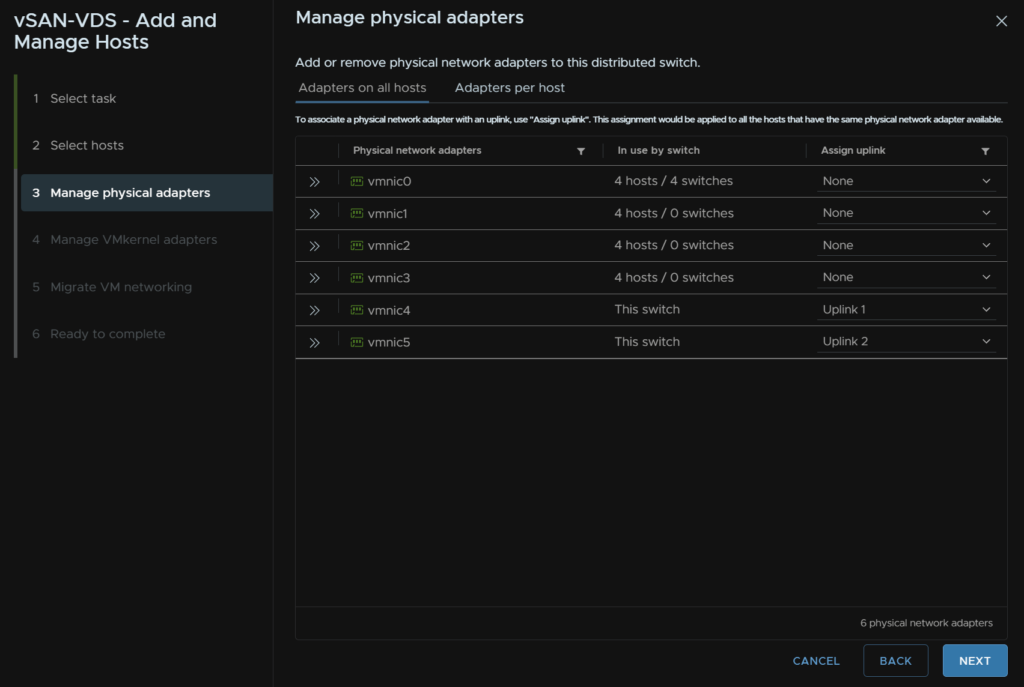

Select the physical adapters that correspond to the NICs you have assigned for vSAN storage, in my case VMNIC5/6

For a production system these should be spread across two physical NIC cards in a server, this gives ASIC redundancy meaning if a physical card fails, your storage remains up, and this is very important

Then click Next

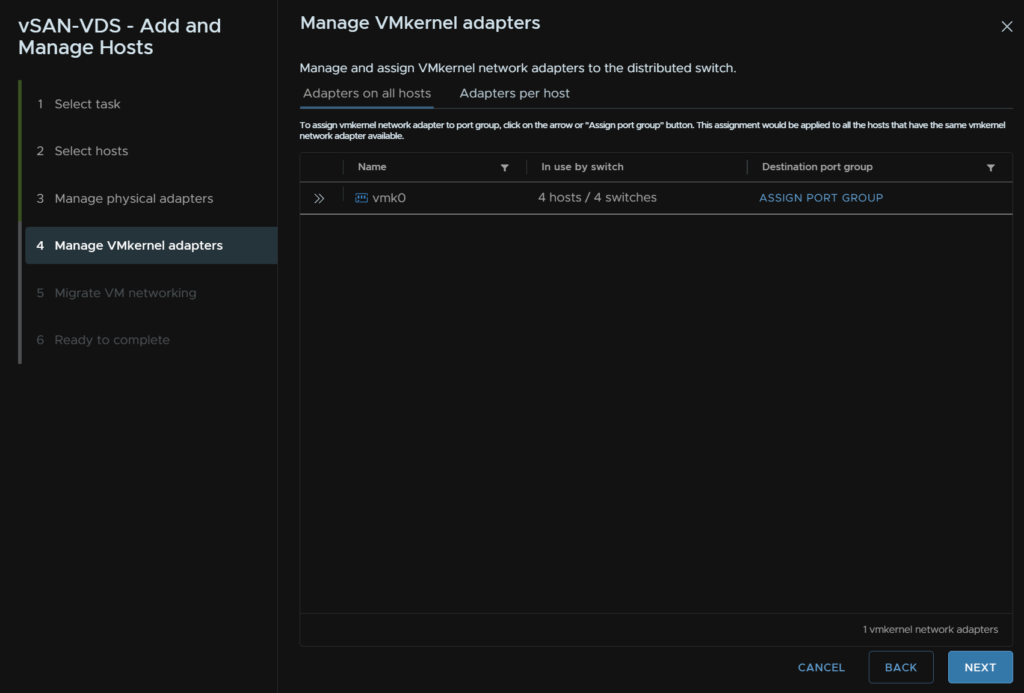

Click Next here

Next again

Then click Finish

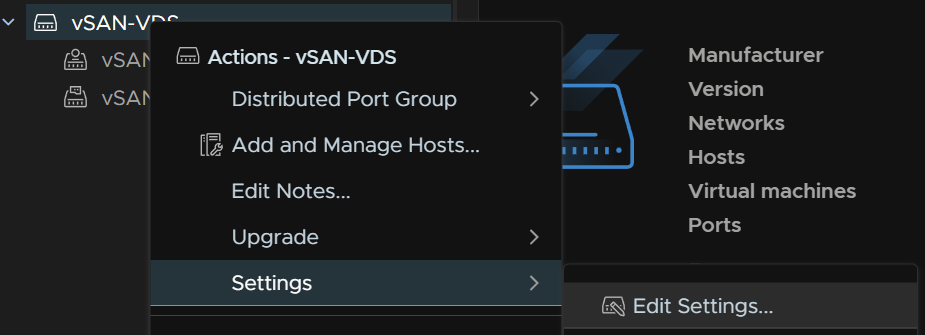

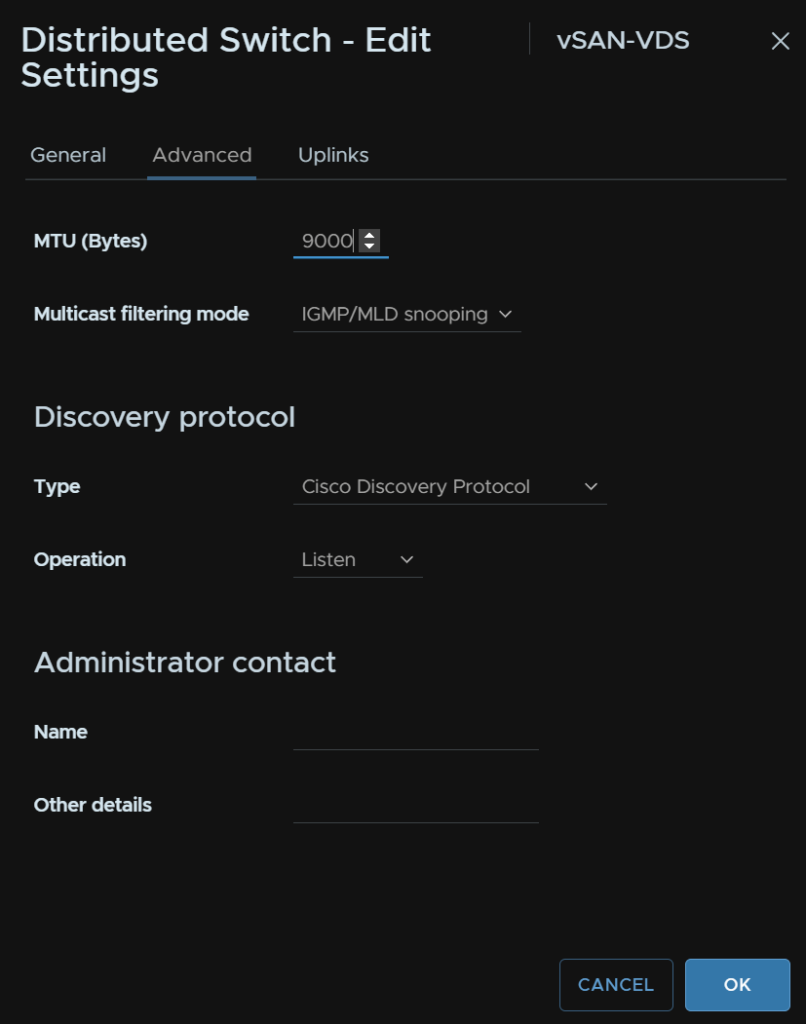

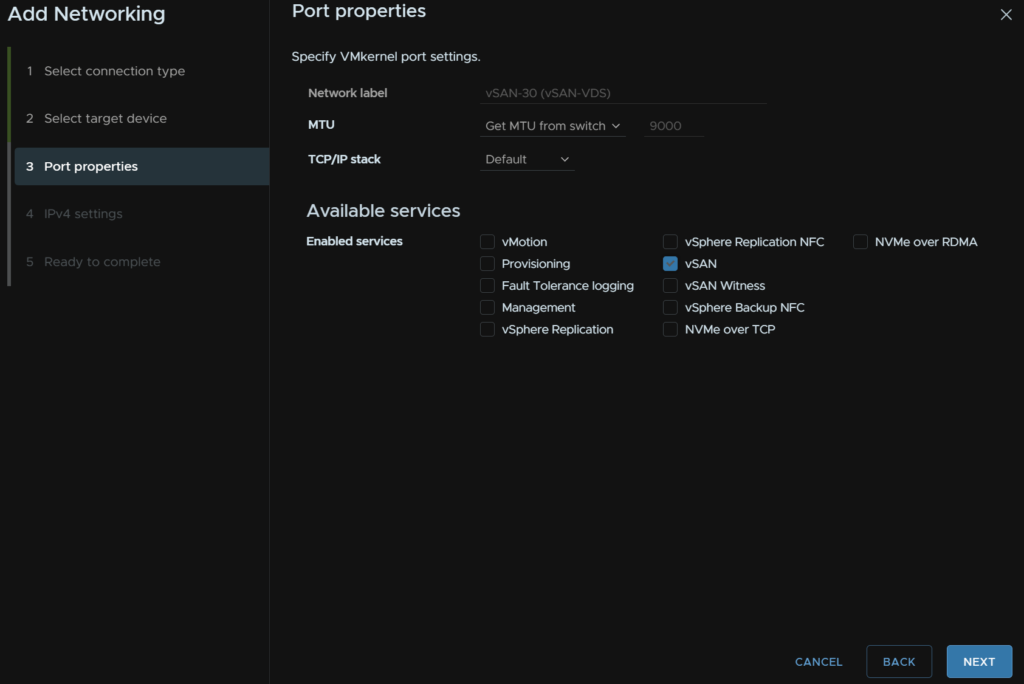

Lastly, for the VDS, it would be ideal to use an MTU of 9000, though you do want to be careful with this, as MTU changes can easily cause networking issues if not done properly

The important bit is that all ports, port channels and switches that connect the hosts should have an MTU of at least 9000. Switches normally max out at 9216, so I would set the ports and port channels to that

Then the VDS needs updating to that, right click the VDS, and head to Settings/Edit Settings

Then head to Advanced, set the MTU to 9000 and click ok
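Because MTU mismatches are easy to miss, it is worth testing the path end to end once the VMkernel adapters exist. This Python sketch works out the largest ping payload that fits in a given MTU, after the IPv4 and ICMP headers, and builds a matching vmkping command with the do not fragment flag set. The vmk1 name and target IP are hypothetical.

```python
IP_HEADER = 20   # bytes, IPv4 header without options
ICMP_HEADER = 8  # bytes


def ping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented packet."""
    return mtu - IP_HEADER - ICMP_HEADER


def path_supports(vds_mtu: int, physical_mtus) -> bool:
    """True when every physical port or port channel can carry the VDS MTU."""
    return all(mtu >= vds_mtu for mtu in physical_mtus)


def vmkping_command(vmk: str, target_ip: str, mtu: int) -> str:
    """Build a vmkping test: -I interface, -d do not fragment, -s payload size."""
    return f"vmkping -I {vmk} -d -s {ping_payload(mtu)} {target_ip}"


if __name__ == "__main__":
    print(path_supports(9000, [9216, 9216]))
    print(vmkping_command("vmk1", "192.168.30.12", 9000))
```

Run the printed command from an ESXi shell against another host’s vSAN address. If it fails at this size but works at the 1500 equivalent, something in the path is not passing jumbo frames.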

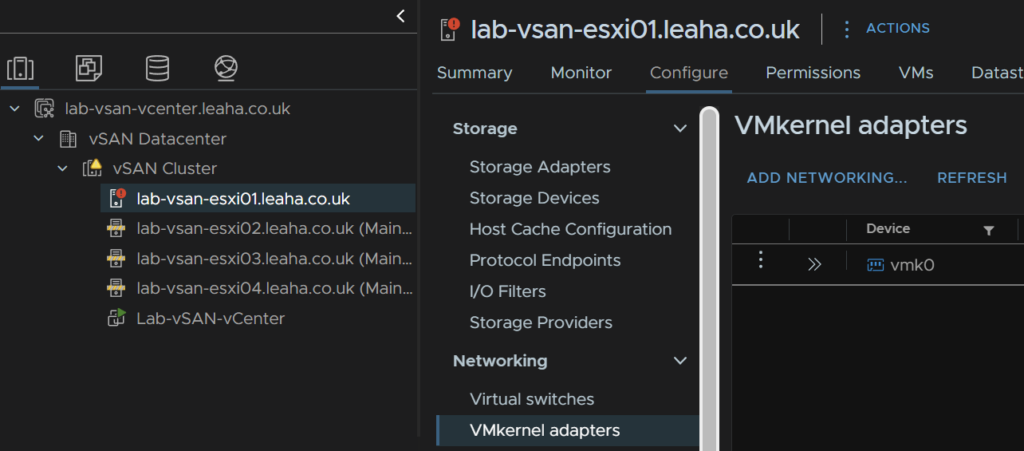

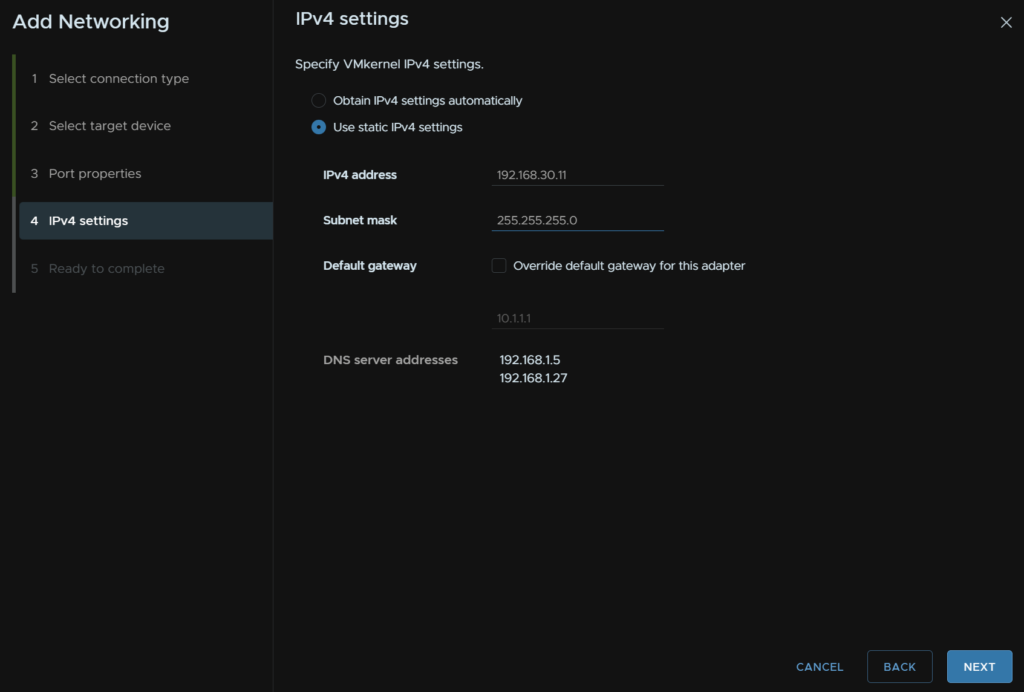

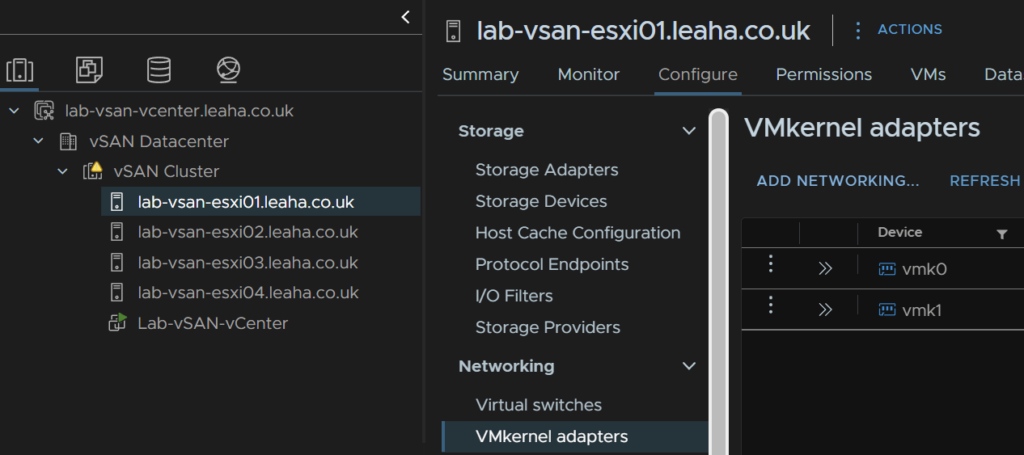

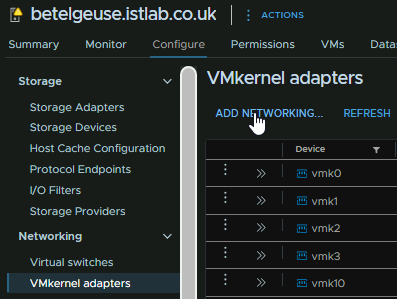

Now, we need a dedicated VMK for vSAN, click the host and head to Configure/Networking/VMkernel Adapters and click Add Networking

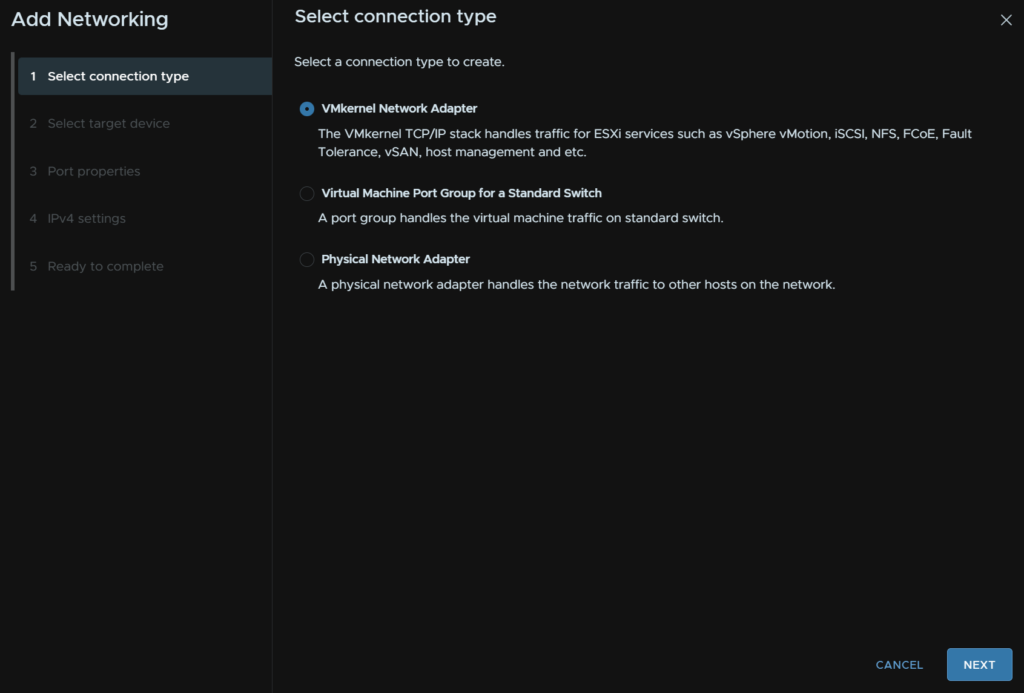

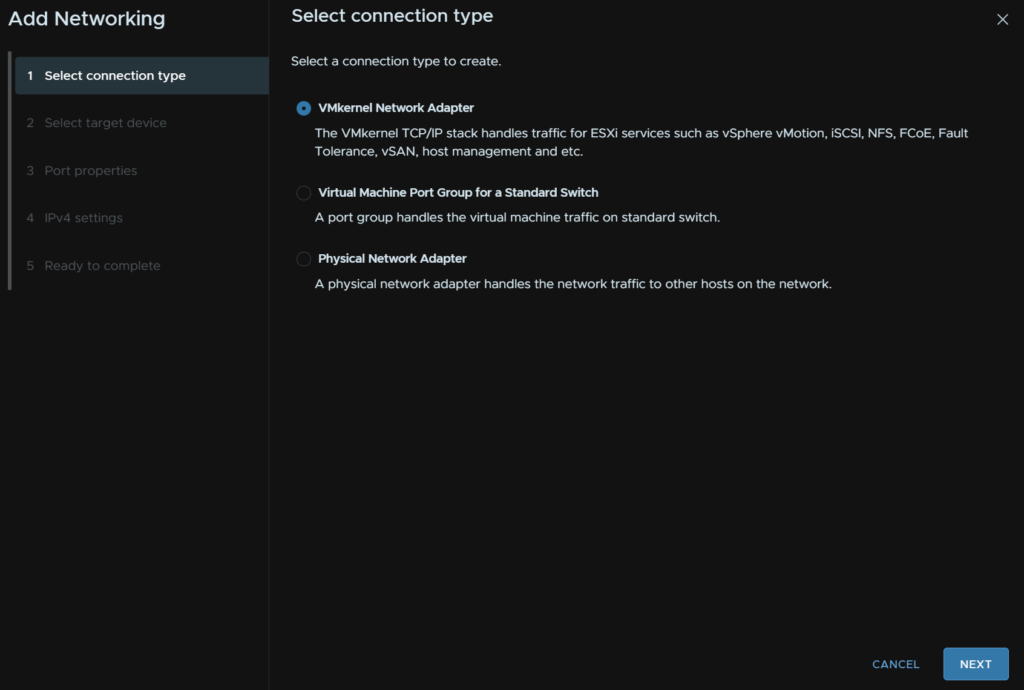

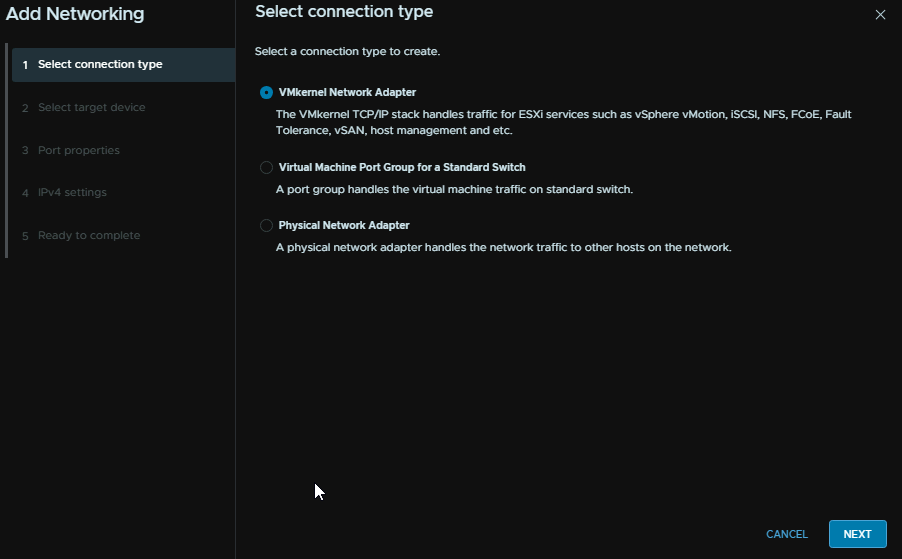

Select VMkernel Network Adapter and click Next

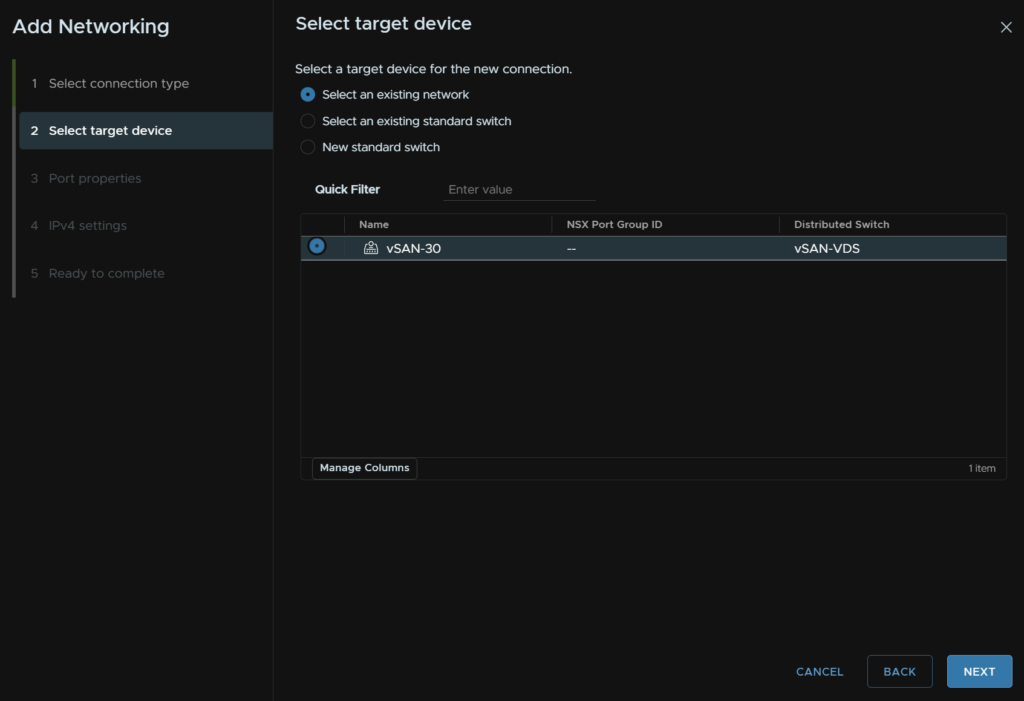

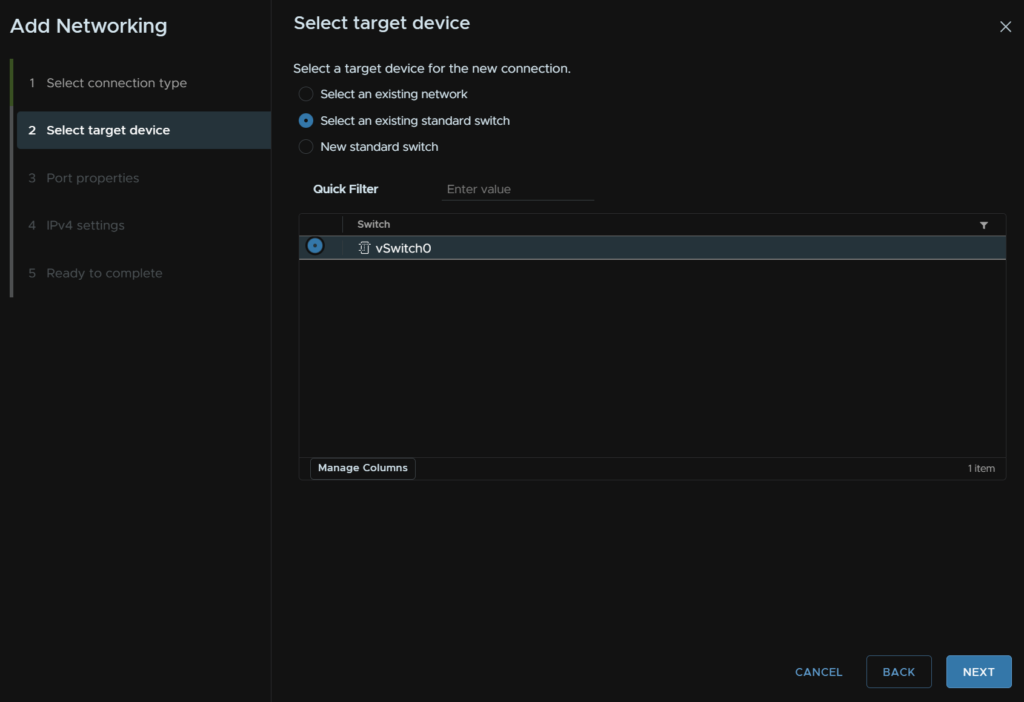

Select the vSAN port group and click Next

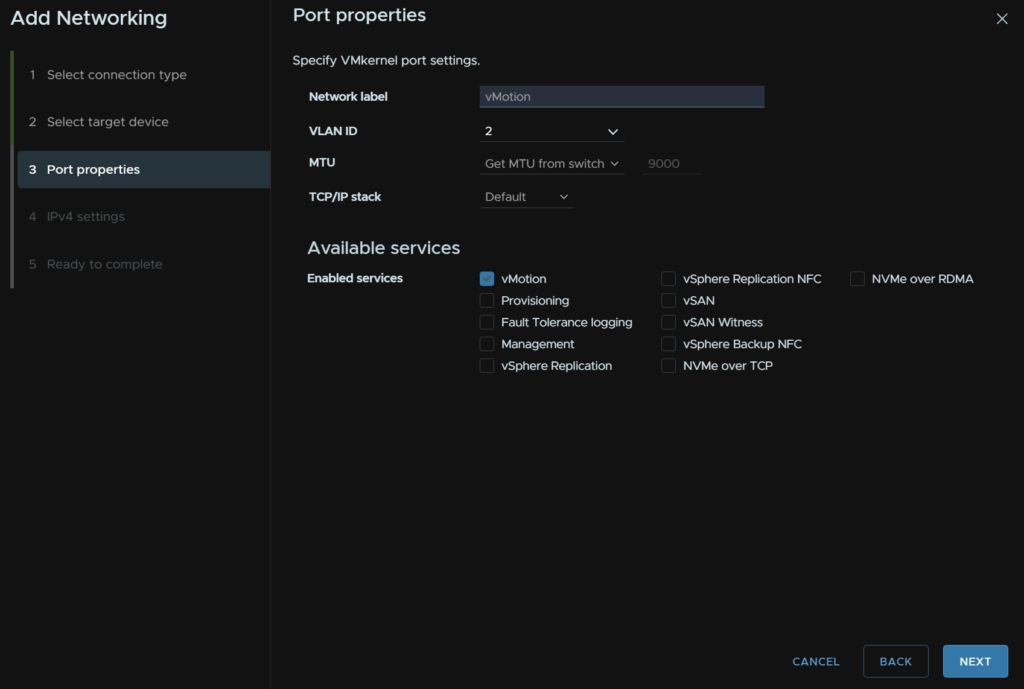

Under services, check vSAN and click Next

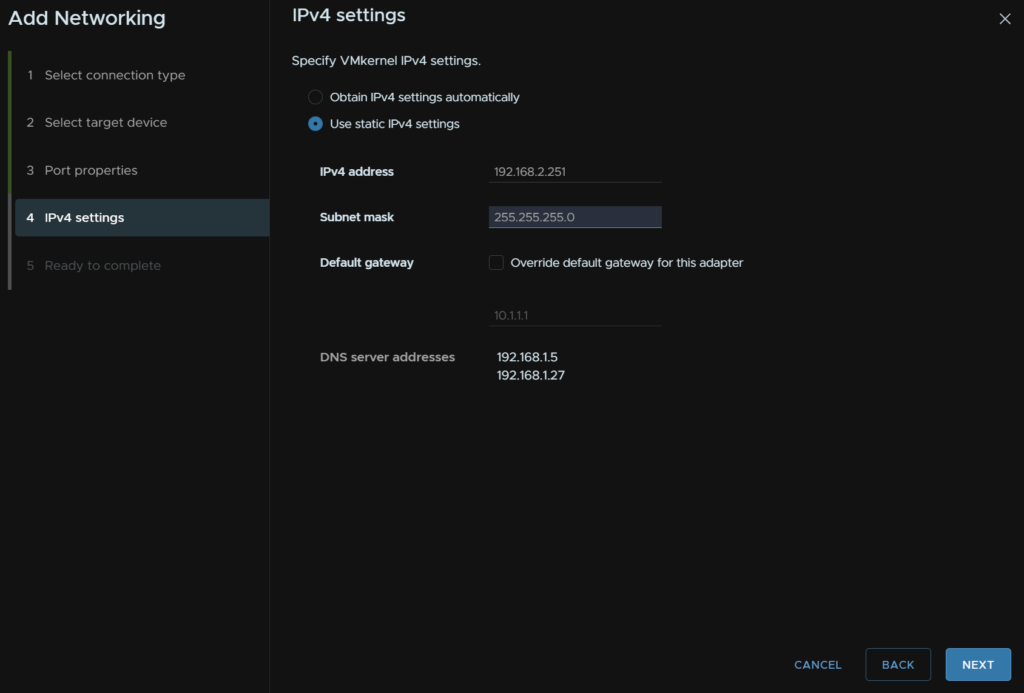

Add an IP Address and subnet mask for the VLAN you have for vSAN and click Next

Don’t worry about DNS or the gateway, as it’s a non routable network, this only needs to communicate with the other hosts at L2

Then click Finish, and repeat on the remaining hosts

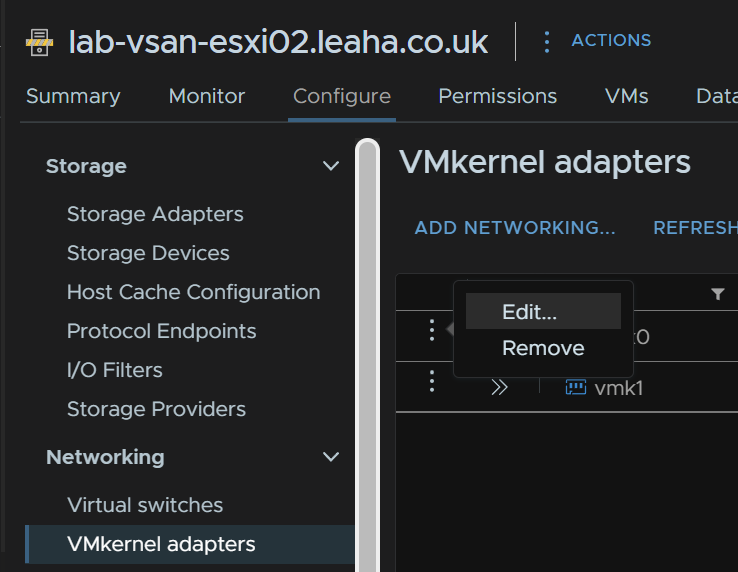

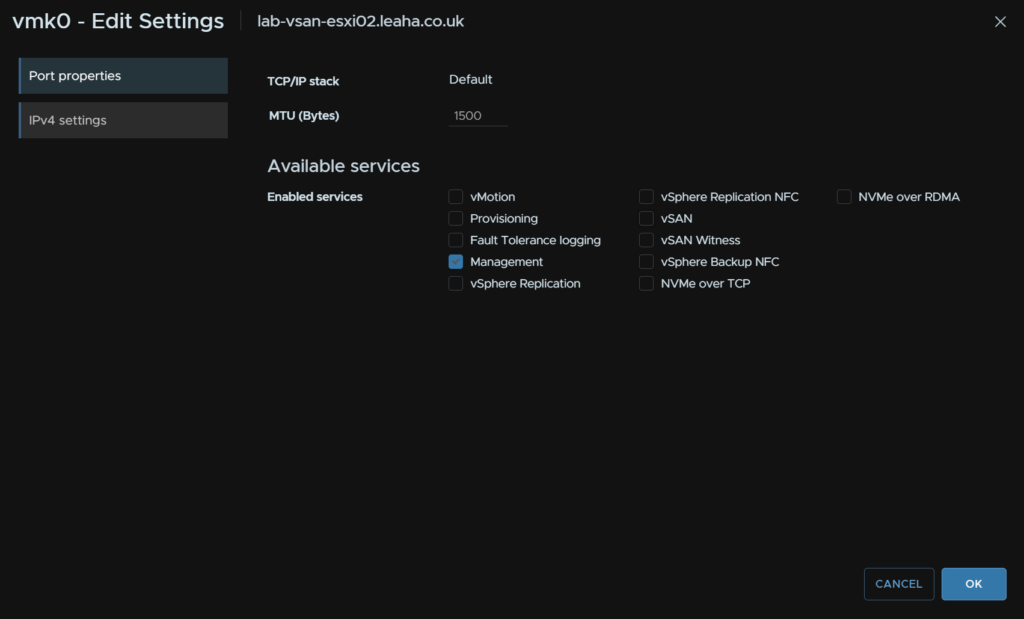

Now, on the three newly added hosts, vSphere will have configured the management VMkernel for vSAN and vMotion, which we don’t want

We can see this under enabled services

To remove these, click the three dots on vmk0 and click Edit

And remove vMotion and vSAN, then click ok

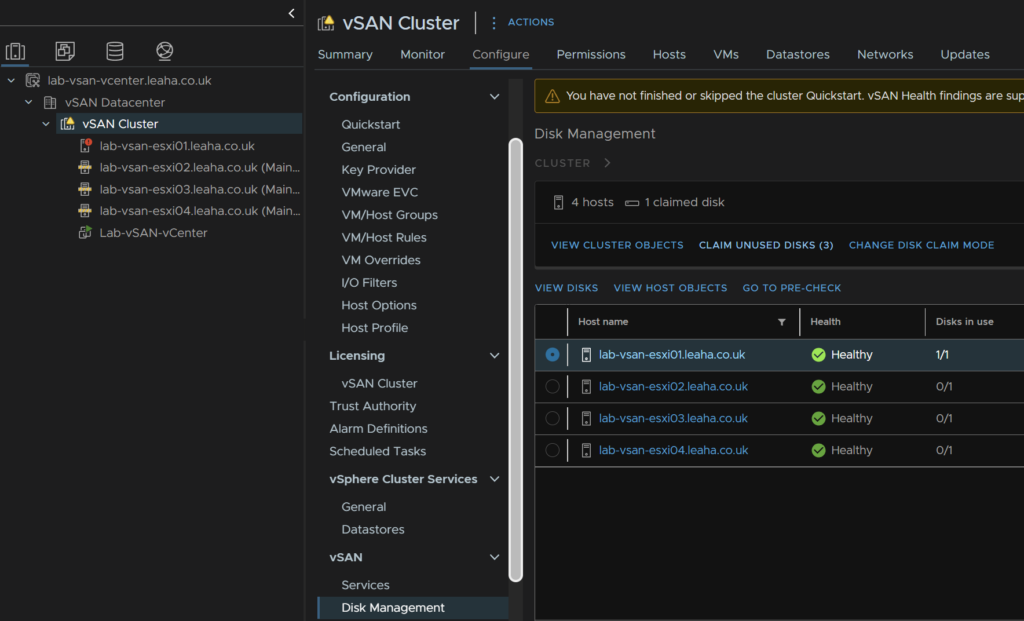

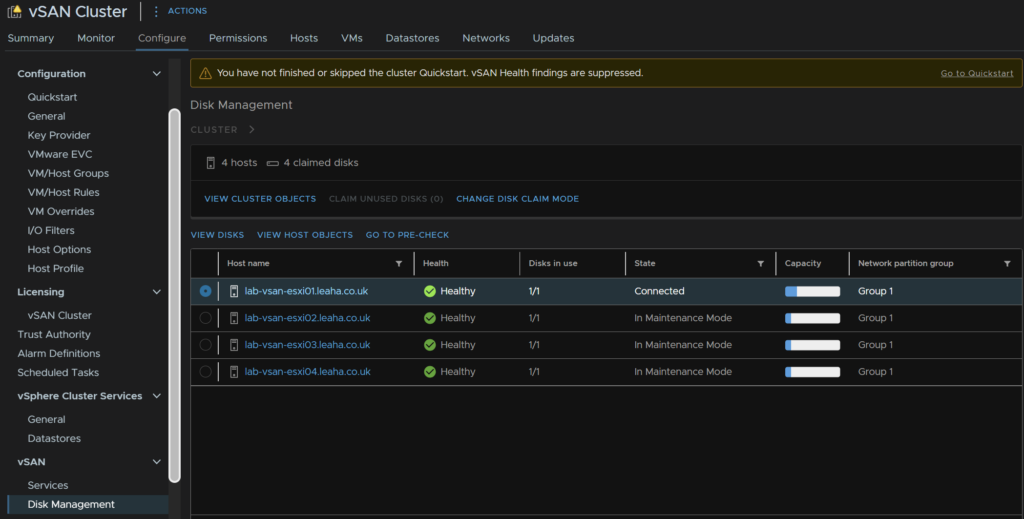

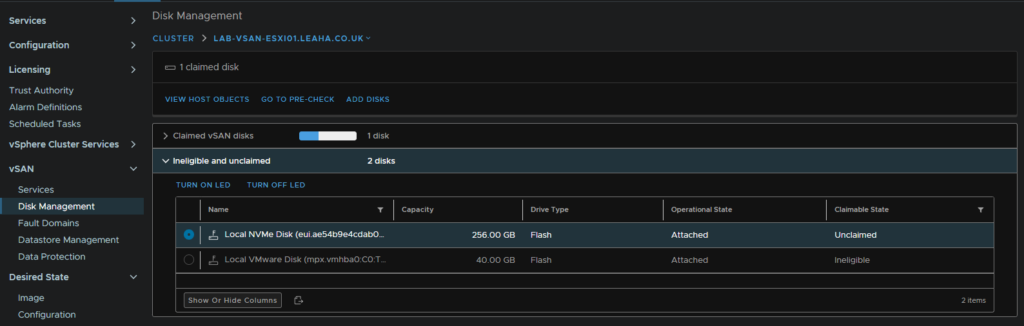

Now we need to add the remaining cluster disks to the vSAN pool

Select the cluster and head to Configure/vSAN/Disk Management then click Claim Unused Disks

Expand any drop downs and select all disks and click Create

As mine are virtual disks, they are incompatible, but for a production system, they should be on the HCL

Now we can see, after a minute or two, they have been added

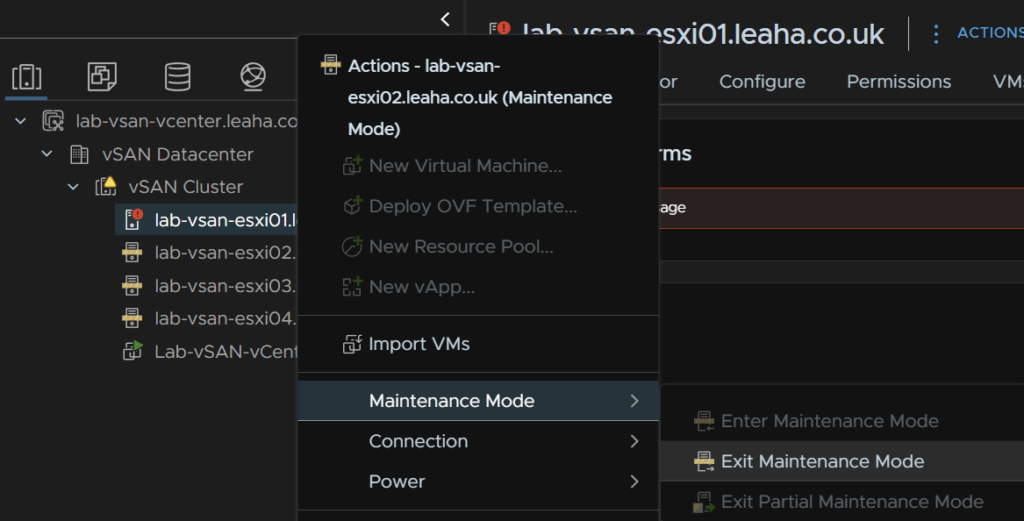

We can now remove the three newer hosts from maintenance mode by right clicking each host, then Maintenance Mode, and Exit Maintenance Mode

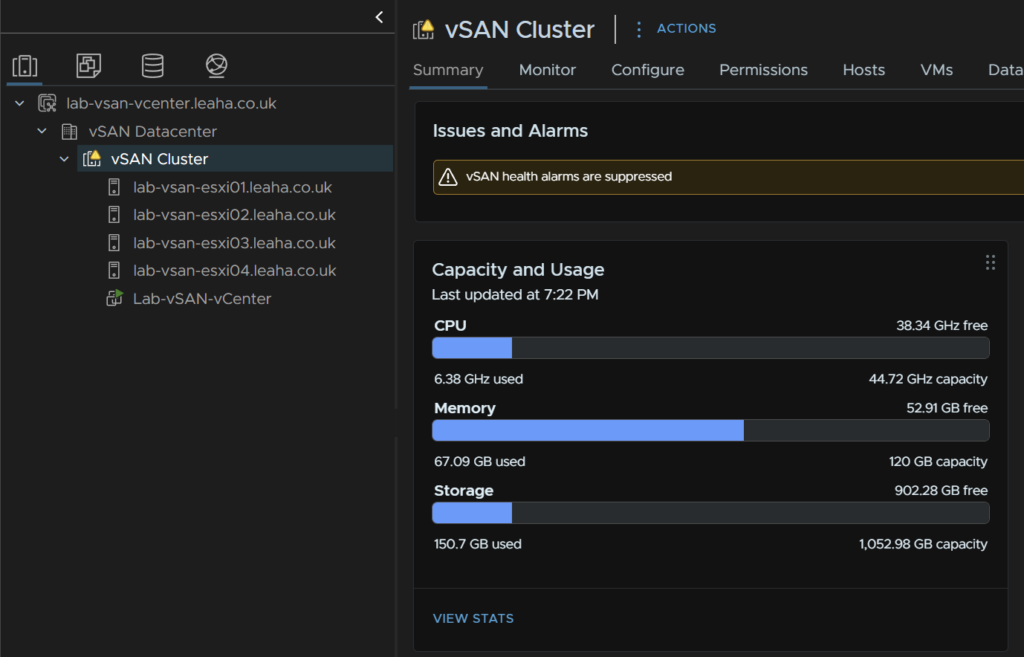

From the cluster view, we can see it’s added the storage, totalling all four hosts

1.2.6 – Setting Up vMotion

vMotion ideally wants to be on the Management vSwitch, assuming all NICs are 10Gb, and this should remain a standard switch

Click the host, and head to Configure/Networking/VMkernel Adapters then click Add Networking

Then select VMkernel Network Adapter and click Next

Click Select An Existing Switch and select vSwitch0, then click Next

Name it by filling out the network label, select the VLAN, this should be a dedicated non routable VLAN, and check vMotion

As these ports have the management set as a VLAN access, or native VLAN, we need to tag the vMotion network

Here, an MTU of 9000 is ideal, but your switches must be set for it, else it’s going to cause issues. If you are unsure, leave it at 1500, then click Next

Then add an IP and subnet for that VLAN, the gateway/DNS is also irrelevant here, it should be a non routable network, and click Next, then Finish

1.2.7 – Adding The VM Switch

For VMs we want to create another VDS, head to the networking tab, right click the Datacenter, click Distributed Switch/New Distributed Switch

Give it a name

Select a version, the same idea applies from the vSAN VDS

Pick the highest compatible with all hosts

Drop the uplinks to 2, keep I/O control enabled, and uncheck create default port group and click Next

Then click Finish

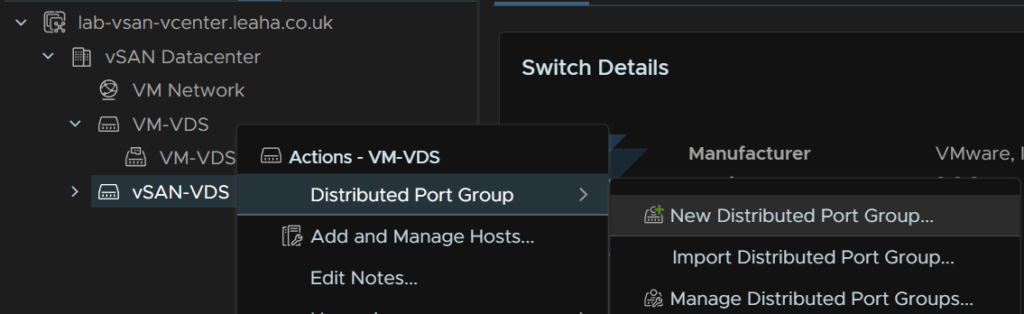

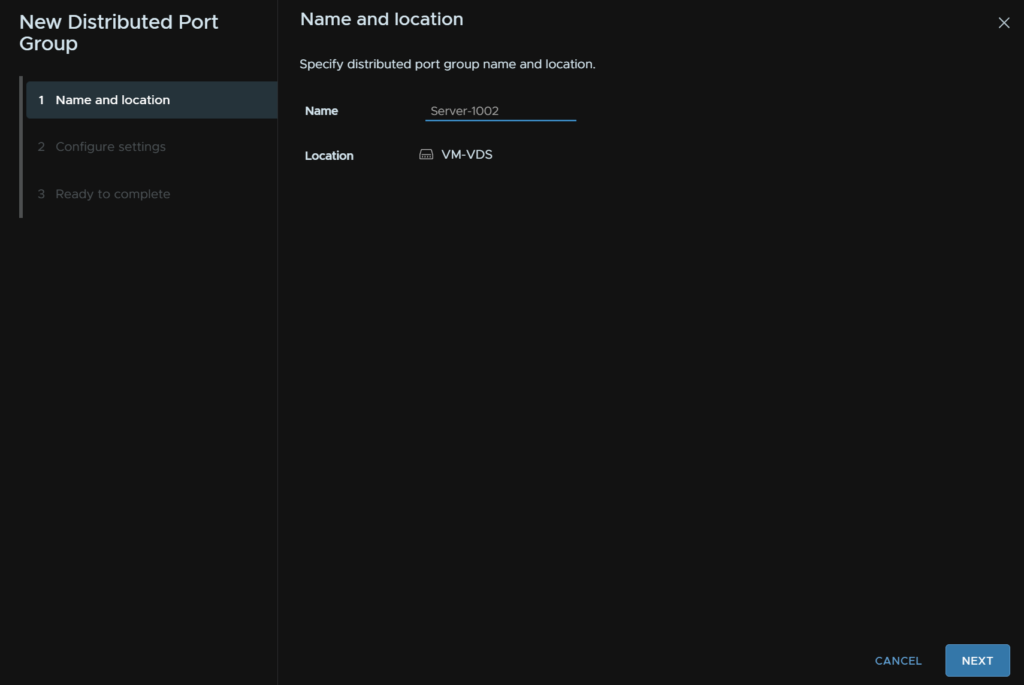

Then right click the VDS and click Distributed Port Group/New Distributed Port Group

Give it a name and click Next

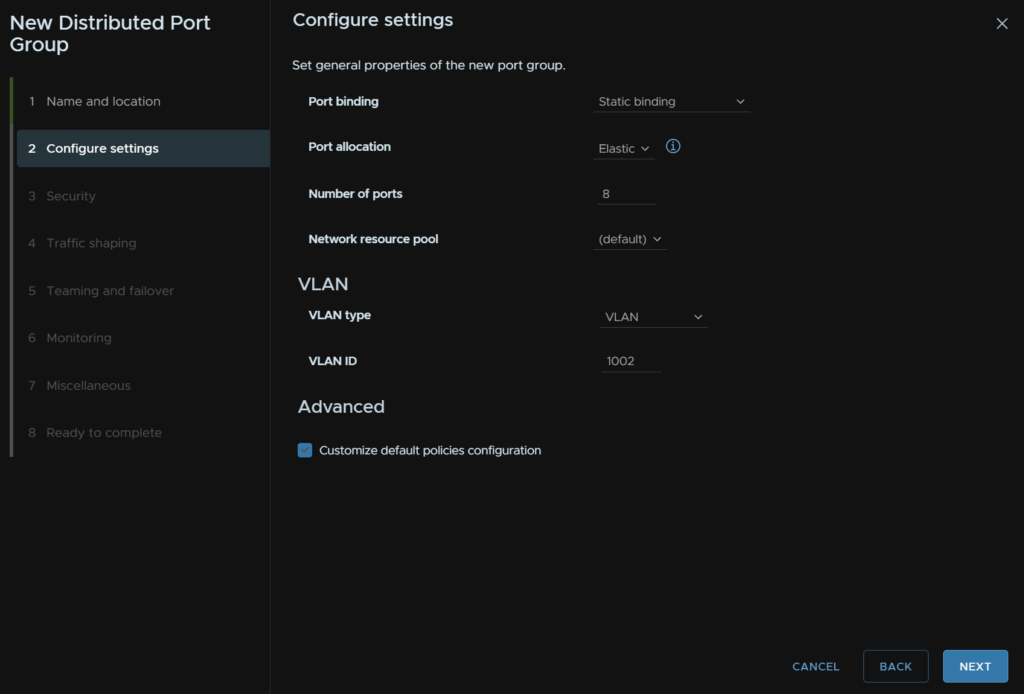

Keep the top four bits the same for port binding, allocation, number and resource pools

Under VLAN make sure the type is set to VLAN and the ID is what you want for this network and check the box to Customise Default Policies Configuration, then click Next

Like the rest of the switches and port groups, if you have Dell VLT, MC-LAG or HPE VSX you want to have Load Balancing under Teaming And Failover set to “Route Based On IP Hash”

Then click Next until it reaches the end and Finish

2 – Configuration

Now we have the basics deployed, we can look at some configuration within vSphere to help sort out credential management, cluster load balancing with DRS, and vSphere HA

Then we can look at some post deployment configuration for the vSAN cluster, and then at the additional services it offers

2.1 – vSphere

2.1.1 – Licensing Your Servers

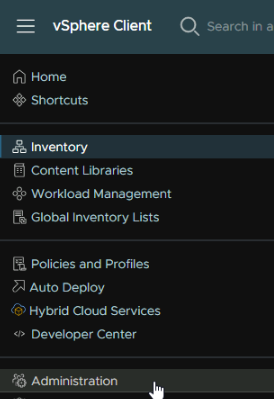

Now we have everything setup, we want to license our hosts, to do this, click the three lines in the top left and click Administration

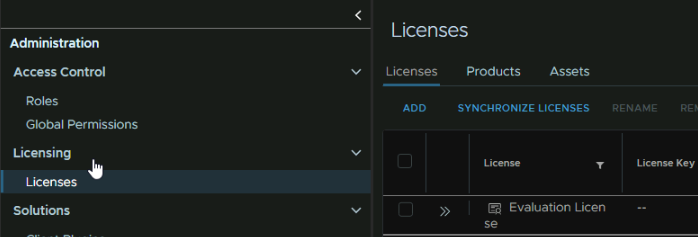

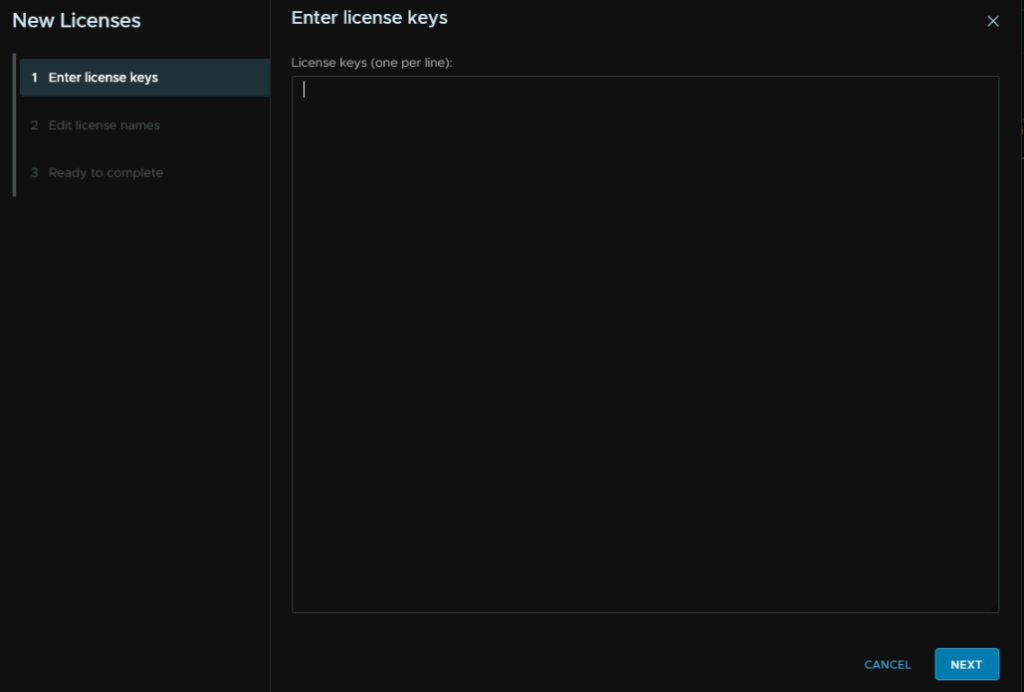

Head to Licensing/Licenses and click Add

Add the keys for vCenter, ESXi and vSAN, one per line, or if you have a solution license, add that on one line and click Next

Then name the licenses then click Next and Finish

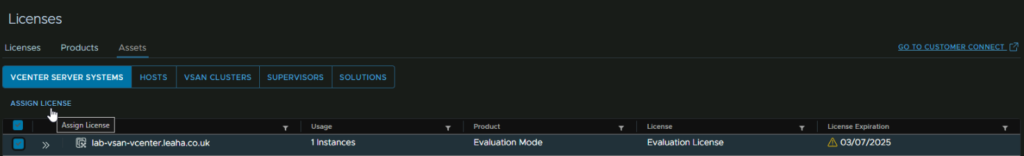

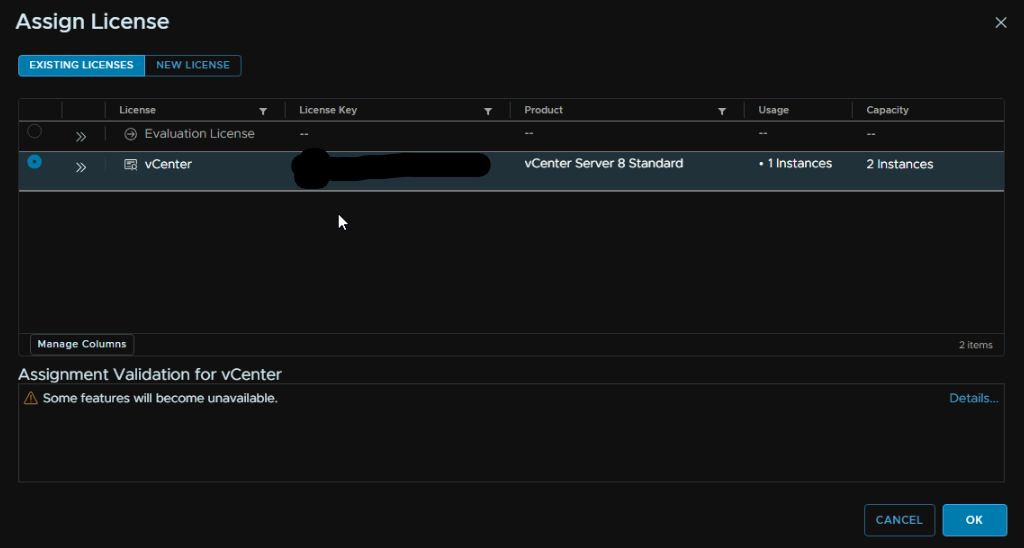

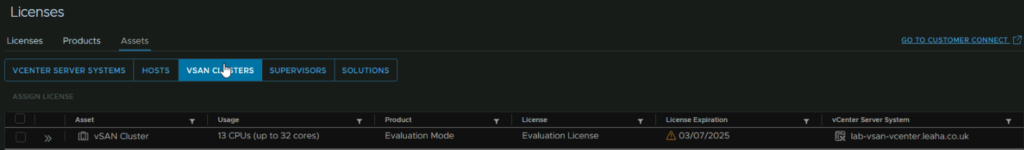

Now we can apply these licenses. Head to Assets, still under Licensing, click vCenter Server Systems, select the vCenter, and click Assign License

Select your license and Click ok

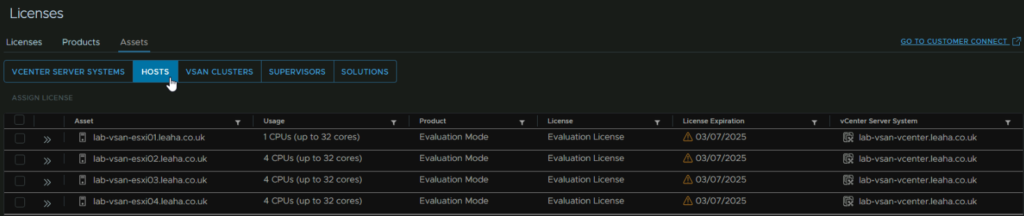

Repeat under hosts for the ESXi license

And again for the vSAN Cluster

2.1.2 – Changing Account Expiry

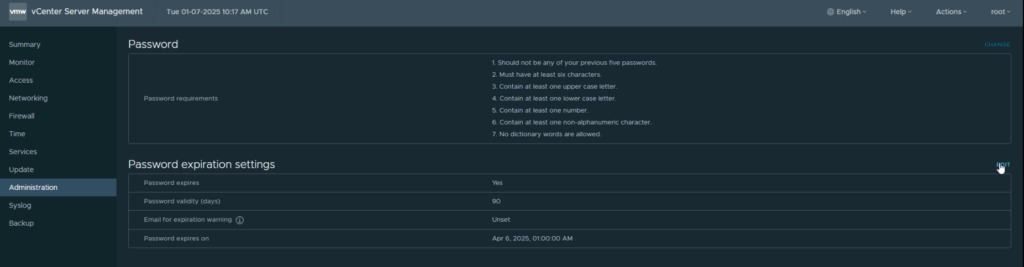

By default, the vCenter root account password will expire every 90 days. This should have been set to something complex and random when we deployed the vCenter, so it would be better to disable the expiry, as if the account is needed you don’t want to be changing the password first

To do this head to the appliance management page on

https://fqdn:5480

Login with the root account

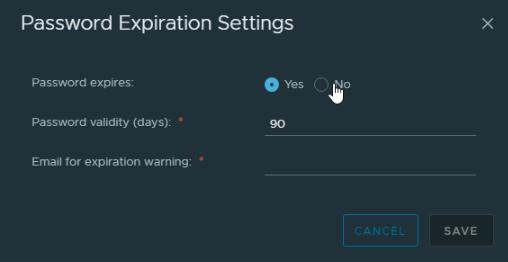

Head to Administration and click Edit

Here you can set the password not to expire, or change the expiry date from the default 90 days

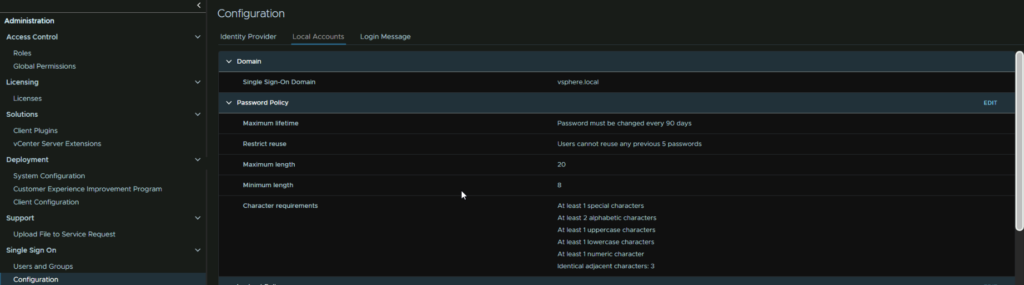

The same rule applies to the SSO accounts, like the administrator@vsphere.local account. We can change this from the main UI, not the admin portal, by clicking the three lines in the top left and then Administration

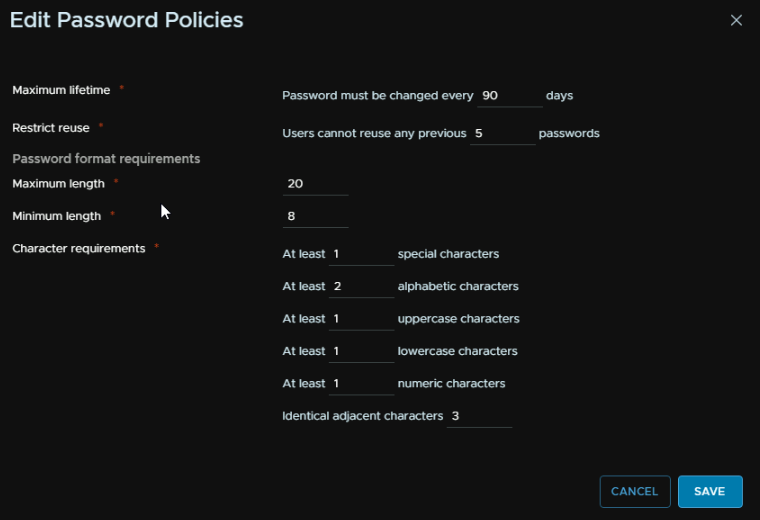

Then head to Single Sign On/Configuration/Local Accounts, from there you can click Edit under password policy

This then comes with several options you can tweak to suit your environment

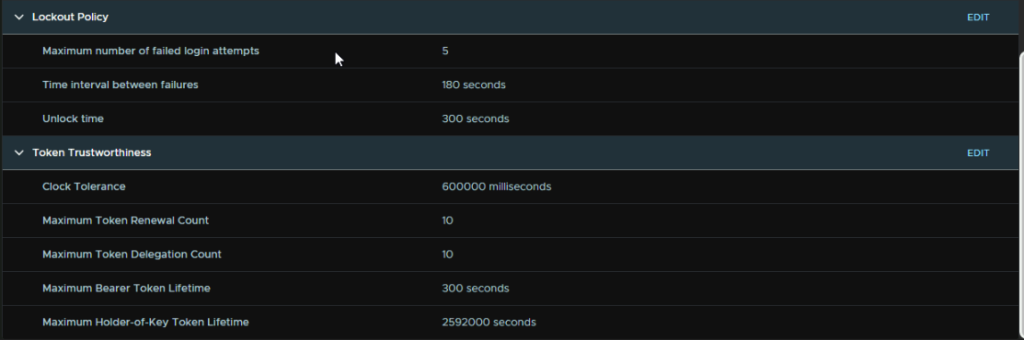

You can also configure the lockout policy

It’s worth noting that while you can add AD into vCenter, I would strongly recommend against it. Your admins’ domain accounts will have admin permissions to areas of the AD environment as well as vSphere, and this poses a massive security risk. If one of those accounts gets breached, an attacker has a lot of power over the AD environment and, if the account is a vSphere admin, full access to the vSphere environment without having to get access to another account

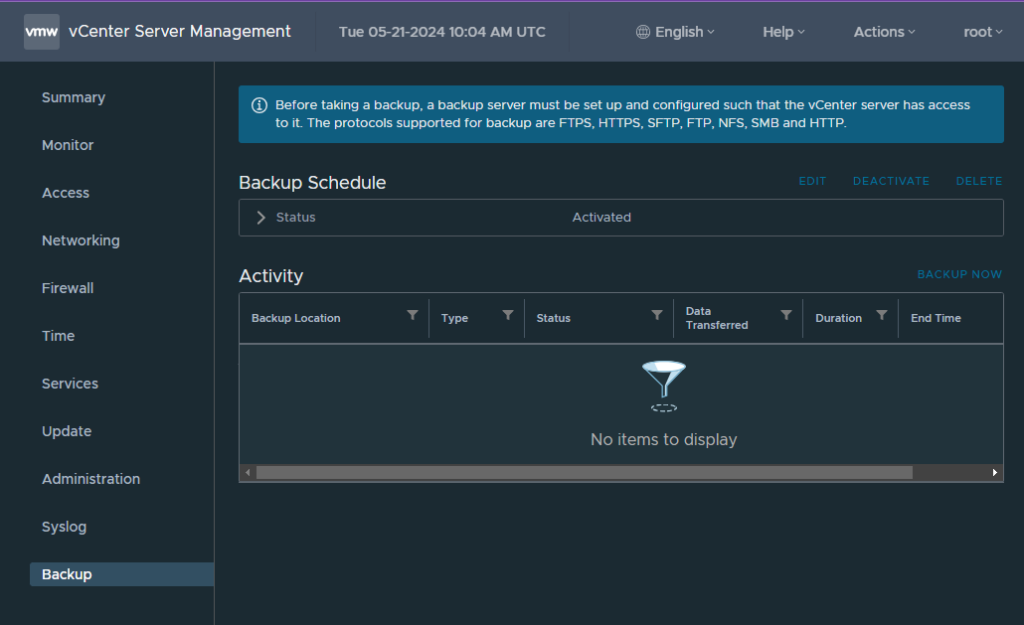

2.1.3 – vCenter Backups

vCenter backups are an absolute must, especially since you have vSAN. Should anything happen to the vCenter, if this is configured you will be able to restore it and get systems up with little difficulty

Backups via a 3rd party provider, e.g. backing the VM up with Veeam/Rubrik, are not the recommended way. They take up significantly more space and do not guarantee a working restore, as they are not aware of the database in the back end, meaning the backup likely won’t be application consistent. Lastly, these types of backups will not fix all issues, a prime example being certificate expiry based issues. As the config backup restores the config into a new VM, it will fix these types of issues

The best practices method to backup a vCenter is to use the config backups in VAMI

To access VAMI go to the following link, substituting fqdn for your vCenter’s FQDN

https://fqdn:5480

You can log in here with the local root account, or an SSO admin login

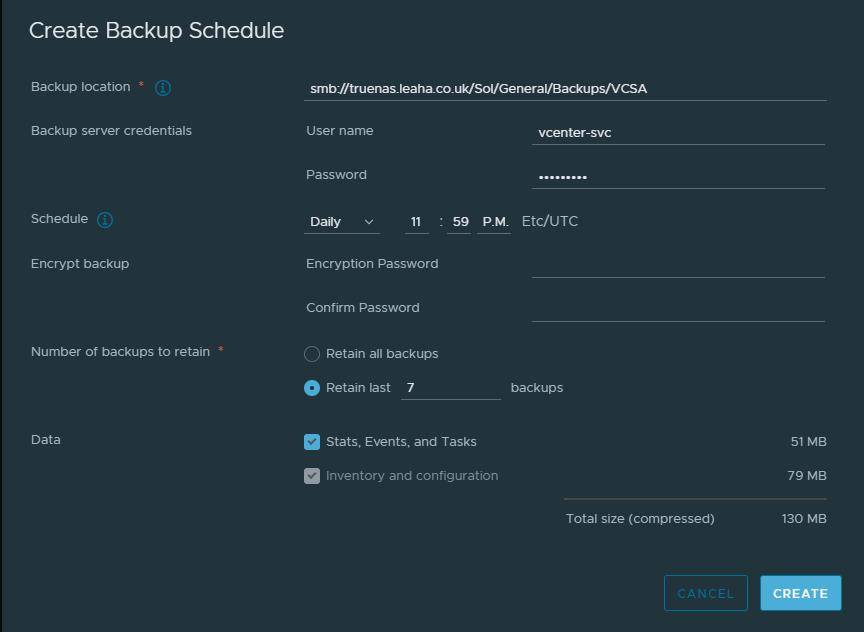

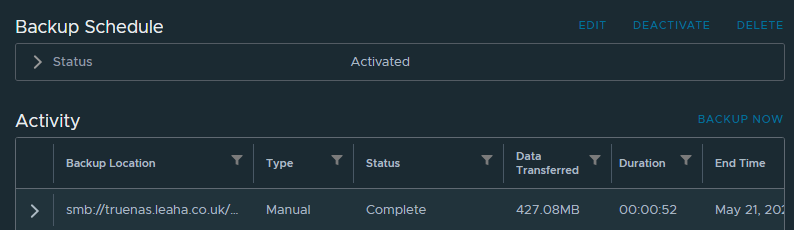

Now head to the backups tab at the bottom on the left, from here you can click ‘Configure’ on the right to setup a schedule

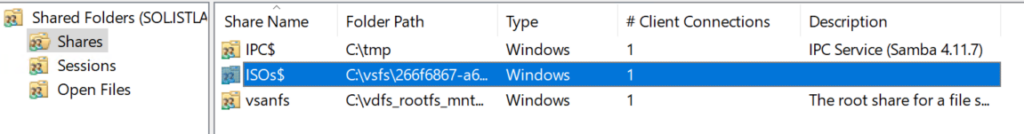

You’ll need a valid backup location to store them, an SMB, NFS or SFTP server works best, but you can also use HTTPS and FTPS

The backup schedule will give you a format for the backup location

We want to set up our location. Here I am using an SMB server, but for NFS/SFTP the process is the same, you just change the protocol at the start to NFS or SFTP respectively
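As an illustration of how the location string is put together, here is a minimal Python sketch. It assumes the format is protocol://server/path, so check it against the format the schedule dialog shows you. The server name and folder are placeholders.

```python
# Protocols mentioned in this article, the dialog may list others.
SUPPORTED = {"smb", "nfs", "sftp", "ftp", "ftps", "https"}


def backup_location(protocol: str, server: str, path: str, port=None) -> str:
    """Build a backup location in the assumed protocol://server[:port]/path form."""
    proto = protocol.lower()
    if proto not in SUPPORTED:
        raise ValueError(f"Unsupported protocol: {protocol}")
    host = f"{server}:{port}" if port else server
    return f"{proto}://{host}/{path.strip('/')}"


if __name__ == "__main__":
    # Swapping the first argument is all that changes between protocols.
    print(backup_location("smb", "files.lab.example", "backups/vcenter"))
    print(backup_location("sftp", "files.lab.example", "backups/vcenter"))
```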

We can also add in an account with read/write permissions to the share. I recommend a service account with a password that won’t expire, as if it expires and you forget, the backups will stop working

You’ll want it to run daily, ideally if you need to restore you don’t want a backup older than 24 hours

And retain the last 7 backups, this will remove older backups and maintain itself

Then hit create

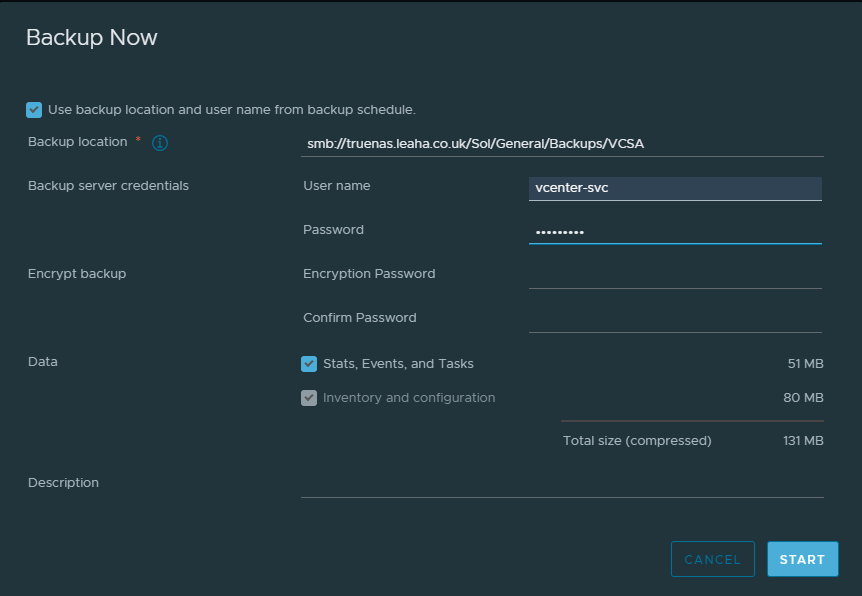

To test this works, run a manual backup by clicking backup now on the right

Click use backup location and username at the top of the pop up, this will pull the settings from the schedule, you’ll just need to enter the account password

Then click start

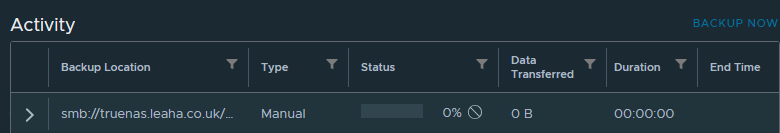

That will create a manual backup task

If all is working, this should complete with no errors

Now your vCenter is backed up and will automatically back itself up every day for you, so if something goes wrong you have a way to restore

2.1.4 – Cluster DRS/vSphere HA

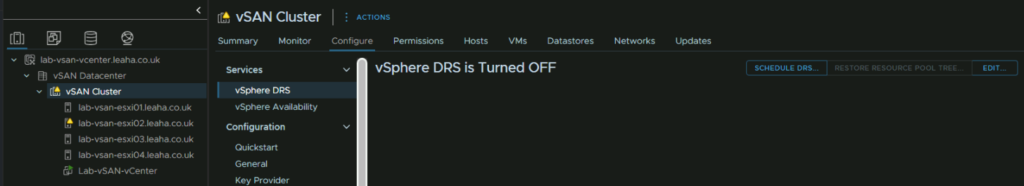

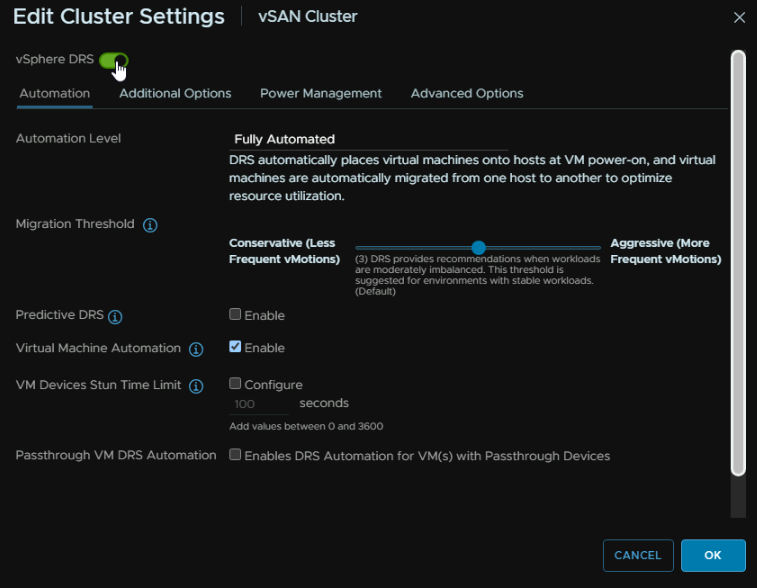

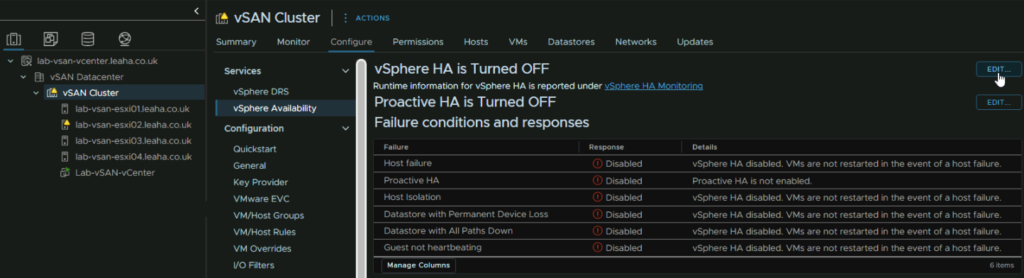

What we now need for our cluster is the load balancing of resources across the cluster, and automatic restarts of VMs if a host dies or if a VM stops responding, as detected via VMware Tools

DRS is for the cluster load balancing. It will ensure VMs are on the hosts where they can best get the resources they need, and it will also move VMs to other hosts when one is put into maintenance mode

To enable this, click the cluster and head to Configure/Services/vSphere DRS and click Edit on the right

Click the enable toggle at the top and leave the rest as is and click ok

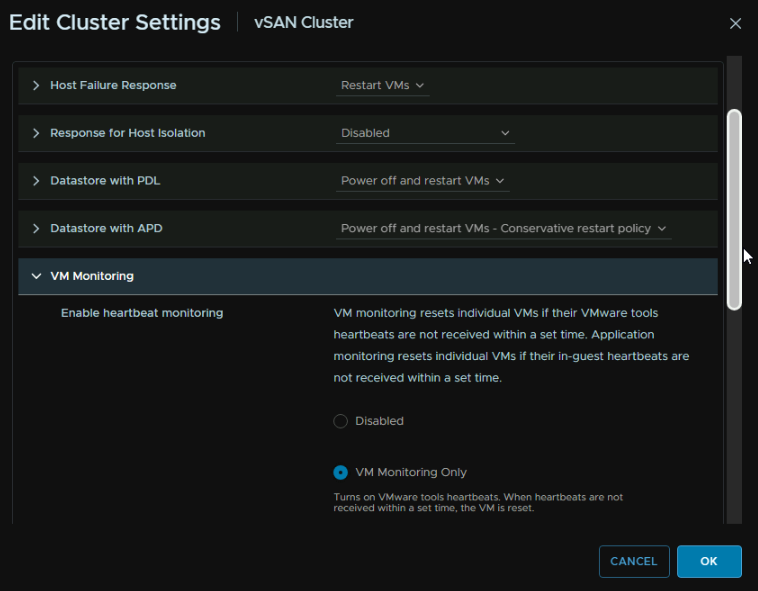

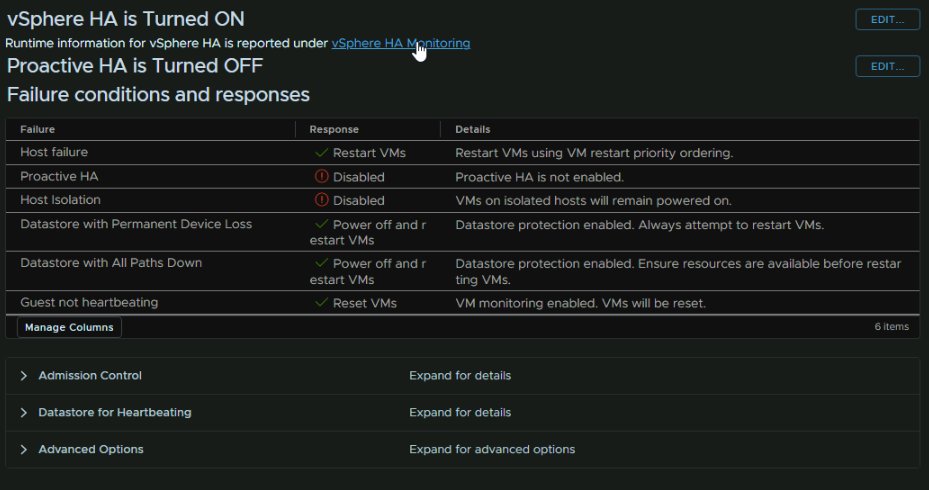

For vSphere HA click the Cluster and head to Configure/Services/vSphere Availability and click Edit in the top right

Click the toggle at the top to enable it

Expand VM Monitoring and click the radio button for VM monitoring Only, then click ok

It should now look like this

2.2 – vSAN

2.2.1 – vSAN Alarms

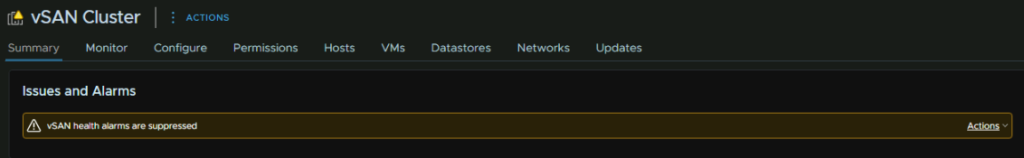

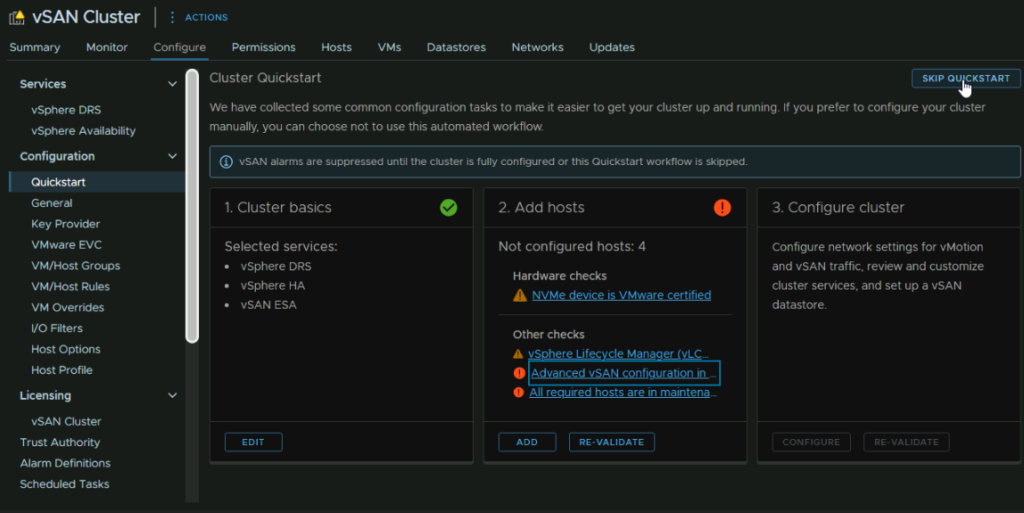

By default, the vSAN health alarms are suppressed, and you will see a warning for it on the cluster

This is due to the QuickStart not being completed, but we can sort everything manually. I find QuickStart can be a little difficult to get working, doing it manually gives a slightly better result, and the workflows it would have you do, we have already done

For example, the screenshot below shows the hosts as not configured when they are

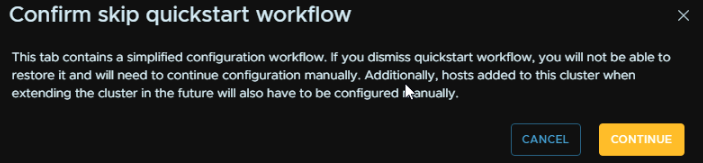

What we want to do is click the cluster, and go to Configure/Configuration/QuickStart and click Skip QuickStart in the top right

Then click Continue

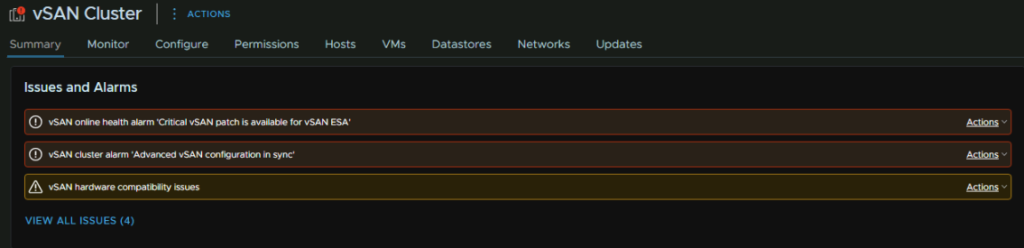

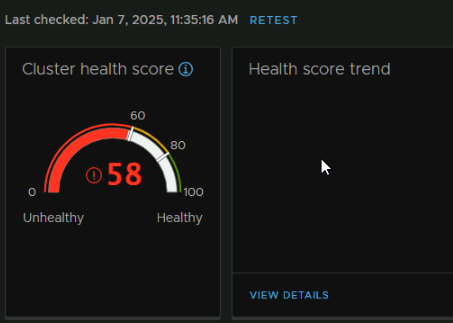

Now the health alarms are no longer suppressed, we can see the bits we need to address

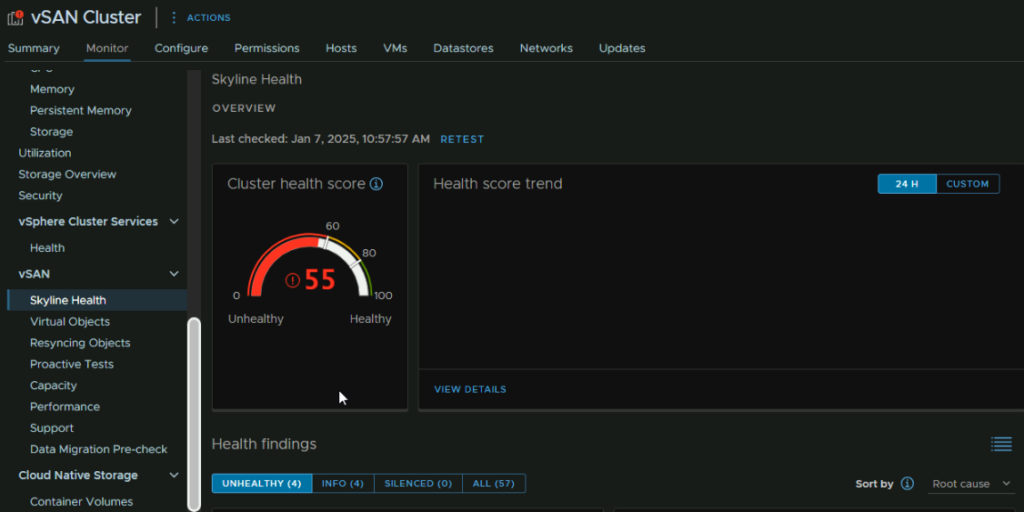

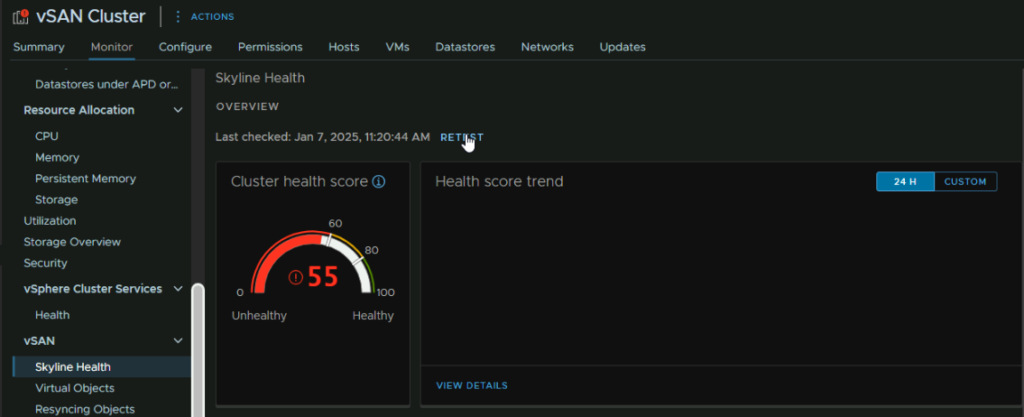

To check these, click the cluster and go to Monitor/vSAN/Skyline Health

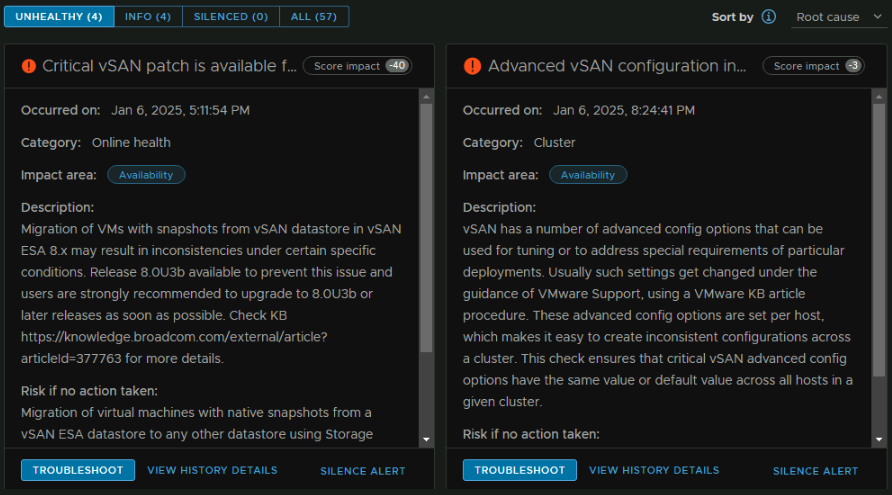

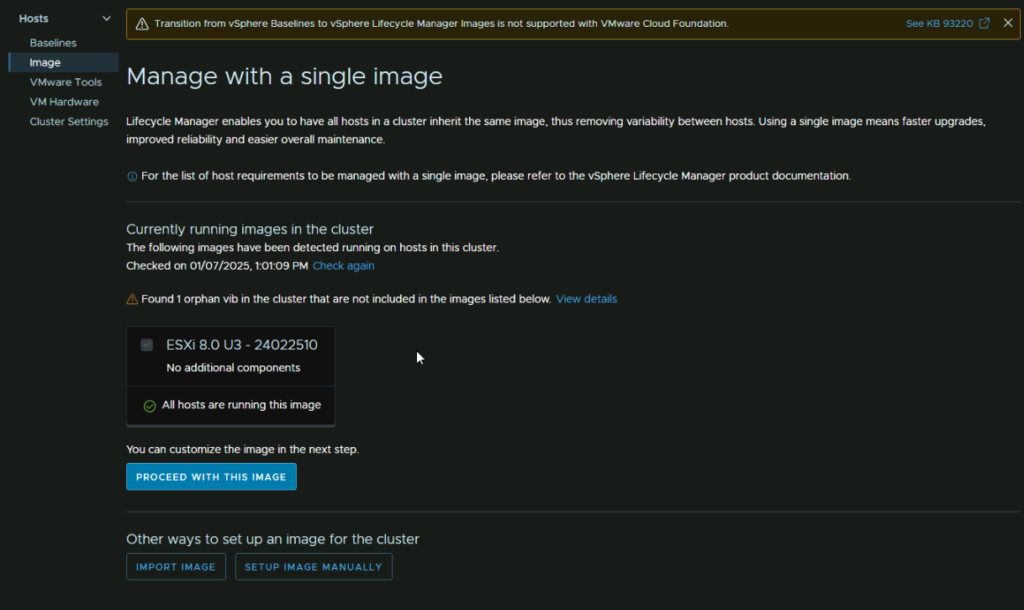

We can see the primary reason the health is so low is my lab running an older version, 40 of the 45 points lost are from a patch to ESXi needing to be applied, though vCenter would need patching first

For the bit on the right we can click Troubleshoot to see what’s up and how to fix it

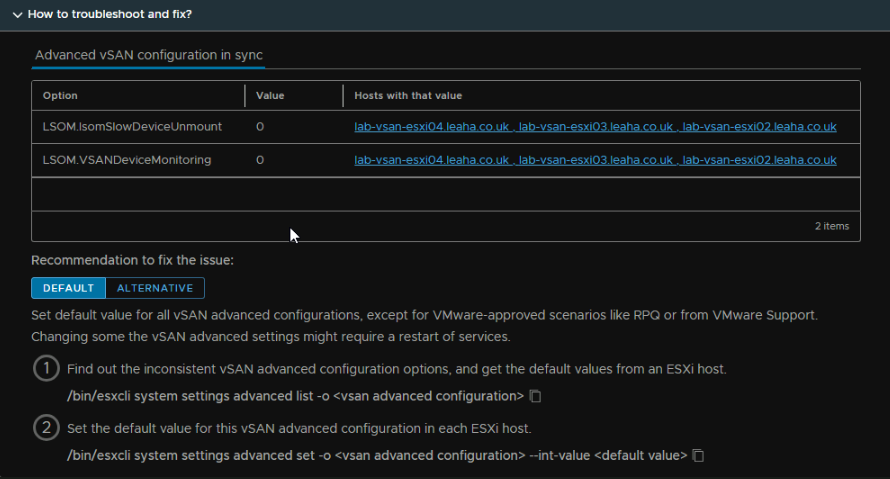

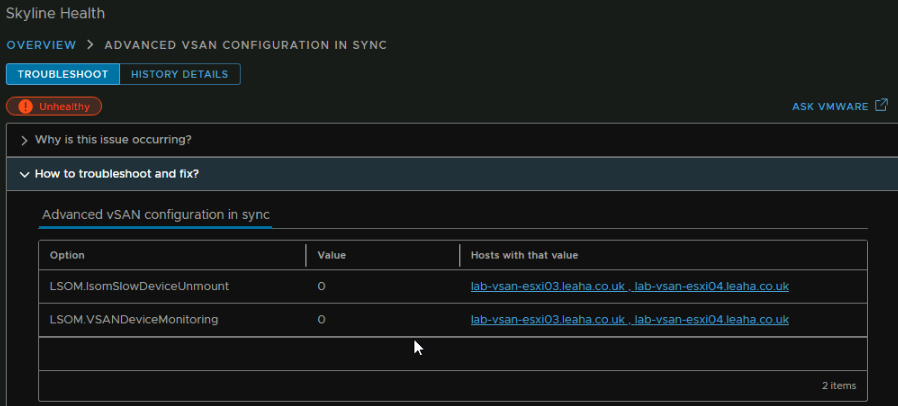

Here it says some advanced configuration isn’t set for the three additional hosts

The command shown here is a bit of a red herring and, oddly, doesn’t actually work

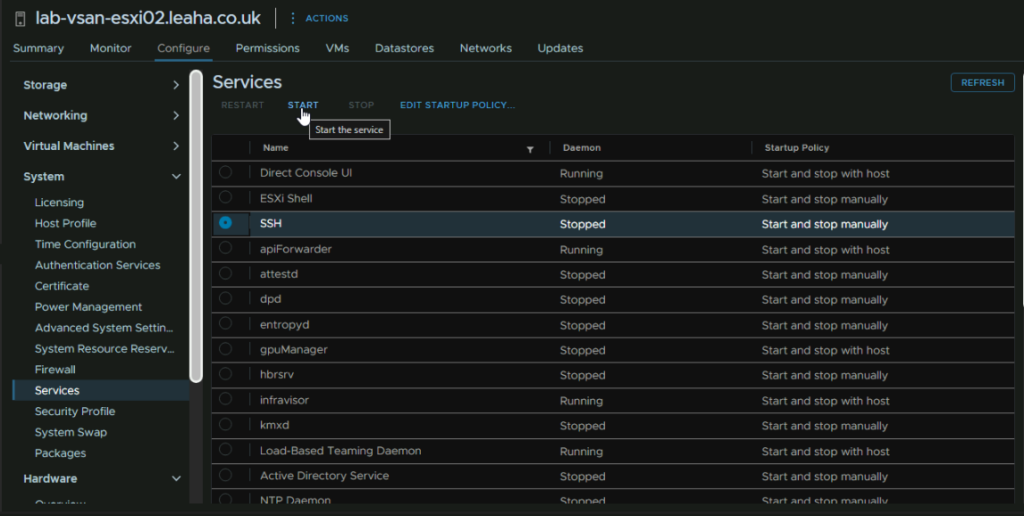

The default value is 1, and this needs setting on the three hosts we added, to do this we need the CLI, and we need to enable SSH

Click the host, and head to Configure/System/Services, then click the radio button on SSH and click Start

ssh root@hostname

Then run

esxcli system settings advanced set -o /LSOM/lsomSlowDeviceUnmount -i 1

And run

esxcli system settings advanced set -o /LSOM/VSANDeviceMonitoring -i 1

To test this worked, I did this on Host 2, we can click the Cluster, and head to Monitor/vSAN/Skyline Health and click Retest

Clicking Troubleshoot on the same error shows it’s gone on Host 2, so this works, and we can repeat the steps on the remaining hosts

After that and another retest, our score has gone up by three points
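With more than a couple of hosts, typing these by hand gets tedious. As a rough sketch only, the Python below builds the same two esxcli commands from above as ssh one-liners for a list of hosts, so you can review them before running anything. The host names are made-up placeholders, nothing is executed, and it assumes SSH is already enabled on each host

```python
# Advanced options and value taken from the esxcli commands above
LSOM_OPTIONS = ["/LSOM/lsomSlowDeviceUnmount", "/LSOM/VSANDeviceMonitoring"]
VALUE = 1


def build_commands(hosts, options=LSOM_OPTIONS, value=VALUE):
    """Return one ssh one-liner per host and option, nothing is executed."""
    commands = []
    for host in hosts:
        for option in options:
            esxcli = f"esxcli system settings advanced set -o {option} -i {value}"
            commands.append(f'ssh root@{host} "{esxcli}"')
    return commands


if __name__ == "__main__":
    # Hypothetical host names, swap in your own
    lab_hosts = ["esxi-02.lab.example", "esxi-03.lab.example", "esxi-04.lab.example"]
    for line in build_commands(lab_hosts):
        print(line)
```

Printing rather than running keeps this safe to try, you can paste the lines into a terminal one host at a time and retest Skyline Health in between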

I also have an alert for the NVMe devices being uncertified, I will silence it, as it’s a lab, but a production setup should not have this

The big bit we want to deal with is the patch alert impacting our score by 40 points

2.2.2 – vSAN Cluster Image

The main error in the vSAN health around the patch version brings us nicely into setting up a cluster image and patching ESXi in our vSAN cluster

Of course, always ensure you patch vCenter before patching ESXi, and look at patching your host firmware too, ensuring any disk firmware is explicitly listed as compatible in the VMware HCL here, but as Broadcom only support vSAN Ready nodes for the ESA architecture you should be fine. More information on patching server firmware and vCenter can be found here

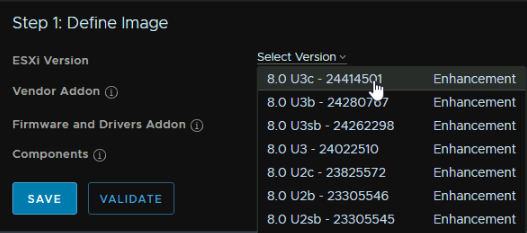

To set up the cluster image to prepare us for patching, we need to click the cluster, then head to Updates/Hosts/Image and click Setup Image Manually at the bottom

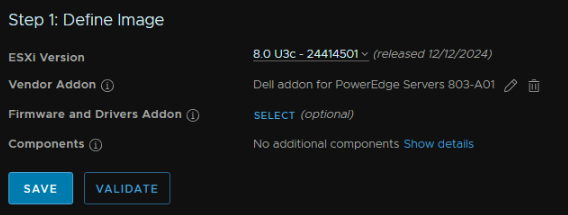

Click the drop down under ESXi version and select the latest, which for me was ESXi 8U3c

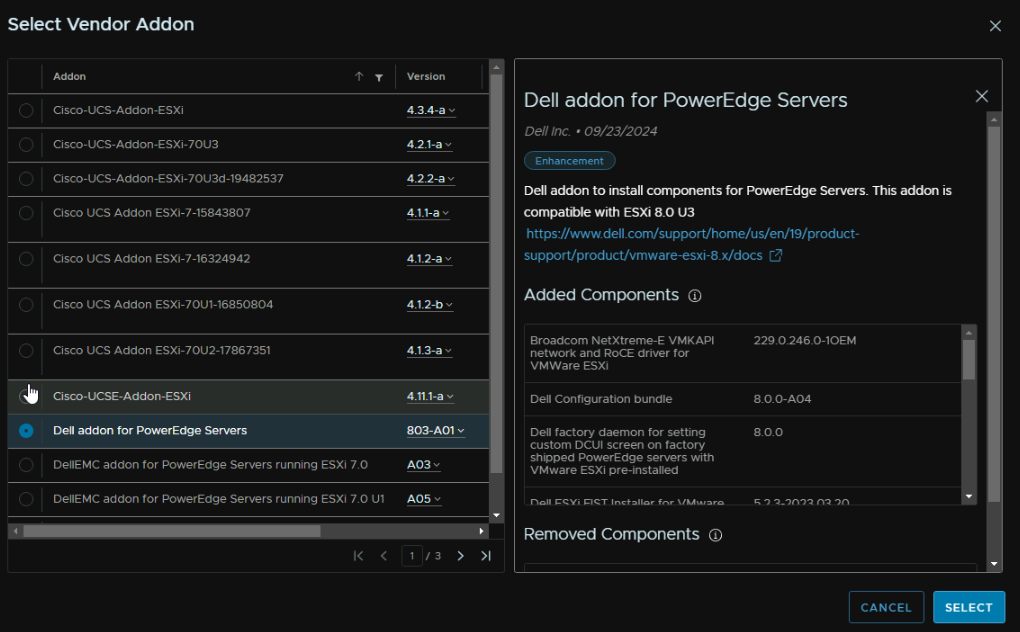

Click Select on Vendor Addon

And select the addon for whatever vendor you have, for example, if you have Dell PowerEdge servers you would want this, I’ll add this for demo purposes in this lab, as adding it to the virtual ESXi hosts isn’t going to cause an issue

And the last two are if you have any special components, this can include the iDRAC service module for ESXi on Dell, or GPU drivers for Nvidia cards, but for now we will leave this

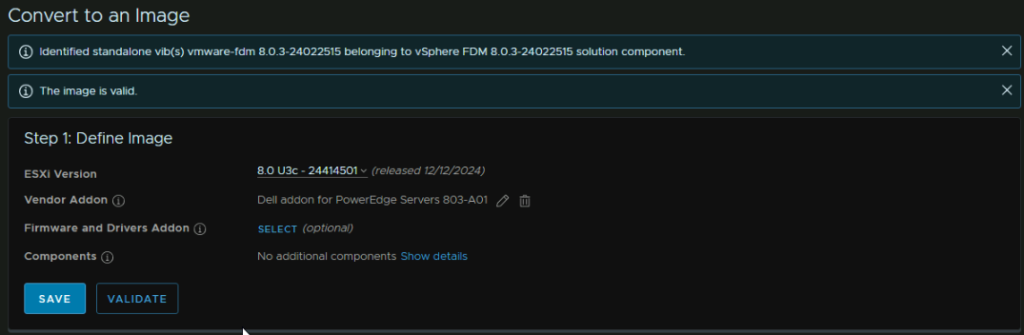

Click Validate

When it says it’s valid, click Save

You will notice a warning about the vmware-fdm VIB, this is the vSphere HA VIB pushed by the vCenter and is normal

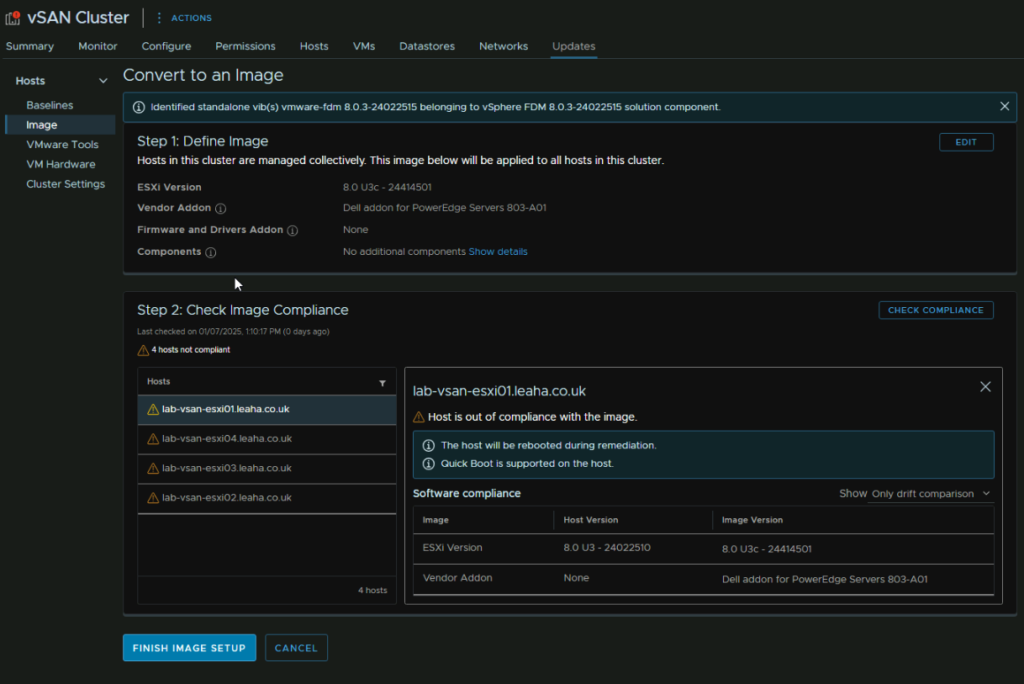

It will check the cluster compliance, which as this is a higher ESXi version will have our hosts out of compliance, but we can now Click Finish Image Setup

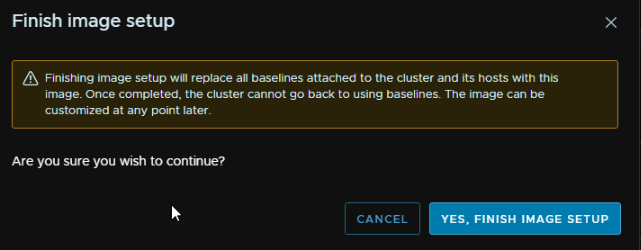

Then click Yes, Finish Image Setup, this will remove Baseline updates permanently from the cluster

Now our hosts are ready to be patched via an image, which you can find more info on in my article covering a simple ESXi patch here. The only thing to keep in mind with VVF is that it includes Aria, which should be patched before vCenter, if in doubt check the Broadcom Product Interoperability Matrix here

2.2.3 – vSAN Reservations

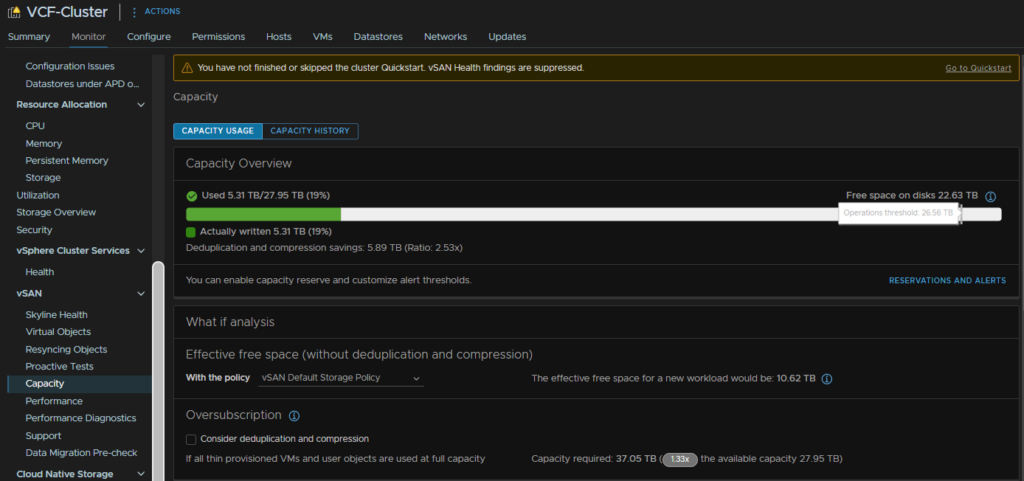

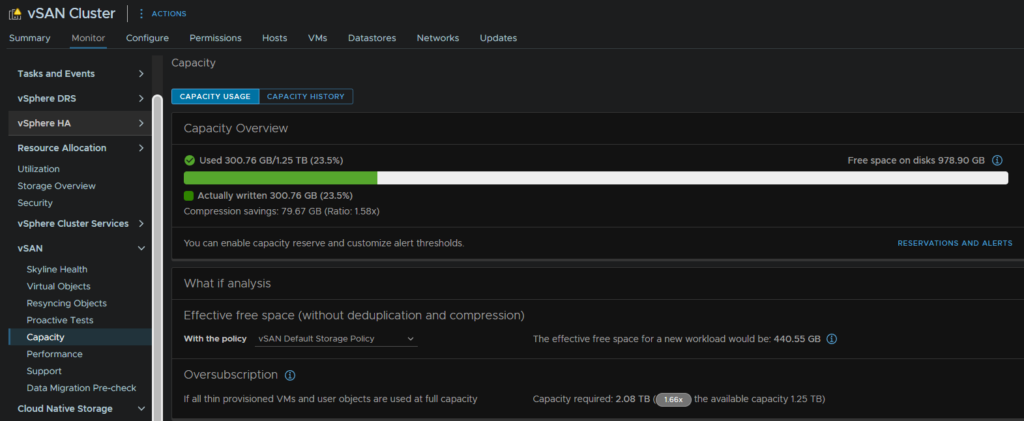

With vSAN, there is an amount of storage that the cluster should never go over under any circumstances, the operations threshold, above this things can really break. You can see this by clicking the cluster and heading to Monitor/vSAN/Capacity

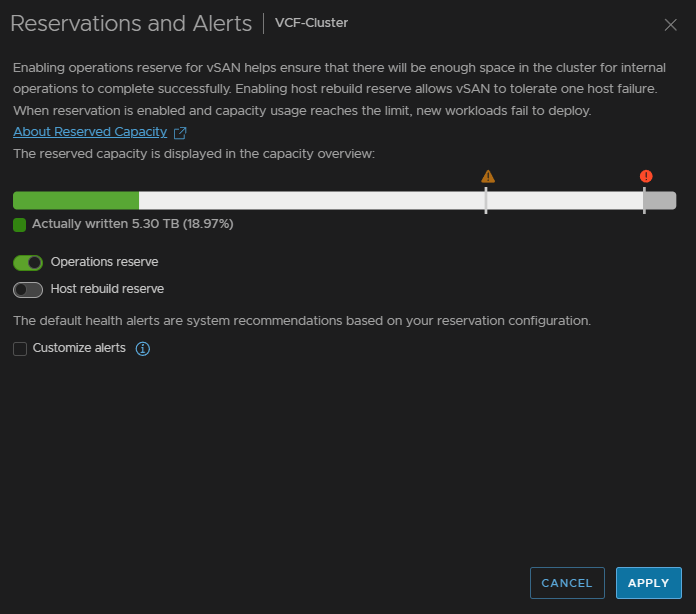

Now, ideally, we want to reserve this, so 100% capacity is this operations threshold, to prevent issues like this from occurring. If we click Reservations And Alerts we can toggle a slider to reserve this space, so we can’t put the vSAN in massive danger by overfilling it and using space the system needs, then we can hit Apply

Now we can see it’s reserved and the 100% capacity is the operations threshold, but we have introduced a slightly new problem, if we hit 100% we will have a lot of issues, which we need to avoid

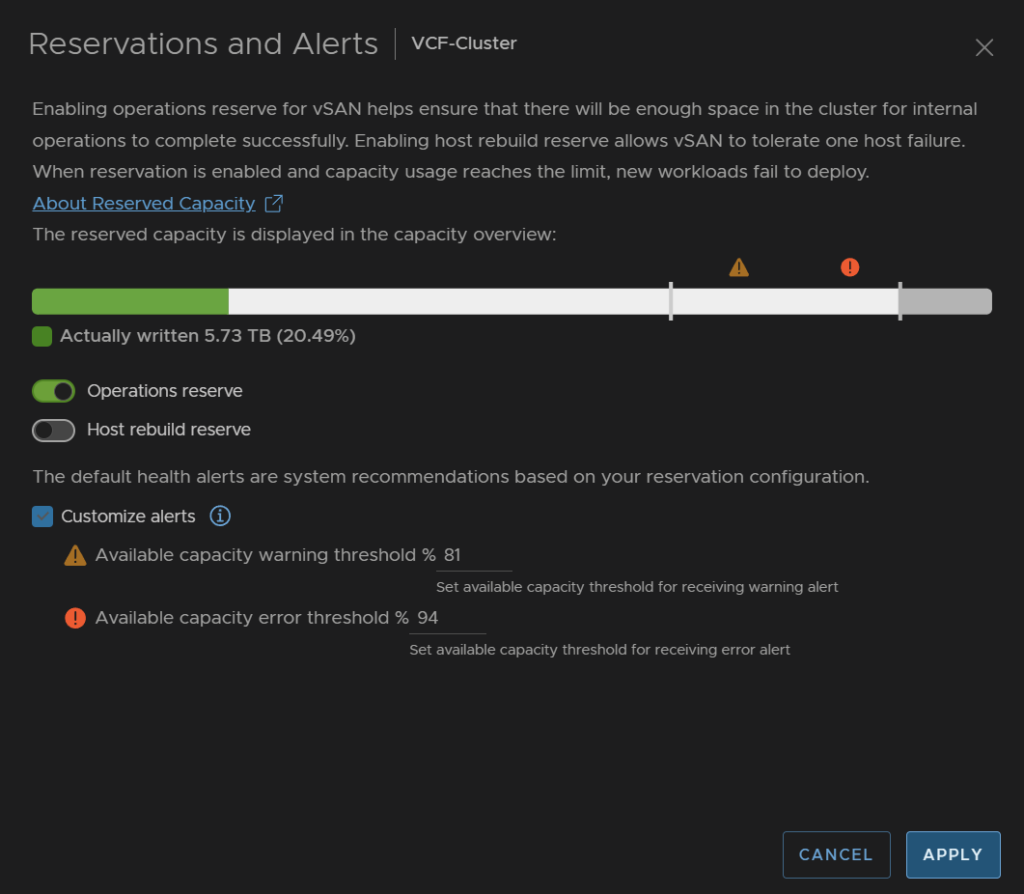

Click back into Reservations And Alerts

Check the box to customise alerts, we want to set the critical threshold to something a bit more helpful than 99%, normally it isn’t this high, but the reservation pushes it to this

I set it at 94% and set the warning a little higher, at 81%

Now we will get an error when the storage is getting critical
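To make the thresholds a bit more concrete, here is a small Python sketch that classifies usage against the 81% warning and 94% critical values set above, and works out how much space is left before each one. It assumes the percentages are of the capacity left once the operations reserve is taken out, as the capacity view shows after reserving, and that an alert fires when usage reaches the threshold, the exact trigger behaviour is my assumption

```python
WARNING_PCT = 81   # warning threshold set above
CRITICAL_PCT = 94  # critical threshold set above


def capacity_state(used_tib, usable_tib, warning=WARNING_PCT, critical=CRITICAL_PCT):
    """Classify usage, usable_tib is the capacity left after the reserve."""
    if usable_tib <= 0:
        raise ValueError("usable_tib must be positive")
    used_pct = used_tib / usable_tib * 100
    if used_pct >= critical:
        return "critical"
    if used_pct >= warning:
        return "warning"
    return "ok"


def headroom_tib(used_tib, usable_tib, threshold_pct):
    """TiB that can still be written before the given threshold is reached."""
    return max(0.0, usable_tib * threshold_pct / 100 - used_tib)


if __name__ == "__main__":
    # Example figures only, not taken from the lab
    print(capacity_state(8.5, 10))
    print(headroom_tib(8.5, 10, CRITICAL_PCT))
```

The gap between the two thresholds is your reaction time, so on a small cluster it is worth checking how many TiB that 13% actually is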

2.2.4 – Guest Unmap

When VMs in a cluster use disk space, the blocks are mapped. With any vSAN/VMFS datastore, when the guest OS has files removed this frees up space within the guest, but the blocks are not unmapped on the thin disk

With vSAN, we have the option to enable Guest Trim/Unmap, this allows vSAN to reclaim disk space when the guest OS has files removed, so this is really helpful for maximum space efficiency, if the VM is already on however, it will need a reboot

From my testing, this works out of the box on Windows Server 2022/2025, however with Linux, it doesn’t seem to, Broadcom’s documentation points to using certain commands on the guest, but I was unable to get this working

So in a Windows environment, this is very good, for Linux less so, though you may be able to get it working, but your mileage may vary

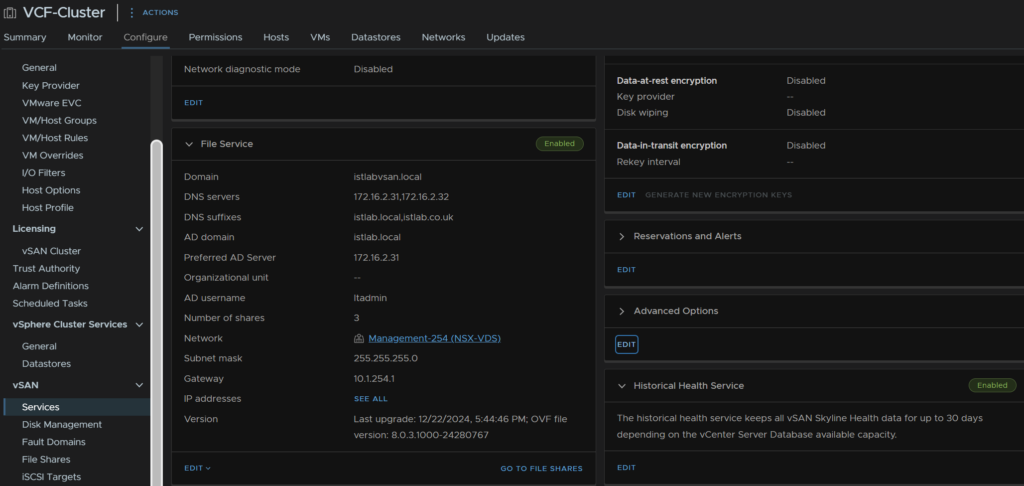

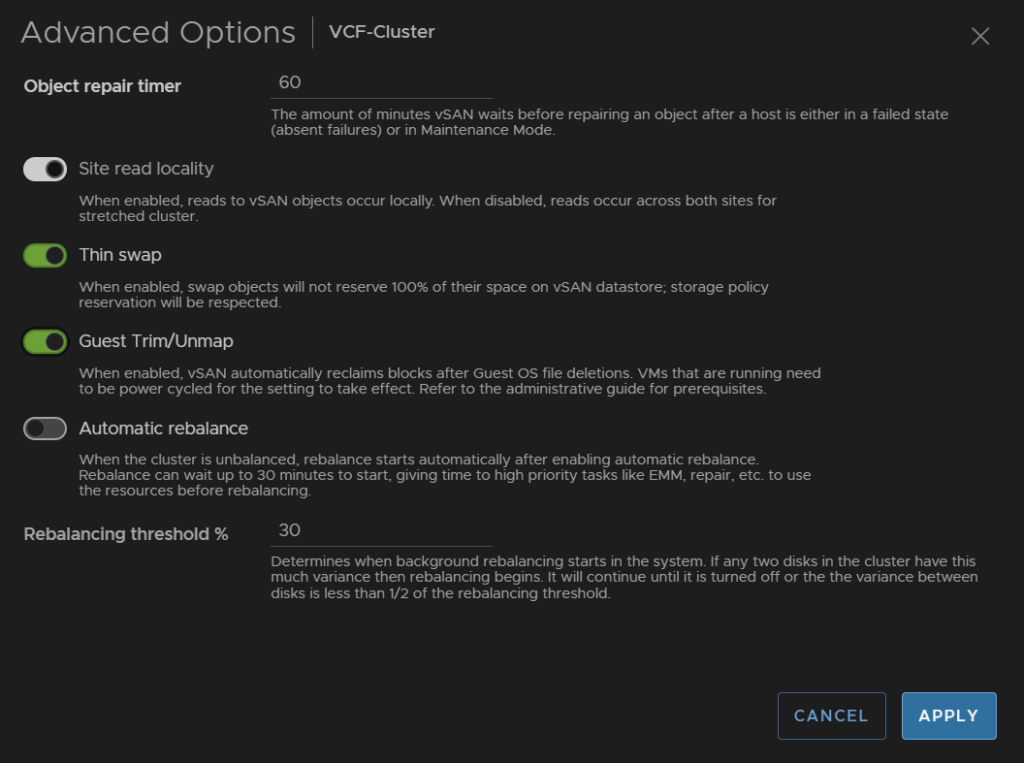

Click the cluster, then go to Configure/vSAN/Services and under Advanced Options click Edit

Click the toggle on Guest Trim/Unmap then click Apply

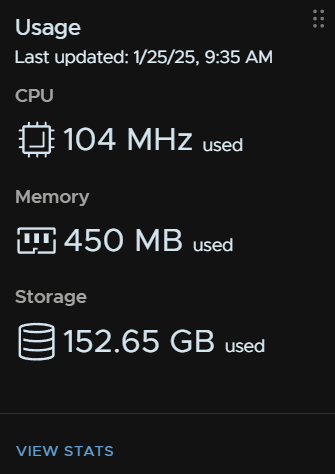

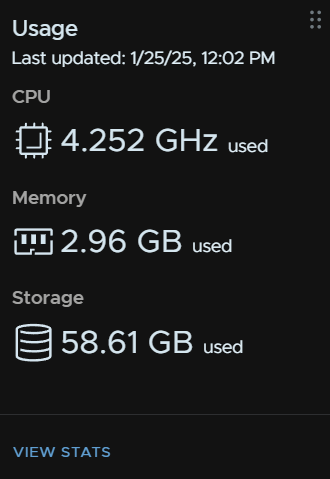

Now, let’s see how helpful this is. A jumpbox I had used to hold several ISOs, which caused its disk to balloon compared with the other jumpbox

After a few hours it did come all the way down to match the ~30GB the OS is using, this shows as ~60GB due to the RAID 1 policy

2.2.5 – Storage Policies

How storage is used and made redundant in vSAN is policy driven, this is very helpful for controlling how redundant VMs are

There are a few policies to be aware of

- RAID 1, this mirrors each object, e.g. a VMDK, the object is stored on two hosts in the cluster and a third host gets a metadata file, VMs will use ~200% of their disk space

- RAID 1 FTT2, this is a three way mirror, VMs use 300% of their capacity

- RAID 0, this applies no redundancy, I wouldn’t recommend this at all, VMs use 100% of their disk space

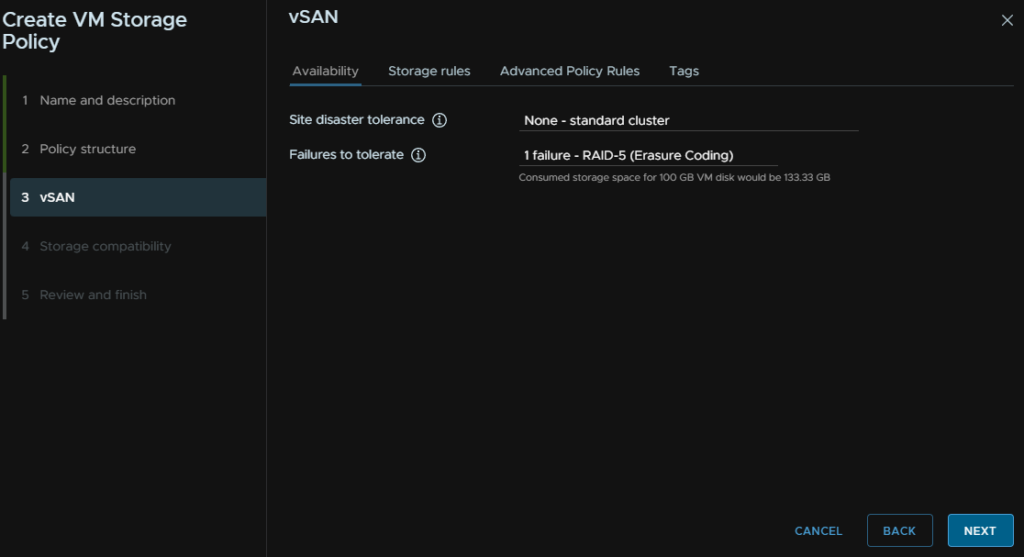

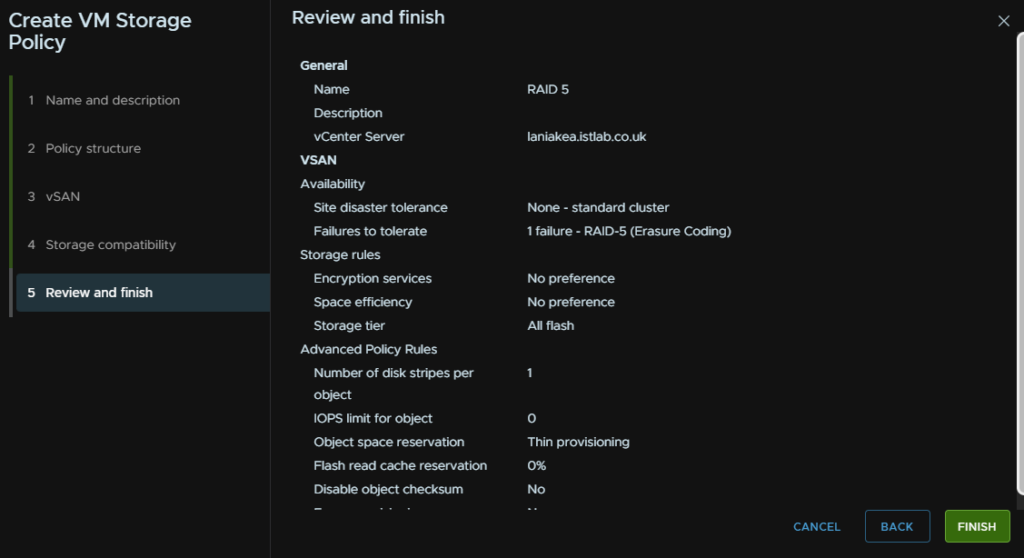

- RAID 5 – Erasure Coding, this uses the principle of striping in RAID and applies this to objects, the data is striped across three hosts with a parity stripe on a fourth, VMs use ~133% of their capacity

- RAID 6 is the same as RAID 5 but with an extra parity stripe, VMs use ~150% of their capacity

RAID 1 requires a minimum of three hosts, though I would recommend no less than four hosts in a vSAN cluster – FTT1

RAID 5 requires a minimum of four hosts, but I would recommend five – FTT1

RAID 6 requires a minimum of six hosts, but I would recommend seven – FTT2
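To put numbers on these, the Python sketch below works out the raw vSAN space a VM consumes under each policy, and which policies a cluster of a given size can use. The multipliers and host minimums are the ones listed above, except the 5 host minimum for RAID 1 FTT2, which isn’t listed above and comes from the usual 2n+1 rule for mirroring, so treat that one as my addition

```python
# Raw capacity multiplier per policy, from the list above
MULTIPLIER = {
    "RAID 0": 1.0,
    "RAID 1": 2.0,
    "RAID 1 FTT2": 3.0,
    "RAID 5": 4 / 3,   # three data stripes plus one parity
    "RAID 6": 1.5,
}

# Minimum hosts per policy, RAID 1 FTT2 is an added assumption (2n+1 rule)
MIN_HOSTS = {
    "RAID 1": 3,
    "RAID 1 FTT2": 5,
    "RAID 5": 4,
    "RAID 6": 6,
}


def raw_usage_gb(vm_gb, policy):
    """Raw vSAN space consumed by vm_gb of guest data under a policy."""
    return vm_gb * MULTIPLIER[policy]


def policies_for(host_count):
    """Redundant policies a cluster with host_count hosts can satisfy."""
    return [name for name, needed in MIN_HOSTS.items() if host_count >= needed]


if __name__ == "__main__":
    for name in MULTIPLIER:
        print(f"{name}: 30GB of data uses ~{raw_usage_gb(30, name):.0f}GB raw")
    print("4 hosts can use:", policies_for(4))
```

This lines up with the jumpbox earlier, ~30GB in the guest showing as ~60GB under the RAID 1 policy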

The advantage of policies is we can create them based on these storage designs and apply them to VMs to reduce the storage footprint, or increase their redundancy

I would leave the default policy alone and create new ones to apply to VMs

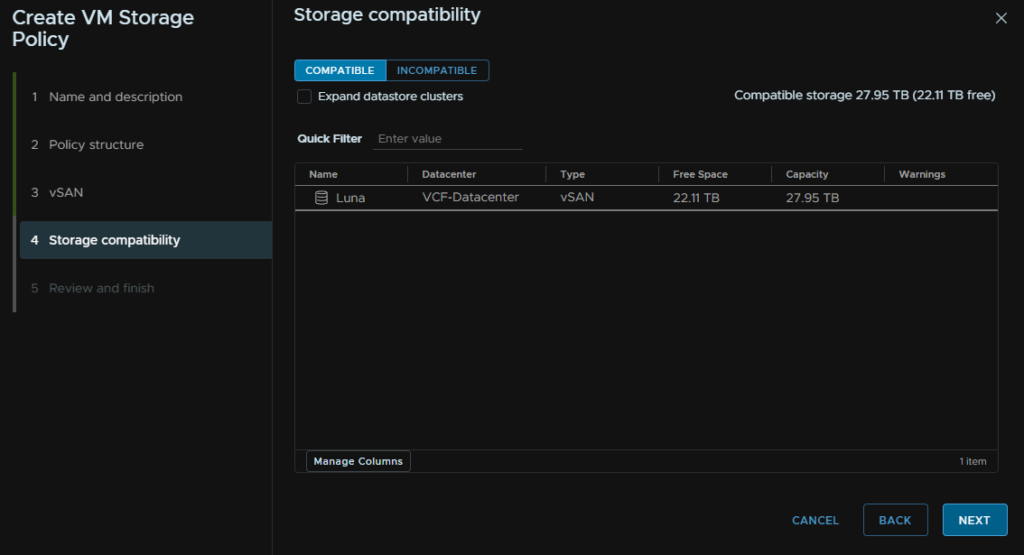

RAID 5 has a big benefit in a 5+ node cluster for how much usable storage you get. For ESA you will have NVMe disks, but I also recommend 25GbE networking for vSAN, as RAID 5 significantly increases the bandwidth used and lowers overall performance for a VM

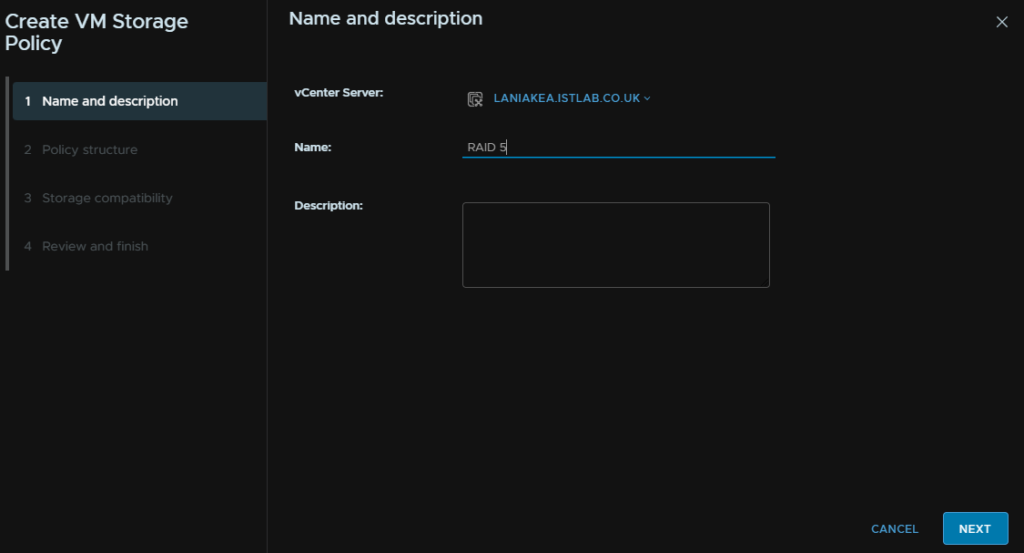

To create policies click the three lines in the top left of vSphere and click Policies And Profiles

Under VM Storage Policies we can click Create

Give it a name and click Next

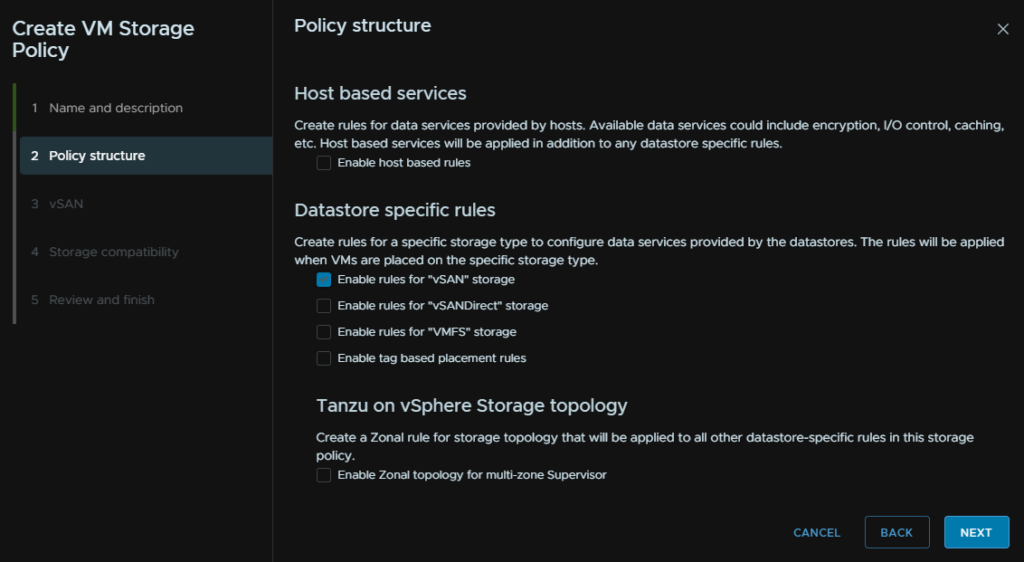

Check the box to Enable Rules For vSAN Storage and click Next

Under Failures To Tolerate, select RAID 5, then click Next

Click Next again

And then Finish

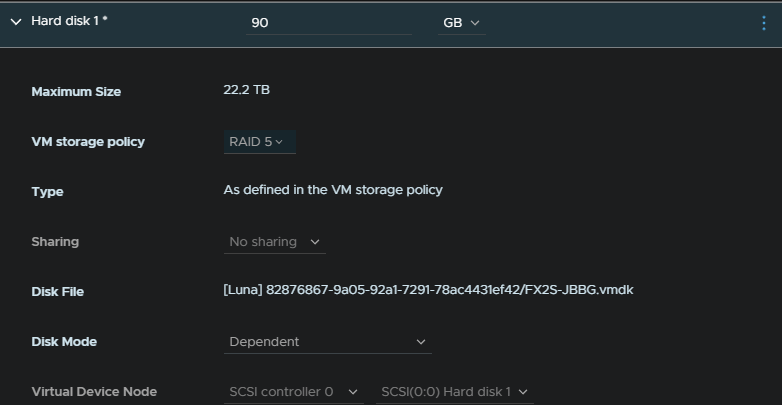

Now if we go and edit a VM, we can expand the hard disks and change the storage policy to the new one, and click ok

This will apply the RAID 5 target, resulting in the VM size shrinking from 200% disk usage to 133% on the vSAN cluster, making it significantly more storage efficient, though it does have a performance drop, so there are places where it can be good and others where it’s not

You will probably want better redundancy offered by RAID 1 or RAID 1 FTT2 for something like a DC

2.2.6 – Adding Disks

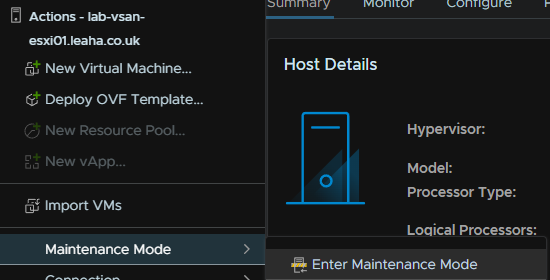

If you want to expand your vSAN cluster with more disks per host, you will first want to put the host into maintenance mode by right clicking it and going to Maintenance Mode/Enter Maintenance Mode

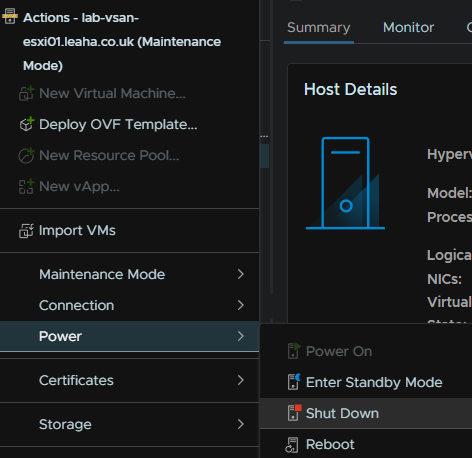

Then Power/Shutdown

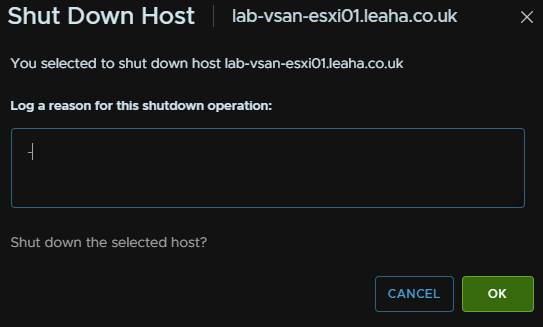

Enter a reason and click ok

When the host is offline, add your new disk in, for optimum performance it should be the same size as the others, then power the host back up

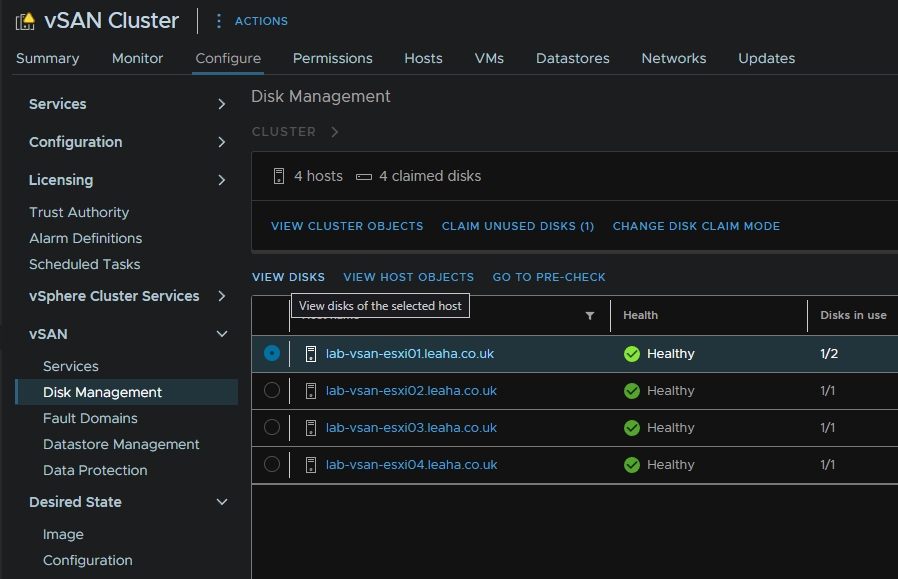

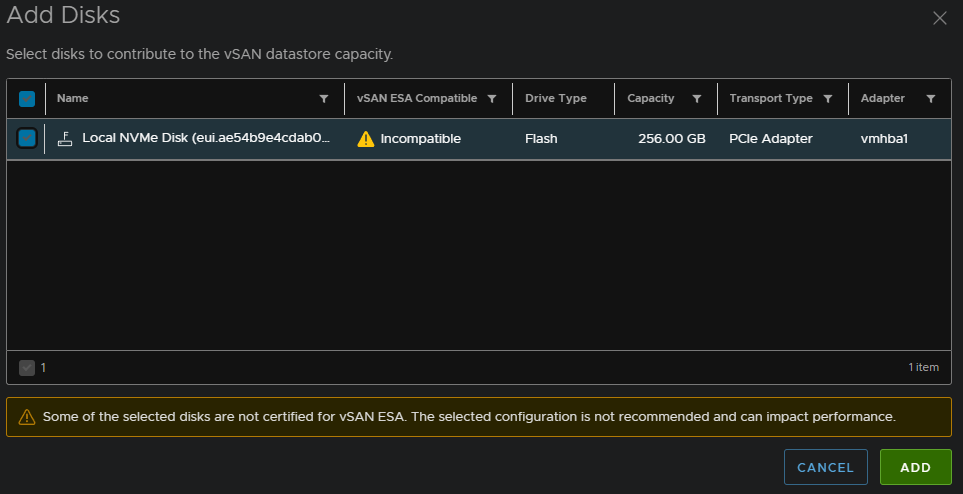

When it’s back online, click the cluster and head to Configure/vSAN/Disk Management and click View Disks

Expand Ineligible And Unclaimed, you should see your new disk and it should be marked as Unclaimed on the right, make sure it’s selected

Then click Add Disks

Select your disks and click Add, for a production system, you should not have the hardware certification warning, else VMware will not support it, this is just because mine is a virtual lab

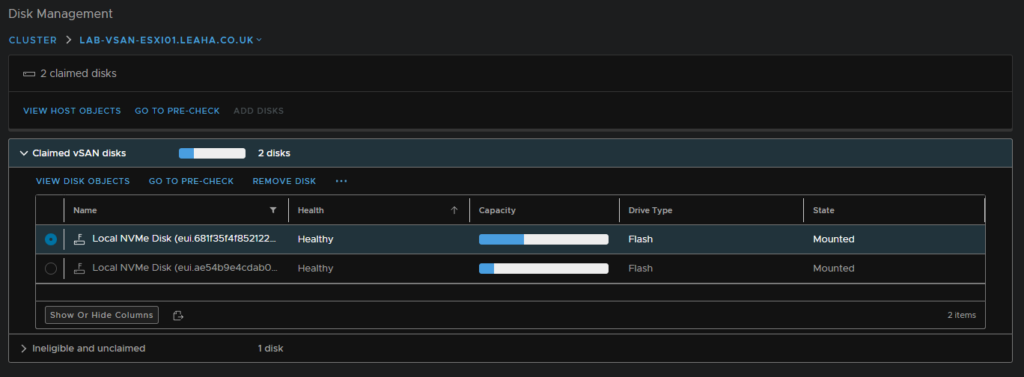

We can now see the disk has been added

And from the cluster on Monitor/vSAN/Capacity, we can see the space has increased

Then right click the host and click Maintenance Mode/Exit Maintenance Mode

2.2.7 – File Services

vSAN File Services aims to provide the same NAS functionality with SMB/NFS that a PowerStore can offer, with AD RBAC, and HA via the vSAN cluster, with shares having their objects stored using storage policies

Performance wise I was able to get ~450MB/s from the share, which is most likely due to the SATA SSDs in the cluster being a bottleneck

If you have the new ESA with NVMe disks on 25GbE, I would expect this to be a lot higher, but outside of media requirements, I don’t see many people needing 1Gb+ speeds off the file share you might have at the office

2.2.7.1 – Enabling The File Services

To set up the file services, you’ll need some IP addresses on the network you want to use it on, and this will deploy one VM per ESXi host

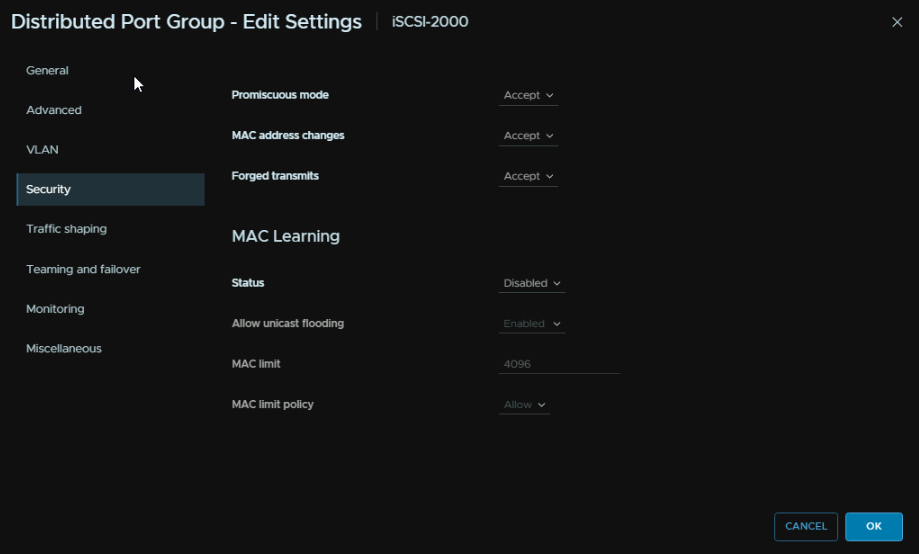

You’ll also need a dedicated port group for this on a VDS, this is because File Services will lessen the security settings on it, so even if it’s the same VLAN as a port group you already have, it needs its own group

To be able to use SMB shares you’ll need to connect this to an AD server, which will also manage RBAC for the shares

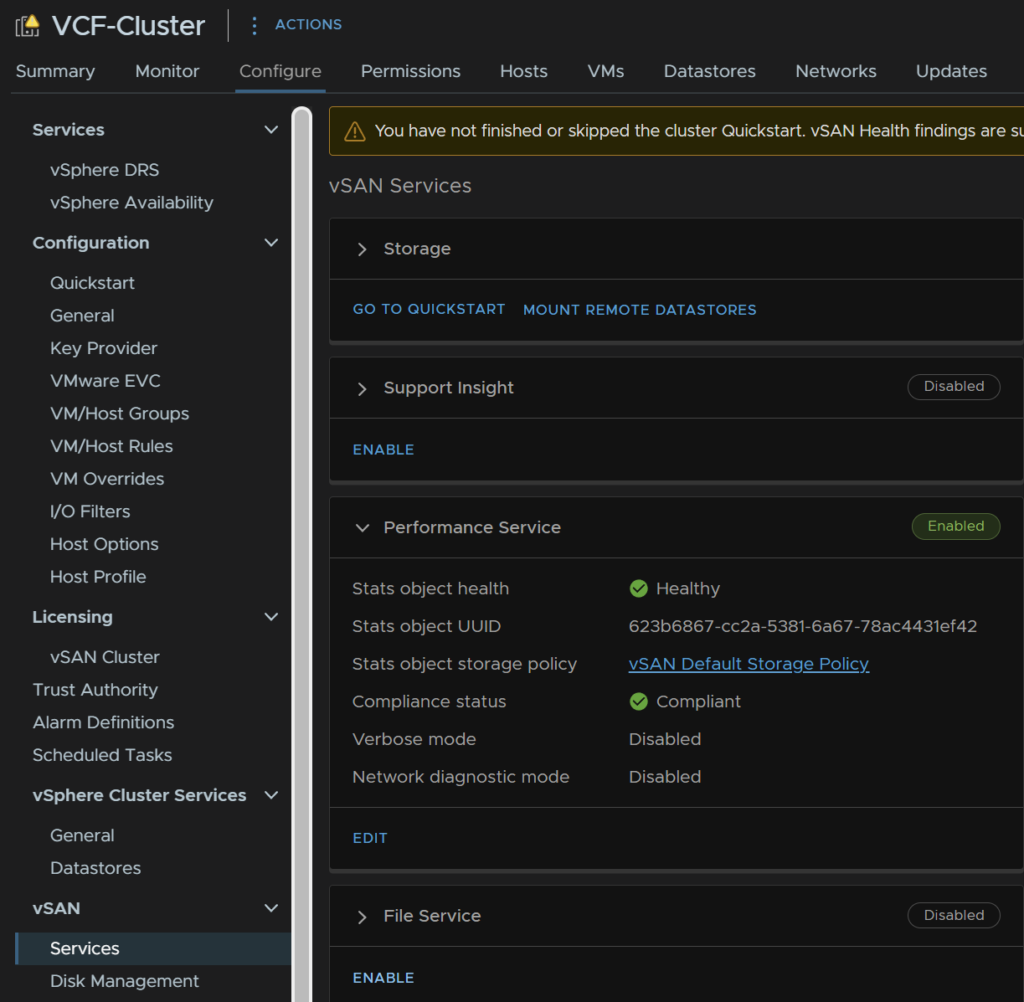

To enable it, click the cluster, then head to Configure/vSAN/Services, and at the bottom, click Enable under the file services tab

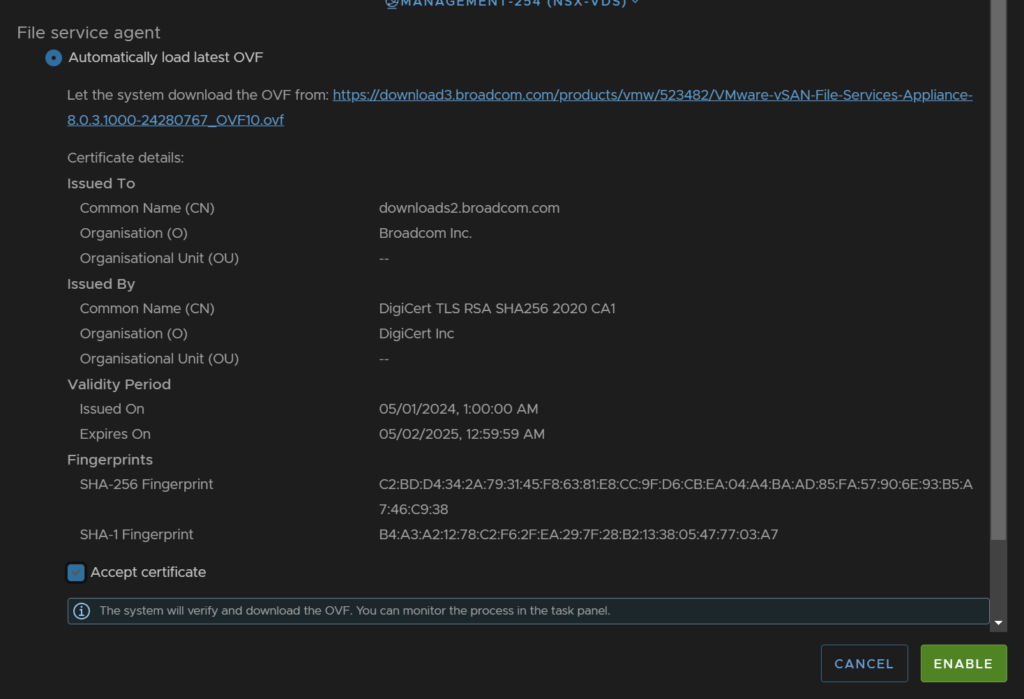

Click the Select drop down on the network section, and select the network port group you want to use

Keep the top option of automatically loading the latest OVF and accept the certificate at the bottom, then click enable

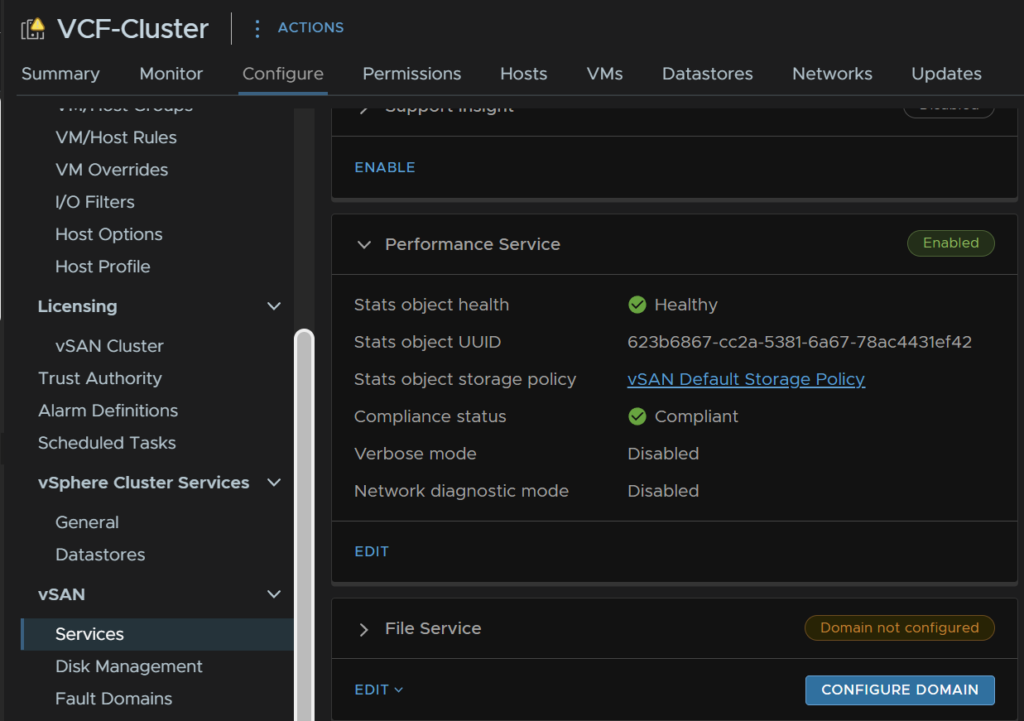

Now when we head back to our cluster and go to Configure/vSAN/Services, we have the option to configure the domain, if we click the Configure Domain button we can continue

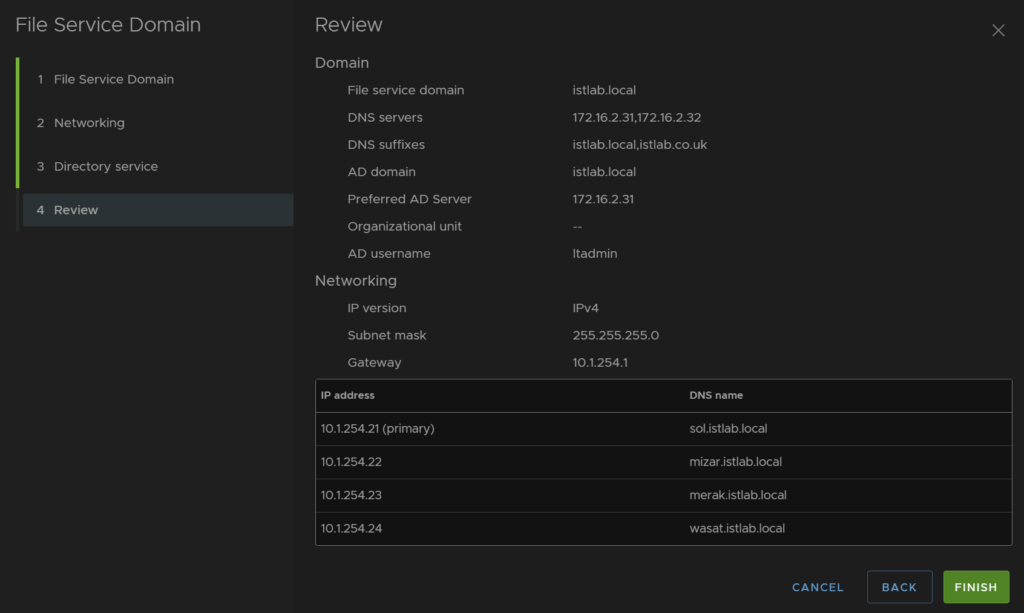

We need a unique domain name for the vSAN file service

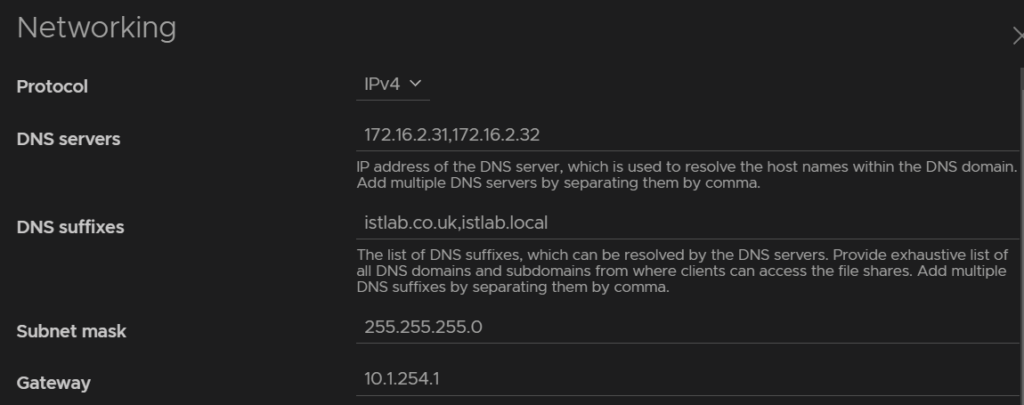

Now enter the IP Protocol, I am using IPv4

Then add your DNS servers, these should be able to resolve the AD domain, so are normally your AD servers. Add any DNS suffixes, I have two, istlab.co.uk which is the main name, and istlab.local, the AD domain suffix. Then add the subnet mask and gateway for the network, this will be for the port group we selected earlier

Now we need the IPs and DNS names, everything by default will run off the primary IP, these IPs will also be on the network on the port group we set, and the DNS names need to be resolvable, and be the same domain as AD

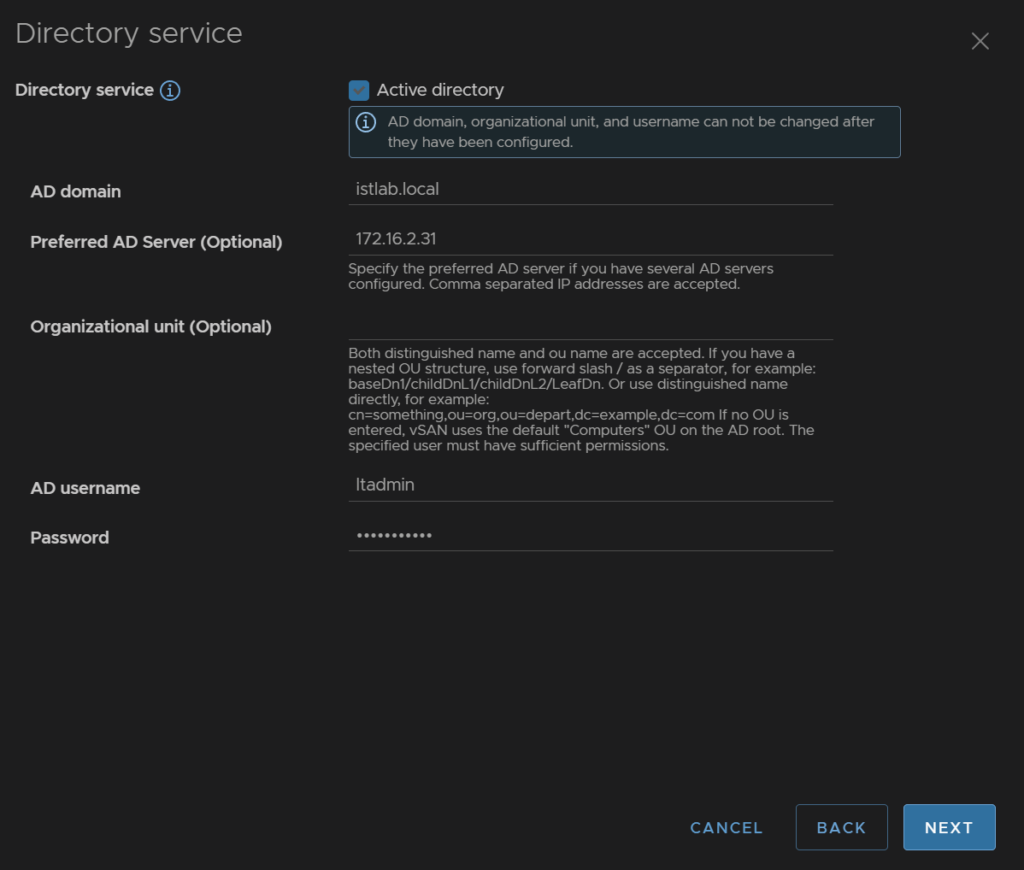

Enable AD, enter the domain, you can select the preferred AD server if needed, I added my primary DC, then a domain admin user account

Then review and finish

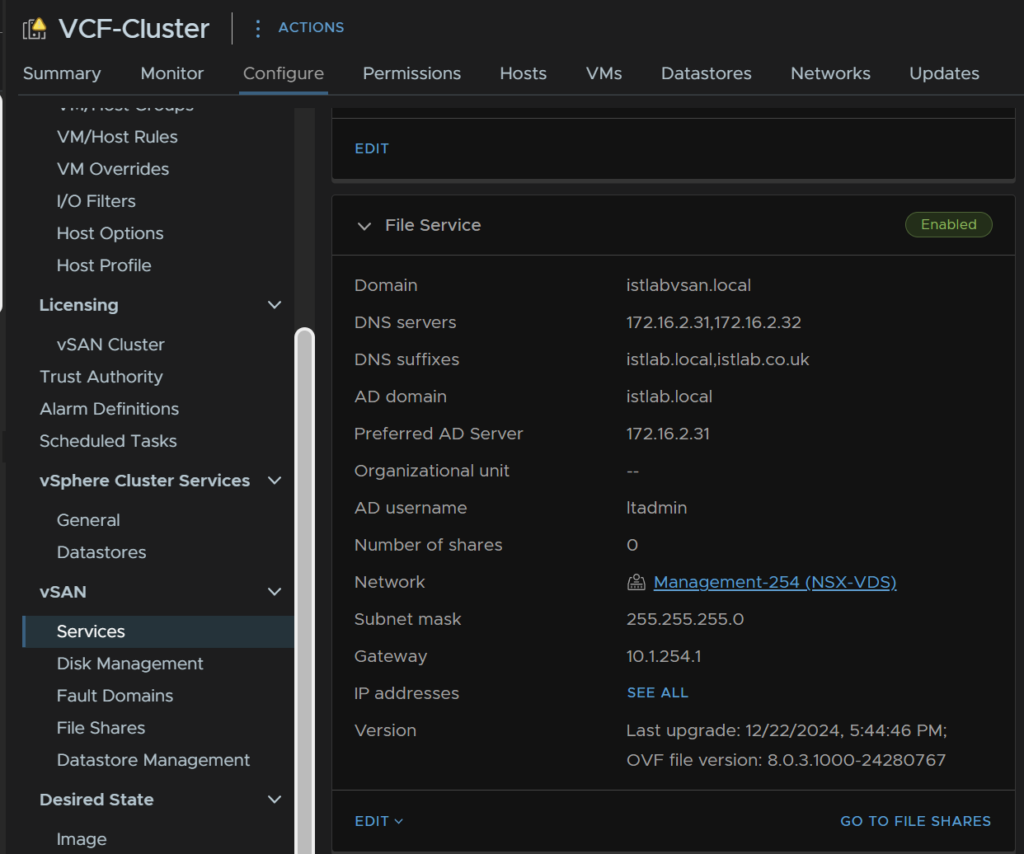

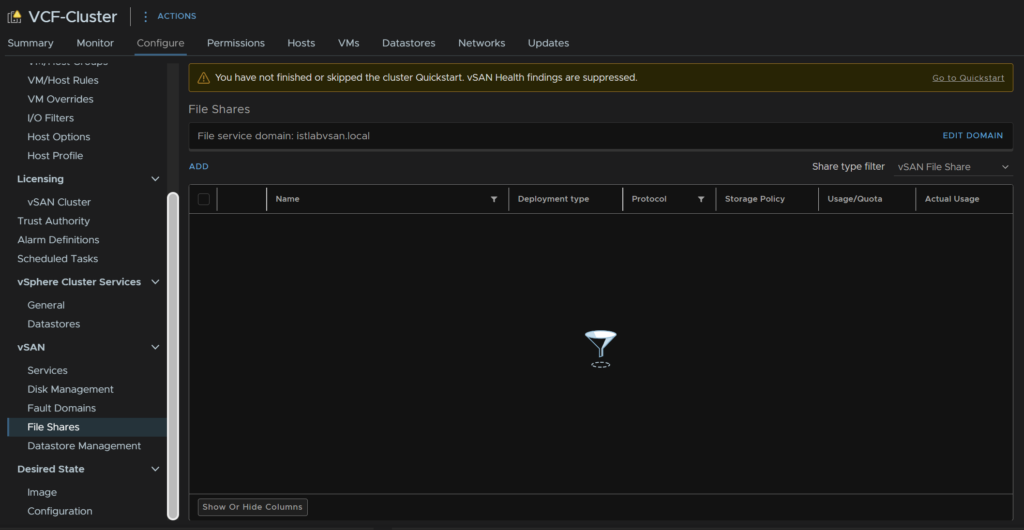

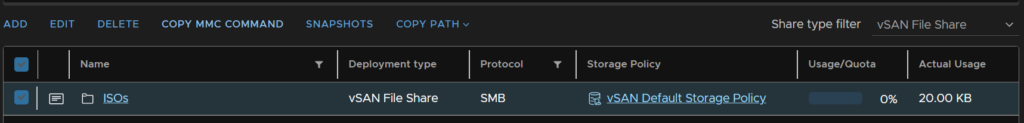

We can see the widget has populated, and under the cluster we now have Configure/vSAN/File Shares, which we can access

2.2.7.2 – Creating Shares

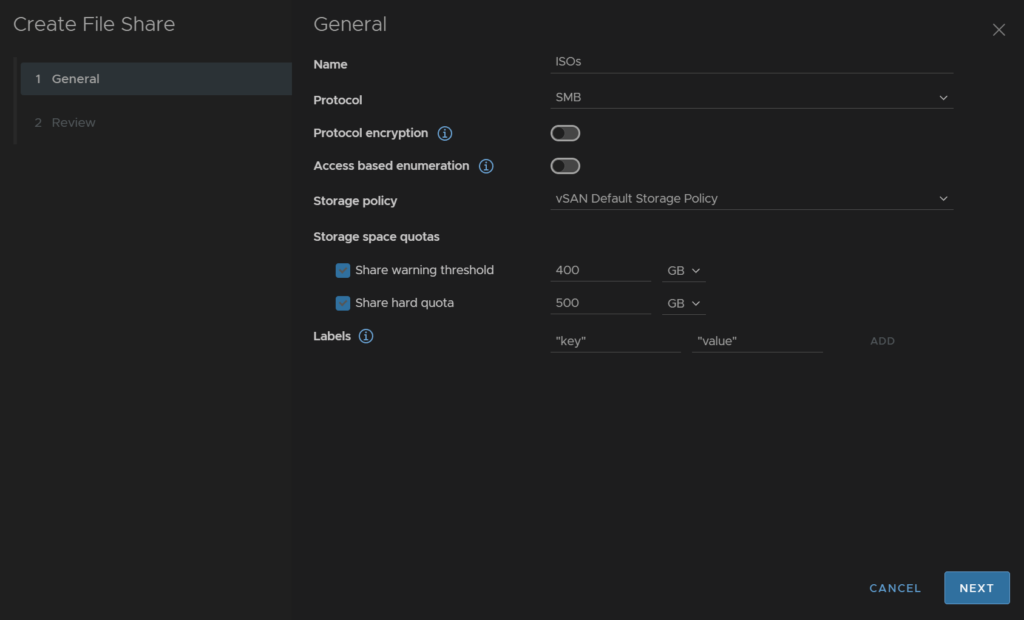

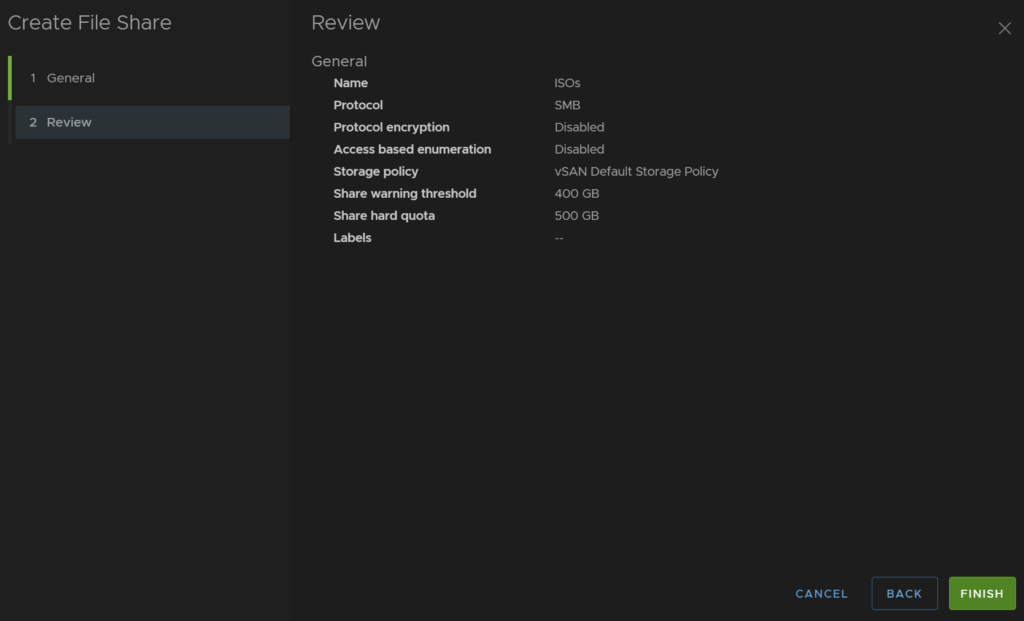

Once it’s all set up we can add a share from the cluster then Configure/vSAN/File Shares, then click Add

Add a name for the share, select the protocol, you can then enable encryption if you need, and Access Based Enumeration (ABE), this can slow shares down once they hold over 10k files, but hides anything a user doesn’t have access to

Select the storage policy, the default is fine, though you can get creative with different shares and storage policies if you want to save space

You can then add a warning threshold, and a hard quota, and labels if you want, but I left it blank

Then click Finish

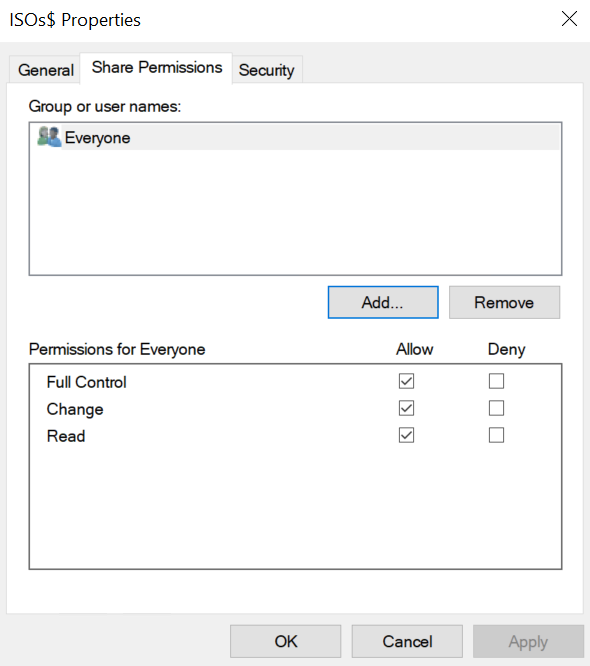

2.2.7.3 – Edit Share Permissions

Now we have our share, how do we set up the permissions on the share to prevent certain people from accessing it

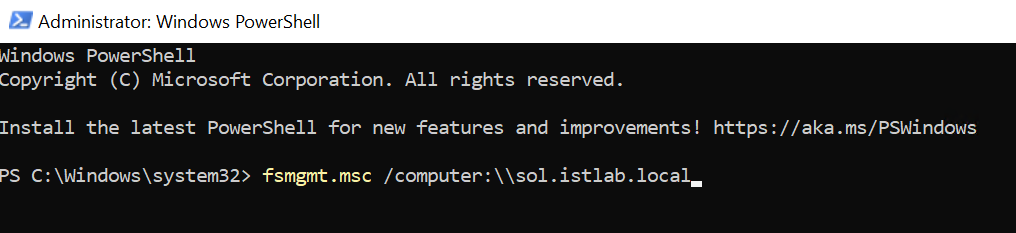

We can select our share and click Copy MMC Command

We need to then head to a machine on the domain, with suitable domain privileges, I used a domain admin here, and pasted the command into an admin PowerShell window, it should look like this

In the newly opened window, we can see all the shares on the primary IP the vSAN File Services has, and we can see our new ISO share

You can then double click your share and manage your permissions for the top level here

You can then manage subfolders like you would on a Windows file server from File Explorer

2.2.8 – iSCSI Services

Performance wise, I saw this to be similar to the File Services

It’s also worth noting that this cannot be used to connect to another ESXi host, you likely won’t be doing this in production anyway, but in a lab scenario, I wanted to test it as an iSCSI target for nested hosts, sadly, the only option there is NFS via the File Services

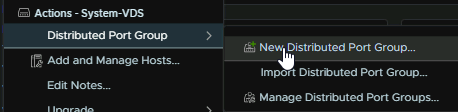

2.2.8.1 – Enabling The Service

Firstly, we need some networking, I would recommend having a non routable dedicated network for this, here I will be using VLAN 2000

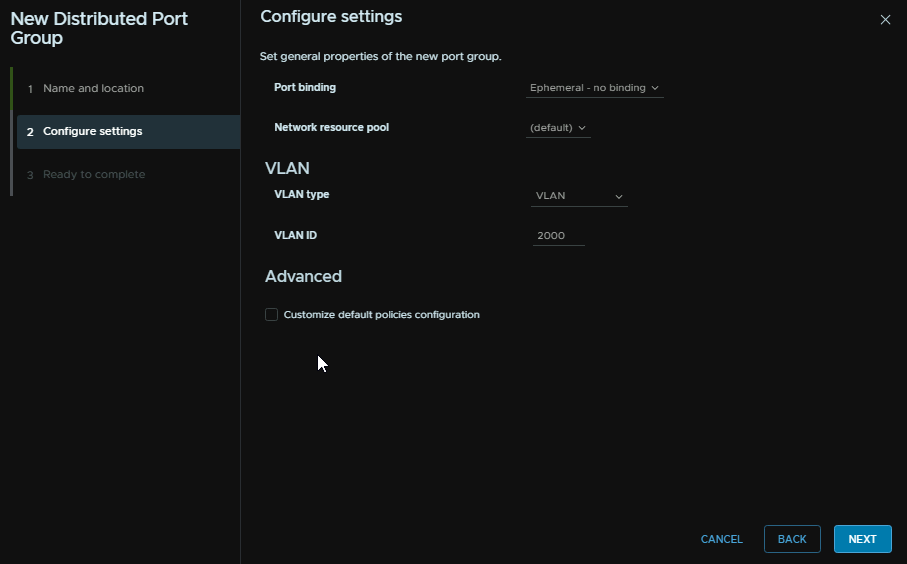

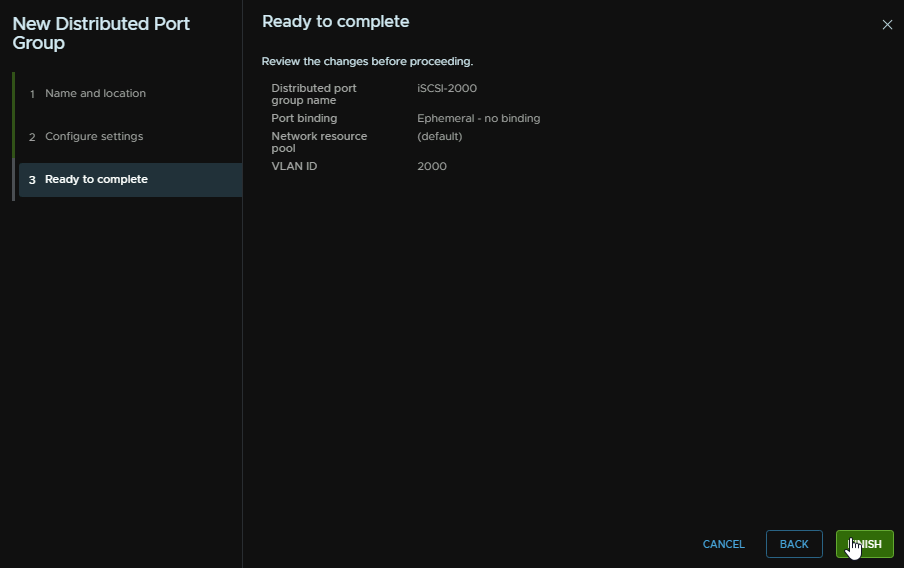

Right click the VDS you want to add this to, ensuring the connected physical ports have this VLAN in their allowed trunk list, and click Distributed Port Group/New Distributed Port Group

Give the port group a name and click Next

Select Ephemeral for the port binding and select the VLAN to be the VLAN you want, in my case, 2000, then click Next

Then finish

Now we need a vmk per server on the iSCSI VLAN, click the host and head to Configure/Networking/VMkernel Adapters and click Add Networking

Select VMkernel Adapter and click Next

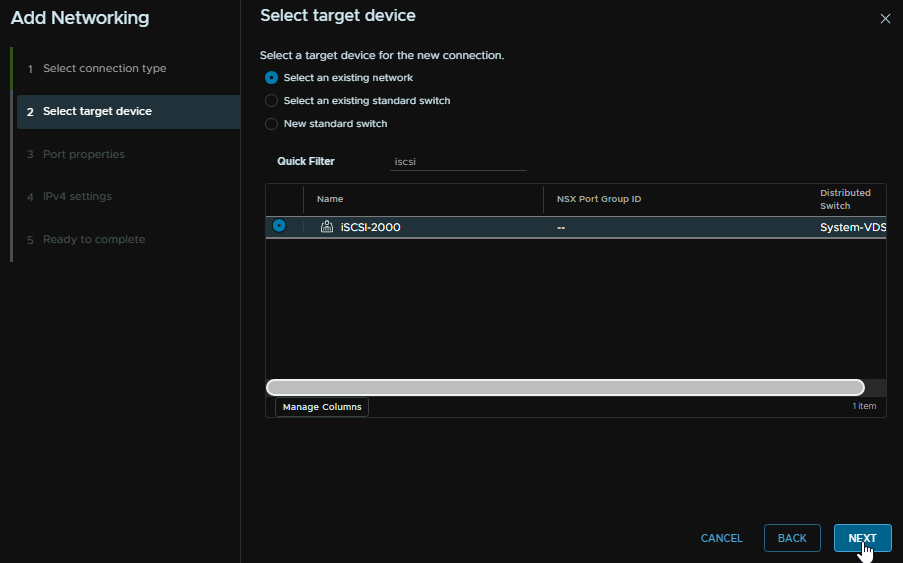

Select the port group and click Next

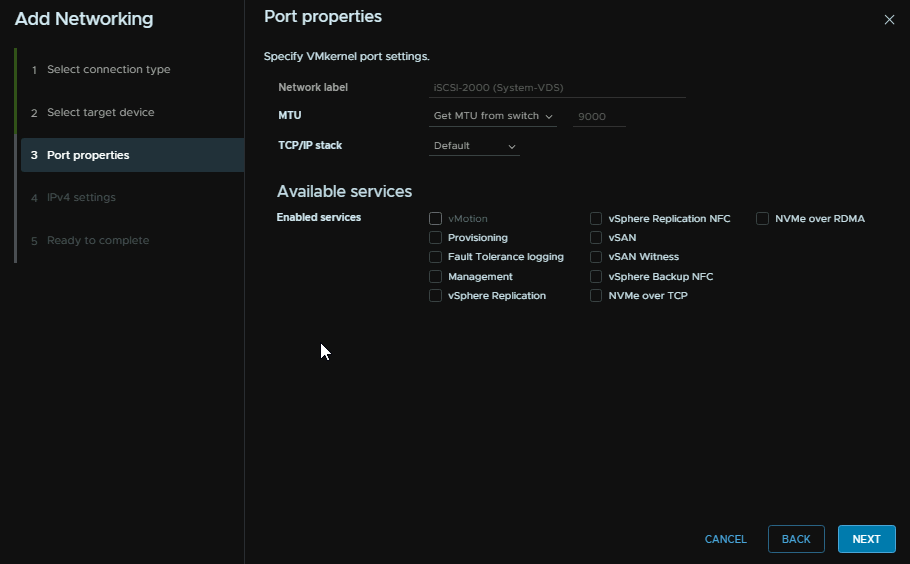

Click Next, my switch has an MTU of 9000, so the VMK will be using that, but be careful with MTU changes, everything in the chain needs to have the same MTU, then click Next

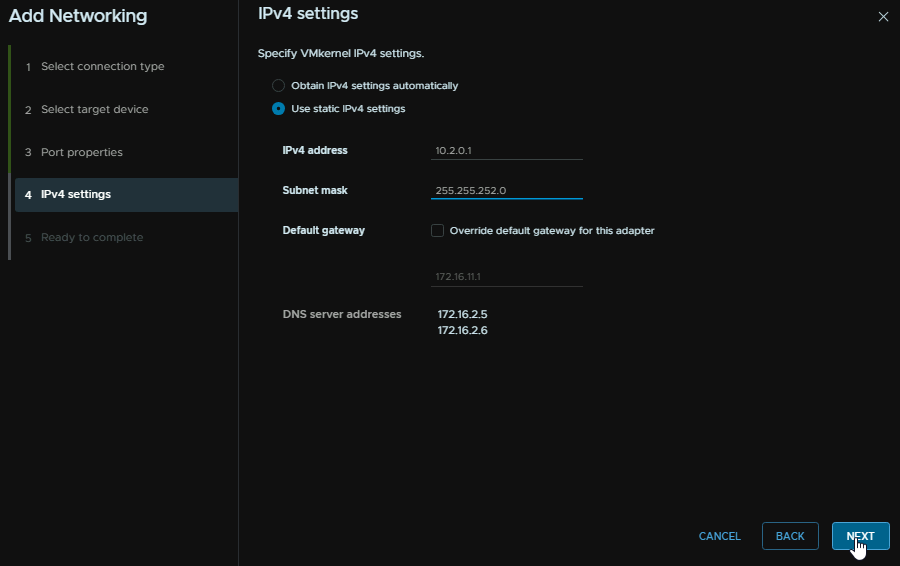

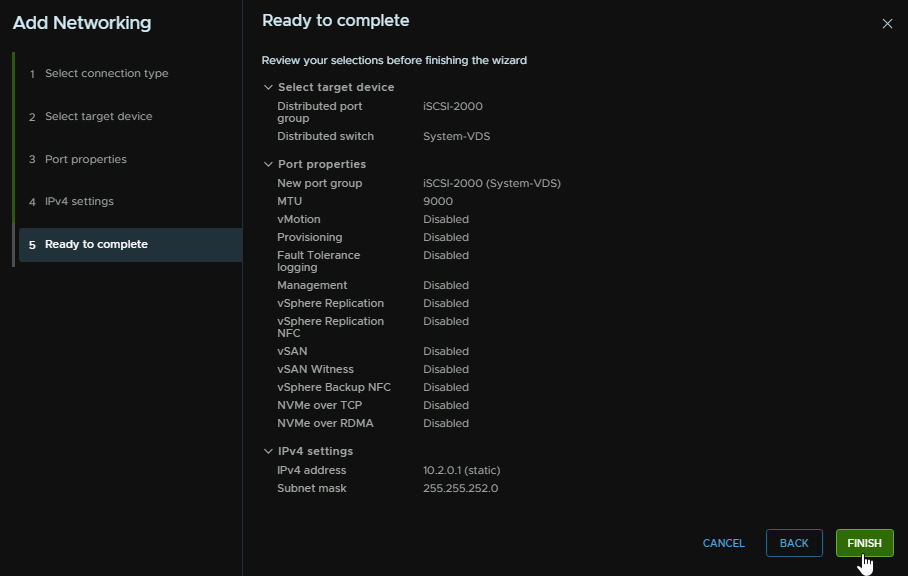

Add an IP address and a subnet, the gateway won’t matter as it’s L2 anyway, then click Next

Then click Finish

Then repeat with different IPs for the rest of the hosts, the vmk needs to be the same, ie vmk4 on all hosts
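If you want to plan the addresses before clicking through each host, this Python sketch pairs every host with the next free IP on the iSCSI network and keeps the vmk name the same everywhere. The 10.2.0.0/24 subnet is an assumption based on the 10.2.0.1 VMK address used in this lab, and the host names are placeholders

```python
import ipaddress


def plan_vmk_ips(hosts, subnet="10.2.0.0/24", vmk="vmk4"):
    """Pair each host with the next usable address in the subnet."""
    addresses = iter(ipaddress.ip_network(subnet).hosts())
    plan = []
    for host in hosts:
        try:
            ip = next(addresses)
        except StopIteration:
            raise ValueError("subnet is too small for the number of hosts") from None
        # Same vmk name on every host, as the iSCSI service expects
        plan.append({"host": host, "vmk": vmk, "ip": str(ip)})
    return plan


if __name__ == "__main__":
    # Hypothetical host names, swap in your own
    for entry in plan_vmk_ips(["esxi-01", "esxi-02", "esxi-03", "esxi-04"]):
        print(f'{entry["host"]}: {entry["vmk"]} {entry["ip"]}')
```

Having the list written down also helps later, as these are the same IPs that get added on the client side as extra paths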

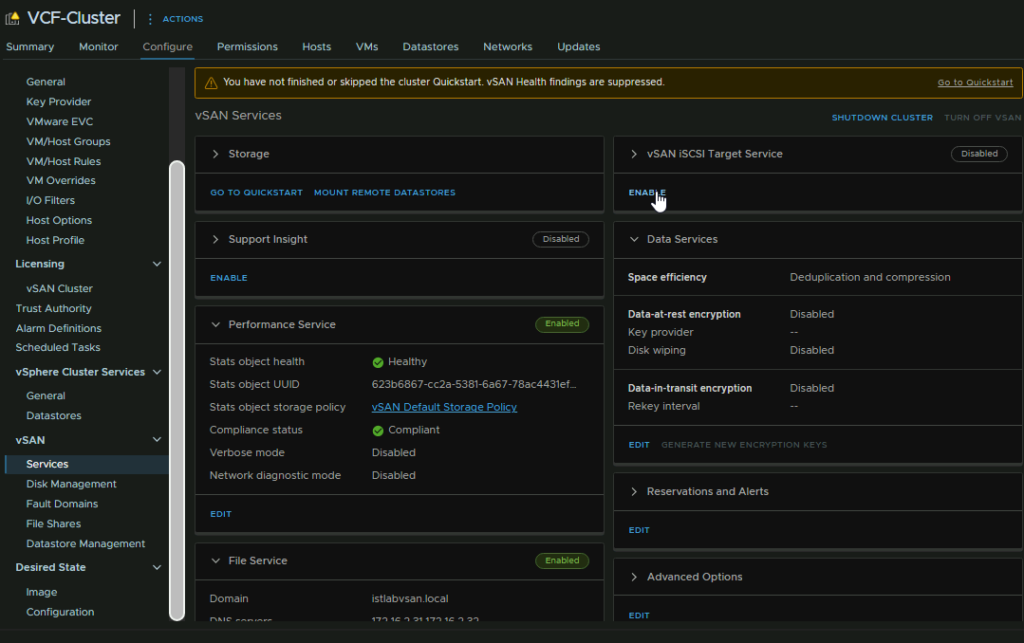

To enable this, click your cluster and head to Configure/vSAN/Services and under the vSAN iSCSI Target Service, click Enable

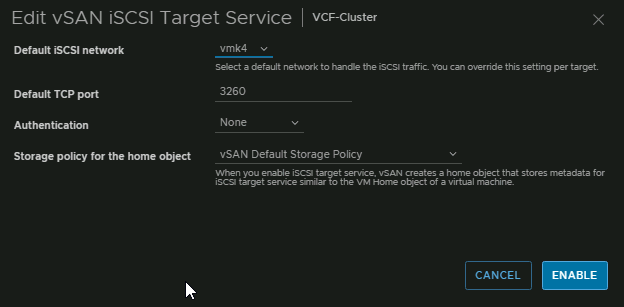

Select the vmk, I have vmk4 on all the hosts, select the port you want, authentication you want, and a storage policy, then click Enable

2.2.8.2 – Adding Targets And LUNs

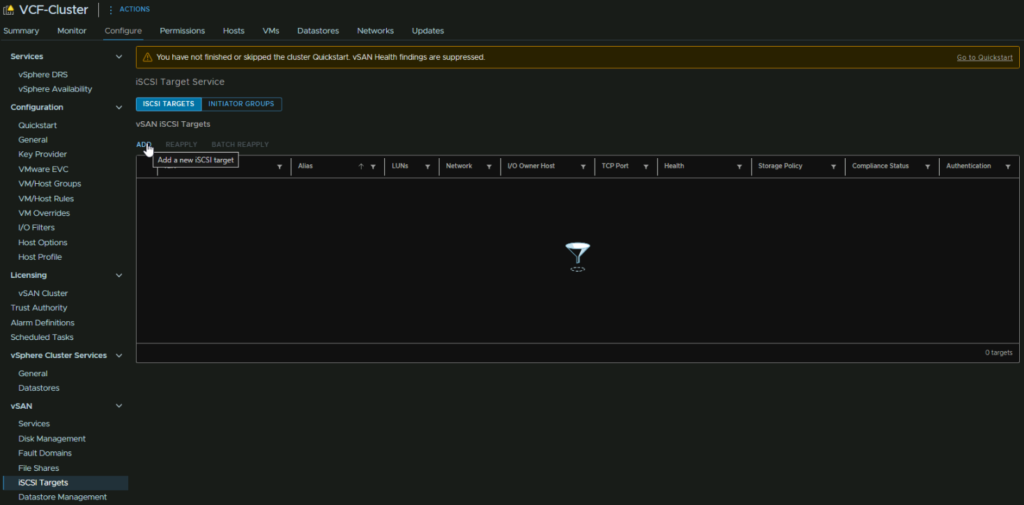

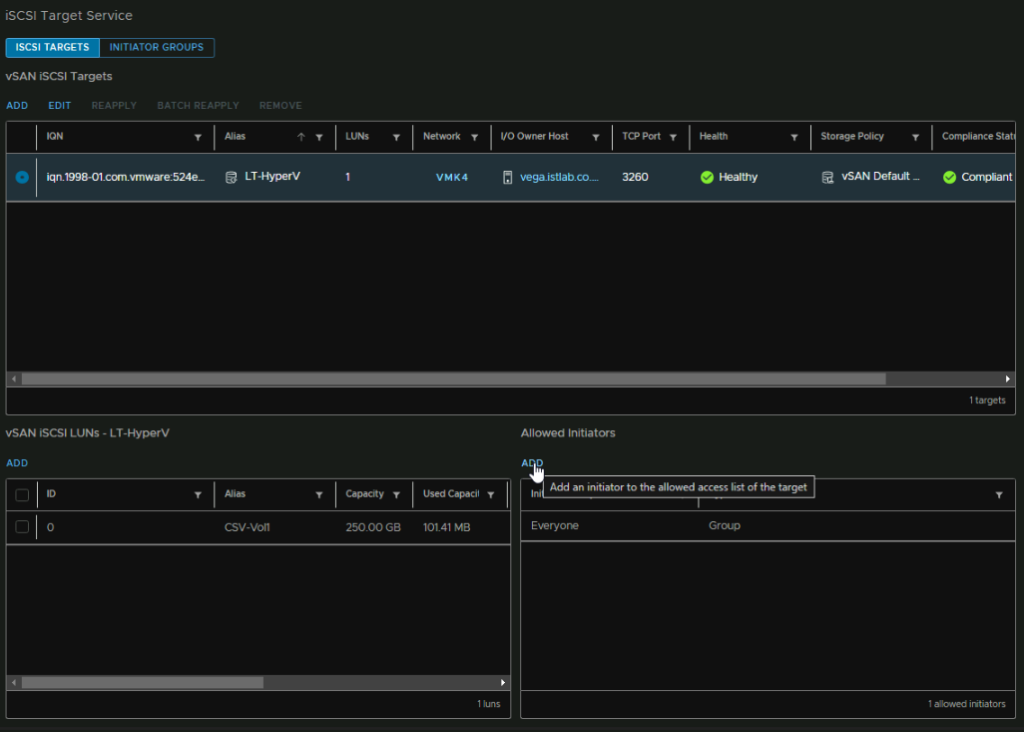

You may need to refresh your page for the iSCSI Targets section to crop up

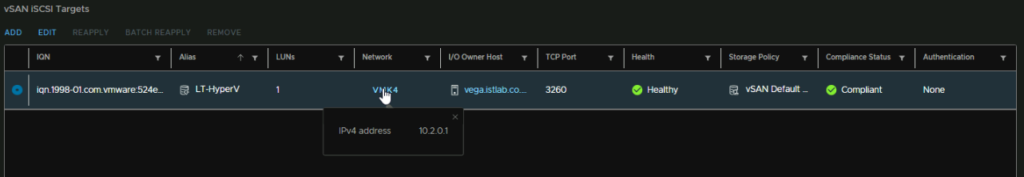

Click the cluster and head to Configure/vSAN/iSCSI Targets then click Add

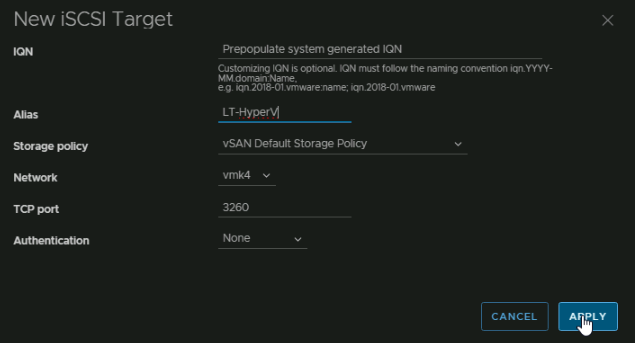

Leave the IQN empty, this will auto populate, give it an Alias so we know what the LUN is for in the vSAN configuration section, select your policy, I am just using the default, but you can change the policy to change the amount of space/redundancy

Make sure the network is using the vmk we set this up with, which is vmk4 on all hosts for iSCSI, the port is the stock 3260 and you can set authentication but this is normally ignored anyway, so I am leaving it blank, then click Apply

Now we have our target, which remote hosts can connect to

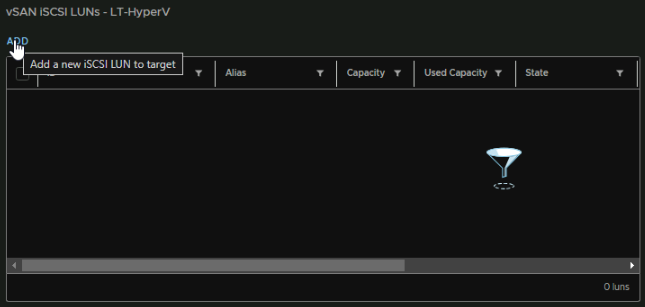

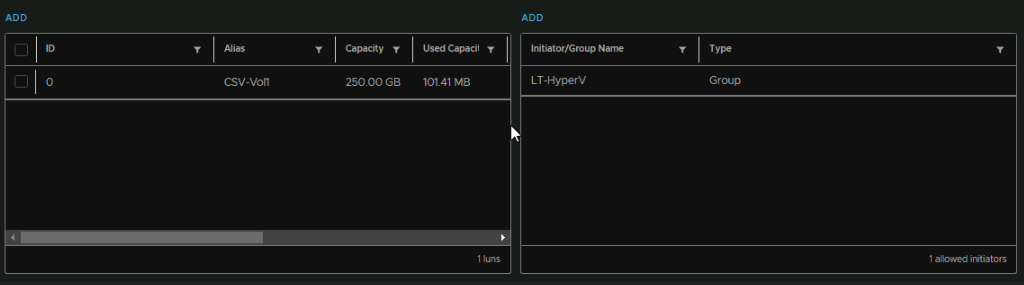

Towards the bottom we can add a LUN for this selected target by clicking Add

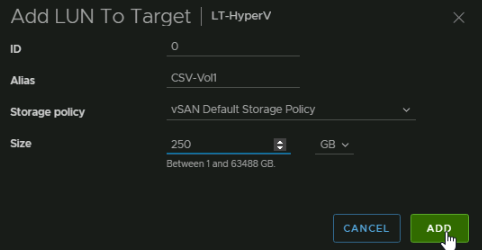

Leave the ID at the default value, give it an alias so we know what volume this is, select the storage policy, and add a size, then click Add

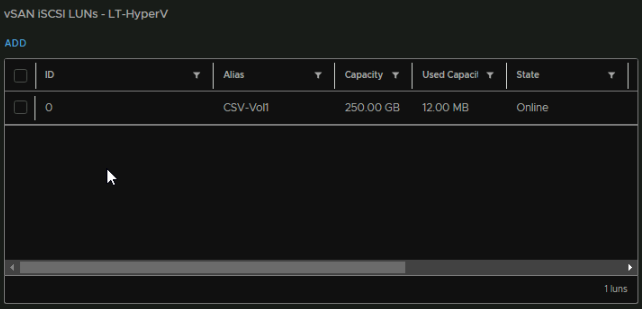

Here we can see the LUN and its used capacity

Now we have our LUN, we need to connect our client, this varies depending on the OS

I will be testing a Windows VM for iSCSI storage for a Hyper-V lab using CSV storage

For nested VMs you may need your port group to have these Security settings else the VM will not communicate properly, but I would try without this first in a production environment as it should work

For our Windows machine, let’s test iSCSI, installing and configuring the server for iSCSI is out of scope for this and will be covered in the app you want to deploy itself

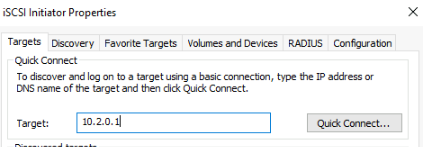

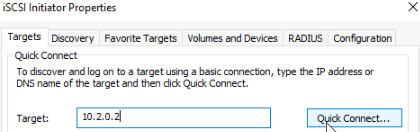

The VMK IP of the host that is the I/O owner is 10.2.0.1, so we add that in Windows as a target and click Quick Connect

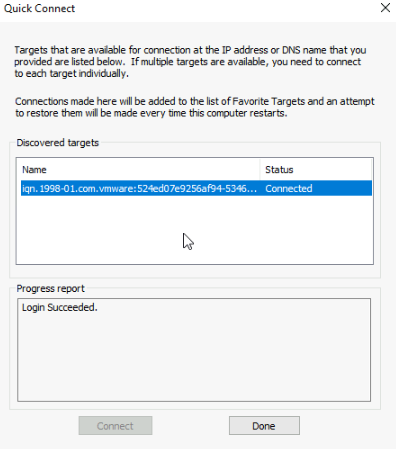

We can see the vSAN target shows up and logs in

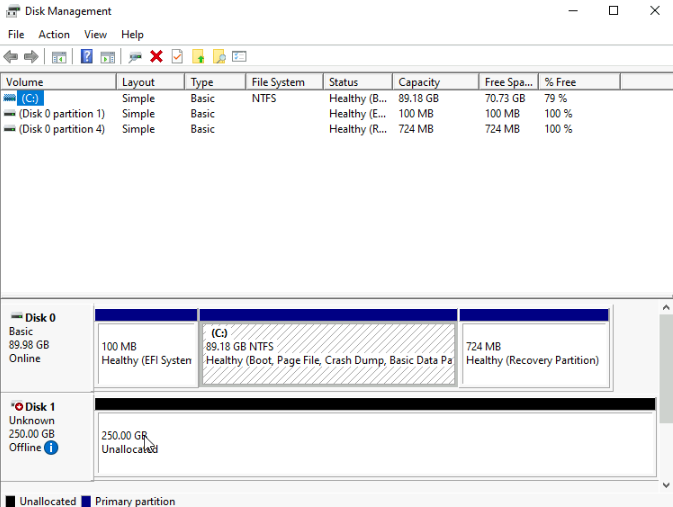

And in Disk Management we can see our 250GB disk showing up ok

Now, this is great, but what if we want our host doing the I/O to go into maintenance mode, the connection is only for that IP

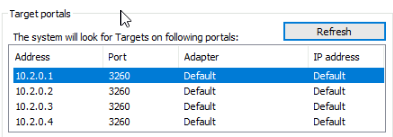

We can add the VMK IPs of the other hosts with Quick Connect too

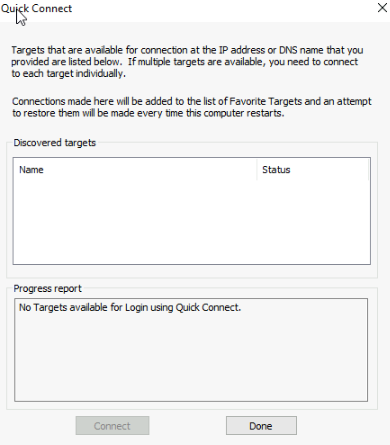

It will say there are no targets, as this host isn’t the I/O owner, but that’s fine, it’s meant as another path if the host is in maintenance mode

Now all the servers are in the Target Pool, so the client should be able to get its iSCSI disk from any host in our cluster

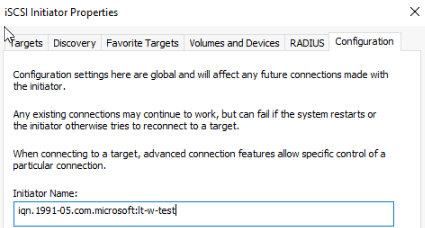

Now, by default, all servers can connect to a LUN. For Hyper-V in this example, I only want my cluster nodes connecting to this LUN, not someone else’s cluster, so in Windows, under iSCSI and then Configuration, we can copy the Initiator Name

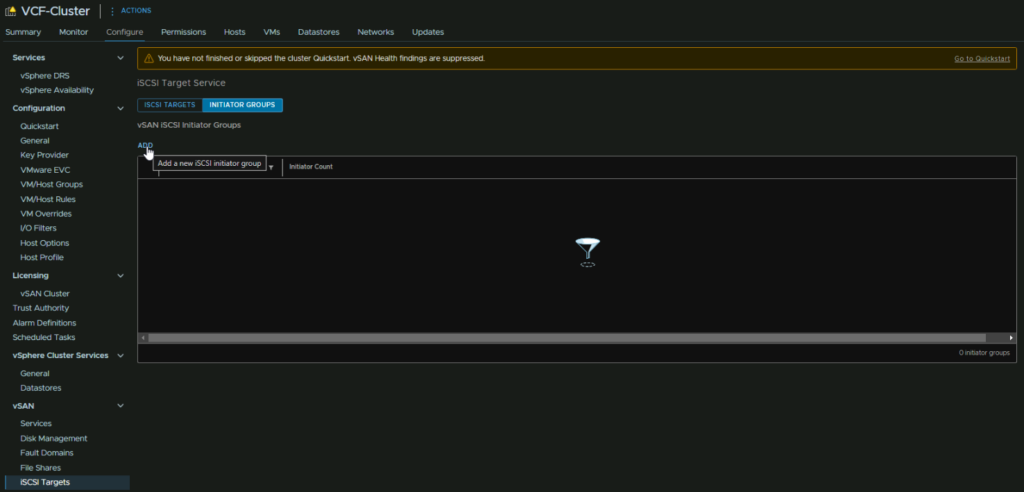

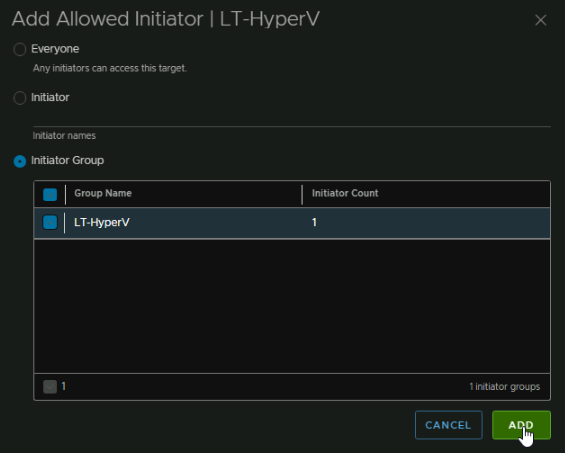

Back in vSphere, click the Cluster and head to Configure/vSAN/iSCSI Targets/Initiator Groups and click Add

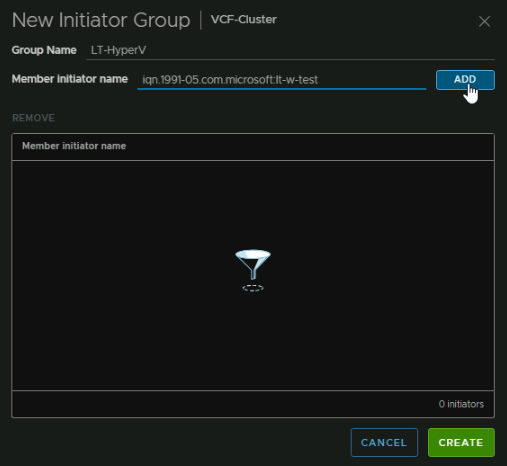

Give the group a name, and add the initiators of all hosts you want to access the target we just set up, and all its LUNs

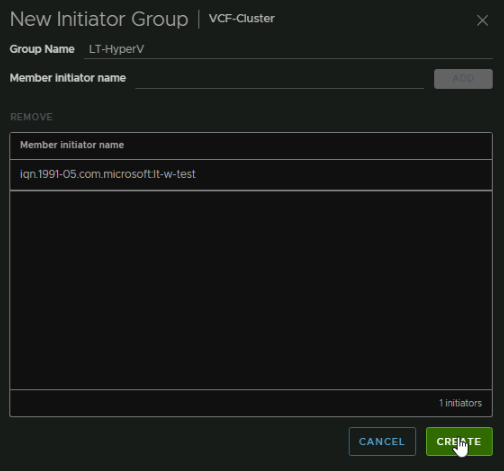

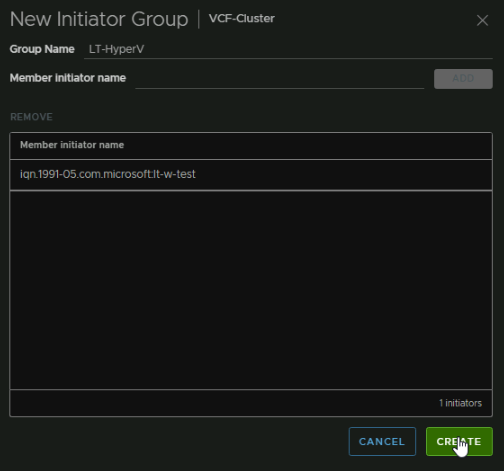

When you have all your hosts, I just have one for this demo, click Create

Back under iSCSI targets select the Target, then click Add under Allow Initiators, you will see the default group allowing everyone

Select the Initiator Group radio button and click your new group, then click Add

Now we can see the Everyone group is replaced by this group, so only my Hyper-V servers can access this

I tested a sequential write to the test VM, then put the host managing the I/O into maintenance mode to force a failover of the I/O owner. This did extend the time for the host to enter maintenance mode, which isn’t an issue, but there was a 15-30 second drop where the file copy went to 0 while Windows reconnected iSCSI via the other hosts we added to the list. That doesn’t seem too bad, though I don’t have the same test with a PowerStore to compare against a controller failover

2.2.9 – Data Protection Services

This is only supported on the vSAN ESA architecture due to the entire design overhaul and the way data is stored, so it doesn’t work with the OSA architecture

2.2.9.1 – Data Protection Services

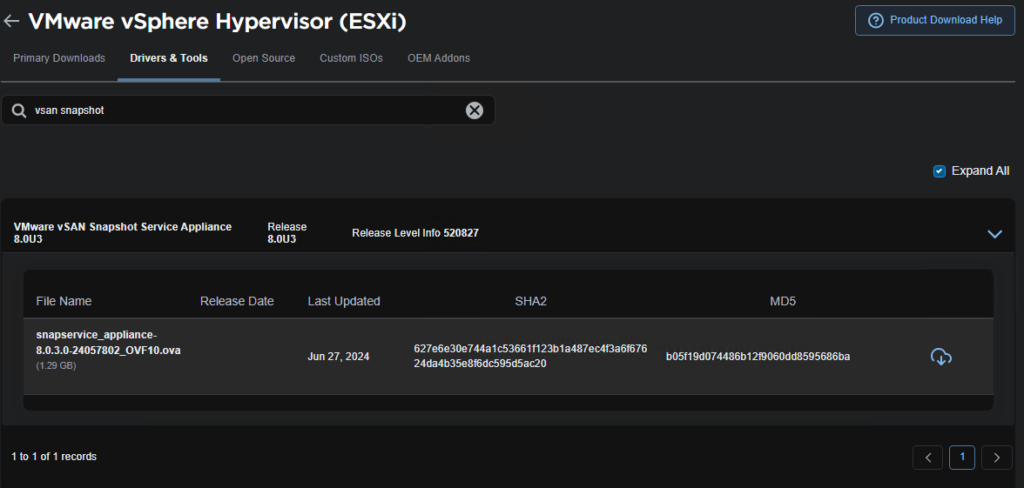

To get this set up we need to download the appliance, we can get that from VMware vSphere on the Broadcom downloads page

Select the version you are entitled to

Click View Group on ESXi

Then under Drivers & Tools, we can search for “vSAN Snapshot” and see the appliance to download

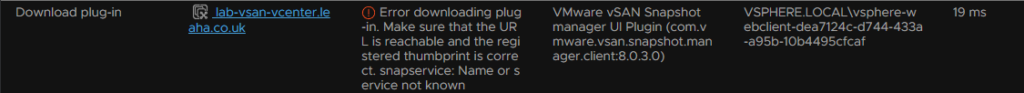

I did get a few issues with my vCenter causing the plugin to fail

This was a DNS issue, you need the search path properly added to vCenter

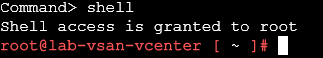

You can check this by accessing the vCenter with SSH and using the shell command to get to the bash shell

Then run

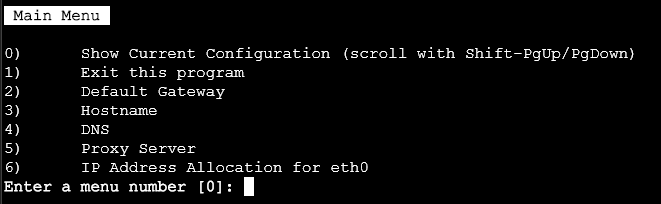

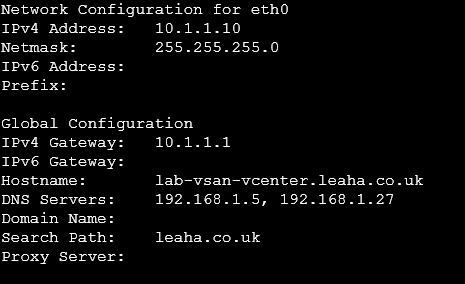

/opt/vmware/share/vami/vami_config_net

Press 0 to show the current config

Ensure the search path is populated

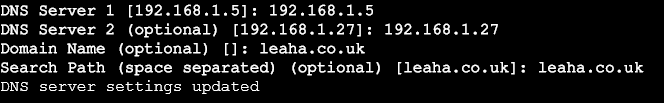

If it’s not, use option 4 to set DNS, and set the correct fields, don’t worry if DNS server 1 is 127.0.0.1, just use your normal DNS servers like so

To deploy it I will use William Lam’s script, as it consistently works, and using the UI is a little difficult with exporting the vCenter certificate. Even when I could get it deployed that way, the plugin kept giving me a ‘No Healthy Upstream’ in the menu within vCenter, so I would recommend using this script

You can find his article and the script link here

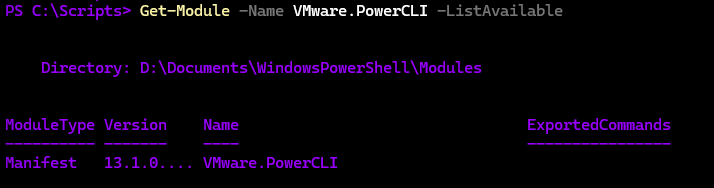

First you need PowerCLI for this

It can be installed with

Install-Module -Name VMware.PowerCLI

You can then check it’s installed with

Get-Module -Name VMware.PowerCLI -ListAvailable

First, opt out of the CEIP with

Set-PowerCLIConfiguration -Scope User -ParticipateInCEIP $false

Then set PowerCLI to ignore untrusted certificates with

Set-PowerCLIConfiguration -InvalidCertificateAction Ignore -Confirm:$false

Then connect to your vCenter with

Connect-VIServer -Server <vCenter-FQDN> -User <admin-Username> -Password <password>

An example with my vCenter is

Connect-VIServer -Server lab-vsan-vcenter.leaha.co.uk -User [email protected] -Password <password>

Then allow running of any script with

Set-ExecutionPolicy Unrestricted

We’ll need to open and edit the script to match our environment

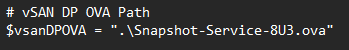

Add the OVA path at the top, I moved it into the same folder for ease, as I had issues pulling it directly from my SMB share

We need to set the following

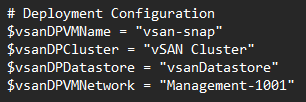

Change the vsanDPVMName to whatever you want the VM name to be in vSphere

Change the vsanDPCluster to the name of your cluster in vSphere

Change the vsanDPDatastore to match the vSAN datastore name you have

Change the vsanDPNetwork name to the port group you want to deploy it to

Then we need to edit the OVF properties

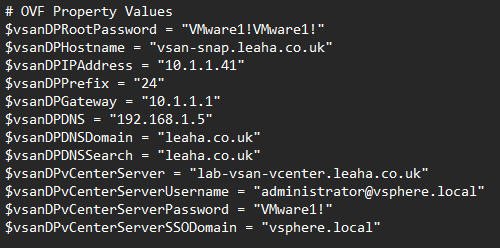

Set the root password to the root password for the appliance, it needs to be 15 characters with capitals, numbers and a symbol

The hostname should be the fqdn of the appliance in DNS

Then the IP address of the appliance

The subnet prefix

The gateway

DNS Servers, comma separated

DNS domain

DNS search

vCenter fqdn

vCenter administrator username

Administrator password

SSO domain
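As a failed deployment over a weak password is annoying to debug, a quick pre-check on the root password can help. This Python sketch tests the rule mentioned above, reading it as at least 15 characters with a capital, a number and a symbol. That reading, and treating any ASCII punctuation character as a symbol, are my assumptions, the appliance may be stricter

```python
import string


def check_root_password(password, min_length=15):
    """Return a list of problems, an empty list means the password passes."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if not any(c.isupper() for c in password):
        problems.append("no capital letter")
    if not any(c.isdigit() for c in password):
        problems.append("no number")
    if not any(c in string.punctuation for c in password):
        problems.append("no symbol")
    return problems


if __name__ == "__main__":
    # Example value only, do not reuse it
    print(check_root_password("Example-Passw0rd-Here"))
```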

Then do not edit any further, save the script and browse in PowerShell to the directory it’s located in

I have mine under C:\Scripts so I ran

cd C:\Scripts

Then execute the script with

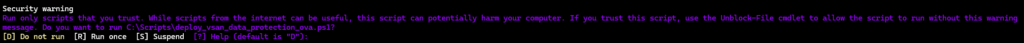

./deploy_vsan_data_protection_ova.ps1

And press R if this prompt comes up

Once that’s done, the appliance should deploy and automatically configure itself, the script will also have this output

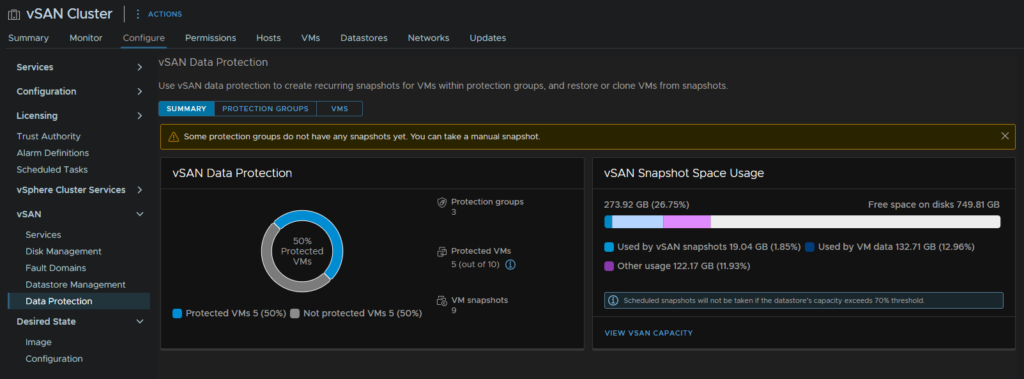

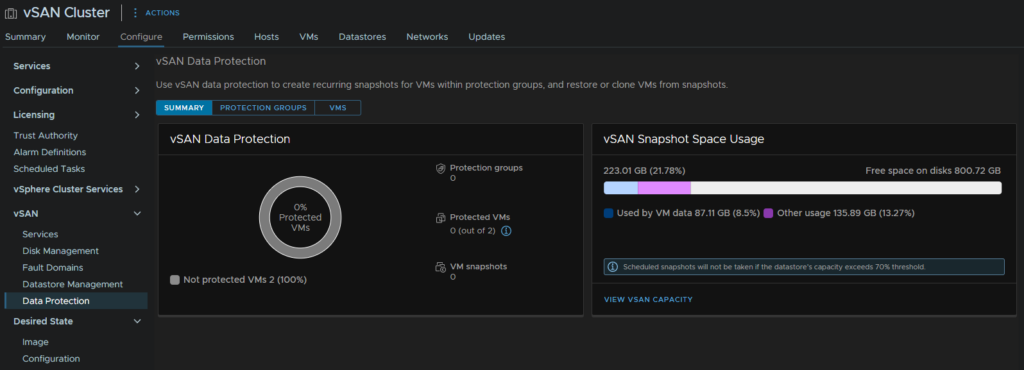

Click your cluster and head to Configure/vSAN/Data Protection and we can see the plugin is ready to use

2.2.9.2 – Creating Protection Groups

Now we have the service deployed, we can use it to take periodic snapshots on VMs to restore to, the idea here is that this is meant to be like volume level snapshots on a traditional SAN, except better as it’s per VM, meaning you don’t have to restore the entire volume

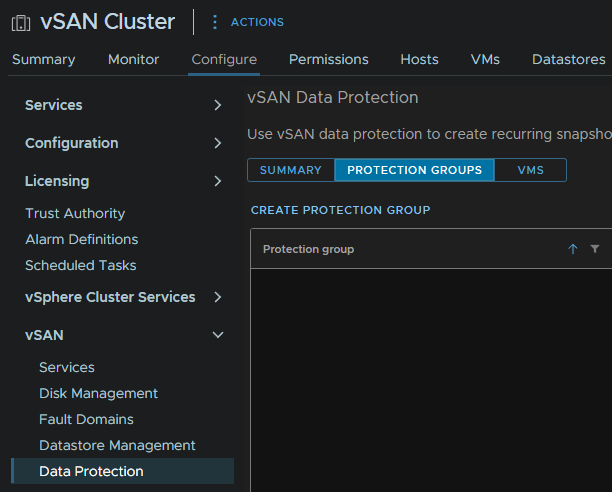

Click your cluster, and head to Configure/vSAN/Data Protection/Protection Groups and click Create Protection Group

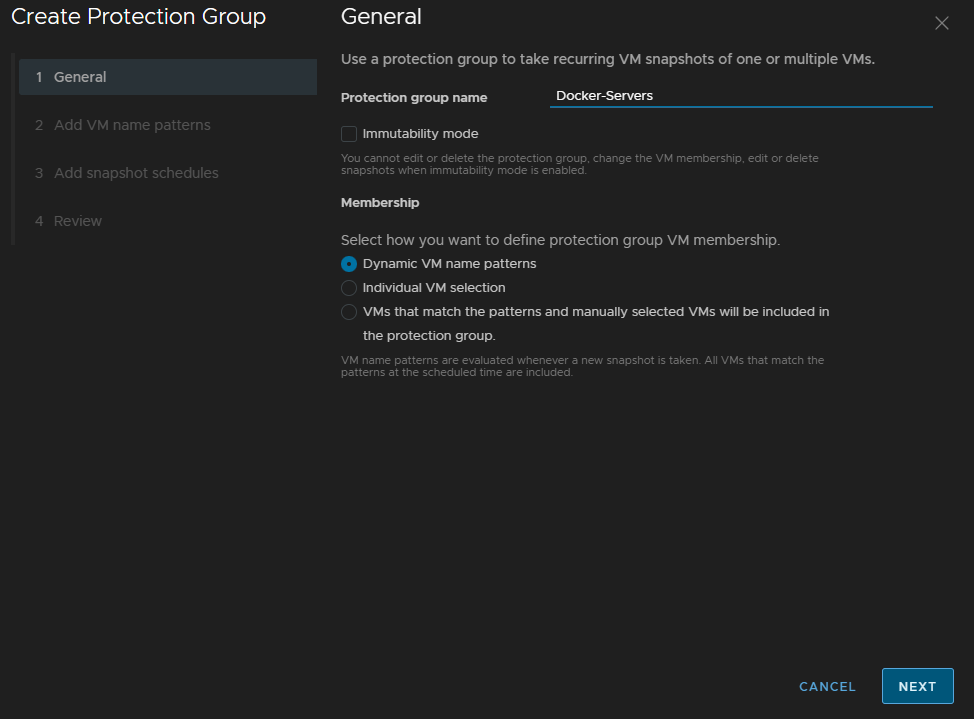

Let’s create a group for docker servers

Give the group a name, you can enable immutability, however you need to be very careful not to overfill the vSAN capacity, over 70% and snapshots will start failing

You can’t edit or delete the group with immutability on, this includes adding VMs. If you use a naming pattern it will still add them, but you can’t remove them other than by deleting the VM from disk, and there is no way to remove the group, so once you set it, it’s there to stay. Maybe only use this for your absolute most critical VMs, but you should have immutable backups with something like Veeam anyway, so it’s not a must

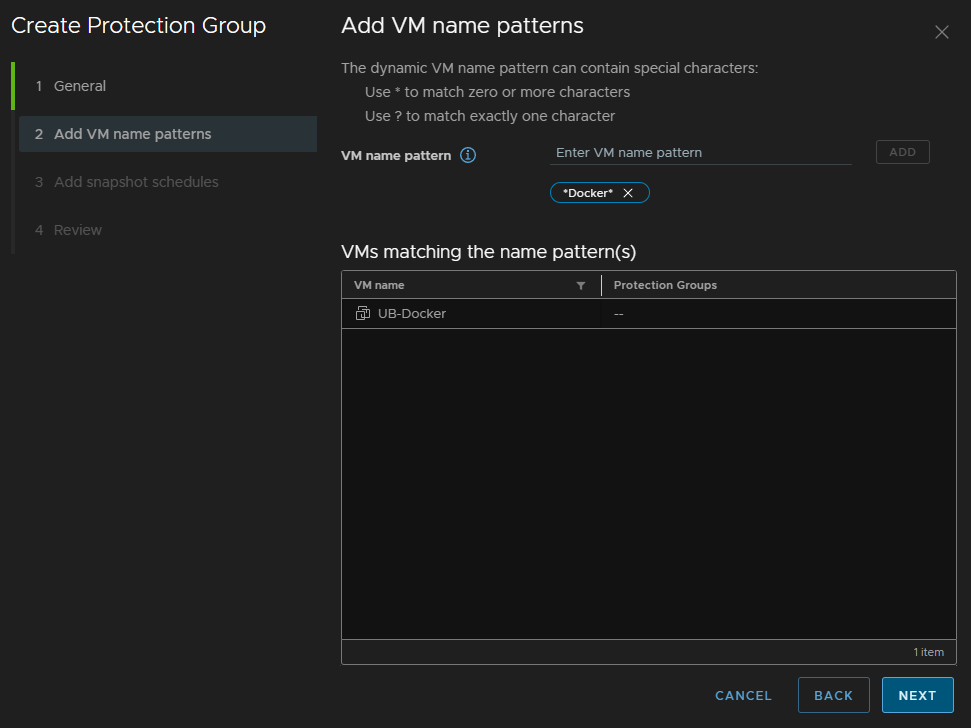

And for this, we will use Dynamic Naming, then click Next

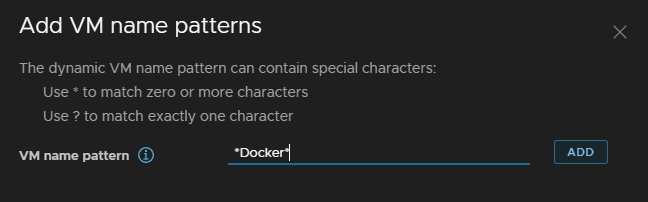

Add your naming scheme, this will include anything with Docker in the name, and click Add

That has then populated ok, click Next
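If you want a rough idea of which VMs a pattern would pick up before creating the group, the Python sketch below does a simple wildcard match over a list of names. It assumes the naming scheme behaves like a plain wildcard pattern and is case sensitive, I haven’t confirmed the exact matching rules vSAN uses, and the VM names are made up

```python
from fnmatch import fnmatchcase


def matching_vms(vm_names, pattern="*Docker*"):
    """Return the names that match the wildcard pattern, case sensitive."""
    return [name for name in vm_names if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    # Hypothetical inventory
    inventory = ["Docker-01", "lab-Docker-02", "jumpbox", "docker-03"]
    print(matching_vms(inventory))
```

It is worth checking the preview in the wizard against a list like this, as a VM with slightly different casing in its name could be missed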

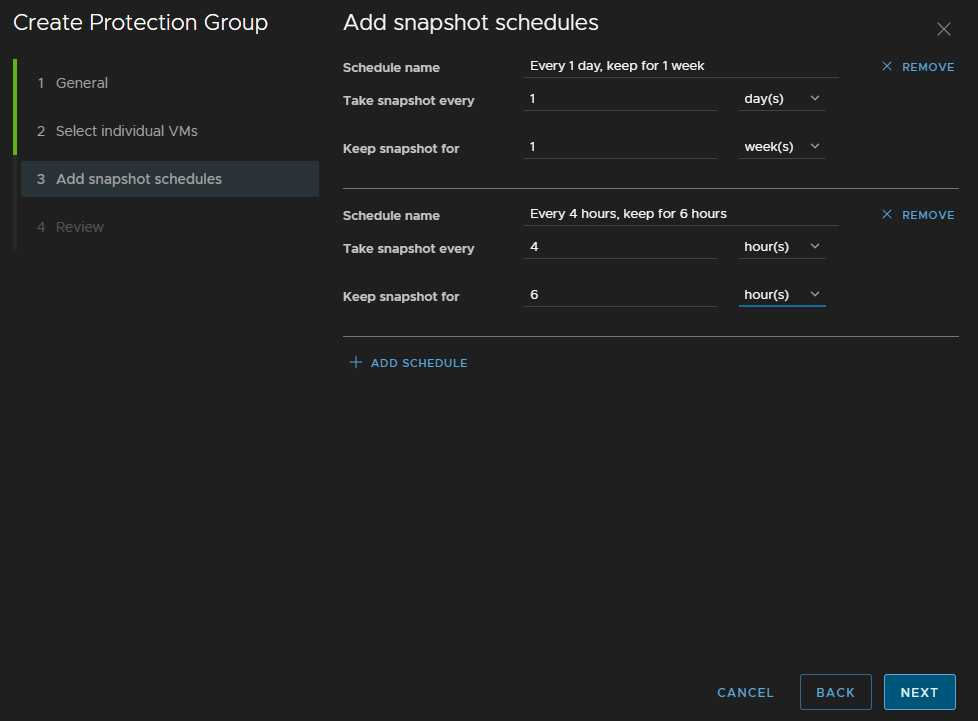

Then we can add our policies, the default I like to go for on a SAN has two policies

- 1 daily snapshot, keep for 7 days

- 1 every 4 hours keeping for 6 hours

We can create the same rule here by also clicking Add Schedule for the second, then click Next

And click Finish
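Since snapshots take up vSAN capacity, it helps to know how many each VM can end up holding. This Python sketch works that out for the two schedules above, assuming a snapshot is removed as soon as its retention period is up, which is my simplification of how the cleanup runs

```python
import math

# The two schedules described above
SCHEDULES = [
    {"name": "daily", "interval_hours": 24, "retention_hours": 7 * 24},
    {"name": "4-hourly", "interval_hours": 4, "retention_hours": 6},
]


def max_retained(interval_hours, retention_hours):
    """Most snapshots that can exist at once for one schedule."""
    return math.ceil(retention_hours / interval_hours)


def max_snapshots_per_vm(schedules=SCHEDULES):
    """Worst case snapshot count for one VM across all schedules."""
    return sum(
        max_retained(s["interval_hours"], s["retention_hours"]) for s in schedules
    )


if __name__ == "__main__":
    for s in SCHEDULES:
        print(s["name"], max_retained(s["interval_hours"], s["retention_hours"]))
    print("per VM:", max_snapshots_per_vm())
```

Multiply the per VM figure by the number of VMs the naming scheme matches to get a feel for how many snapshots the group will be carrying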

If you deploy new VMs after the group has been created, and it matches a naming scheme in a protection group, it will be added on the next snapshot run automatically which is a huge benefit

2.2.9.3 – Managing Snapshots

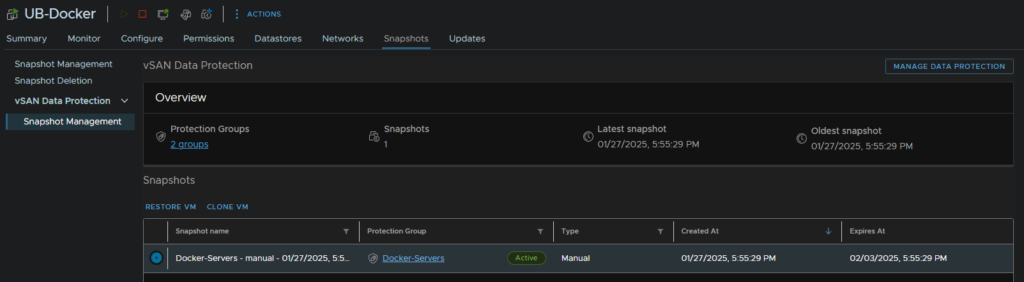

When snapshots start being made on your schedule, they appear on their own tab on the VM under Snapshots/vSAN Data Protection/Snapshot Management

From here you can see all your snapshots, restore them, or clone them to a new VM if you need

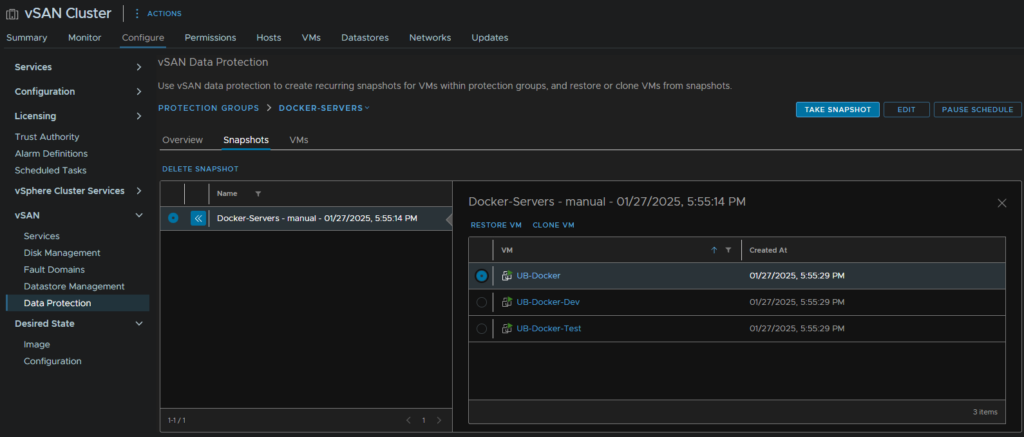

We can also manage this from the cluster then Configure/vSAN/Data Protection/Protection groups and selecting your group

Here you can remove the snapshot for all VMs, click the VM and have the same restore/clone options

You can also manually take a snapshot, helpful for patching rounds, edit the group, if it’s not immutable, or pause the schedule

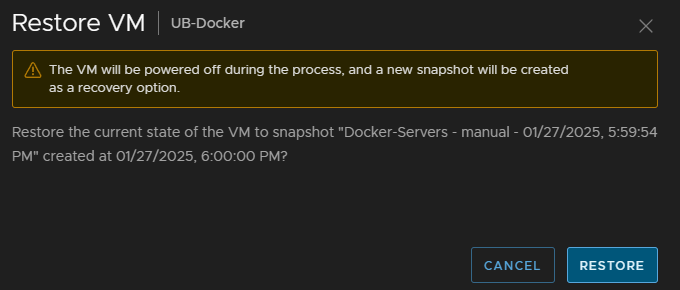

If you want to restore a VM, as maybe something went wrong, you can click Restore, then Restore again on this pop up

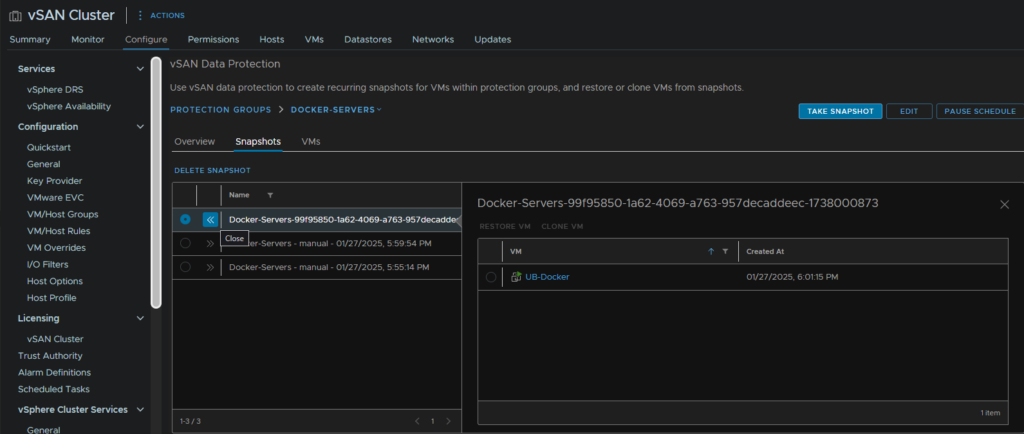

This will take a new snapshot, then revert to the old one, the key benefit here is that with the new snapshot, you can go back to the original state before you started restoring anything

You will need to ensure the system snapshot has been created, the group will show a new snapshot only applied to that VM, the VM will be powered off, so once this is taken, you will need to manually power the VM back on

And when I test on my server, I can see it’s reverted and a test file has gone

I can revert the GUID style snapshot above to revert to before I started editing things, though this snapshots the VM and powers it off again, so it does get a little messy, but I can see my other test file is now back

When you have finished reverting VMs and are sure you don’t need the other snapshots created as part of a restore, you’ll want to select them and click Delete Snapshot to keep things tidy

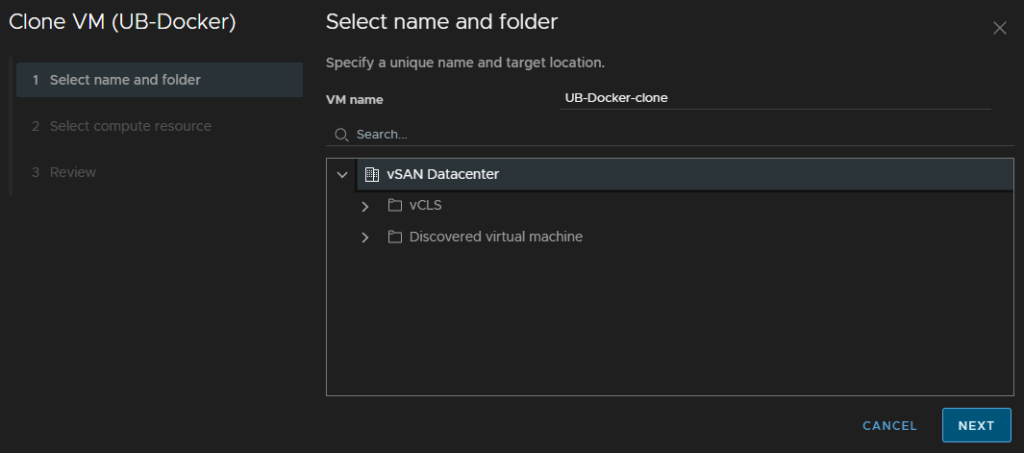

If we go down the Clone route, we can create a clone VM, this may be helpful for testing on a problematic VM where you want to test on a copy, should the worst happen

Enter a VM name, I left mine as default, then select a folder and click Next

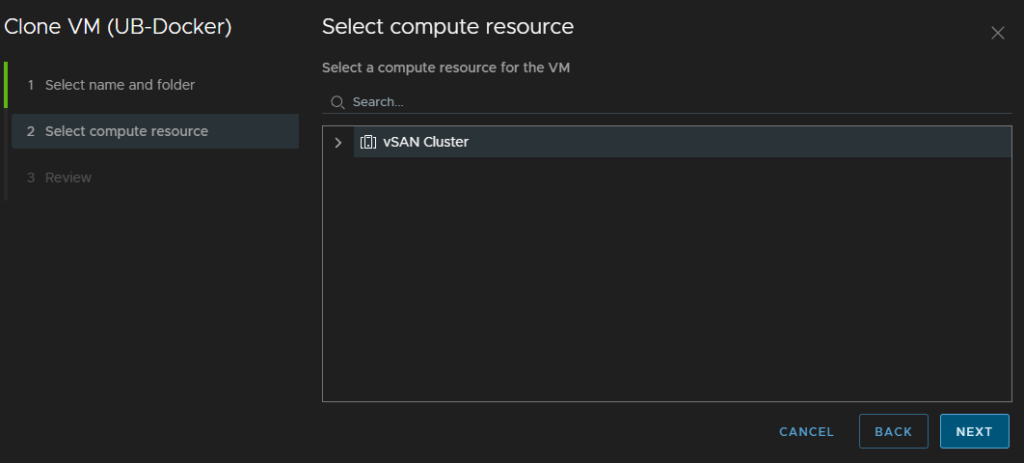

Select the cluster for compute and click Next

Then click Clone

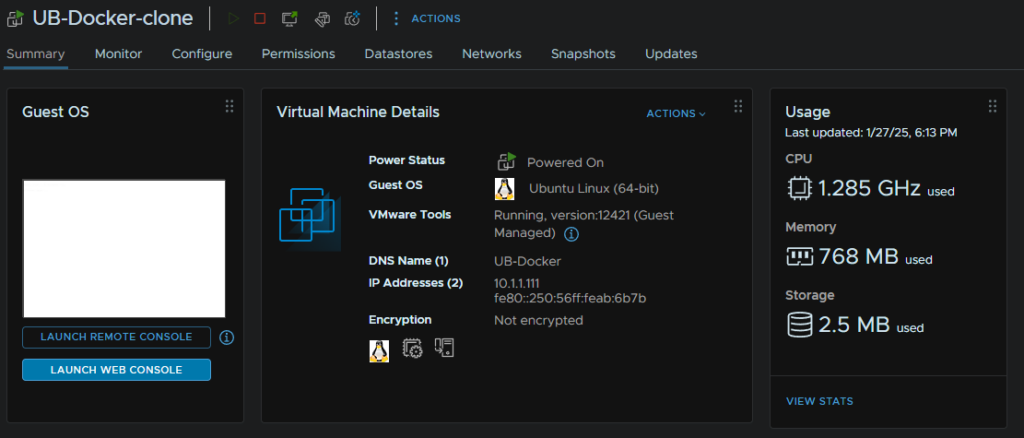

Once it’s cloned, we can shut down the original machine and power the clone on, once it’s up, we can access it like the original but at the point in time the snapshot was taken

And I can see in the guest via SSH, my test files are gone as this clone was from a snapshot before the files were created

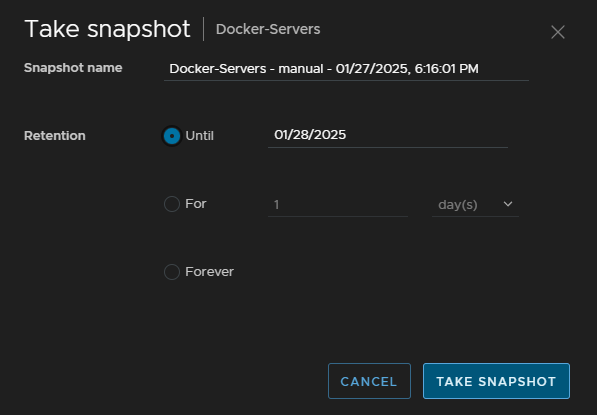

For the option on manually taking a snapshot from the protection group menu we can add a name, and specify when this snapshot should be automatically deleted

As we use this more, the initial dashboard from the cluster under Configure/vSAN/Data protection will update with how many of our VMs are protected, and the storage space vSAN and the snapshots are using